Jaekyum Kim

MGTANet: Encoding Sequential LiDAR Points Using Long Short-Term Motion-Guided Temporal Attention for 3D Object Detection

Dec 21, 2022

Abstract: Most scanning LiDAR sensors generate a sequence of point clouds in real-time. While conventional 3D object detectors use a set of unordered LiDAR points acquired over a fixed time interval, recent studies have revealed that substantial performance improvement can be achieved by exploiting the spatio-temporal context present in a sequence of LiDAR point sets. In this paper, we propose a novel 3D object detection architecture, which can encode LiDAR point cloud sequences acquired by multiple successive scans. The encoding process of the point cloud sequence is performed on two different time scales. We first design a short-term motion-aware voxel encoding that captures the short-term temporal changes of point clouds driven by the motion of objects in each voxel. We also propose long-term motion-guided bird's eye view (BEV) feature enhancement that adaptively aligns and aggregates the BEV feature maps obtained by the short-term voxel encoding by utilizing the dynamic motion context inferred from the sequence of the feature maps. The experiments conducted on the public nuScenes benchmark demonstrate that the proposed 3D object detector offers significant improvements in performance compared to the baseline methods and that it sets a state-of-the-art performance for certain 3D object detection categories. Code is available at https://github.com/HYjhkoh/MGTANet.git

Joint 3D Object Detection and Tracking Using Spatio-Temporal Representation of Camera Image and LiDAR Point Clouds

Dec 15, 2021

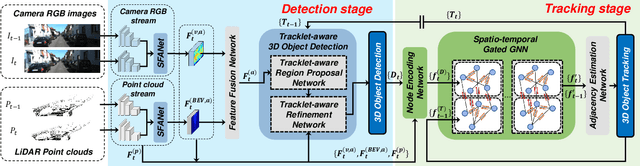

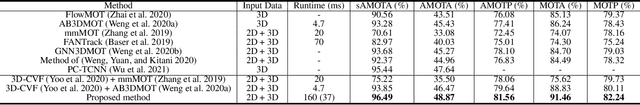

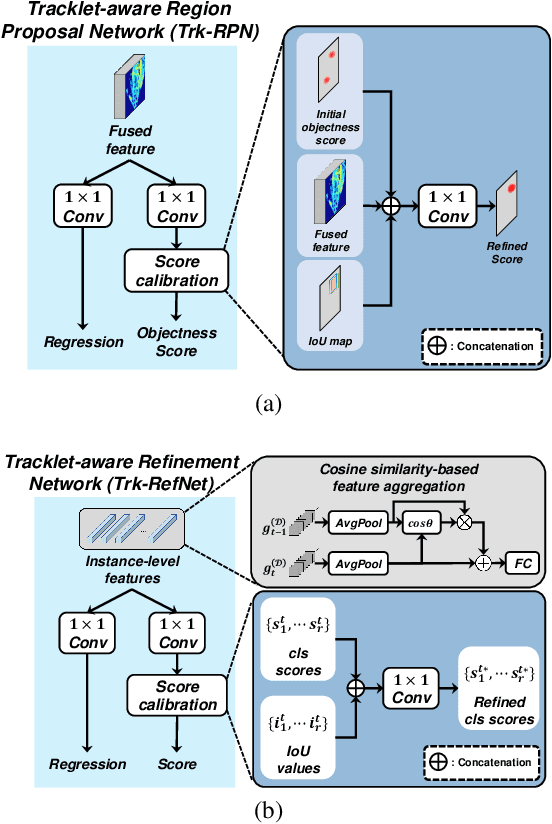

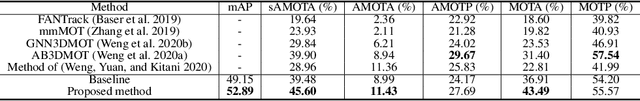

Abstract: In this paper, we propose a new joint object detection and tracking (JoDT) framework for 3D object detection and tracking based on camera and LiDAR sensors. The proposed method, referred to as 3D DetecTrack, enables the detector and tracker to cooperate to generate a spatio-temporal representation of the camera and LiDAR data, with which 3D object detection and tracking are then performed. The detector constructs the spatio-temporal features via the weighted temporal aggregation of the spatial features obtained by the camera and LiDAR fusion. Then, the detector reconfigures the initial detection results using information from the tracklets maintained up to the previous time step. Based on the spatio-temporal features generated by the detector, the tracker associates the detected objects with previously tracked objects using a graph neural network (GNN). We devise a fully-connected GNN facilitated by a combination of rule-based edge pruning and attention-based edge gating, which exploits both spatial and temporal object contexts to improve tracking performance. The experiments conducted on both KITTI and nuScenes benchmarks demonstrate that the proposed 3D DetecTrack achieves significant improvements in both detection and tracking performances over baseline methods and achieves state-of-the-art performance among existing methods through collaboration between the detector and tracker.

Joint Representation of Temporal Image Sequences and Object Motion for Video Object Detection

Nov 20, 2020

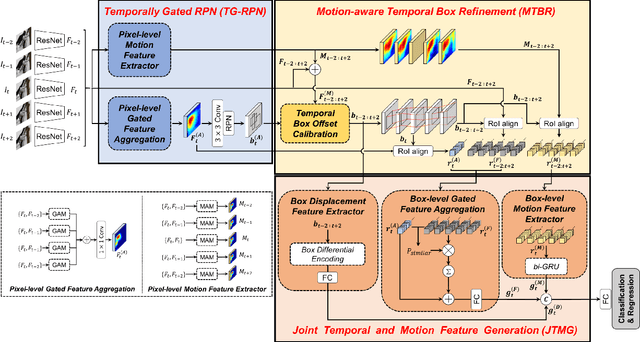

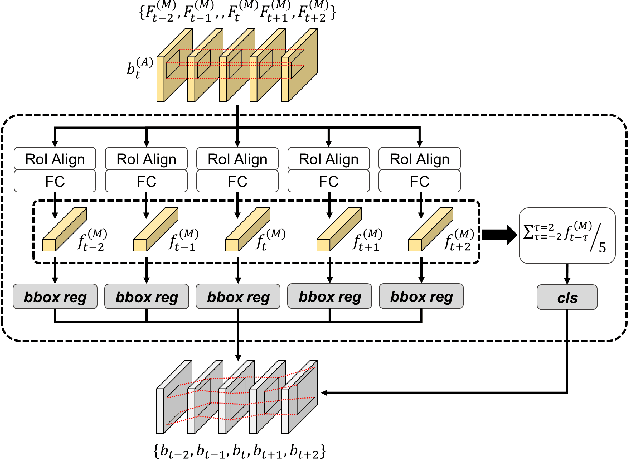

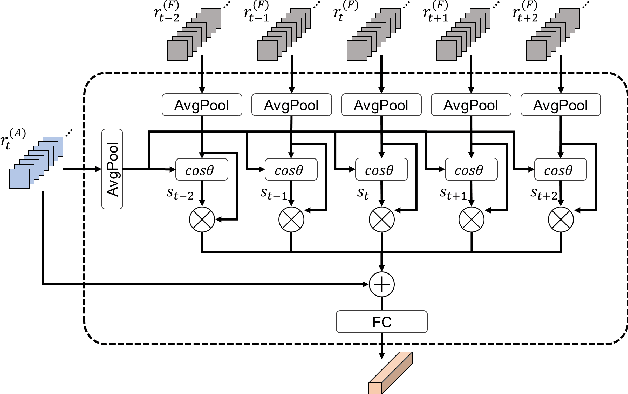

Abstract: In this paper, we propose a new video object detector (VoD) method referred to as temporal feature aggregation and motion-aware VoD (TM-VoD), which produces a joint representation of temporal image sequences and object motion. The proposed TM-VoD aggregates visual feature maps extracted by convolutional neural networks by applying temporal attention gating and spatial feature alignment. This temporal feature aggregation is performed in two stages in a hierarchical fashion. In the first stage, the visual feature maps are fused at the pixel level via a gated attention model. In the second stage, the proposed method aggregates the features after aligning the object features using temporal box offset calibration and weights them according to the cosine similarity measure. The proposed TM-VoD also finds the representation of the motion of objects in two successive steps. The pixel-level motion features are first computed based on the incremental changes between the adjacent visual feature maps. Then, box-level motion features are obtained from both the region of interest (RoI)-aligned pixel-level motion features and the sequential changes of the box coordinates. Finally, all these features are concatenated to produce a joint representation of the objects for VoD. The experiments conducted on the ImageNet VID dataset demonstrate that the proposed method outperforms existing VoD methods and achieves a performance comparable to that of state-of-the-art VoDs.
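
The second-stage weighting by cosine similarity can be illustrated with a minimal sketch. This is not the TM-VoD implementation: the function names, the use of plain feature vectors instead of RoI feature maps, and the softmax normalization of the similarities are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as having no similarity
    return dot / (norm_a * norm_b)


def aggregate_by_similarity(reference, supports):
    """Fuse support features, weighting each by its similarity to the reference.

    Assumption: similarities are turned into weights with a softmax;
    the abstract only states that cosine similarity drives the weights.
    """
    sims = [cosine_similarity(reference, s) for s in supports]
    peak = max(sims)
    exps = [math.exp(s - peak) for s in sims]  # shifted for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    fused = [
        sum(w * s[i] for w, s in zip(weights, supports))
        for i in range(len(reference))
    ]
    return fused, weights


# Hypothetical features: the first support frame matches the reference exactly.
fused, weights = aggregate_by_similarity([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
```

In this toy example the support feature that points in the same direction as the reference receives the larger weight, so it dominates the fused feature.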

Robust Deep Multi-modal Learning Based on Gated Information Fusion Network

Nov 02, 2018

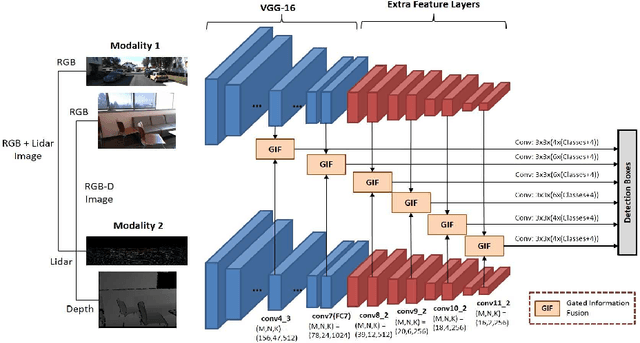

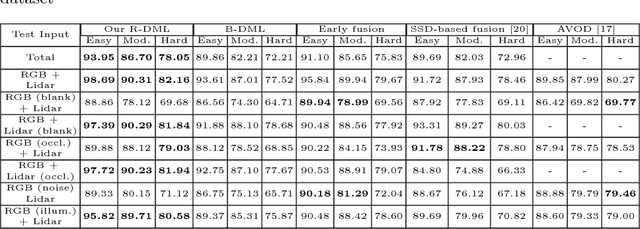

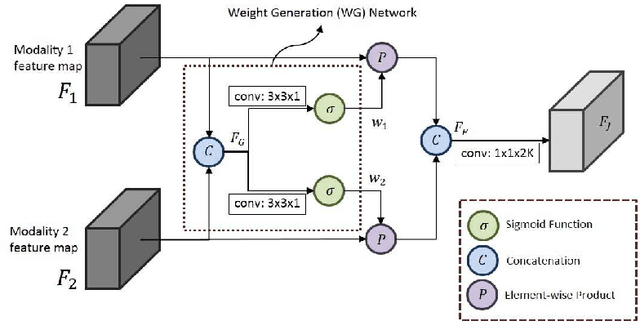

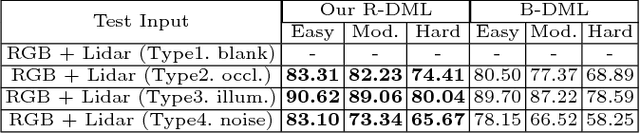

Abstract: The goal of multi-modal learning is to use complementary information on the relevant task provided by the multiple modalities to achieve reliable and robust performance. Recently, deep learning has led to significant improvements in multi-modal learning by allowing for information fusion at the intermediate feature levels. This paper addresses the problem of designing a robust deep multi-modal learning architecture in the presence of imperfect modalities. We introduce a deep fusion architecture for object detection which processes each modality using a separate convolutional neural network (CNN) and constructs the joint feature map by combining the intermediate features from the CNNs. To improve robustness to degraded modalities, we employ the gated information fusion (GIF) network, which weights the contribution from each modality according to the input feature maps to be fused. The weights are determined through convolutional layers followed by a sigmoid function and are trained along with the information fusion network in an end-to-end fashion. Our experiments show that the proposed GIF network offers additional architectural flexibility to achieve robust performance in handling some degraded modalities, and show a significant performance improvement based on the Single Shot Detector (SSD) on the KITTI dataset using the proposed fusion network and data augmentation schemes.
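
The gating idea, input-dependent weights passed through a sigmoid and applied to each modality, can be sketched as follows. This is a simplified illustration, not the GIF network itself: a per-element linear function with hypothetical scalar parameters stands in for the convolutional layers, and the fused output is assumed to be the sum of the gated features.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(feat_a, feat_b, gate_a, gate_b):
    """Fuse two feature vectors with input-dependent sigmoid gates.

    gate_a and gate_b are hypothetical (weight_on_a, weight_on_b, bias)
    triples standing in for the learned convolutional gating layers.
    """
    fused = []
    for a, b in zip(feat_a, feat_b):
        # Each gate looks at both modalities before weighting its own.
        w_a = sigmoid(gate_a[0] * a + gate_a[1] * b + gate_a[2])
        w_b = sigmoid(gate_b[0] * a + gate_b[1] * b + gate_b[2])
        fused.append(w_a * a + w_b * b)
    return fused


# Zero parameters give both modalities a weight of 0.5.
balanced = gated_fusion([2.0, 4.0], [0.0, 2.0], (0.0, 0.0, 0.0), (0.0, 0.0, 0.0))

# A strongly negative bias on the second gate suppresses that modality,
# mimicking how a degraded input could be down-weighted.
suppressed = gated_fusion([2.0, 4.0], [9.0, 9.0], (0.0, 0.0, 0.0), (0.0, 0.0, -50.0))
```

In a trained network the gate parameters would be learned end-to-end, so the suppression shown in the second call would emerge from the data instead of being set by hand.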

Probabilistic Vehicle Trajectory Prediction over Occupancy Grid Map via Recurrent Neural Network

Sep 01, 2017

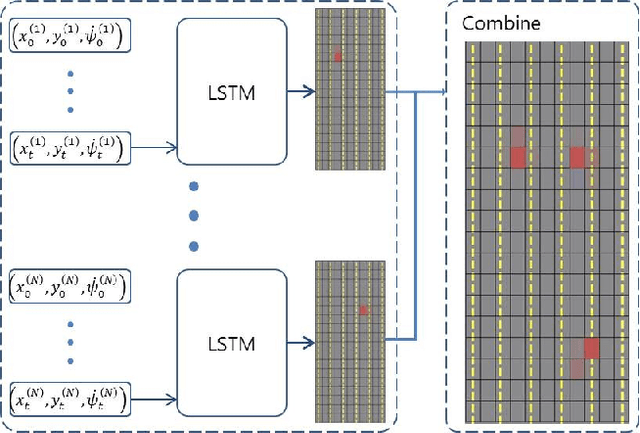

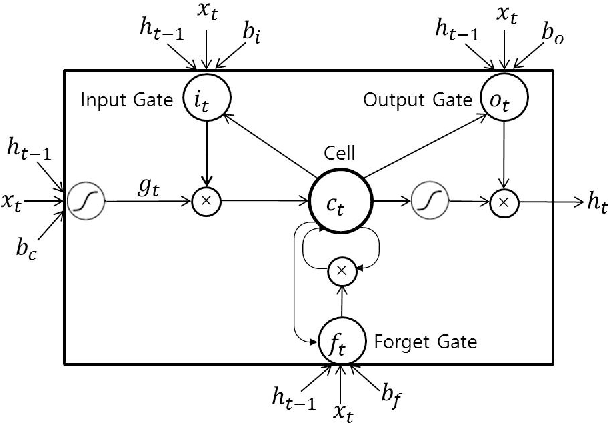

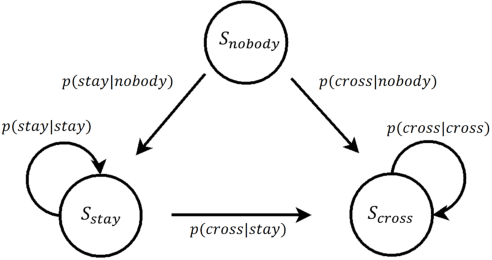

Abstract: In this paper, we propose an efficient vehicle trajectory prediction framework based on a recurrent neural network. The characteristics of a vehicle's trajectory differ from those of regular moving objects since the trajectory is affected by various latent factors including road structure, traffic rules, and the driver's intention. Previous state-of-the-art approaches use sophisticated vehicle behavior models describing these factors and derive complex trajectory prediction algorithms, which require a system designer to conduct intensive model optimization for practical use. Our approach is data-driven and simple to use in that it learns the complex behavior of vehicles from a massive amount of trajectory data through a deep neural network model. The proposed trajectory prediction method employs the recurrent neural network called long short-term memory (LSTM) to analyze the temporal behavior and predict the future coordinates of the surrounding vehicles. The proposed scheme feeds the sequence of vehicles' coordinates obtained from sensor measurements to the LSTM and produces probabilistic information on the future location of the vehicles over an occupancy grid map. The experiments conducted using data collected from highway driving show that the proposed method can produce a reasonably good estimate of the future trajectory.
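
To make the idea of probabilistic output over an occupancy grid concrete, the sketch below shows only the grid side of such a pipeline; the LSTM itself is omitted. The grid layout, the function names, and the use of a softmax to turn per-cell scores into probabilities are assumptions for illustration, not details taken from the paper.

```python
import math


def to_cell(x, y, x_min, y_min, cell_size, n_cols):
    """Map a coordinate to a flat row-major cell index (hypothetical layout).

    No bounds checking is done; callers are assumed to pass in-grid points.
    """
    col = int((x - x_min) // cell_size)
    row = int((y - y_min) // cell_size)
    return row * n_cols + col


def softmax(scores):
    """Turn per-cell scores into a probability distribution over cells."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# A 2 x 4 grid of 1 m cells; the scores stand in for a network's output.
cell = to_cell(2.5, 1.2, x_min=0.0, y_min=0.0, cell_size=1.0, n_cols=4)
scores = [0.0] * 8
scores[cell] = 3.0
probs = softmax(scores)
most_likely = max(range(len(probs)), key=probs.__getitem__)
```

Here the cell containing the point (2.5, 1.2) gets the highest score, so it is also the most likely cell after normalization.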

Autonomous Braking System via Deep Reinforcement Learning

Apr 24, 2017

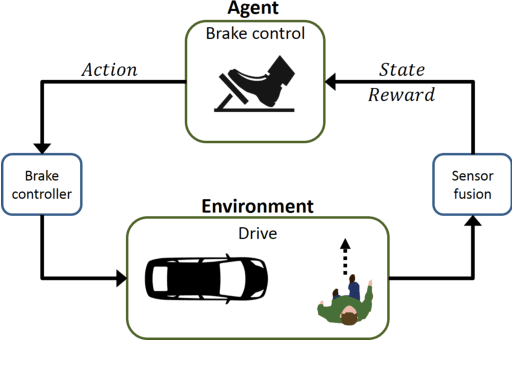

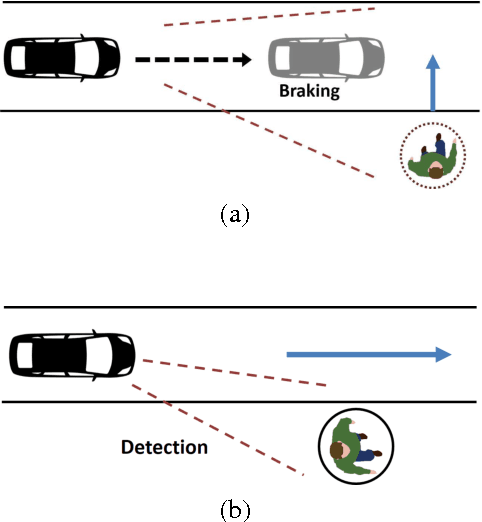

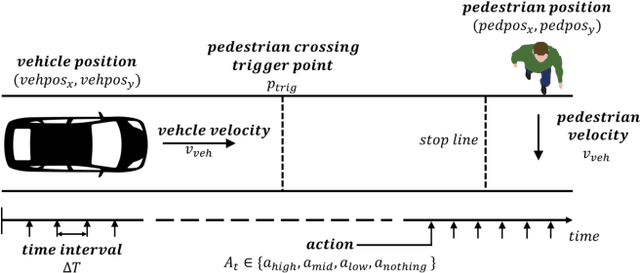

Abstract: In this paper, we propose a new autonomous braking system based on deep reinforcement learning. The proposed autonomous braking system automatically decides whether to apply the brake at each time step when confronting the risk of collision, using the information on the obstacle obtained by the sensors. The problem of designing brake control is formulated as searching for the optimal policy in a Markov decision process (MDP) model where the state is given by the relative position of the obstacle and the vehicle's speed, and the action space is defined as whether the brake is applied or not. The policy used for brake control is learned through computer simulations using the deep reinforcement learning method called deep Q-network (DQN). In order to derive a desirable braking policy, we propose a reward function which balances the damage imposed on the obstacle in case of an accident and the reward achieved when the vehicle gets out of risk as soon as possible. The DQN is trained for the scenario where a vehicle encounters a pedestrian crossing an urban road. Experiments show that the control agent exhibits desirable control behavior and avoids collision without any mistake in various uncertain environments.
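
The two-action formulation (brake or do not brake) can be illustrated with the standard one-step Q-learning target that DQN training is built on. This is a generic sketch, not the paper's system: the action encoding, the reward numbers, and the scalar update in place of a neural network gradient step are assumptions for illustration.

```python
NO_BRAKE, BRAKE = 0, 1  # hypothetical encoding of the two actions


def td_target(reward, next_q_values, done, gamma=0.99):
    """One-step target: r + gamma * max_a' Q(s', a'), or r at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def greedy_action(q_values):
    """Pick the action with the highest estimated value."""
    return max(range(len(q_values)), key=q_values.__getitem__)


def q_update(q_value, target, lr=0.1):
    """Move the current estimate a small step toward the target."""
    return q_value + lr * (target - q_value)


# Hypothetical numbers: a mid-episode step and a collision that ends the episode.
step_target = td_target(1.0, [0.5, 2.0], done=False, gamma=0.9)
crash_target = td_target(-10.0, [0.5, 2.0], done=True)
action = greedy_action([0.5, 2.0])
updated = q_update(0.0, step_target, lr=0.5)
```

In a DQN the table lookup and scalar update are replaced by a network that maps the state (relative obstacle position and vehicle speed) to the two action values, trained to reduce the gap between its output and this target.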
