Humberto Bustince

ARTxAI: Explainable Artificial Intelligence Curates Deep Representation Learning for Artistic Images using Fuzzy Techniques

Aug 29, 2023

Abstract: Automatic art analysis employs different image processing techniques to classify and categorize works of art. When working with artistic images, we need to take into account further considerations compared to classical image processing. This is because artistic paintings change drastically depending on the author, the scene depicted, and the artistic style. This can result in features that perform very well in a given task but do not grasp the whole of the visual and symbolic information contained in a painting. In this paper, we show how the features obtained from different tasks in artistic image classification are suitable for solving other tasks of a similar nature. We present different methods to improve the generalization capabilities and performance of artistic classification systems. Furthermore, we propose an explainable artificial intelligence method that uses fuzzy rules to map known visual traits of an image to the features used by the deep learning model. These rules show the patterns and variables that are relevant to solving each task and how effective each of the patterns found is. Our results show that our proposed context-aware features can achieve up to $6\%$ and $26\%$ more accurate results than other context- and non-context-aware solutions, respectively, depending on the specific task. We also show that some of the features used by these models can be more clearly correlated to visual traits in the original image than others.

The Concept of Semantic Value in Social Network Analysis: an Application to Comparative Mythology

Sep 13, 2021

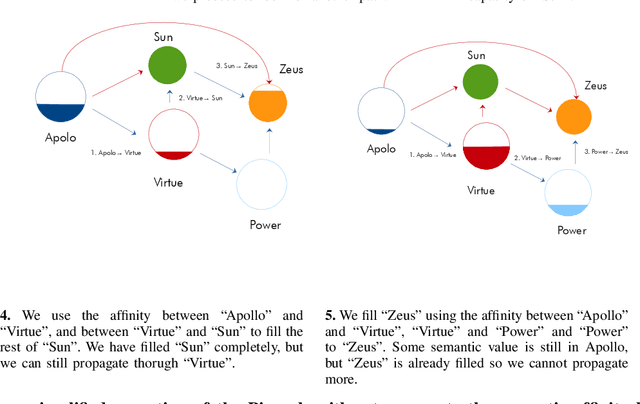

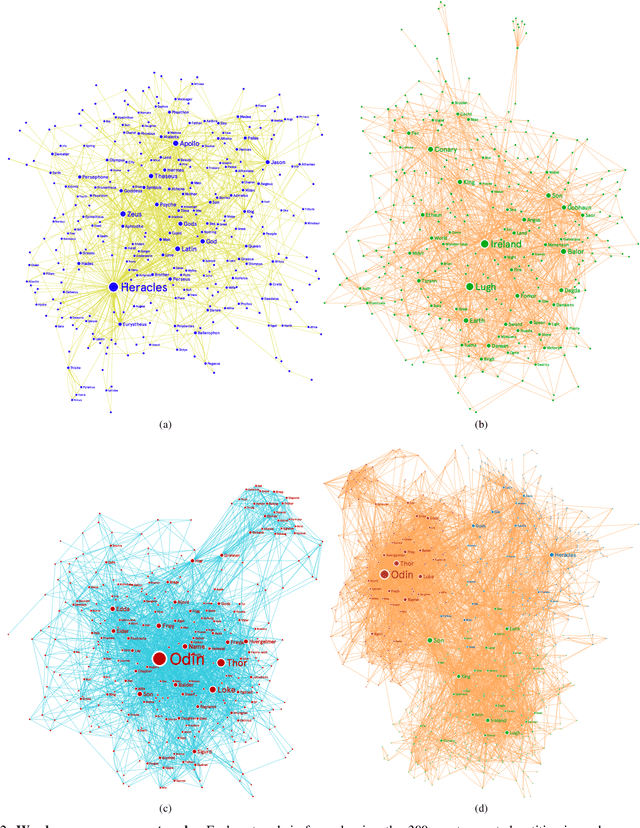

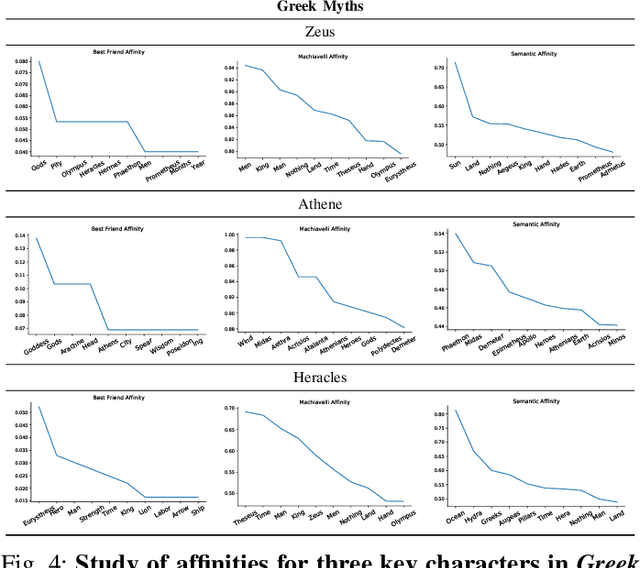

Abstract: Human sciences have traditionally relied on human reasoning and intelligence to infer knowledge from a wide range of sources, such as oral and written narrations, reports, and traditions. Here we develop an extension of classical social network analysis approaches to incorporate the concept of meaning in each actor, as a means to quantify and infer further knowledge from the original source of the network. This extension is based on a new affinity function, the semantic affinity, that establishes fuzzy-like relationships between the different actors in the network, using combinations of affinity functions. We also propose a new heuristic algorithm based on the shortest capacity problem to compute this affinity function. We use these concepts of meaning and semantic affinity to analyze and compare the gods and heroes from three different classical mythologies: Greek, Celtic and Nordic. We study the relationships of each individual mythology and those of the common structure that is formed when we fuse the three of them. We show a strong connection between the Celtic and Nordic gods and that the Greeks put more emphasis on heroic characters than on deities. Our approach provides a technique to highlight and quantify important relationships in the original domain of the network that are not deducible from its structural properties.

Towards interval uncertainty propagation control in bivariate aggregation processes and the introduction of width-limited interval-valued overlap functions

Jun 08, 2021

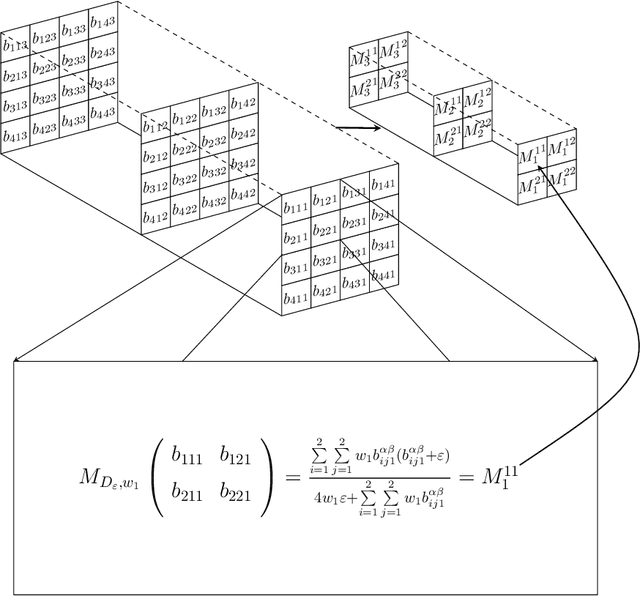

Abstract: Overlap functions are a class of aggregation functions that measure the overlapping degree between two values. Interval-valued overlap functions were defined as an extension to express the overlapping of interval-valued data, and they have usually been applied when there is uncertainty regarding the assignment of membership degrees. The choice of a total order for intervals can be significant, which motivated the recent developments on interval-valued aggregation functions and interval-valued overlap functions that are increasing with respect to a given admissible order, that is, a total order that refines the usual partial order for intervals. Width preservation has also been considered in these recent works, in an attempt to avoid increasing the uncertainty and to guarantee the information quality. However, no deeper study has been made of the relation between the widths of the input intervals and the output interval when applying interval-valued functions, or of how one can control such uncertainty propagation based on this relation. Thus, in this paper we: (i) introduce and develop the concepts of width-limited interval-valued functions and width limiting functions, presenting a theoretical approach to analyze the relation between the widths of the input and output intervals of bivariate interval-valued functions, with special attention to interval-valued aggregation functions; (ii) introduce the concept of $(a,b)$-ultramodular aggregation functions, a less restrictive extension of one-dimensional convexity for bivariate aggregation functions, which have an important predictable behaviour with respect to the width when extended to the interval-valued context; (iii) define width-limited interval-valued overlap functions, taking into account a function that controls the width of the output interval; (iv) present and compare three construction methods for these width-limited interval-valued overlap functions.
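To make the width question concrete, the following is a minimal sketch and not the paper's construction. It assumes the simplest endpoint-wise interval extension of an increasing function under the usual partial order, and uses the product and the minimum, two standard overlap functions. The names `interval_extension` and `width` are hypothetical helpers introduced only for illustration.

```python
# Sketch under stated assumptions: endpoint-wise extension of an increasing
# bivariate function to closed subintervals of [0, 1]. This is NOT the
# width-limited construction of the paper, only an illustration of how the
# output width relates to the input widths.

def interval_extension(overlap, x, y):
    """Apply an increasing function endpoint-wise to intervals x and y."""
    (x_lo, x_hi), (y_lo, y_hi) = x, y
    return (overlap(x_lo, y_lo), overlap(x_hi, y_hi))


def width(interval):
    """Width (upper bound minus lower bound) of an interval."""
    return interval[1] - interval[0]


def product_overlap(a, b):
    return a * b


def min_overlap(a, b):
    return min(a, b)


x = (0.6, 0.9)
y = (0.7, 1.0)

by_product = interval_extension(product_overlap, x, y)
by_minimum = interval_extension(min_overlap, x, y)

print("input widths:", width(x), width(y))
print("product:", by_product, "width:", width(by_product))
print("minimum:", by_minimum, "width:", width(by_minimum))
```

For these inputs the product yields an output wider than either input, while the minimum never exceeds the larger input width. That difference is the kind of uncertainty propagation the abstract proposes to control.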

Neuro-inspired edge feature fusion using Choquet integrals

Apr 22, 2021

Abstract: It is known that the human visual system performs a hierarchical information process in which early vision cues (or primitives) are fused in the visual cortex to compose complex shapes and descriptors. While some aspects of the process, such as lens adaptation or feature detection, have been extensively studied, others, such as feature fusion, have been mostly left aside. In this work we elaborate on the fusion of early vision primitives using generalizations of the Choquet integral and novel aggregation operators that have been extensively studied in recent years. We propose to use generalizations of the Choquet integral to sensibly fuse elementary edge cues, in an attempt to model the behaviour of neurons in the early visual cortex. Our proposal leads to a full-framed edge detection algorithm, whose performance is put to the test on state-of-the-art boundary detection datasets.
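As background for the fusion step, here is a minimal sketch of the standard discrete Choquet integral, not of the generalizations the paper studies. The cardinality-based power measure and the names `choquet_integral` and `power_measure` are assumptions chosen for illustration, and the input values stand in for hypothetical edge cues at one pixel.

```python
# Sketch under stated assumptions: standard discrete Choquet integral with a
# fuzzy measure given as a function on index sets. The power measure below is
# an illustrative choice, not the measure used in the paper.

def choquet_integral(values, measure):
    """Sum of (x_(i) - x_(i-1)) * measure({(i), ..., (n)}) over sorted inputs."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    total = 0.0
    previous = 0.0
    for rank, index in enumerate(order):
        # Indices whose values are at least as large as the current one.
        coalition = frozenset(order[rank:])
        total += (values[index] - previous) * measure(coalition)
        previous = values[index]
    return total


def power_measure(n, q):
    """Cardinality-based fuzzy measure: mu(A) = (|A| / n) ** q."""
    def measure(subset):
        return (len(subset) / n) ** q
    return measure


# Three hypothetical edge-strength cues for a single pixel, all in [0, 1].
cues = [0.2, 0.8, 0.5]

mean_like = choquet_integral(cues, power_measure(len(cues), 1.0))
cautious = choquet_integral(cues, power_measure(len(cues), 2.0))
print(mean_like, cautious)
```

With exponent 1 the measure is additive and the integral reduces to the arithmetic mean. Larger exponents give less weight to large coalitions of high values, so the result moves towards the minimum.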

A fusion method for multi-valued data

Jan 25, 2021

Abstract: In this paper we propose an extension of the notion of deviation-based aggregation function tailored to aggregate multidimensional data. Our objective is both to improve the results obtained by other methods that try to select the best aggregation function for a particular set of data, such as penalty functions, and to reduce the time complexity required by such approaches. We discuss how this notion can be defined and present three illustrative examples of the applicability of our new proposal in areas where time constraints can be strict, such as image processing, deep learning and decision making, obtaining favourable results in the process.

Motor-Imagery-Based Brain Computer Interface using Signal Derivation and Aggregation Functions

Jan 18, 2021

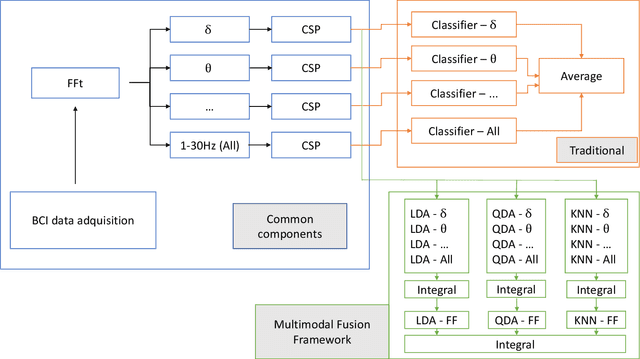

Abstract: Brain Computer Interface (BCI) technologies are popular methods of communication between the human brain and external devices. One of the most popular approaches to BCI is Motor Imagery (MI). In BCI applications, ElectroEncephaloGraphy (EEG) is a very popular measurement of brain dynamics because of its non-invasive nature. Although there is high interest in the BCI topic, the performance of existing systems is still far from ideal, due to the difficulty of performing pattern recognition tasks on EEG signals. BCI systems are composed of a wide range of components that perform signal pre-processing, feature extraction and decision making. In this paper, we define a BCI framework, named Enhanced Fusion Framework, in which we propose three different ideas to improve existing MI-based BCI frameworks. Firstly, we include an additional pre-processing step: a differentiation of the EEG signal that makes it time-invariant. Secondly, we add an additional frequency band as a feature for the system and show its effect on the performance of the system. Finally, we conduct an in-depth study of how to make the final decision in the system. We propose the use of up to six different types of classifiers together with a wide range of aggregation functions (including classical aggregations, Choquet and Sugeno integrals and their extensions, and overlap functions) to fuse the information given by the considered classifiers. We have tested this new system on a dataset of 20 volunteers performing motor imagery-based brain-computer interface experiments. On this dataset, the new system achieved an accuracy of 88.80%. We also propose an optimized version of our system that is able to obtain up to 90.76%. Furthermore, we find that the pair Choquet/Sugeno integrals and overlap functions are the ones providing the best results.

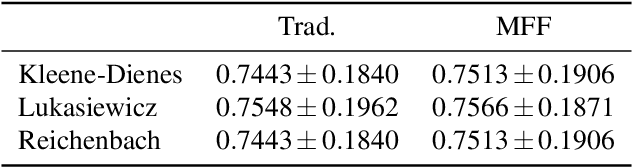

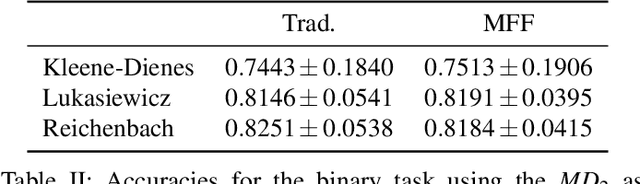

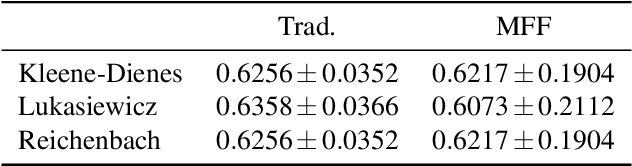

Interval-valued aggregation functions based on moderate deviations applied to Motor-Imagery-Based Brain Computer Interface

Nov 19, 2020

Abstract: In this work we study the use of moderate deviation functions to measure similarity and dissimilarity among a set of given interval-valued data. To do so, we introduce the notion of interval-valued moderate deviation function and we study in particular those interval-valued moderate deviation functions which preserve the width of the input intervals. Then, we study how to apply these functions to construct interval-valued aggregation functions. We have applied them in the decision-making phase of two Motor-Imagery Brain Computer Interface frameworks, obtaining better results than those obtained using other numerical and interval-valued aggregations.

Learning ordered pooling weights in image classification

Jul 02, 2020

Abstract: Spatial pooling is an important step in computer vision systems like Convolutional Neural Networks or the Bag-of-Words method. The purpose of spatial pooling is to combine neighbouring descriptors to obtain a single descriptor for a given region (local or global). The resulting combined vector must be as discriminant as possible; in other words, it must contain relevant information while removing irrelevant and confusing details. Maximum and average are the most common aggregation functions used in the pooling step. To improve the aggregation of relevant information without degrading its discriminative power for image classification, we introduce a simple but effective scheme based on Ordered Weighted Average (OWA) aggregation operators. We present a method to learn the weights of the OWA aggregation operator in a Bag-of-Words framework and in Convolutional Neural Networks, and provide an extensive evaluation showing that OWA-based pooling outperforms classical aggregation operators.
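The relation between OWA pooling and the usual maximum and average pooling can be sketched as follows. This is a minimal illustration with hand-picked weights; the paper's procedure for learning the weights is not reproduced here, and the function name `owa_pool` is a hypothetical helper.

```python
# Sketch under stated assumptions: an Ordered Weighted Average over the
# activations of one pooling region, with fixed (not learned) weights.

def owa_pool(activations, weights):
    """Weighted sum of the activations sorted in decreasing order."""
    if len(activations) != len(weights):
        raise ValueError("one weight per activation is required")
    if abs(sum(weights) - 1.0) > 1e-9 or min(weights) < 0.0:
        raise ValueError("weights must be non-negative and sum to 1")
    ordered = sorted(activations, reverse=True)
    return sum(w * a for w, a in zip(weights, ordered))


region = [0.1, 0.9, 0.4, 0.6]  # activations in a 2x2 pooling window

as_max = owa_pool(region, [1.0, 0.0, 0.0, 0.0])        # all weight on the largest value
as_mean = owa_pool(region, [0.25, 0.25, 0.25, 0.25])   # uniform weights
in_between = owa_pool(region, [0.5, 0.3, 0.2, 0.0])    # emphasises large activations

print(as_max, as_mean, in_between)
```

Because the weights attach to ranks rather than positions, maximum and average pooling are both special cases, and any other weight vector interpolates between order statistics of the region.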

Co-occurrence of deep convolutional features for image search

Mar 30, 2020

Abstract: Image search can be tackled using deep features from pre-trained Convolutional Neural Networks (CNN). The feature map from the last convolutional layer of a CNN encodes descriptive information from which a discriminative global descriptor can be obtained. We propose a new representation of co-occurrences from deep convolutional features to extract additional relevant information from this last convolutional layer. Combining this co-occurrence map with the feature map, we achieve an improved image representation. We present two different methods to obtain the co-occurrence representation: the first is based on direct aggregation of activations, and the second on a trainable co-occurrence representation. The image descriptors derived from our methodology improve performance on well-known image retrieval datasets, as our experiments show.

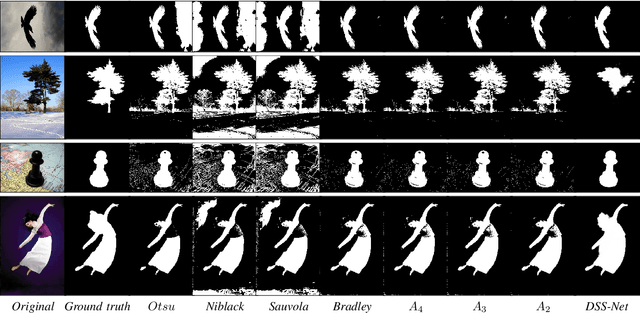

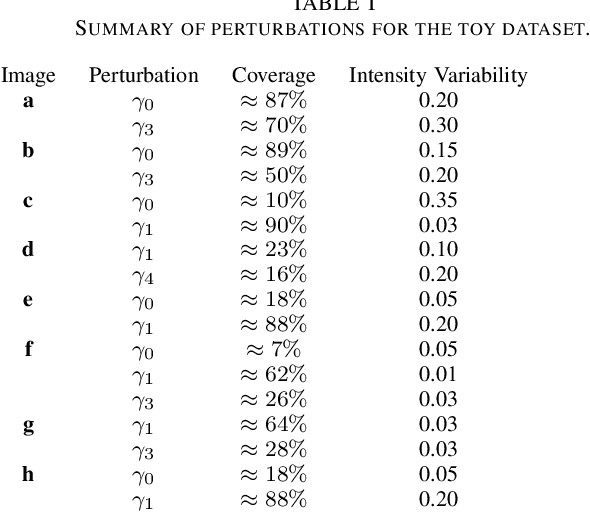

Adaptive binarization based on fuzzy integrals

Mar 04, 2020

Abstract: Adaptive binarization methodologies threshold the intensity of the pixels with respect to adjacent pixels by exploiting integral images. In turn, the integral images are generally computed optimally using the summed-area-table algorithm (SAT). This document presents a new adaptive binarization technique based on fuzzy integral images through an efficient design of a modified SAT for fuzzy integrals. We define this new methodology as FLAT (Fuzzy Local Adaptive Thresholding). The experimental results show that the proposed methodology often produces better thresholding quality than traditional algorithms and saliency neural networks. We propose a new generalization of the Sugeno and CF 1,2 integrals to improve existing results with an efficient integral image computation. Therefore, these new generalized fuzzy integrals can be used as a tool for grayscale processing in real-time and deep-learning applications. Index Terms: Image Thresholding, Image Processing, Fuzzy Integrals, Aggregation Functions
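For readers unfamiliar with the baseline that FLAT modifies, the following is a minimal sketch of classical adaptive thresholding with an ordinary summed-area table. It is not the fuzzy-integral variant proposed in the paper. The window radius, the sensitivity parameter `t`, and all function names are assumptions made for illustration.

```python
# Sketch under stated assumptions: classical local-mean thresholding using a
# summed-area table (integral image). The fuzzy integral image of FLAT is NOT
# implemented here.

def summed_area_table(image):
    """Return a (rows+1) x (cols+1) table with a zero first row and column."""
    rows, cols = len(image), len(image[0])
    sat = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        for c in range(cols):
            sat[r + 1][c + 1] = (image[r][c] + sat[r][c + 1]
                                 + sat[r + 1][c] - sat[r][c])
    return sat


def window_sum(sat, r0, c0, r1, c1):
    """Sum of the pixels in rows [r0, r1) and columns [c0, c1), in O(1)."""
    return sat[r1][c1] - sat[r0][c1] - sat[r1][c0] + sat[r0][c0]


def adaptive_threshold(image, radius=1, t=0.15):
    """Mark a pixel 1 if it exceeds (1 - t) times its local window mean."""
    rows, cols = len(image), len(image[0])
    sat = summed_area_table(image)
    result = []
    for r in range(rows):
        r0, r1 = max(0, r - radius), min(rows, r + radius + 1)
        row = []
        for c in range(cols):
            c0, c1 = max(0, c - radius), min(cols, c + radius + 1)
            count = (r1 - r0) * (c1 - c0)
            local = window_sum(sat, r0, c0, r1, c1)
            # Compare pixel * count with sum * (1 - t) to avoid a division.
            row.append(1 if image[r][c] * count > local * (1.0 - t) else 0)
        result.append(row)
    return result


image = [[10, 10, 10],
         [10, 200, 10],
         [10, 10, 10]]
binary = adaptive_threshold(image)
for line in binary:
    print(line)
```

The table is built in one pass over the image, after which every window sum costs four lookups regardless of the window size. That constant-time lookup is the property the abstract's modified SAT aims to retain for fuzzy integrals.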
