Javier Andreu-Perez

A Fast Interpretable Fuzzy Tree Learner

Dec 12, 2025

Abstract:Fuzzy rule-based systems have been mostly used in interpretable decision-making because of their interpretable linguistic rules. However, interpretability requires both sensible linguistic partitions and small rule-base sizes, which are not guaranteed by many existing fuzzy rule-mining algorithms. Evolutionary approaches can produce high-quality models but suffer from prohibitive computational costs, while neural-based methods like ANFIS have problems retaining linguistic interpretations. In this work, we propose an adaptation of classical tree-based splitting algorithms from crisp rules to fuzzy trees, combining the computational efficiency of greedy algorithms with the interpretability advantages of fuzzy logic. This approach achieves interpretable linguistic partitions and substantially improves running time compared to evolutionary-based approaches while maintaining competitive predictive performance. Our experiments on tabular classification benchmarks show that our method achieves comparable accuracy to state-of-the-art fuzzy classifiers with significantly lower computational cost and produces more interpretable rule bases with constrained complexity. Code is available at: https://github.com/Fuminides/fuzzy_greedy_tree_public
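
To make the idea of greedy splitting over linguistic partitions concrete, here is a minimal sketch. It is not the authors' algorithm (see the linked repository for that); it assumes a fixed three-label partition ("low", "medium", "high") and a membership-weighted Gini impurity, and every function name is hypothetical.

```python
def partition_memberships(x, lo, mid, hi):
    """Memberships of x in ('low', 'medium', 'high'); they always sum to 1."""
    if x <= lo:
        return (1.0, 0.0, 0.0)
    if x >= hi:
        return (0.0, 0.0, 1.0)
    if x <= mid:
        m = (x - lo) / (mid - lo)
        return (1.0 - m, m, 0.0)
    h = (x - mid) / (hi - mid)
    return (0.0, 1.0 - h, h)


def weighted_gini(weights, labels):
    """Gini impurity where each sample counts with its membership weight."""
    total = sum(weights)
    if total == 0:
        return 0.0
    mass = {}
    for w, y in zip(weights, labels):
        mass[y] = mass.get(y, 0.0) + w
    return 1.0 - sum((m / total) ** 2 for m in mass.values())


def fuzzy_split_impurity(values, labels, lo, mid, hi):
    """Impurity after splitting one feature into three fuzzy branches."""
    mu = [partition_memberships(v, lo, mid, hi) for v in values]
    n = len(values)
    score = 0.0
    for k in range(3):  # one branch per linguistic label
        branch_weights = [m[k] for m in mu]
        score += (sum(branch_weights) / n) * weighted_gini(branch_weights, labels)
    return score
```

A greedy learner in this style would evaluate `fuzzy_split_impurity` for every candidate feature and split on the one with the lowest score, exactly as a crisp decision tree does with crisp thresholds.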

Crisp complexity of fuzzy classifiers

Apr 22, 2025

Abstract:Rule-based systems are a very popular form of explainable AI, particularly in the fuzzy community, where fuzzy rules are widely used for control and classification problems. However, fuzzy rule-based classifiers struggle to gain traction outside of fuzzy venues, because users are sometimes unfamiliar with fuzzy logic and because fuzzy partitions are not always easy to interpret. In this work, we propose a methodology to reduce fuzzy rule-based classifiers to crisp rule-based classifiers. We study different possible crisp descriptions and implement an algorithm to obtain them. We also analyze the complexity of the resulting crisp classifiers. We believe that our results can help both fuzzy and non-fuzzy practitioners better understand how fuzzy rule bases partition the feature space and how easily one system can be translated into the other. Our complexity metric can also help to choose between different fuzzy classifiers based on what the equivalent crisp partitions look like.

Reliable Classification with Conformal Learning and Interval-Type 2 Fuzzy Sets

Apr 21, 2025

Abstract:Classical machine learning classifiers tend to be overconfident and can be unreliable outside of laboratory benchmarks. Properly assessing the reliability of the model's output for each sample is instrumental in real-life scenarios where these systems are deployed. Because of this, different techniques have been employed to quantify the quality of prediction for a given model. These are most commonly Bayesian statistics and, more recently, conformal learning. Given a calibration set, conformal learning can produce outputs that are guaranteed to cover the target class with a desired significance level, and are more reliable than the standard confidence intervals used by Bayesian methods. In this work, we propose to use conformal learning with fuzzy rule-based systems in classification and show some metrics of their performance. Then, we discuss how the use of type 2 fuzzy sets can improve the quality of the output of the system compared to both fuzzy and crisp rules. Finally, we also discuss how the fine-tuning of the system can be adapted to improve the quality of the conformal prediction.
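
The calibration step mentioned in the abstract can be illustrated with standard split conformal prediction for classification. The sketch below is a generic textbook version, not the paper's fuzzy-rule-based procedure. It assumes the nonconformity score `1 - p(true class)`, and the function names are hypothetical.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha):
    """Score threshold from a calibration set for significance level alpha."""
    # Nonconformity score: one minus the probability given to the true class.
    scores = sorted(1.0 - probs[label] for probs, label in zip(cal_probs, cal_labels))
    n = len(scores)
    # Finite-sample corrected rank (1-indexed): ceil((n + 1) * (1 - alpha)).
    rank = math.ceil((n + 1) * (1.0 - alpha))
    if rank > n:
        # Too few calibration samples for this alpha: keep every class.
        return float("inf")
    return scores[rank - 1]


def prediction_set(probs, threshold):
    """All classes whose nonconformity score does not exceed the threshold."""
    return [c for c, p in enumerate(probs) if 1.0 - p <= threshold]


cal_probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4], [0.3, 0.7]]
cal_labels = [0, 1, 0, 1]
threshold = conformal_threshold(cal_probs, cal_labels, alpha=0.2)
print(prediction_set([0.7, 0.3], threshold))
```

Under the usual exchangeability assumption, sets built this way contain the true class with probability at least `1 - alpha` on average over samples; the classifier producing `probs` can be any model, including a fuzzy rule base.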

Compact Rule-Based Classifier Learning via Gradient Descent

Feb 03, 2025

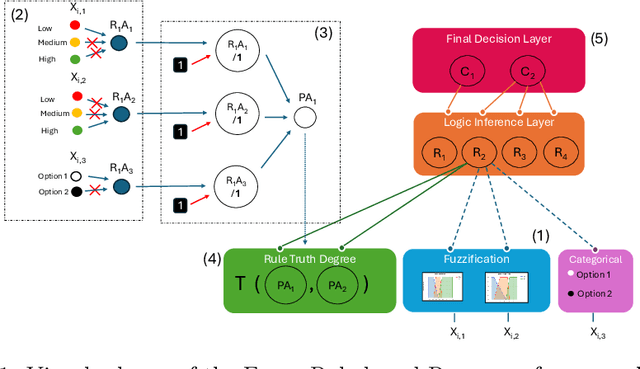

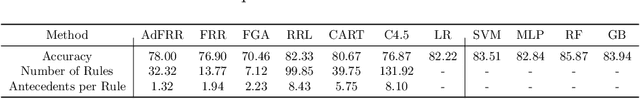

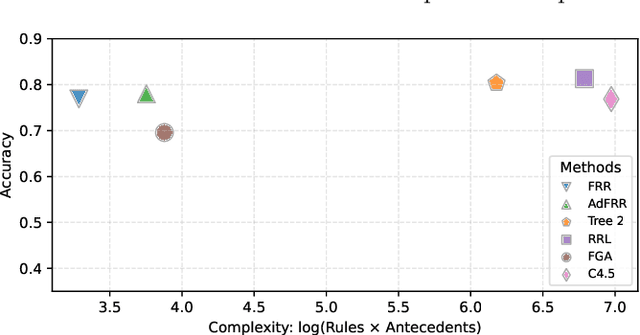

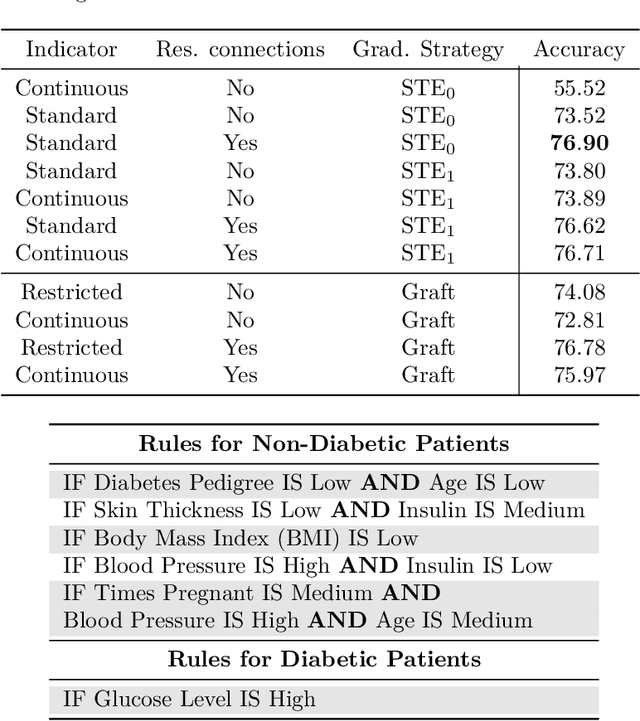

Abstract:Rule-based models play a crucial role in scenarios that require transparency and accountable decision-making. However, they primarily consist of discrete parameters and structures, which presents challenges for scalability and optimization. In this work, we introduce a new rule-based classifier trained using gradient descent, in which the user can control the maximum number and length of the rules. For numerical partitions, the user can also control the partitions used with fuzzy sets, which also helps keep the number of partitions small. We perform a series of exhaustive experiments on $40$ datasets to show how this classifier performs in terms of accuracy and rule base size. Then, we compare our results with a genetic search that fits an equivalent classifier and with other explainable and non-explainable state-of-the-art classifiers. Our results show how our method can obtain compact rule bases that use significantly fewer patterns than other rule-based methods and perform better than other explainable classifiers.

Transfer Learning with Active Sampling for Rapid Training and Calibration in BCI-P300 Across Health States and Multi-centre Data

Dec 14, 2024

Abstract:Machine learning and deep learning advancements have boosted Brain-Computer Interface (BCI) performance, but their wide-scale applicability is limited due to factors like individual health, hardware variations, and cultural differences affecting neural data. Studies often focus on single-site experiments in uniform settings, leading to high performance that may not translate well to real-world diversity. Deep learning models aim to enhance BCI classification accuracy, and transfer learning has been suggested to adapt models to individual neural patterns using a base model trained on others' data. This approach promises better generalizability and reduced overfitting, yet challenges remain in handling diverse and imbalanced datasets from different equipment, subjects, multiple centres in different countries, and both healthy and patient populations for effective model transfer and tuning. In a setting characterized by maximal heterogeneity, we propose P300 wave detection in BCIs employing a convolutional neural network fitted with adaptive transfer learning based on Poisson Disk Sampling (PDS), called Active Sampling (AS), which flexibly adjusts the transition from source data to the target domain. For subject-adaptive training with 40% adaptive fine-tuning, the average classification accuracy improved by 5.36% and the standard deviation was reduced by 12.22% on two distinct, internationally replicated datasets. These gains in classification accuracy, computational time, and training efficiency are mainly due to the proposed Active Sampling (AS) method for transfer learning.

Fuzzy Norm-Explicit Product Quantization for Recommender Systems

Dec 08, 2024

Abstract:As data resources grow, providing recommendations that best meet demand has become a vital requirement in business and life to overcome the information overload problem. However, building a system that suggests relevant recommendations has always been a point of debate. One of the most cost-efficient techniques for producing relevant recommendations at low complexity is Product Quantization (PQ). PQ approaches have continued developing in recent years. The crucial challenge for such systems is improving product quantization performance in terms of recall without increasing complexity. This makes the algorithm suitable for problems that require a greater number of potentially relevant items without disregarding others, at high speed and low cost to keep up with traffic. This is the case for online shops, where targeted recommendations are important, although customers may also be open to browsing other products. This research proposes a fuzzy approach to perform norm-based product quantization. Type-2 Fuzzy sets (T2FSs) define the codebook, allowing sub-vectors (T2FSs) to be associated with more than one element of the codebook, and its norm calculus is then resolved by means of integration. Our method raises the recall measure, making the algorithm suitable for problems that require retrieving as many potentially relevant items as possible without disregarding others. The proposed method outperforms all PQ approaches such as NEQ, PQ, and RQ by up to +6%, +5%, and +8%, achieving a recall of 94%, 69%, 59% in Netflix, Audio, Cifar60k datasets, respectively. Moreover, its computing time and complexity nearly equal those of the most computationally efficient existing PQ method in the state-of-the-art.
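
For readers unfamiliar with the baseline, the following is a minimal sketch of plain (crisp) product quantization, where each sub-vector is assigned to exactly one codeword. It does not implement the type-2 fuzzy or norm-explicit variants described above; the codebooks are small hand-written examples rather than trained ones, and all names are hypothetical.

```python
def split(vec, m):
    """Cut vec into m equal-length sub-vectors (len(vec) must be divisible by m)."""
    size = len(vec) // m
    return [vec[i * size:(i + 1) * size] for i in range(m)]


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def encode(vec, codebooks):
    """Replace each sub-vector by the index of its nearest codeword."""
    parts = split(vec, len(codebooks))
    return [
        min(range(len(cb)), key=lambda k: sq_dist(part, cb[k]))
        for part, cb in zip(parts, codebooks)
    ]


def decode(code, codebooks):
    """Approximate the original vector by concatenating the chosen codewords."""
    out = []
    for idx, cb in zip(code, codebooks):
        out.extend(cb[idx])
    return out


# Two sub-spaces with two codewords each (illustrative values only).
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]
code = encode([0.9, 0.8, 0.1, 0.9], codebooks)
print(code, decode(code, codebooks))
```

In this crisp scheme every sub-vector maps to a single codeword; the abstract's fuzzy approach instead lets a sub-vector be associated with more than one codebook element.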

Personalised and Adjustable Interval Type-2 Fuzzy-Based PPG Quality Assessment for the Edge

Sep 23, 2023

Abstract:Most of today's wearable technology provides seamless cardiac activity monitoring. Specifically, the vast majority employ Photoplethysmography (PPG) sensors to acquire blood volume pulse information, which is further analysed to extract useful and physiologically related features. Nevertheless, PPG-based signal reliability presents different challenges that strongly affect such data processing. This is mainly due to the distortion of the PPG wave morphology caused by motion artefacts, which can lead to erroneous interpretation of the extracted cardiac-related features. On this basis, in this paper, we propose a novel personalised and adjustable Interval Type-2 Fuzzy Logic System (IT2FLS) for assessing the quality of PPG signals. The proposed system employs a personalised approach to adapt the IT2FLS parameters to the unique characteristics of each individual's PPG signals. Additionally, the system provides adjustable levels of personalisation, allowing healthcare providers to adjust the system to meet specific requirements for different applications. The proposed system achieved an average accuracy of up to 93.72% during validation. The presented system has the potential to enable ultra-low complexity and real-time PPG quality assessment, improving the accuracy and reliability of PPG-based health monitoring systems at the edge.

ARTxAI: Explainable Artificial Intelligence Curates Deep Representation Learning for Artistic Images using Fuzzy Techniques

Aug 29, 2023

Abstract:Automatic art analysis employs different image processing techniques to classify and categorize works of art. When working with artistic images, we need to take into account further considerations compared to classical image processing. This is because such artistic paintings change drastically depending on the author, the scene depicted, and their artistic style. This can result in features that perform very well in a given task but do not grasp the whole of the visual and symbolic information contained in a painting. In this paper, we show how the features obtained from different tasks in artistic image classification are suitable for solving other tasks of a similar nature. We present different methods to improve the generalization capabilities and performance of artistic classification systems. Furthermore, we propose an explainable artificial intelligence method that uses fuzzy rules to map known visual traits of an image to the features used by the deep learning model. These rules show the patterns and variables that are relevant to solve each task and how effective each of the patterns found is. Our results show that our proposed context-aware features can achieve up to $6\%$ and $26\%$ more accurate results than other context- and non-context-aware solutions, respectively, depending on the specific task. We also show that some of the features used by these models can be more clearly correlated to visual traits in the original image than others.

A Temporal Type-2 Fuzzy System for Time-dependent Explainable Artificial Intelligence

Oct 22, 2022

Abstract:Explainable Artificial Intelligence (XAI) is a paradigm that delivers transparent models and decisions, which are easy to understand, analyze, and augment by a non-technical audience. Fuzzy Logic Systems (FLS) based XAI can provide an explainable framework, while also modeling uncertainties present in real-world environments, which renders it suitable for applications where explainability is a requirement. However, most real-life processes are not characterized by high levels of uncertainties alone; they are inherently time-dependent as well, i.e., the processes change with time. In this work, we present a novel Temporal Type-2 FLS Based Approach for time-dependent XAI (TXAI) systems, which can account for the likelihood of a measurement's occurrence in the time domain using the measurement's frequency of occurrence. In Temporal Type-2 Fuzzy Sets (TT2FSs), a four-dimensional (4D) time-dependent membership function is developed where relations are used to construct the inter-relations between the elements of the universe of discourse and their frequency of occurrence. On 10-fold test datasets, the TXAI system classified better, with a mean recall of 95.40%, than a standard XAI system (based on non-temporal general type-2 (GT2) fuzzy sets), which had a mean recall of 87.04%. TXAI also performed significantly better than most non-explainable AI systems, with improvements in mean recall between 3.95% and 19.04%. In addition, TXAI can also outline the most likely time-dependent trajectories using the frequency of occurrence values embedded in the TXAI model; viz. given a rule at a determined time interval, what will be the next most likely rule at a subsequent time interval. In this regard, the proposed TXAI system can have profound implications for delineating the evolution of real-life time-dependent processes, such as behavioural or biological processes.

A Gentle Introduction and Survey on Computing with Words Methodologies

Aug 12, 2022

Abstract:Human beings have an inherent capability to use linguistic information (LI) seamlessly even though it is vague and imprecise. Computing with Words (CWW) was proposed to impart computing systems with this capability of human beings. The interest in the field of CWW is evident from the number of publications on various CWW methodologies. These methodologies use different ways to model the semantics of the LI. However, to the best of our knowledge, the literature on these methodologies is mostly scattered and does not give an interested researcher a comprehensive but gentle guide to the notion and utility of these methodologies. Hence, to introduce the foundations and state-of-the-art CWW methodologies, we provide concise but wide-ranging coverage of them in a simple and easy-to-understand manner. We feel that the simplicity with which we give a high-quality review and introduction to the CWW methodologies is very useful for investigators, especially those embarking on the use of CWW for the first time. We also provide future research directions for interested and motivated researchers to build upon.
