Mehrin Kiani

A Temporal Type-2 Fuzzy System for Time-dependent Explainable Artificial Intelligence

Oct 22, 2022

Abstract:Explainable Artificial Intelligence (XAI) is a paradigm that delivers transparent models and decisions, which are easy for a non-technical audience to understand, analyze, and augment. Fuzzy Logic Systems (FLS) based XAI can provide an explainable framework while also modeling the uncertainties present in real-world environments, which renders it suitable for applications where explainability is a requirement. However, most real-life processes are not characterized by high levels of uncertainty alone; they are inherently time-dependent as well, i.e., the processes change with time. In this work, we present a novel Temporal Type-2 FLS-based approach for time-dependent XAI (TXAI) systems, which can account for the likelihood of a measurement's occurrence in the time domain using the measurement's frequency of occurrence. In Temporal Type-2 Fuzzy Sets (TT2FSs), a four-dimensional (4D) time-dependent membership function is developed, where relations are used to construct the inter-relations between the elements of the universe of discourse and their frequency of occurrence. On 10-fold test datasets, the TXAI system showed better classification performance, with a mean recall of 95.40%, than a standard XAI system (based on non-temporal general type-2 (GT2) fuzzy sets), which had a mean recall of 87.04%. TXAI also performed significantly better than most non-explainable AI systems, with an improvement in mean recall of between 3.95% and 19.04%. In addition, TXAI can outline the most likely time-dependent trajectories using the frequency of occurrence values embedded in the TXAI model; viz., given a rule at a determined time interval, what will be the next most likely rule at a subsequent time interval. In this regard, the proposed TXAI system can have profound implications for delineating the evolution of real-life time-dependent processes, such as behavioural or biological processes.
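The idea of reading off the next most likely rule from frequency-of-occurrence values can be illustrated with a minimal sketch. This is not the TXAI model from the paper: it assumes, purely for illustration, that each observation is a sequence of rule labels fired at consecutive time intervals and that frequency of occurrence is a plain transition count. The function names and the rule labels `R1`, `R2`, `R3` are hypothetical.

```python
from collections import Counter, defaultdict


def next_rule_table(rule_sequences):
    """Count how often each rule is followed by each other rule."""
    counts = defaultdict(Counter)
    for seq in rule_sequences:
        # Pair every rule with the rule fired at the next time interval.
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts, rule):
    """Return the most frequent successor of `rule`, or None if unseen."""
    if rule not in counts or not counts[rule]:
        return None
    return counts[rule].most_common(1)[0][0]


# Hypothetical rule labels fired at three consecutive time intervals.
sequences = [
    ["R1", "R2", "R3"],
    ["R1", "R2", "R2"],
    ["R1", "R3", "R3"],
]
table = next_rule_table(sequences)
print(most_likely_next(table, "R1"))
```

Here `R1` is followed by `R2` in two of the three sequences, so `R2` is reported as its most likely successor. A rule that never appears as a predecessor yields `None`.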

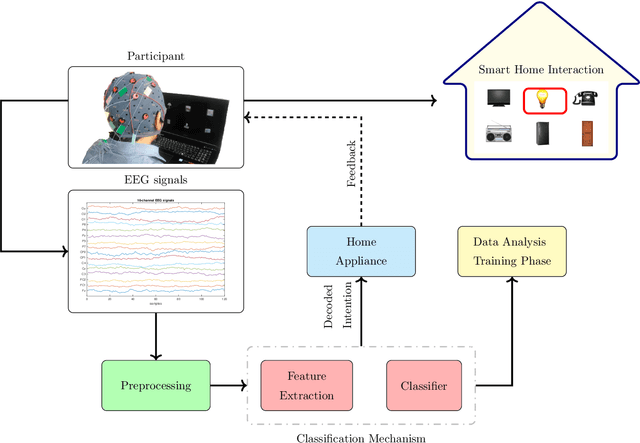

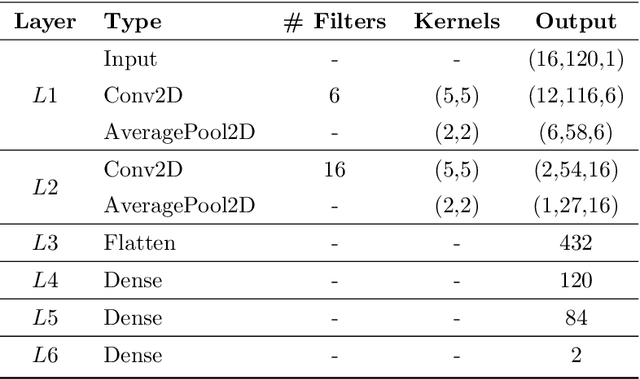

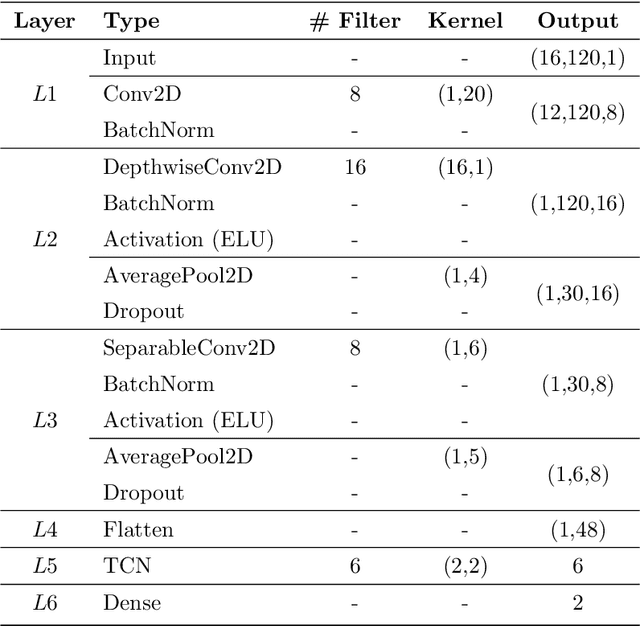

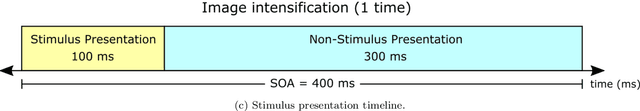

Fuzzy temporal convolutional neural networks in P300-based Brain-computer interface for smart home interaction

Apr 09, 2022

Abstract:The processing and classification of electroencephalographic (EEG) signals are increasingly performed using deep learning frameworks, such as convolutional neural networks (CNNs), to generate abstract features from brain data automatically, paving the way for remarkable classification prowess. However, EEG patterns exhibit high variability across time and uncertainty due to noise. This is a significant problem for P300-based Brain-Computer Interfaces (BCIs) for smart home interaction, which operate in non-optimal natural environments where added noise is often present. In this work, we propose a sequential unification of temporal convolutional networks (TCNs) adapted to EEG signals, LSTM cells, and a fuzzy neural block (FNB), which we call EEG-TCFNet. Fuzzy components may enable a higher tolerance to noisy conditions. We applied three different architectures, comparing the effect of using the FNB to classify a P300 wave, to build a BCI for smart home interaction with healthy and post-stroke individuals. Our results showed a maximum classification accuracy of 98.6% and 74.3% using the proposed EEG-TCFNet method in the subject-dependent and subject-independent strategies, respectively. Overall, the three CNN topologies with FNB outperformed those without FNB. In addition, we compared the addition of FNB to other state-of-the-art methods and obtained higher classification accuracies on account of the integration with FNB. The remarkable performance of the proposed model, EEG-TCFNet, and the general integration of fuzzy units into other classifiers would pave the way for enhanced P300-based BCIs for smart home interaction within natural settings.

Towards Understanding Human Functional Brain Development with Explainable Artificial Intelligence: Challenges and Perspectives

Dec 24, 2021

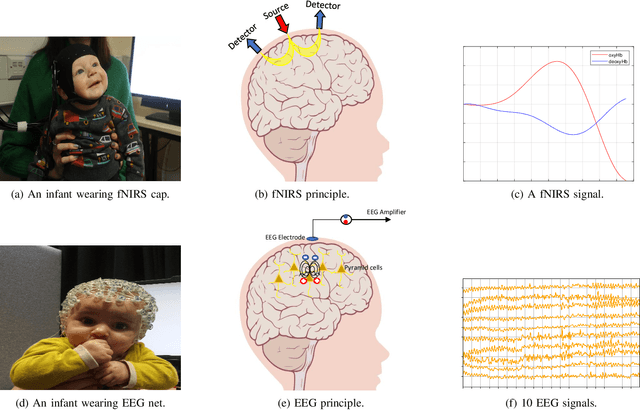

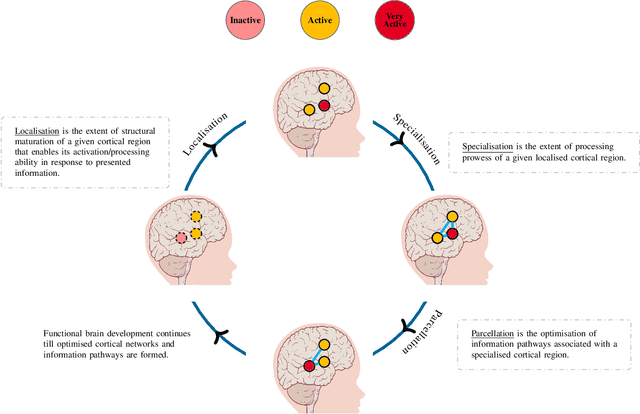

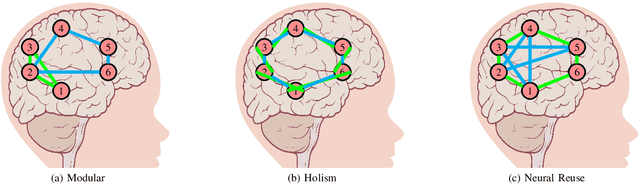

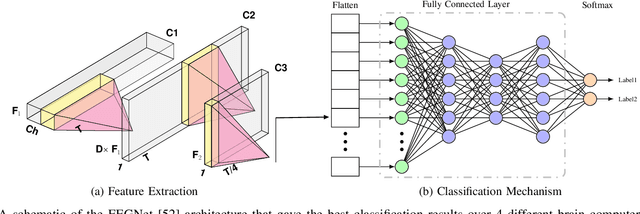

Abstract:The last decades have seen significant advancements in non-invasive neuroimaging technologies that have been increasingly adopted to examine human brain development. However, these improvements have not necessarily been followed by more sophisticated data analysis measures that are able to explain the mechanisms underlying functional brain development. For example, the shift from univariate (single area in the brain) to multivariate (multiple areas in the brain) analysis paradigms is of significance as it allows investigations into the interactions between different brain regions. However, despite the potential of multivariate analysis to shed light on the interactions between developing brain regions, the artificial intelligence (AI) techniques applied render the analysis non-explainable. The purpose of this paper is to understand the extent to which current state-of-the-art AI techniques can inform functional brain development. In addition, the paper reviews which AI techniques are more likely to explain their learning based on the processes of brain development as defined by developmental cognitive neuroscience (DCN) frameworks. This work also proposes that eXplainable AI (XAI) may provide viable methods to investigate functional brain development as hypothesised by DCN frameworks.

Recognition of Patient Groups with Sleep Related Disorders using Bio-signal Processing and Deep Learning

Nov 10, 2021

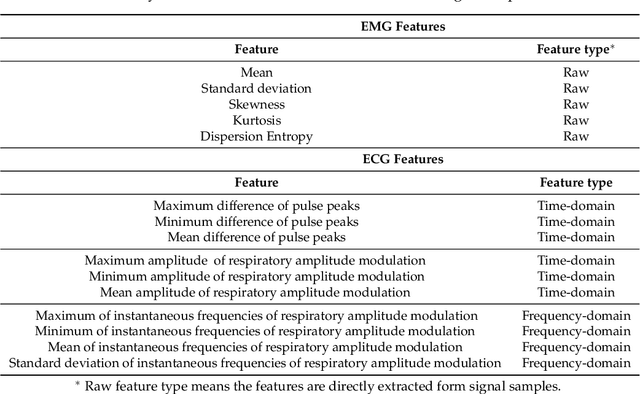

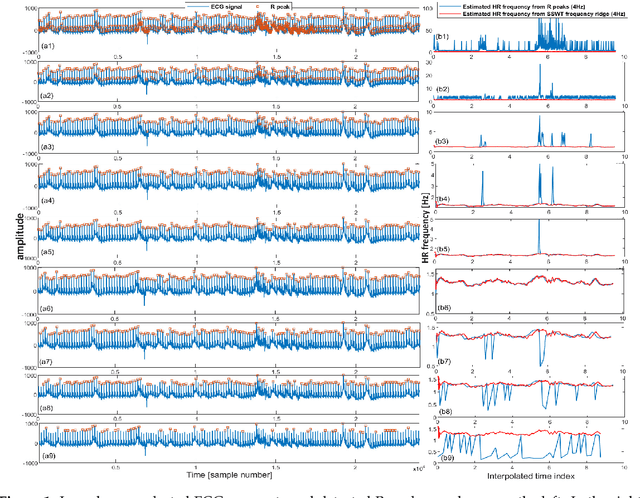

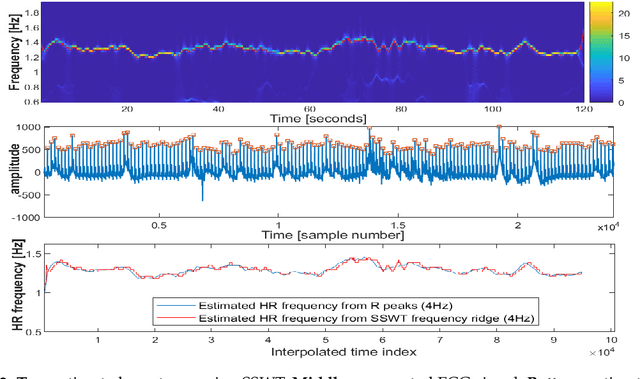

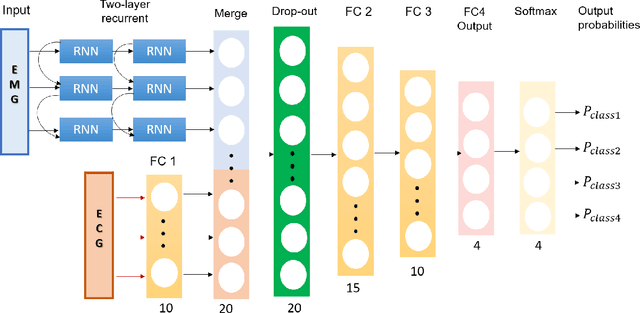

Abstract:Accurately diagnosing sleep disorders is essential for clinical assessments and treatments. Polysomnography (PSG) has long been used for the detection of various sleep disorders. In this research, electrocardiography (ECG) and electromyography (EMG) have been used for the recognition of breathing and movement-related sleep disorders. Bio-signal processing has been performed by extracting EMG features exploiting entropy and statistical moments, in addition to developing an iterative pulse peak detection algorithm using synchrosqueezed wavelet transform (SSWT) for reliable extraction of heart rate and breathing-related features from ECG. A deep learning framework has been designed to incorporate EMG and ECG features. The framework has been used to classify four groups: healthy subjects, patients with obstructive sleep apnea (OSA), patients with restless leg syndrome (RLS), and patients with both OSA and RLS. The proposed deep learning framework produced a mean accuracy of 72% and a weighted F1 score of 0.57 across subjects for our formulated four-class problem.

* Paper is offered by the publisher as Open Access: https://www.mdpi.com/1424-8220/20/9/2594
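The abstract above reports a weighted F1 score for a four-class problem. As a hedged illustration of how that metric is usually defined (per-class F1 averaged with weights proportional to each class's support), here is a minimal sketch. The function name `weighted_f1` and the example labels are assumptions made for illustration; they are not the paper's code or data.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Support-weighted average of per-class F1 scores."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = n - tp
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the denominator is 0.
        f1 = 2 * tp / denom if denom else 0.0
        score += (n / total) * f1
    return score


# Invented labels for the four groups named in the abstract.
y_true = ["healthy", "OSA", "RLS", "OSA+RLS", "OSA", "healthy"]
y_pred = ["healthy", "OSA", "OSA", "OSA+RLS", "OSA", "RLS"]
print(round(weighted_f1(y_true, y_pred), 3))
```

Because the weights follow class support, a classifier can reach a reasonable accuracy while the weighted F1 stays lower if some classes are predicted poorly.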

A Generic Deep Learning Based Cough Analysis System from Clinically Validated Samples for Point-of-Need Covid-19 Test and Severity Levels

Nov 10, 2021

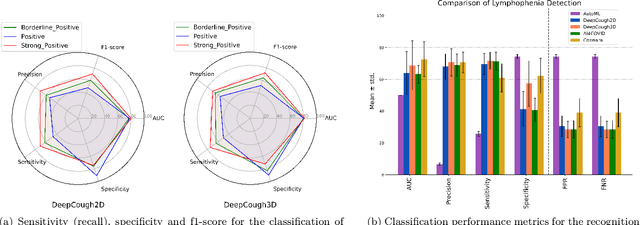

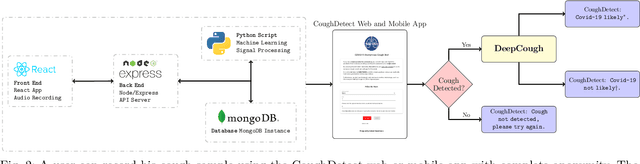

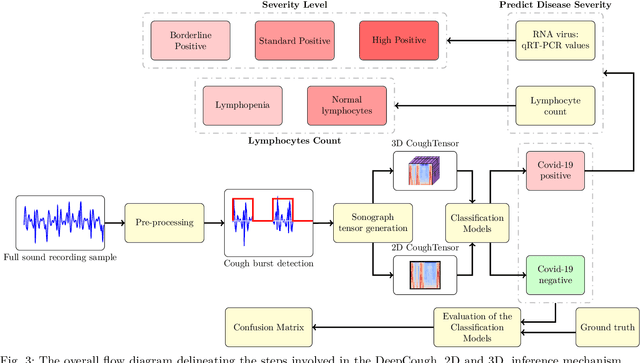

Abstract:We seek to evaluate the detection performance of a rapid primary screening tool for Covid-19 based solely on the cough sound, using 8,380 clinically validated samples with laboratory molecular tests (2,339 Covid-19 positives and 6,041 Covid-19 negatives). Samples were clinically labeled according to the results and severity based on quantitative RT-PCR (qRT-PCR) analysis, cycle threshold, and lymphocyte count from the patients. Our proposed generic method is an algorithm based on Empirical Mode Decomposition (EMD) with subsequent classification based on a tensor of audio features and a deep artificial neural network classifier with convolutional layers called 'DeepCough'. Two different versions of DeepCough based on the number of tensor dimensions, i.e., DeepCough2D and DeepCough3D, have been investigated. These methods have been deployed in a multi-platform proof-of-concept Web App, CoughDetect, to administer this test anonymously. Covid-19 recognition achieved a promising AUC (Area Under Curve) of 98.80 ± 0.83%, sensitivity of 96.43 ± 1.85%, and specificity of 96.20 ± 1.74%, and an AUC of 81.08 ± 5.05% for the recognition of three severity levels. Our proposed web tool and underpinning algorithm for the robust, fast, point-of-need identification of Covid-19 facilitate the rapid detection of the infection. We believe that it has the potential to significantly hamper the Covid-19 pandemic across the world.
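For readers less familiar with the screening metrics quoted above, the following minimal sketch shows how sensitivity and specificity are computed from confusion-matrix counts. The counts used are invented for illustration and are not the paper's results; the function name is hypothetical.

```python
def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # share of true positives that were detected
    specificity = tn / (tn + fp)  # share of true negatives that were rejected
    return sensitivity, specificity


# Invented counts: 100 positive and 100 negative samples.
sens, spec = sensitivity_specificity(tp=90, fn=10, tn=80, fp=20)
print(sens, spec)
```

With these made-up counts the sketch gives a sensitivity of 0.9 and a specificity of 0.8.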
