Silvia Rigato

Learning mental states estimation through self-observation: a developmental synergy between intentions and beliefs representations in a deep-learning model of Theory of Mind

Jul 25, 2024

Abstract: Theory of Mind (ToM), the ability to attribute beliefs, intentions, or mental states to others, is a crucial feature of human social interaction. In complex environments, where the human sensory system reaches its limits, behaviour is strongly driven by our beliefs about the state of the world around us. Accessing others' mental states, e.g., beliefs and intentions, allows for more effective social interactions in natural contexts. Yet, these variables are not directly observable, making understanding ToM a challenging quest of interest for different fields, including psychology, machine learning and robotics. In this paper, we contribute to this topic by showing a developmental synergy between learning to predict low-level mental states (e.g., intentions, goals) and attributing high-level ones (i.e., beliefs). Specifically, we assume that learning beliefs attribution can occur by observing one's own decision processes involving beliefs, e.g., in a partially observable environment. Using a simple feed-forward deep learning model, we show that, when learning to predict others' intentions and actions, more accurate predictions can be acquired earlier if beliefs attribution is learnt simultaneously. Furthermore, we show that the learning performance improves even when observed actors have a different embodiment than the observer and the gain is higher when observing beliefs-driven chunks of behaviour. We propose that our computational approach can inform the understanding of human social cognitive development and be relevant for the design of future adaptive social robots able to autonomously understand, assist, and learn from human interaction partners in novel natural environments and tasks.

Towards Understanding Human Functional Brain Development with Explainable Artificial Intelligence: Challenges and Perspectives

Dec 24, 2021

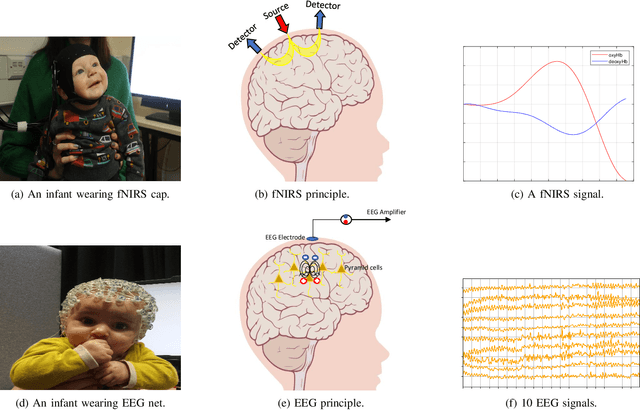

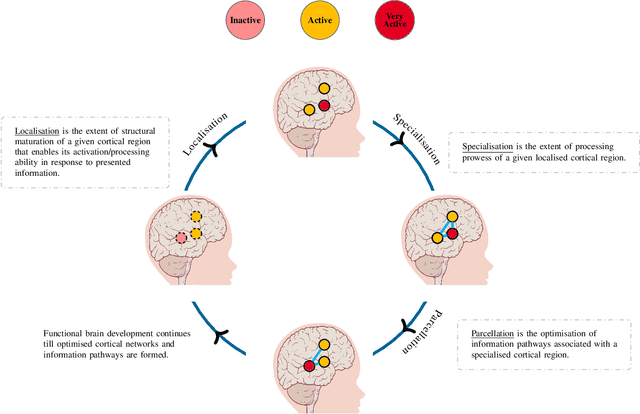

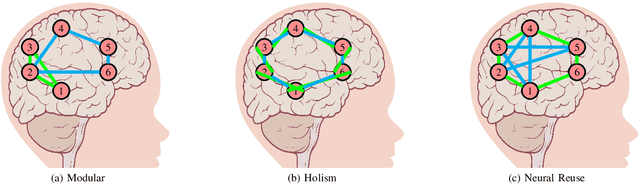

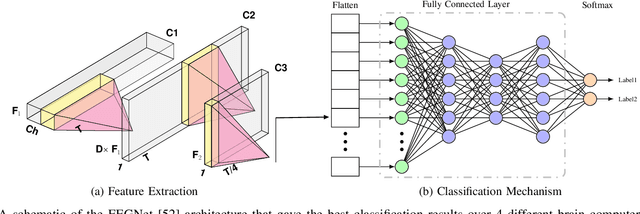

Abstract: The last decades have seen significant advancements in non-invasive neuroimaging technologies that have been increasingly adopted to examine human brain development. However, these improvements have not necessarily been followed by more sophisticated data analysis measures that are able to explain the mechanisms underlying functional brain development. For example, the shift from univariate (single area in the brain) to multivariate (multiple areas in the brain) analysis paradigms is of significance as it allows investigations into the interactions between different brain regions. However, despite the potential of multivariate analysis to shed light on the interactions between developing brain regions, the artificial intelligence (AI) techniques applied render the analysis non-explainable. The purpose of this paper is to understand the extent to which current state-of-the-art AI techniques can inform functional brain development. In addition, a review of which AI techniques are more likely to explain their learning based on the processes of brain development as defined by developmental cognitive neuroscience (DCN) frameworks is also undertaken. This work also proposes that eXplainable AI (XAI) may provide viable methods to investigate functional brain development as hypothesised by DCN frameworks.
