Francesca Bianco

Learning mental states estimation through self-observation: a developmental synergy between intentions and beliefs representations in a deep-learning model of Theory of Mind

Jul 25, 2024

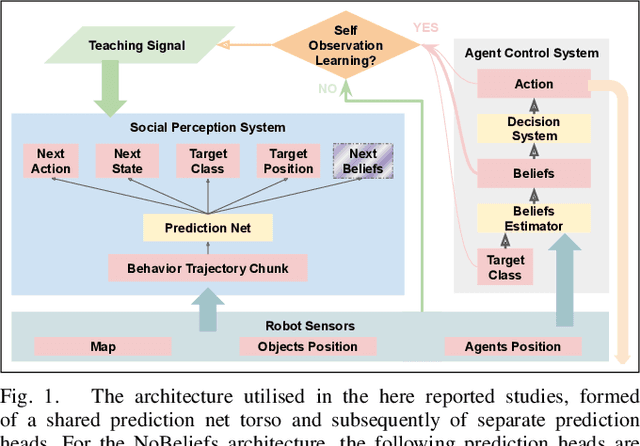

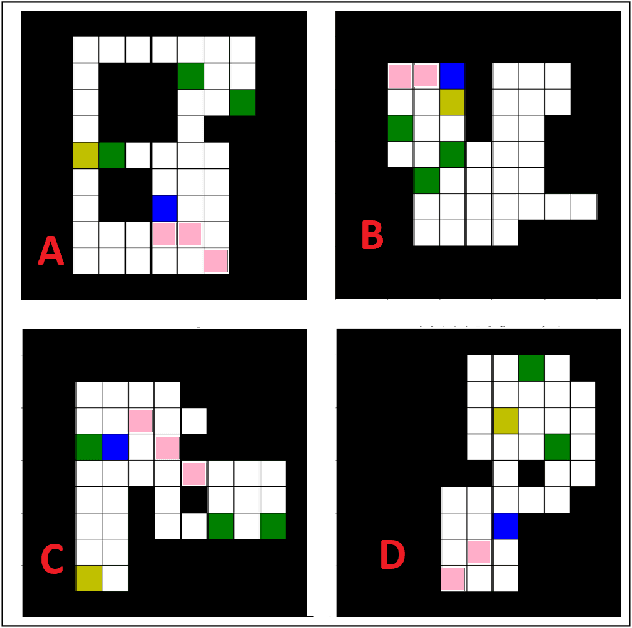

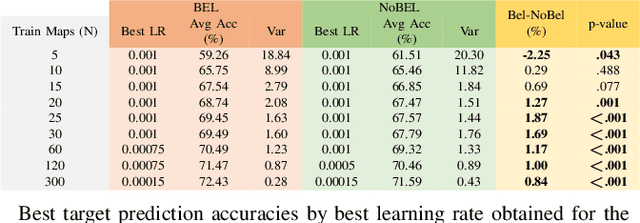

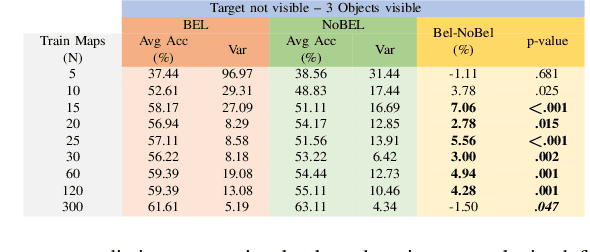

Abstract: Theory of Mind (ToM), the ability to attribute beliefs, intentions, or mental states to others, is a crucial feature of human social interaction. In complex environments, where the human sensory system reaches its limits, behaviour is strongly driven by our beliefs about the state of the world around us. Accessing others' mental states, e.g., beliefs and intentions, allows for more effective social interactions in natural contexts. Yet, these variables are not directly observable, making understanding ToM a challenging quest of interest for different fields, including psychology, machine learning and robotics. In this paper, we contribute to this topic by showing a developmental synergy between learning to predict low-level mental states (e.g., intentions, goals) and attributing high-level ones (i.e., beliefs). Specifically, we assume that learning beliefs attribution can occur by observing one's own decision processes involving beliefs, e.g., in a partially observable environment. Using a simple feed-forward deep learning model, we show that, when learning to predict others' intentions and actions, more accurate predictions can be acquired earlier if beliefs attribution is learnt simultaneously. Furthermore, we show that the learning performance improves even when observed actors have a different embodiment than the observer and the gain is higher when observing beliefs-driven chunks of behaviour. We propose that our computational approach can inform the understanding of human social cognitive development and be relevant for the design of future adaptive social robots able to autonomously understand, assist, and learn from human interaction partners in novel natural environments and tasks.

Robot Learning Theory of Mind through Self-Observation: Exploiting the Intentions-Beliefs Synergy

Oct 17, 2022

Abstract: In complex environments, where the human sensory system reaches its limits, our behaviour is strongly driven by our beliefs about the state of the world around us. Accessing others' beliefs, intentions, or mental states in general, could thus allow for more effective social interactions in natural contexts. Yet these variables are not directly observable. Theory of Mind (ToM), the ability to attribute beliefs, intentions, or mental states in general to other agents, is a crucial feature of human social interaction and has become of interest to the robotics community. Recently, new models that are able to learn ToM have been introduced. In this paper, we show the synergy between learning to predict low-level mental states, such as intentions and goals, and attributing high-level ones, such as beliefs. We assume that learning of beliefs can take place by observing one's own decision and beliefs estimation processes in partially observable environments. Using a simple feed-forward deep learning model, we show that when learning to predict others' intentions and actions, faster and more accurate predictions can be acquired if beliefs attribution is learnt simultaneously with action and intentions prediction. We show that the learning performance improves even when observing agents with a different decision process and is higher when observing beliefs-driven chunks of behaviour. We propose that our architectural approach can be relevant for the design of future adaptive social robots that should be able to autonomously understand and assist human partners in novel natural environments and tasks.

Transferring Adaptive Theory of Mind to social robots: insights from developmental psychology to robotics

Aug 31, 2019

Abstract: Despite the recent advancement in the social robotic field, important limitations restrain its progress and delay the application of robots in everyday scenarios. In the present paper, we propose to develop computational models inspired by our knowledge of human infants' social adaptive abilities. We believe this may provide solutions at an architectural level to overcome the limits of current systems. Specifically, we present the functional advantages that adaptive Theory of Mind (ToM) systems would support in robotics (i.e., mentalizing for belief understanding, proactivity and preparation, active perception and learning) and contextualize them in practical applications. We review current computational models mainly based on the simulation and teleological theories, and robotic implementations to identify the limitations of ToM functions in current robotic architectures and suggest a possible future developmental pathway. Finally, we propose future studies to create innovative computational models integrating the properties of the simulation and teleological approaches for an improved adaptive ToM ability in robots with the aim of enhancing human-robot interactions and permitting the application of robots in unexplored environments, such as disasters and construction sites. To achieve this goal, we suggest directing future research towards the modern cross-talk between the fields of robotics and developmental psychology.

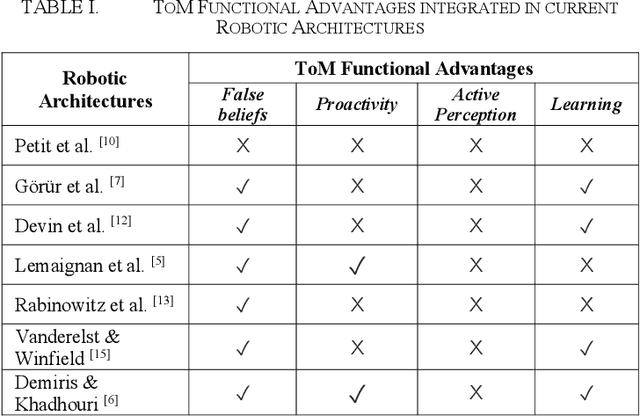

Functional advantages of an adaptive Theory of Mind for robotics: a review of current architectures

Aug 31, 2019

Abstract: Great advancements have been achieved in the field of robotics; however, major challenges remain, including building robots with an adaptive Theory of Mind (ToM). In the present paper, seven current robotic architectures for human-robot interactions were described as well as four main functional advantages of equipping robots with an adaptive ToM. The aim of the present paper was to determine in which way and how often ToM features are integrated in the architectures analyzed, and if they provide robots with the associated functional advantages. Our assessment shows that different methods are used to implement ToM features in robotic architectures. Furthermore, while a ToM for false-belief understanding and tracking is often built in social robotic architectures, a ToM for proactivity, active perception and learning is less common. Nonetheless, progress towards better adaptive ToM features in robots is warranted to provide them with full access to the advantages of having a ToM resembling that of humans.
