Huidong Liang

On the Importance of Task Complexity in Evaluating LLM-Based Multi-Agent Systems

Oct 05, 2025Abstract:Large language model multi-agent systems (LLM-MAS) offer a promising paradigm for harnessing collective intelligence to achieve more advanced forms of AI behaviour. While recent studies suggest that LLM-MAS can outperform LLM single-agent systems (LLM-SAS) on certain tasks, the lack of systematic experimental designs limits the strength and generality of these conclusions. We argue that a principled understanding of task complexity, such as the degree of sequential reasoning required and the breadth of capabilities involved, is essential for assessing the effectiveness of LLM-MAS in task solving. To this end, we propose a theoretical framework characterising tasks along two dimensions: depth, representing reasoning length, and width, representing capability diversity. We theoretically examine a representative class of LLM-MAS, namely the multi-agent debate system, and empirically evaluate its performance in both discriminative and generative tasks with varying depth and width. Theoretical and empirical results show that the benefit of LLM-MAS over LLM-SAS increases with both task depth and width, and the effect is more pronounced with respect to depth. This clarifies when LLM-MAS are beneficial and provides a principled foundation for designing future LLM-MAS methods and benchmarks.

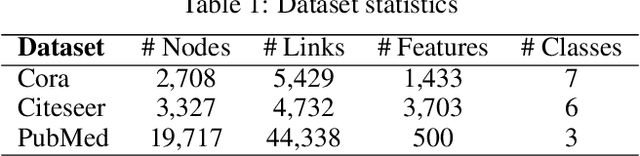

Towards Quantifying Long-Range Interactions in Graph Machine Learning: a Large Graph Dataset and a Measurement

Mar 12, 2025Abstract:Long-range dependencies are critical for effective graph representation learning, yet most existing datasets focus on small graphs tailored to inductive tasks, offering limited insight into long-range interactions. Current evaluations primarily compare models employing global attention (e.g., graph transformers) with those using local neighborhood aggregation (e.g., message-passing neural networks) without a direct measurement of long-range dependency. In this work, we introduce City-Networks, a novel large-scale transductive learning dataset derived from real-world city roads. This dataset features graphs with over $10^5$ nodes and significantly larger diameters than those in existing benchmarks, naturally embodying long-range information. We annotate the graphs using an eccentricity-based approach, ensuring that the classification task inherently requires information from distant nodes. Furthermore, we propose a model-agnostic measurement based on the Jacobians of neighbors from distant hops, offering a principled quantification of long-range dependencies. Finally, we provide theoretical justifications for both our dataset design and the proposed measurement - particularly by focusing on over-smoothing and influence score dilution - which establishes a robust foundation for further exploration of long-range interactions in graph neural networks.

Bayesian Optimization of Functions over Node Subsets in Graphs

May 24, 2024

Abstract:We address the problem of optimizing over functions defined on node subsets in a graph. The optimization of such functions is often a non-trivial task given their combinatorial, black-box and expensive-to-evaluate nature. Although various algorithms have been introduced in the literature, most are either task-specific or computationally inefficient and only utilize information about the graph structure without considering the characteristics of the function. To address these limitations, we utilize Bayesian Optimization (BO), a sample-efficient black-box solver, and propose a novel framework for combinatorial optimization on graphs. More specifically, we map each $k$-node subset in the original graph to a node in a new combinatorial graph and adopt a local modeling approach to efficiently traverse the latter graph by progressively sampling its subgraphs using a recursive algorithm. Extensive experiments under both synthetic and real-world setups demonstrate the effectiveness of the proposed BO framework on various types of graphs and optimization tasks, where its behavior is analyzed in detail with ablation studies.

ByteCover3: Accurate Cover Song Identification on Short Queries

Mar 21, 2023

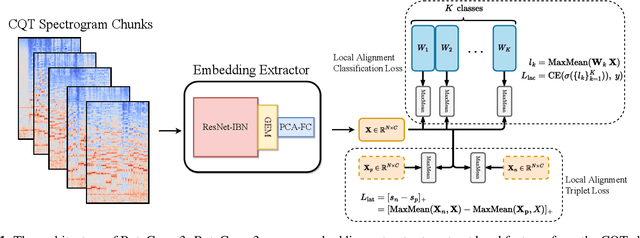

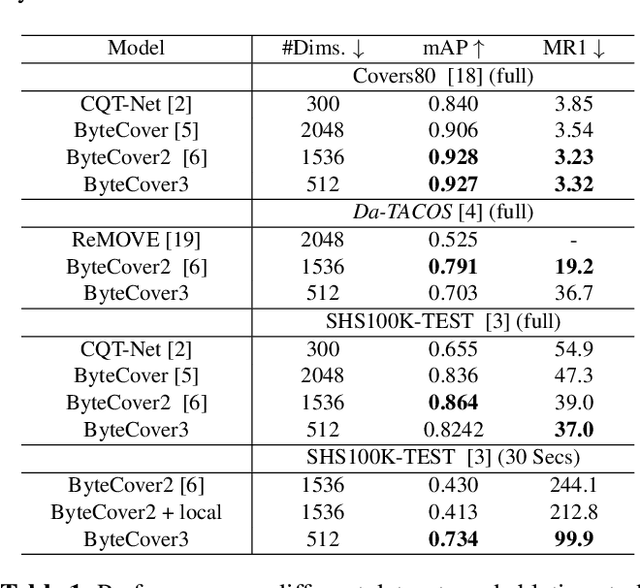

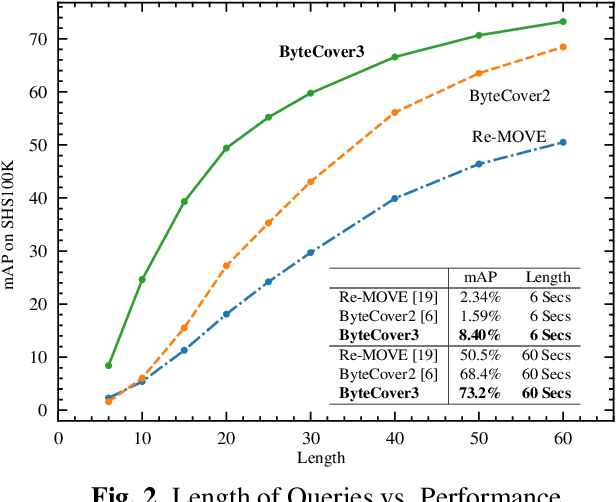

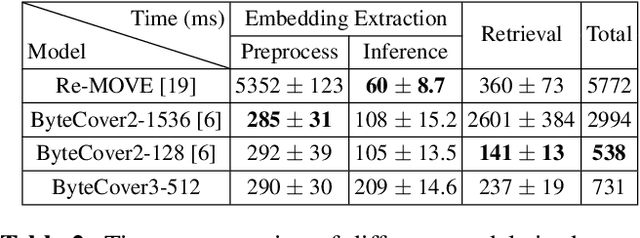

Abstract:Deep learning based methods have become a paradigm for cover song identification (CSI) in recent years, where the ByteCover systems have achieved state-of-the-art results on all the mainstream datasets of CSI. However, with the burgeon of short videos, many real-world applications require matching short music excerpts to full-length music tracks in the database, which is still under-explored and waiting for an industrial-level solution. In this paper, we upgrade the previous ByteCover systems to ByteCover3 that utilizes local features to further improve the identification performance of short music queries. ByteCover3 is designed with a local alignment loss (LAL) module and a two-stage feature retrieval pipeline, allowing the system to perform CSI in a more precise and efficient way. We evaluated ByteCover3 on multiple datasets with different benchmark settings, where ByteCover3 beat all the compared methods including its previous versions.

Graph Contrastive Learning with Implicit Augmentations

Nov 07, 2022

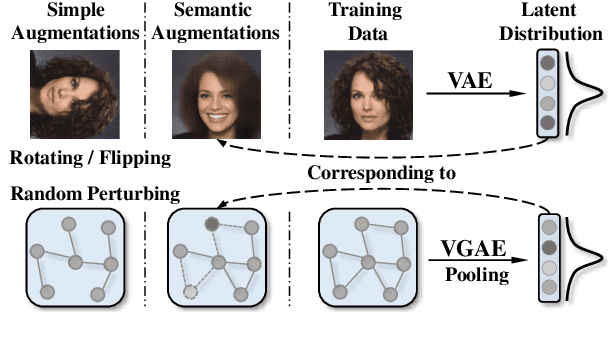

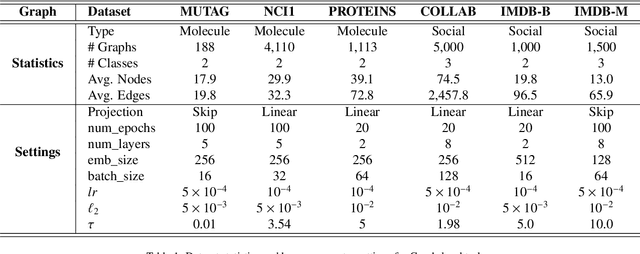

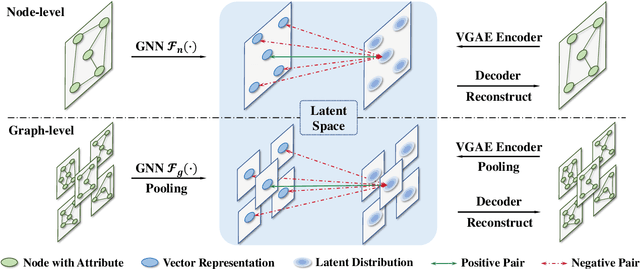

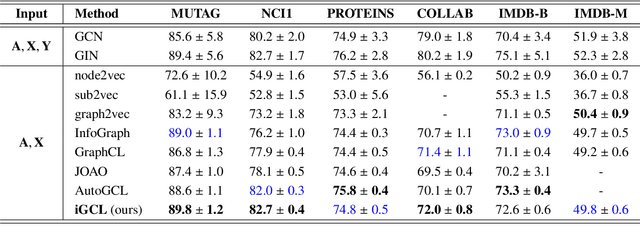

Abstract:Existing graph contrastive learning methods rely on augmentation techniques based on random perturbations (e.g., randomly adding or dropping edges and nodes). Nevertheless, altering certain edges or nodes can unexpectedly change the graph characteristics, and choosing the optimal perturbing ratio for each dataset requires onerous manual tuning. In this paper, we introduce Implicit Graph Contrastive Learning (iGCL), which utilizes augmentations in the latent space learned from a Variational Graph Auto-Encoder by reconstructing graph topological structure. Importantly, instead of explicitly sampling augmentations from latent distributions, we further propose an upper bound for the expected contrastive loss to improve the efficiency of our learning algorithm. Thus, graph semantics can be preserved within the augmentations in an intelligent way without arbitrary manual design or prior human knowledge. Experimental results on both graph-level and node-level tasks show that the proposed method achieves state-of-the-art performance compared to other benchmarks, where ablation studies in the end demonstrate the effectiveness of modules in iGCL.

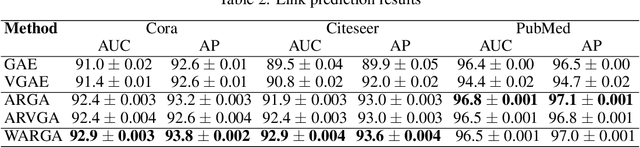

Wasserstein Adversarially Regularized Graph Autoencoder

Nov 09, 2021

Abstract:This paper introduces Wasserstein Adversarially Regularized Graph Autoencoder (WARGA), an implicit generative algorithm that directly regularizes the latent distribution of node embedding to a target distribution via the Wasserstein metric. The proposed method has been validated in tasks of link prediction and node clustering on real-world graphs, in which WARGA generally outperforms state-of-the-art models based on Kullback-Leibler (KL) divergence and typical adversarial framework.

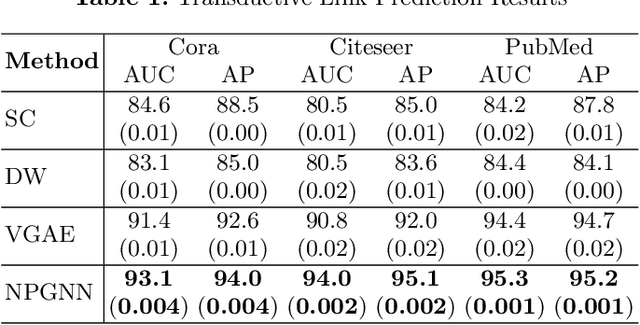

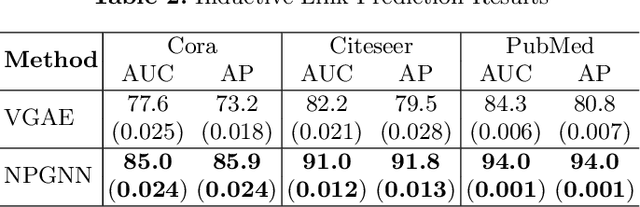

How Neural Processes Improve Graph Link Prediction

Sep 30, 2021

Abstract:Link prediction is a fundamental problem in graph data analysis. While most of the literature focuses on transductive link prediction that requires all the graph nodes and majority of links in training, inductive link prediction, which only uses a proportion of the nodes and their links in training, is a more challenging problem in various real-world applications. In this paper, we propose a meta-learning approach with graph neural networks for link prediction: Neural Processes for Graph Neural Networks (NPGNN), which can perform both transductive and inductive learning tasks and adapt to patterns in a large new graph after training with a small subgraph. Experiments on real-world graphs are conducted to validate our model, where the results suggest that the proposed method achieves stronger performance compared to other state-of-the-art models, and meanwhile generalizes well when training on a small subgraph.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge