Hui-Hai Zhao

Integrating Quantum Processor Device and Control Optimization in a Gradient-based Framework

Dec 23, 2021

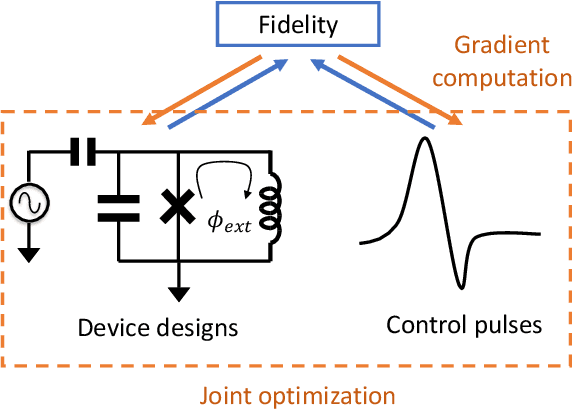

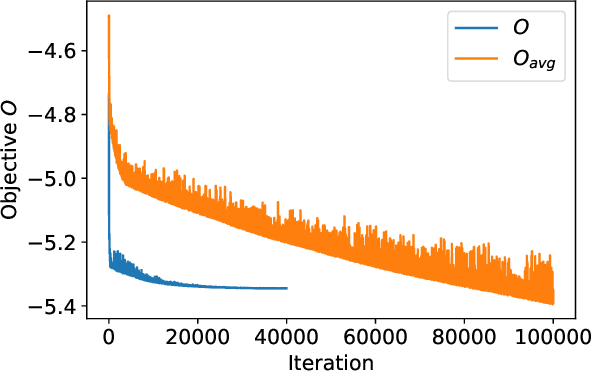

Abstract: In a quantum processor, the device design and external controls together contribute to the quality of the target quantum operations. As we continuously seek better alternative qubit platforms, we explore an increasingly large device and control design space, and optimization becomes correspondingly more challenging. In this work, we demonstrate that the figure of merit reflecting a design goal can be made differentiable with respect to the device and control parameters. In addition, we can compute the gradient of the design objective efficiently, in a manner similar to the back-propagation algorithm, and then use the gradient to optimize the device and control parameters jointly and efficiently. This extends the scope of quantum optimal control to superconducting device design. We also demonstrate the viability of gradient-based joint optimization over the device and control parameters through a few examples.

Compressing deep neural networks by matrix product operators

Apr 11, 2019

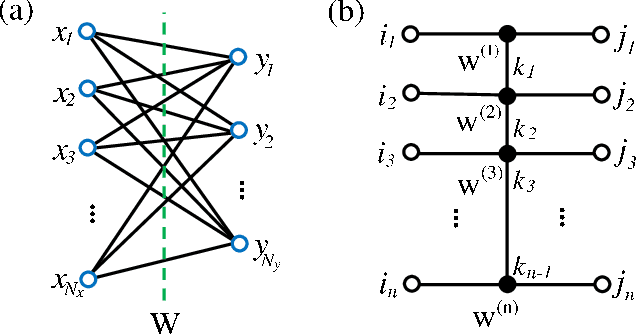

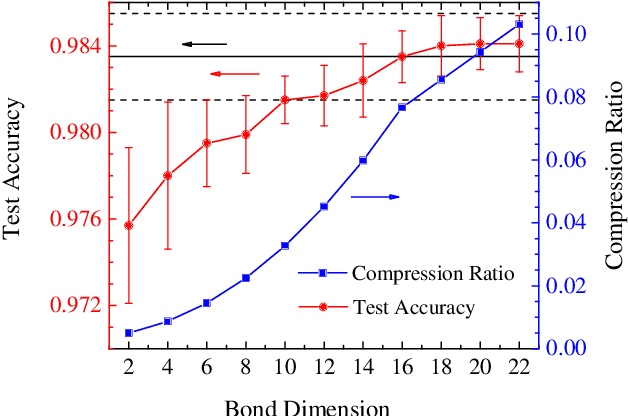

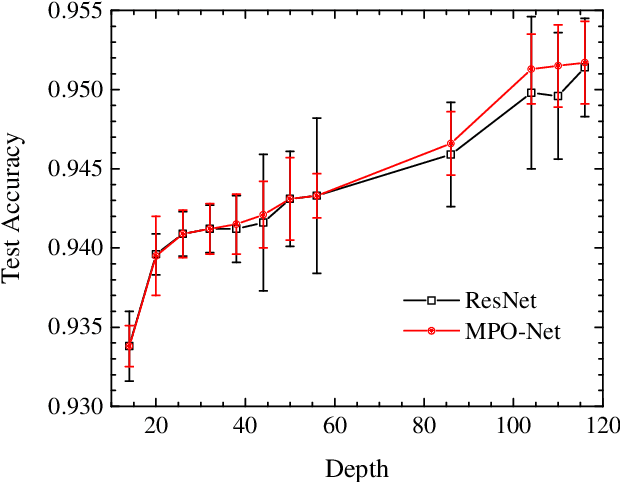

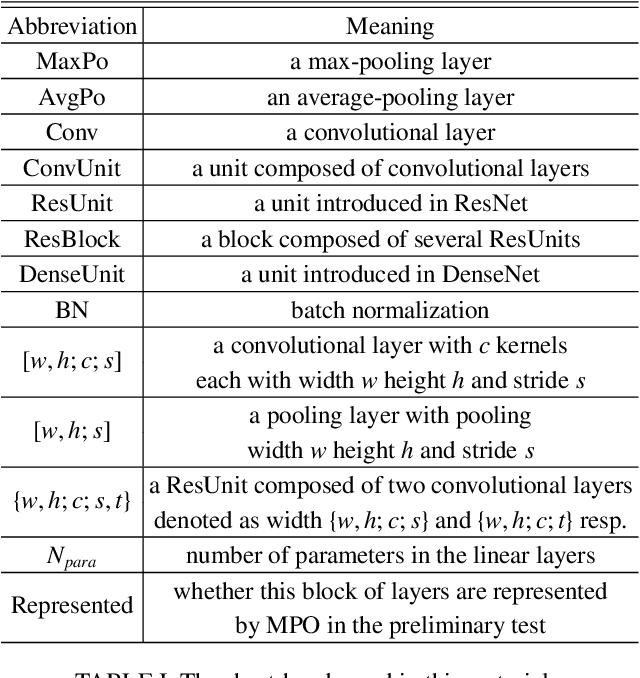

Abstract: A deep neural network is a parameterization of a multi-layer mapping of signals in terms of many alternately arranged linear and nonlinear transformations. The linear transformations, which are generally used in the fully-connected as well as convolutional layers, contain most of the variational parameters that are trained and stored. Compressing a deep neural network to reduce its number of variational parameters but not its prediction power is an important but challenging problem towards the establishment of an optimized scheme for training these parameters efficiently and lowering the risk of overfitting. Here we show that this problem can be effectively solved by representing linear transformations with matrix product operators (MPO). We have tested this approach in five main neural networks, including FC2, LeNet-5, VGG, ResNet, and DenseNet, on two widely used datasets, namely MNIST and CIFAR-10, and found that this MPO representation indeed sets up a faithful and efficient mapping between input and output signals, which can keep or even improve the prediction accuracy with a dramatically reduced number of parameters.
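
To give a feel for where the parameter reduction comes from, the sketch below counts parameters for a dense weight matrix and for an MPO factorization of the same matrix. It assumes the standard MPO form, in which the k-th local tensor has shape (D_{k-1}, I_k, J_k, D_k) with bond dimension 1 at both ends. The function names, the 784 x 256 layer size, the index factorizations, and the bond dimension are hypothetical choices for illustration, not values taken from the paper.

```python
from math import prod


def dense_param_count(in_dims, out_dims):
    """Parameters in a dense matrix of shape prod(in_dims) x prod(out_dims)."""
    return prod(in_dims) * prod(out_dims)


def mpo_param_count(in_dims, out_dims, bond_dim):
    """Parameters in an MPO whose k-th local tensor has shape
    (D_{k-1}, I_k, J_k, D_k), with D = 1 at the two open ends and
    a uniform bond dimension `bond_dim` on every internal bond."""
    if len(in_dims) != len(out_dims):
        raise ValueError("in_dims and out_dims must have the same length")
    n = len(in_dims)
    bonds = [1] + [bond_dim] * (n - 1) + [1]
    return sum(
        bonds[k] * in_dims[k] * out_dims[k] * bonds[k + 1] for k in range(n)
    )


# Hypothetical example: a 784 x 256 fully-connected layer,
# with 784 = 4*7*7*4 and 256 = 4*4*4*4, and bond dimension 4.
in_dims, out_dims = (4, 7, 7, 4), (4, 4, 4, 4)
dense = dense_param_count(in_dims, out_dims)
mpo = mpo_param_count(in_dims, out_dims, bond_dim=4)
print(dense, mpo, dense / mpo)
```

The count only shows the storage saving; whether accuracy is preserved at a given bond dimension is an empirical question that the abstract answers for the listed networks and datasets.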
