Huangxing Lin

Self-Verification in Image Denoising

Nov 01, 2021

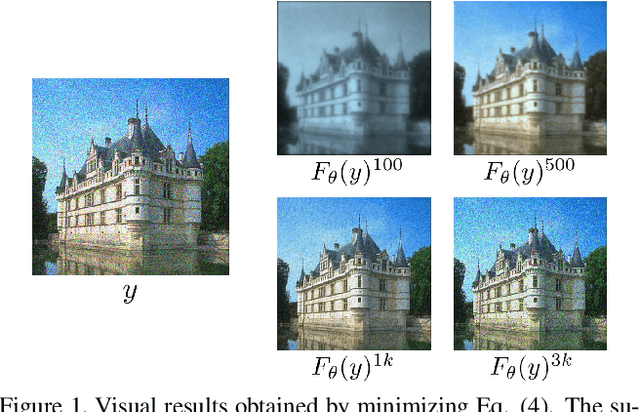

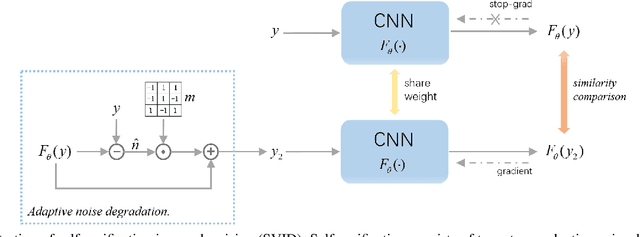

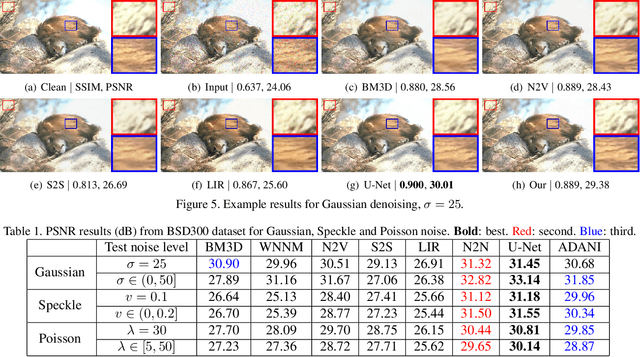

Abstract: We devise a new regularization, called self-verification, for image denoising. This regularization is formulated using a deep image prior learned by the network, rather than a traditional predefined prior. Specifically, we treat the output of the network as a "prior" that we denoise again after "re-noising". The comparison between the re-denoised image and its prior can be interpreted as a self-verification of the network's denoising ability. We demonstrate that self-verification encourages the network to capture the low-level image statistics needed to restore the image. Based on this self-verification regularization, we further show that the network can learn to denoise even if it has not seen any clean images. This learning strategy is self-supervised, and we refer to it as Self-Verification Image Denoising (SVID). SVID can be seen as a mixture of learning-based methods and traditional model-based denoising methods, in which the regularization is adaptively formulated using the output of the network. We show the application of SVID to various denoising tasks using only observed corrupted data. It achieves denoising performance close to that of supervised CNNs.
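The self-verification idea described above (denoise, re-noise the output, denoise again, compare) can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the additive Gaussian re-noising, the mean-squared comparison, the 1-D signal, and the names `self_verification_loss` and `box_denoise` are all hypothetical choices, since the abstract does not specify them.

```python
import random


def box_denoise(signal):
    """Toy stand-in for a denoising network: a 3-tap moving average."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - 1): min(n, i + 2)]
        out.append(sum(window) / len(window))
    return out


def self_verification_loss(denoise, noisy, sigma=0.1, rng=None):
    """Mean squared gap between the first and the second denoising pass.

    Assumption: re-noising is additive Gaussian noise with std `sigma`.
    """
    rng = rng or random.Random(0)
    prior = denoise(noisy)                                   # first pass, treated as the "prior"
    renoised = [v + rng.gauss(0.0, sigma) for v in prior]    # "re-noising"
    again = denoise(renoised)                                # second pass
    return sum((a - p) ** 2 for a, p in zip(again, prior)) / len(prior)


if __name__ == "__main__":
    observed = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 1.05, 0.95]
    print(self_verification_loss(box_denoise, observed, sigma=0.1))
```

In a training setting this quantity would be added as a regularization term to whatever data-fidelity loss is used; here it is only evaluated on a fixed toy denoiser.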

Adaptive noise imitation for image denoising

Nov 30, 2020

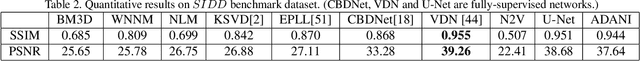

Abstract: The effectiveness of existing denoising algorithms typically relies on accurate pre-defined noise statistics or plenty of paired data, which limits their practicality. In this work, we focus on denoising in the more common case where noise statistics and paired data are unavailable. Considering that denoising CNNs require supervision, we develop a new adaptive noise imitation (ADANI) algorithm that can synthesize noisy data from naturally noisy images. To produce realistic noise, a noise generator takes unpaired noisy/clean images as input, where the noisy image is a guide for noise generation. By imposing explicit constraints on the type, level and gradient of noise, the output noise of ADANI will be similar to the guided noise, while keeping the original clean background of the image. Coupling the noisy data output from ADANI with the corresponding ground truth, a denoising CNN is then trained in a fully supervised manner. Experiments show that the noisy data produced by ADANI are visually and statistically similar to real ones, so that the denoising CNN in our method is competitive with other networks trained with external paired data.

Learning Rate Dropout

Dec 05, 2019

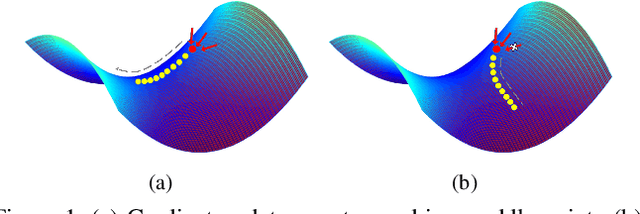

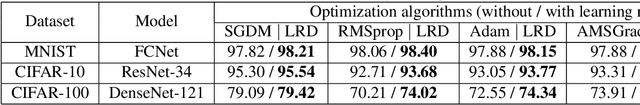

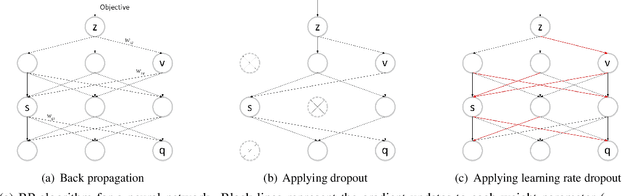

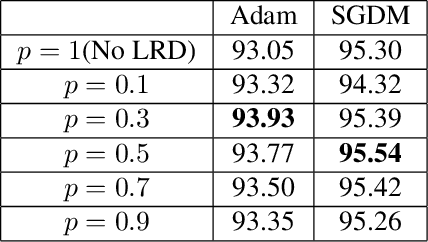

Abstract: The performance of a deep neural network is highly dependent on its training, and finding better local optimal solutions is the goal of many optimization algorithms. However, existing optimization algorithms show a preference for descent paths that converge slowly and do not seek to avoid bad local optima. In this work, we propose Learning Rate Dropout (LRD), a simple gradient descent technique for training related to coordinate descent. LRD empirically helps the optimizer actively explore the parameter space by randomly setting some learning rates to zero; at each iteration, only parameters whose learning rate is not 0 are updated. As the learning rate of different parameters is dropped, the optimizer will sample a new loss descent path for the current update. The uncertainty of the descent path helps the model avoid saddle points and bad local minima. Experiments show that LRD is surprisingly effective in accelerating training while preventing overfitting.
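The update rule described above (randomly zero some per-parameter learning rates, then update only the remaining parameters) can be sketched on top of plain SGD. This is a hedged illustration: the Bernoulli mask with keep probability `keep_prob`, the function name `lrd_sgd_step`, and the use of vanilla SGD as the base optimizer are assumptions, not details taken from the abstract.

```python
import random


def lrd_sgd_step(params, grads, lr=0.1, keep_prob=0.5, rng=random):
    """One SGD step with a per-parameter learning rate dropout mask.

    Each parameter keeps its learning rate with probability `keep_prob`
    (assumed Bernoulli sampling); otherwise its learning rate is set to 0
    for this step and the parameter is left unchanged.
    """
    updated = []
    for p, g in zip(params, grads):
        mask = 1.0 if rng.random() < keep_prob else 0.0
        updated.append(p - lr * mask * g)
    return updated


if __name__ == "__main__":
    weights = [1.0, 2.0, -0.5]
    gradients = [0.5, -1.0, 0.25]
    print(lrd_sgd_step(weights, gradients, lr=0.1, keep_prob=0.5,
                       rng=random.Random(0)))
```

Setting `keep_prob=1.0` recovers an ordinary SGD step, which makes the mask easy to toggle when comparing runs.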

Noise2Blur: Online Noise Extraction and Denoising

Dec 03, 2019

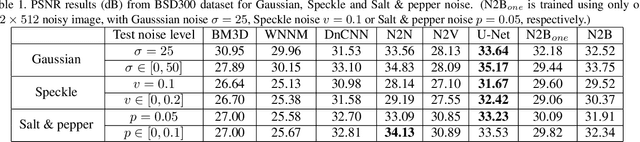

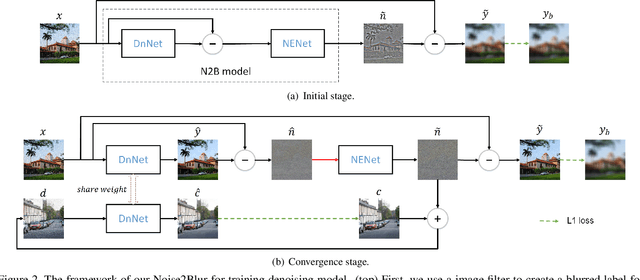

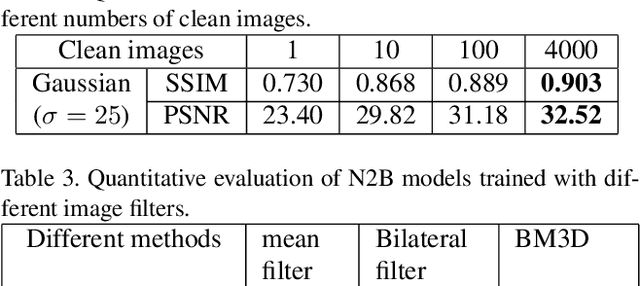

Abstract: We propose a new framework called Noise2Blur (N2B) for training robust image denoising models without pre-collected paired noisy/clean images. The training of the model requires only some (or even one) noisy images, some random unpaired clean images, and noise-free but blurred labels obtained by predefined filtering of the noisy images. The N2B model consists of two parts: a denoising network and a noise extraction network. First, the noise extraction network learns to output a noise map using the noise information from the denoising network under the guidance of the blurred labels. Then, the noise map is added to a clean image to generate a new "noisy/clean" image pair. Using the new image pair, the denoising network learns to generate clean and high-quality images from noisy observations. These two networks are trained simultaneously and mutually aid each other to learn the mappings of noise to clean/blur. Experiments on several denoising tasks show that the denoising performance of N2B is close to that of other denoising CNNs trained with pre-collected paired data.
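The pair-synthesis step described above (add an extracted noise map to an unpaired clean image) can be sketched as follows. Note the substitution: in N2B the noise map comes from a learned noise extraction network, whereas this sketch uses a hand-written residual, `noisy - blur(noisy)`, purely as a hypothetical stand-in. The box filter, the 1-D signals, and all function names are assumptions for illustration.

```python
def box_blur(signal):
    """Predefined filter used to build a blurred label (3-tap box filter)."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - 1): min(n, i + 2)]
        out.append(sum(window) / len(window))
    return out


def residual_noise(noisy):
    """Stand-in for the learned noise extraction network (NOT the paper's model):
    the residual between the noisy signal and its blurred label."""
    blurred = box_blur(noisy)
    return [x - b for x, b in zip(noisy, blurred)]


def synthesize_pair(noisy, clean, extract_noise=residual_noise):
    """Add the extracted noise map to an unpaired clean signal,
    yielding a new (noisy, clean) training pair."""
    noise_map = extract_noise(noisy)
    new_noisy = [c + n for c, n in zip(clean, noise_map)]
    return new_noisy, clean


if __name__ == "__main__":
    observed = [2.0, 2.3, 1.8, 2.1, 1.9]
    clean = [0.0, 1.0, 2.0, 3.0, 4.0]
    print(synthesize_pair(observed, clean))
```

The resulting pair would then serve as supervised training data for the denoising network; the joint training of the two networks is outside the scope of this sketch.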

Rain O'er Me: Synthesizing real rain to derain with data distillation

Apr 10, 2019

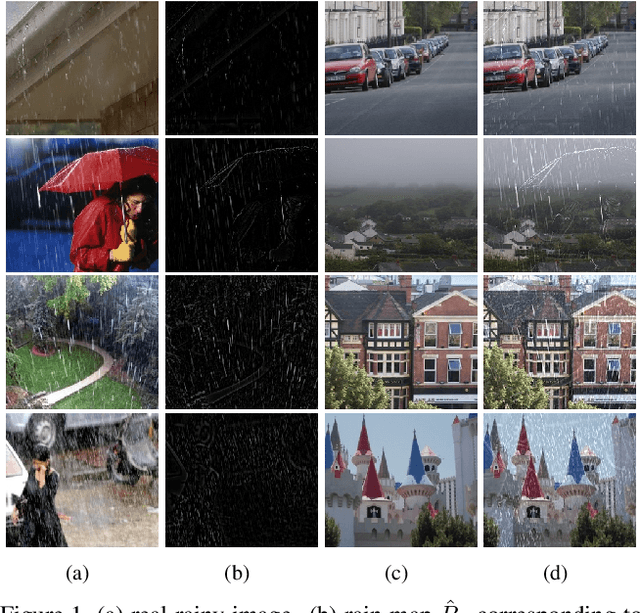

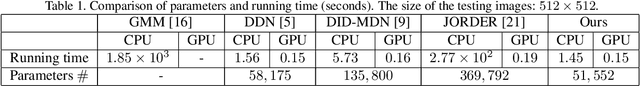

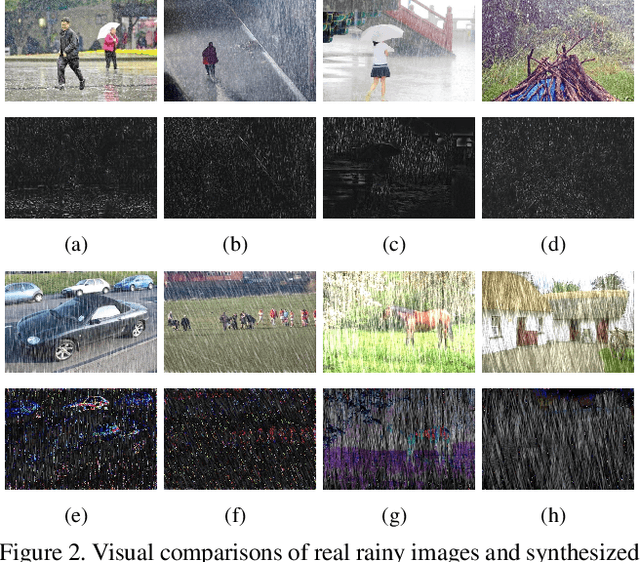

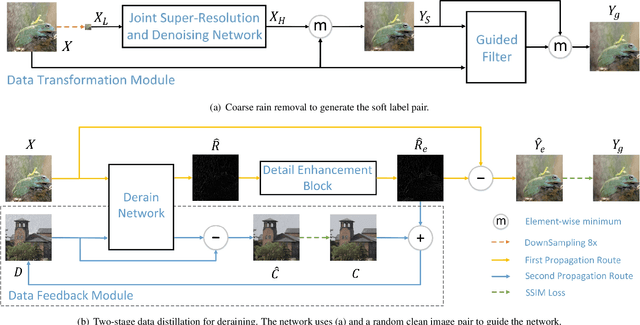

Abstract: We present a supervised technique for learning to remove rain from images without using synthetic rain software. The method is based on a two-stage data distillation approach: 1) A rainy image is first paired with a coarsely derained version using a simple filtering technique ("rain-to-clean"). 2) Then a clean image is randomly matched with the rainy soft-labeled pair. Through a shared deep neural network, the rain that is removed from the first image is then added to the clean image to generate a second pair ("clean-to-rain"). The neural network simultaneously learns to map both images such that high-resolution structure in the clean images can inform the deraining of the rainy images. Demonstrations show that this approach can address those visual characteristics of rain not easily synthesized by software in the usual way.

A^2Net: Adjacent Aggregation Networks for Image Raindrop Removal

Nov 24, 2018

Abstract: Existing methods for single-image raindrop removal either have poor robustness or suffer from parameter burdens. In this paper, we propose a new Adjacent Aggregation Network (A^2Net) with a lightweight architecture to remove raindrops from single images. Instead of directly cascading convolutional layers, we design an adjacent aggregation architecture to better fuse features and generate rich representations, which can lead to high-quality image reconstruction. To further simplify the learning process, we use problem-specific knowledge to force the network to focus on the luminance channel in the YUV color space instead of all RGB channels. By combining the adjacent aggregating operation with the color space transformation, the proposed A^2Net can achieve state-of-the-art performance on raindrop removal with a significant reduction in parameters.
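The color space transformation mentioned above can be illustrated with a per-pixel RGB-to-YUV conversion, where Y is the luminance channel the network is said to focus on. The abstract does not state which YUV variant is used; the BT.601 coefficients below are an assumption, and `rgb_to_yuv` is a hypothetical helper name.

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel (components in [0, 1]) to YUV.

    Assumes the BT.601 definition: Y is a weighted sum of R, G, B and
    U, V are scaled color differences.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b   # luminance
    u = 0.492 * (b - y)                     # blue-difference chroma
    v = 0.877 * (r - y)                     # red-difference chroma
    return y, u, v


if __name__ == "__main__":
    # A gray pixel carries all of its information in Y; U and V are ~0.
    print(rgb_to_yuv(0.5, 0.5, 0.5))
    print(rgb_to_yuv(1.0, 0.0, 0.0))
```

For a gray pixel the chroma channels vanish, which is one way to see why most structural detail lives in the luminance channel.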
