Huang Xu

SegTalker: Segmentation-based Talking Face Generation with Mask-guided Local Editing

Sep 05, 2024

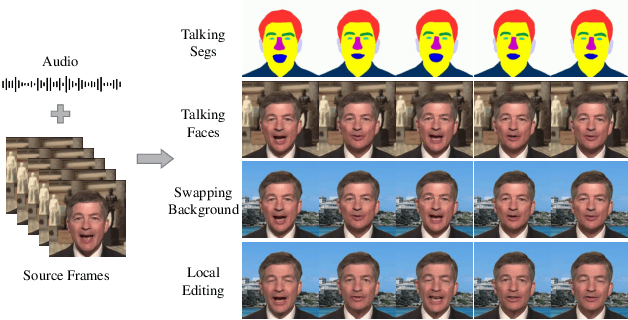

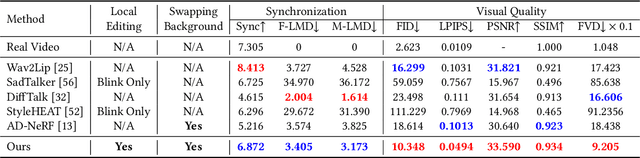

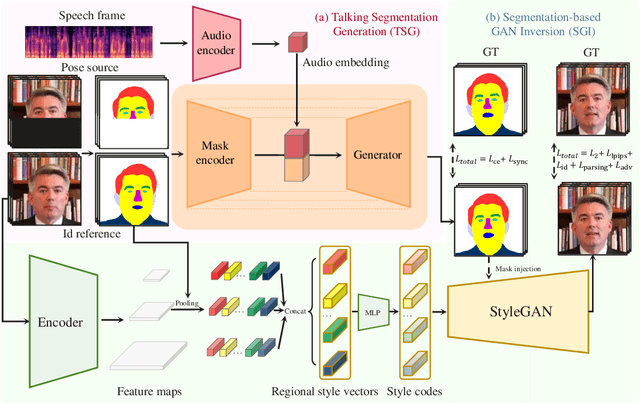

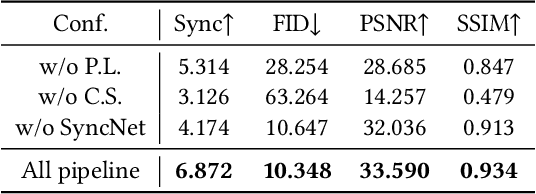

Abstract: Audio-driven talking face generation aims to synthesize video with lip movements synchronized to input audio. However, current generative techniques struggle to preserve intricate regional textures (skin, teeth). To address this challenge, we propose a novel framework called SegTalker that decouples lip movements and image textures by introducing segmentation as an intermediate representation. Specifically, given the mask of an image obtained from a parsing network, we first use the speech to drive the mask and generate a talking segmentation. Then we disentangle the semantic regions of the image into style codes using a mask-guided encoder. Finally, we inject the previously generated talking segmentation and style codes into a mask-guided StyleGAN to synthesize video frames. In this way, most textures are fully preserved. Moreover, our approach inherently achieves background separation and facilitates mask-guided local facial editing. In particular, by editing the mask and swapping region textures (e.g. hair, lip, eyebrows) from a given reference image, our approach enables seamless facial editing while generating talking face video. Experiments demonstrate that our proposed approach effectively preserves texture details and generates temporally consistent video while remaining competitive in lip synchronization. Quantitative and qualitative results on the HDTF and MEAD datasets illustrate the superior performance of our method over existing methods.

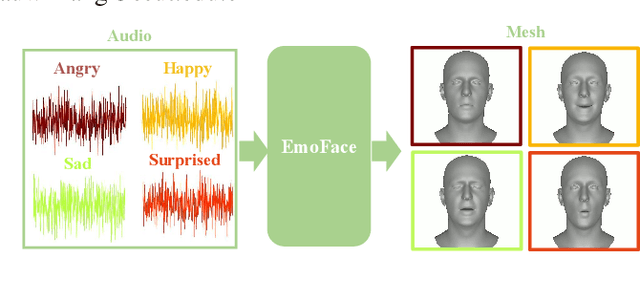

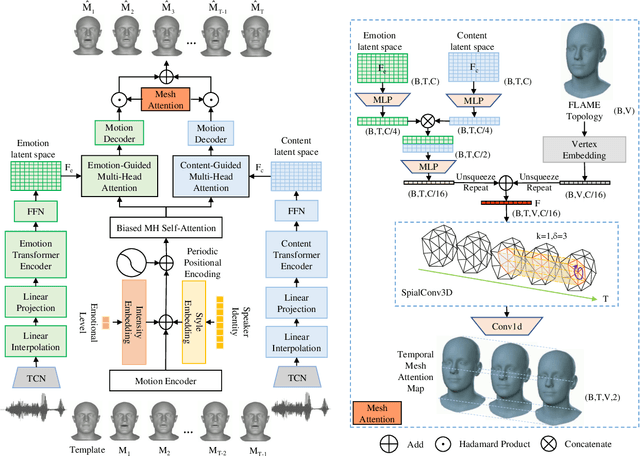

EmoFace: Emotion-Content Disentangled Speech-Driven 3D Talking Face with Mesh Attention

Aug 21, 2024

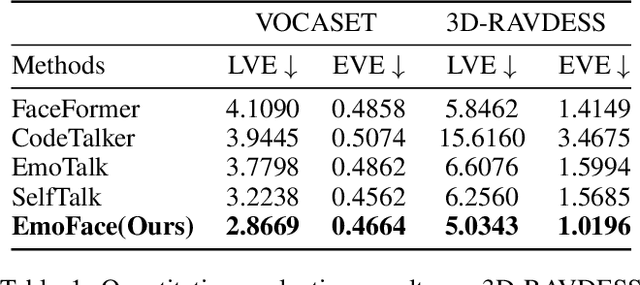

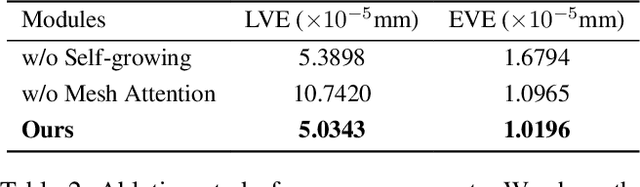

Abstract: The creation of increasingly vivid 3D virtual digital humans has become a hot topic in recent years. Currently, most speech-driven work focuses on training models to learn the relationship between phonemes and visemes to achieve more realistic lips. However, these models fail to capture the correlations between emotions and facial expressions effectively. To solve this problem, we propose a new model, termed EmoFace. EmoFace employs a novel Mesh Attention mechanism, which helps to learn potential feature dependencies between mesh vertices in time and space. We also adopt, for the first time to our knowledge, an effective self-growing training scheme that combines teacher-forcing and scheduled sampling in a 3D face animation task. Additionally, since EmoFace is an autoregressive model, the first frame of the training data need not be a silent frame, which greatly relaxes data constraints and helps address the current shortage of datasets. Comprehensive quantitative and qualitative evaluations on our proposed high-quality reconstructed 3D emotional facial animation dataset, 3D-RAVDESS ($5.0343\times 10^{-5}$ mm for LVE and $1.0196\times 10^{-5}$ mm for EVE), and the publicly available dataset VOCASET ($2.8669\times 10^{-5}$ mm for LVE and $0.4664\times 10^{-5}$ mm for EVE), demonstrate that our algorithm achieves state-of-the-art performance.
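As a rough illustration of how teacher-forcing and scheduled sampling can be mixed in an autoregressive rollout, the sketch below feeds the ground-truth previous frame with some probability and the model's own prediction otherwise. The abstract does not describe the actual self-growing scheme, so the linear decay schedule and the names `teacher_forcing_prob`, `rollout`, and `step_fn` are all hypothetical and chosen only for this example.

```python
import random


def teacher_forcing_prob(epoch, total_epochs):
    """Probability of feeding ground truth at a given epoch.

    Assumption: a linear decay from 1.0 (pure teacher forcing) to 0.0
    (pure free-running). The abstract does not state the real schedule.
    """
    if total_epochs <= 1:
        return 0.0
    return max(0.0, 1.0 - epoch / (total_epochs - 1))


def rollout(step_fn, first_frame, targets, tf_prob, rng):
    """Autoregressive rollout with scheduled sampling.

    step_fn: hypothetical one-step predictor mapping previous frame -> next frame.
    targets: ground-truth frames, one per predicted step.
    At each step the next input is the ground-truth frame with probability
    tf_prob, otherwise the model's own prediction.
    """
    preds = []
    prev = first_frame
    for target in targets:
        pred = step_fn(prev)
        preds.append(pred)
        prev = target if rng.random() < tf_prob else pred
    return preds


if __name__ == "__main__":
    rng = random.Random(0)
    toy_step = lambda x: x + 1  # stand-in for a real motion decoder
    for epoch in range(5):
        p = teacher_forcing_prob(epoch, 5)
        print(epoch, p, rollout(toy_step, 0, [10, 20, 30], p, rng))
```

With `tf_prob` at 1.0 every step is conditioned on ground truth, and at 0.0 the model sees only its own outputs, which is the regime it faces at inference time.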

GLDiTalker: Speech-Driven 3D Facial Animation with Graph Latent Diffusion Transformer

Aug 03, 2024

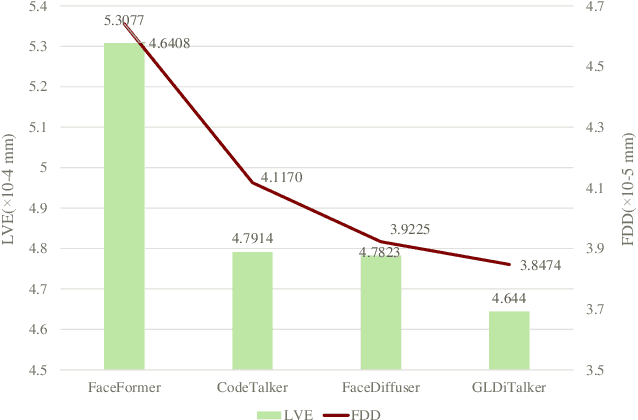

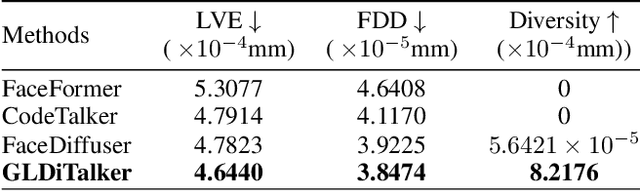

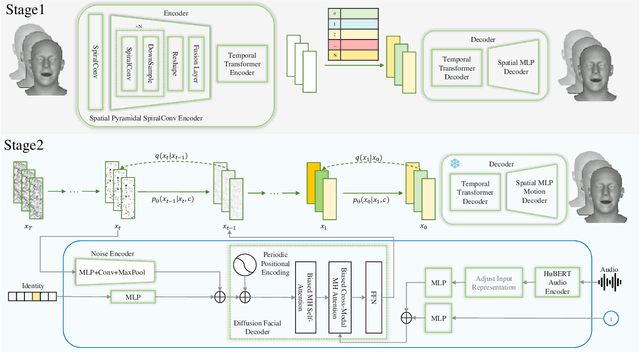

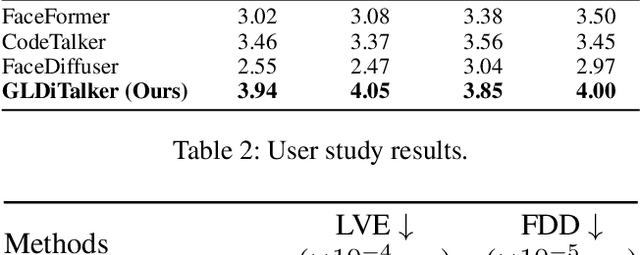

Abstract: 3D speech-driven facial animation generation has received much attention in both industrial applications and academic research. Since the non-verbal facial cues that exist across the face in reality are non-deterministic, the generated results should be diverse. However, most recent methods are deterministic models that cannot learn a many-to-many mapping between audio and facial motion to generate diverse facial animations. To address this problem, we propose GLDiTalker, which introduces a motion prior along with some stochasticity to reduce the uncertainty of the cross-modal mapping while increasing the non-determinacy of the non-verbal facial cues that reside throughout the face. In particular, GLDiTalker uses a VQ-VAE to map facial motion mesh sequences into a latent space in the first stage, and then iteratively adds noise to and removes noise from the latent facial motion features in the second stage. To integrate different levels of spatial information, a Spatial Pyramidal SpiralConv Encoder is also designed to extract multi-scale features. Extensive qualitative and quantitative experiments demonstrate that our method achieves state-of-the-art performance.
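The quantization step at the heart of a VQ-VAE can be sketched as a nearest-neighbor lookup: each continuous latent vector is replaced by the closest entry in a learned codebook. The snippet below is a minimal pure-Python sketch of that generic lookup, not GLDiTalker's implementation; the function names, the toy codebook, and the use of squared Euclidean distance are assumptions made for illustration.

```python
def quantize(latent, codebook):
    """Return (index, code) of the codebook entry nearest to `latent`.

    Assumption: nearness is squared Euclidean distance, the usual choice
    in VQ-VAE; the abstract does not give these details.
    """
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    index = min(range(len(codebook)), key=lambda i: sq_dist(latent, codebook[i]))
    return index, codebook[index]


def quantize_sequence(latents, codebook):
    """Map a sequence of per-frame latents to discrete codebook indices."""
    return [quantize(z, codebook)[0] for z in latents]


if __name__ == "__main__":
    # Toy 2-D codebook with three entries (hypothetical values).
    codebook = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]]
    frames = [[0.1, -0.1], [1.8, 0.2], [0.9, 1.2]]
    print(quantize_sequence(frames, codebook))
```

In a two-stage design like the one described, a second-stage model would then operate on these latent motion features rather than on raw mesh vertices.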
