Hua Cai

Communications to Circulations: 3D Wind Field Retrieval and Real-Time Prediction Using 5G GNSS Signals and Deep Learning

Sep 19, 2025

Abstract: Accurate atmospheric wind field information is crucial for applications including weather forecasting, aviation safety, and disaster risk reduction. However, obtaining wind data at high spatiotemporal resolution remains challenging because of limitations in traditional in-situ observations and remote sensing techniques, as well as the computational expense and biases of numerical weather prediction (NWP) models. This paper introduces G-WindCast, a novel deep learning framework that leverages signal strength variations from 5G Global Navigation Satellite System (GNSS) signals to retrieve and forecast three-dimensional (3D) atmospheric wind fields. The framework uses Forward Neural Networks (FNN) and Transformer networks to capture complex, nonlinear, spatiotemporal relationships between GNSS-derived features and wind dynamics. Our preliminary results demonstrate promising accuracy in both wind retrieval and short-term wind forecasting (up to 30 minutes lead time), with skill scores comparable to high-resolution NWP outputs in certain scenarios. The model is robust across different forecast horizons and pressure levels, and its predictions of wind speed and direction agree better with observations than concurrent ERA5 reanalysis data do. Furthermore, we show that the system can maintain excellent performance for localized forecasting even with a significantly reduced number of GNSS stations (e.g., around 100), highlighting its cost-effectiveness and scalability. This interdisciplinary approach underscores the transformative potential of exploiting non-traditional data sources and deep learning for advanced environmental monitoring and real-time atmospheric applications.
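
The abstract evaluates wind speed and direction but does not say how the wind field is represented. As a minimal sketch, assuming the horizontal wind is available as eastward (`u`) and northward (`v`) components in m/s, speed and meteorological direction can be derived as follows. The function name and the component representation are illustrative assumptions, not details taken from the paper.

```python
import math

def wind_speed_direction(u, v):
    """Convert horizontal wind components to speed and direction.

    u: eastward component (m/s), v: northward component (m/s).
    Direction follows the meteorological convention: the compass
    bearing the wind blows FROM, in degrees clockwise from north.
    This (u, v) representation is an assumption for illustration.
    """
    speed = math.hypot(u, v)
    # atan2(-u, -v) gives the bearing of the vector pointing back
    # toward the wind's origin; the modulo maps it into [0, 360).
    direction = math.degrees(math.atan2(-u, -v)) % 360.0
    return speed, direction

# A wind blowing toward the east (u > 0) comes from the west.
speed, direction = wind_speed_direction(10.0, 0.0)
print(speed, direction)
```

For calm conditions (`u == v == 0`) the direction is undefined; this sketch returns whatever `atan2` yields for zero inputs, so callers may want to special-case that.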

EmoHead: Emotional Talking Head via Manipulating Semantic Expression Parameters

Mar 25, 2025

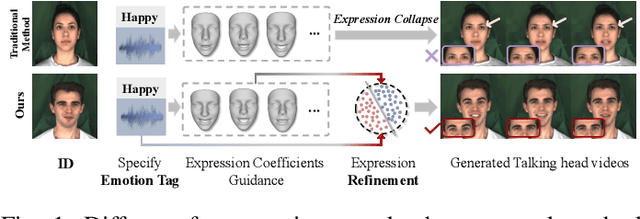

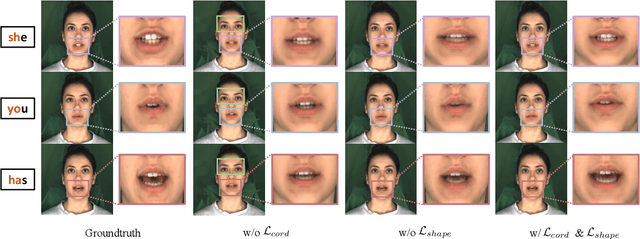

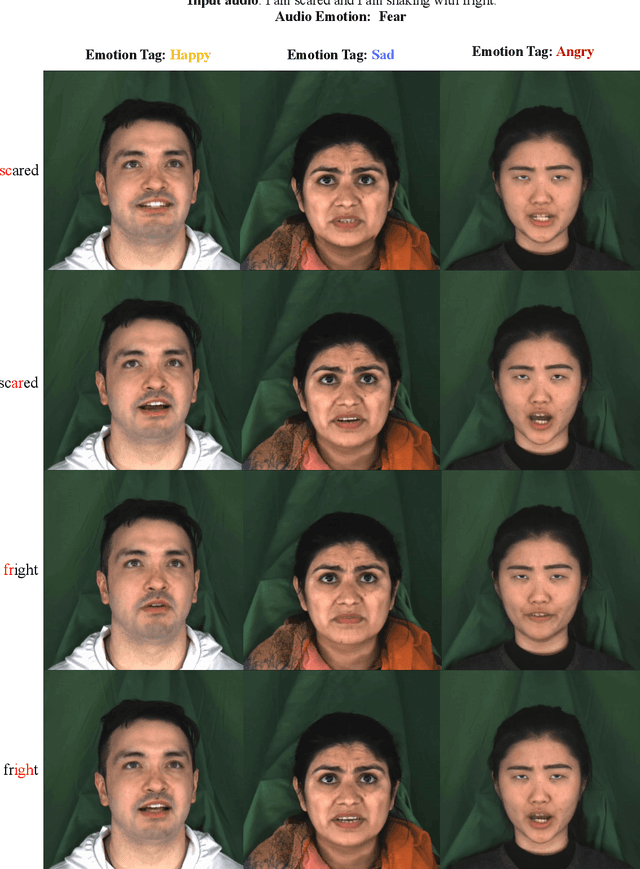

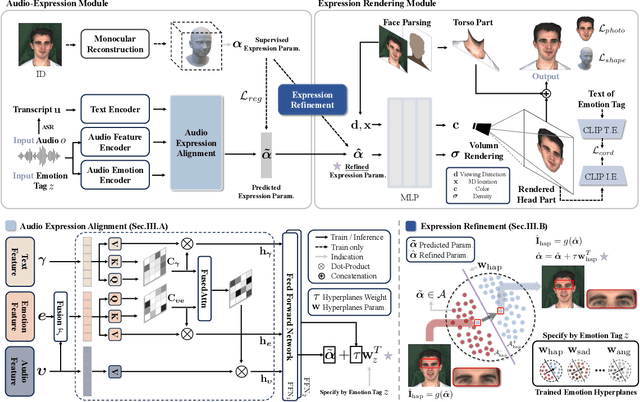

Abstract: Generating emotion-specific talking head videos from audio input is an important and complex challenge for human-machine interaction. However, emotion is a highly abstract concept with ambiguous boundaries, and generating emotionally expressive talking head videos requires disentangled expression parameters. In this work, we present EmoHead, which synthesizes talking head videos via semantic expression parameters. To predict expression parameters for arbitrary audio input, we apply an audio-expression module that can be specified by an emotion tag. This module aims to enhance the correlation between audio input and expression across various emotions. Furthermore, we leverage a pre-trained hyperplane to refine facial movements by probing along the vertical direction. Finally, the refined expression parameters regularize neural radiance fields and facilitate the emotion-consistent generation of talking head videos. Experimental results demonstrate that semantic expression parameters lead to better reconstruction quality and controllability.
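
The hyperplane refinement step can be illustrated with a small sketch. It assumes that "probing along the vertical direction" means shifting an expression parameter vector along the unit normal of a separating hyperplane `w·x + b = 0`. The function names, the step size, and the two-dimensional toy vectors are hypothetical and not taken from the paper.

```python
import math

def _norm(vec):
    """Euclidean length of a vector given as a list of floats."""
    return math.sqrt(sum(x * x for x in vec))

def signed_distance(params, normal, bias):
    """Signed distance from params to the hyperplane normal·x + bias = 0."""
    dot = sum(p * n for p, n in zip(params, normal))
    return (dot + bias) / _norm(normal)

def move_along_normal(params, normal, step):
    """Shift params by `step` units along the hyperplane's unit normal.

    A positive step moves toward the positive side of the hyperplane
    (assumed here to correspond to a stronger target emotion).
    """
    length = _norm(normal)
    if length == 0.0:
        raise ValueError("hyperplane normal must be non-zero")
    return [p + step * n / length for p, n in zip(params, normal)]

# Toy example: start on the hyperplane and probe 2 units along its normal.
normal, bias = [3.0, 4.0], 0.0
refined = move_along_normal([0.0, 0.0], normal, step=2.0)
print(refined, signed_distance(refined, normal, bias))
```

Because the shift is along the unit normal, the signed distance to the hyperplane changes by exactly `step`, which is what makes the edit strength easy to control in this kind of scheme.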

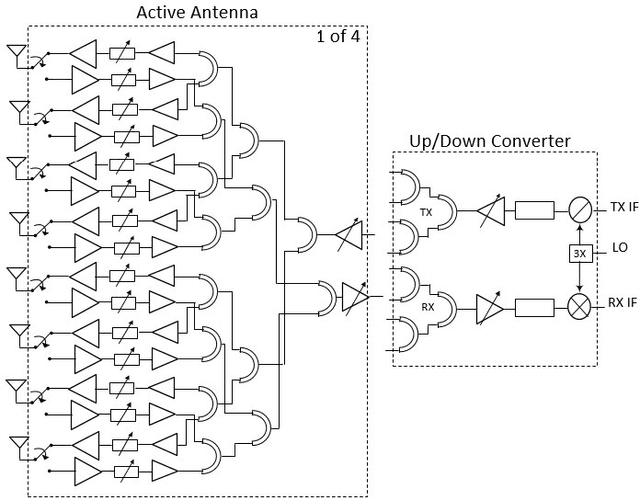

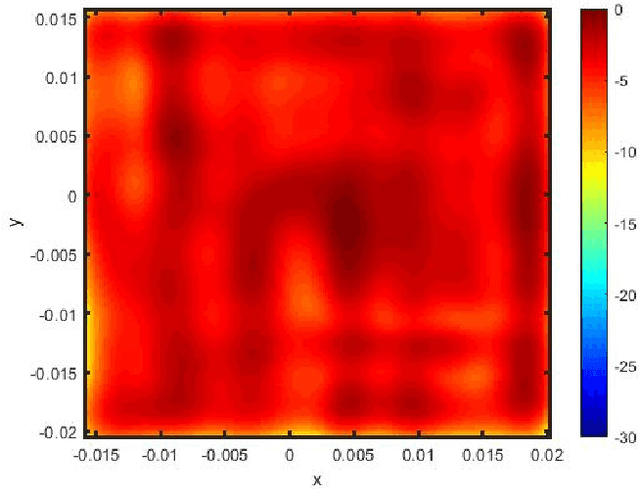

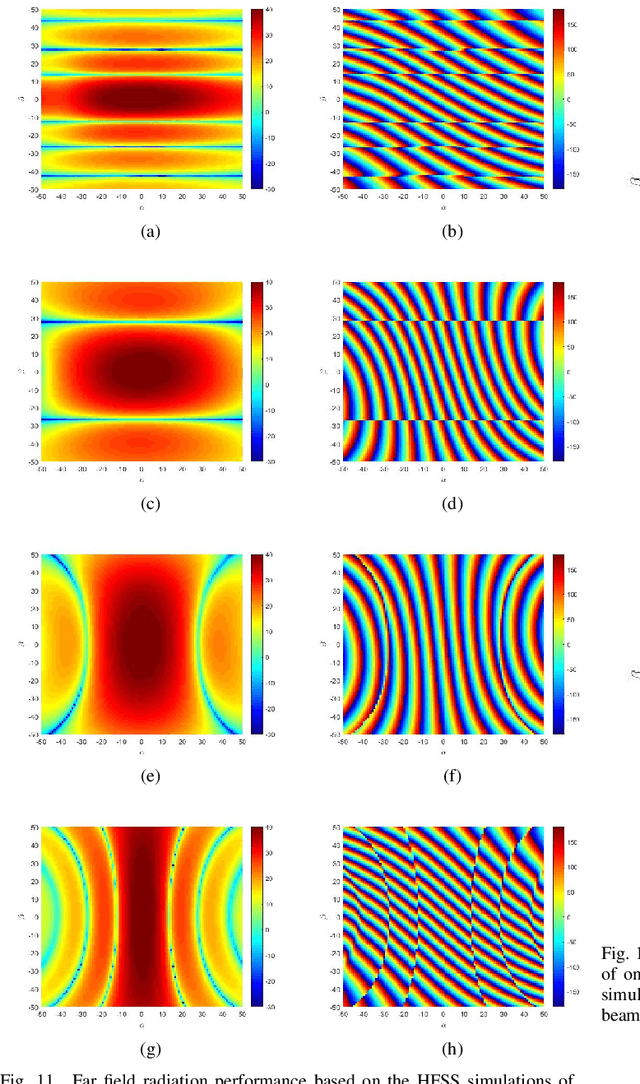

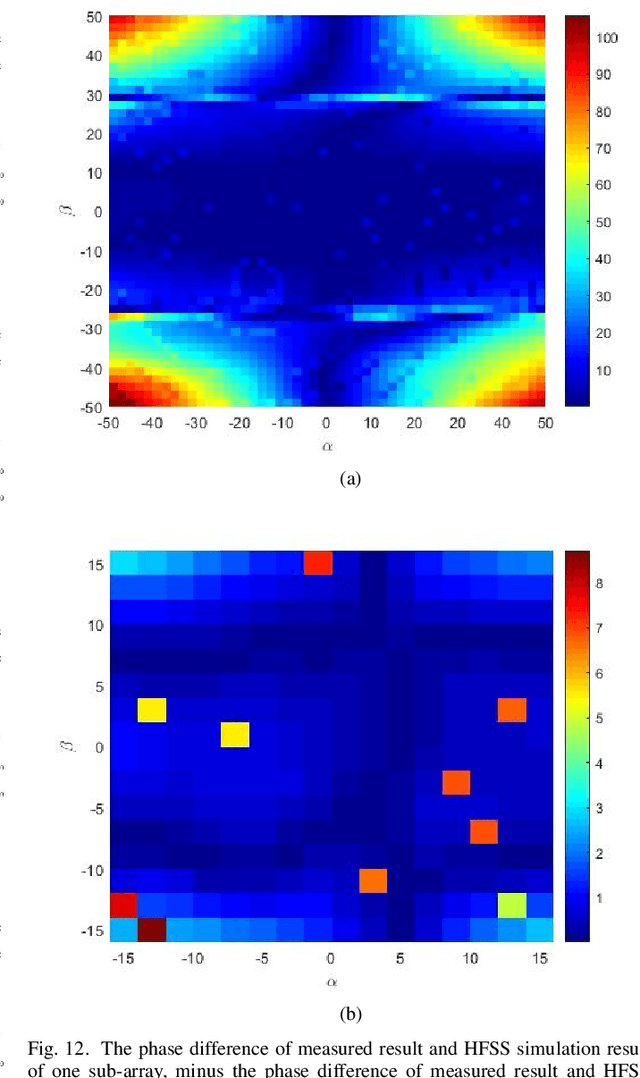

A Scalable 256-Elements E-Band Phased-Array Transceiver for Broadband Communication

Jun 20, 2021

Abstract: For E-band wireless communications, a high-gain steerable antenna built from sub-arrays is desirable because it reduces implementation complexity. This paper presents an E-band communication link with a 256-element antenna based on 8-element sub-arrays and four beam-forming chips in silicon germanium (SiGe) bipolar complementary metal-oxide-semiconductor (BiCMOS), packaged on a 19-layer low temperature co-fired ceramic (LTCC) substrate. After the design and manufacture of the 256-element antenna, a fast near-field calibration method is proposed that requires only a single near-field measurement. Near-field to far-field (NFFF) and far-field to near-field (FFNF) transforms are then used for the bore-sight calibration, and a comparison with High Frequency Structure Simulator (HFSS) results is used for the non-bore-sight calibration. Verified on the 256-element antenna, the beam-forming performance measured in the chamber is in good agreement with the simulations. Communication in an office environment is also demonstrated using a fifth generation (5G) new radio (NR) system with a bandwidth of 400 megahertz (MHz) and an orthogonal frequency division multiplexing (OFDM) waveform with 120 kilohertz (kHz) sub-carrier spacing.
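
To put the quoted waveform figures in context, the following sketch works out two quantities implied by a 400 MHz bandwidth and 120 kHz sub-carrier spacing: the useful OFDM symbol duration (the reciprocal of the spacing) and an upper bound on the number of sub-carriers that fit in the band. The bound ignores guard bands and the cyclic prefix, so the number of sub-carriers actually used by a 5G NR system is smaller. The function name is illustrative.

```python
def ofdm_numerology(bandwidth_hz, scs_hz):
    """Return (useful symbol duration in seconds, max sub-carrier count).

    The symbol duration excludes the cyclic prefix. The sub-carrier
    count is a simple upper bound (bandwidth // spacing) that ignores
    guard bands; it is not the allocated sub-carrier count.
    """
    symbol_duration_s = 1.0 / scs_hz
    max_subcarriers = bandwidth_hz // scs_hz
    return symbol_duration_s, max_subcarriers

# Figures quoted in the abstract: 400 MHz bandwidth, 120 kHz spacing.
duration_s, count = ofdm_numerology(400_000_000, 120_000)
print(f"{duration_s * 1e6:.2f} us useful symbol, at most {count} sub-carriers")
```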

Machine Learning in/for Blockchain: Future and Challenges

Sep 12, 2019

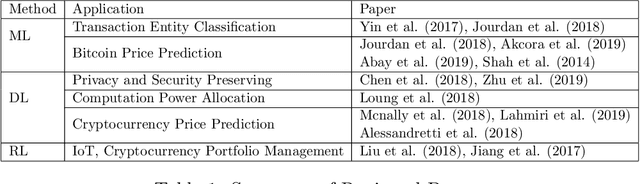

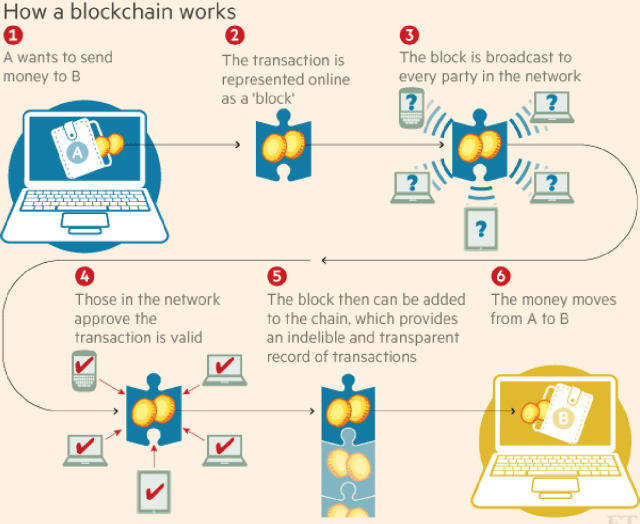

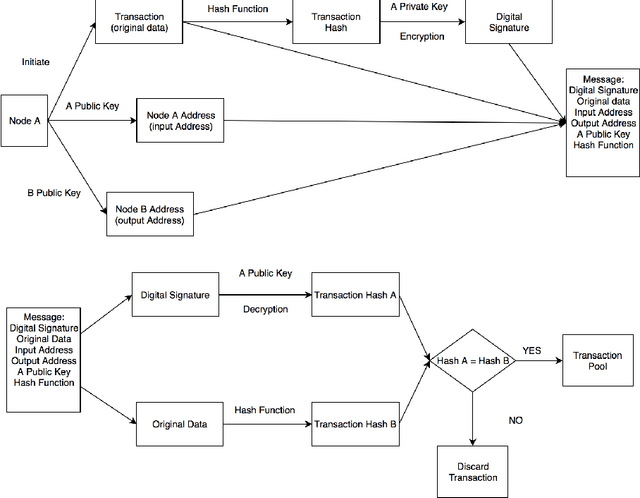

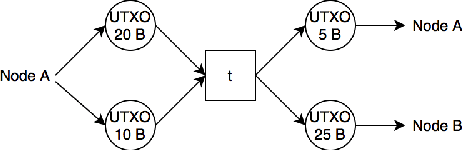

Abstract: Machine learning (including deep and reinforcement learning) and blockchain are two of the most notable technologies of recent years. The first is the foundation of artificial intelligence and big data, and the second has significantly disrupted the financial industry. Both technologies are data-driven, and thus there is rapidly growing interest in integrating them for more secure and efficient data sharing and analysis. In this paper, we review the research on combining blockchain and machine learning technologies and demonstrate that they can collaborate efficiently and effectively. Finally, we point out some future directions and expect more research on deeper integration of the two promising technologies.
