Hsiu-Chin Lin

McGill University

Contractive Diffusion Policies: Robust Action Diffusion via Contractive Score-Based Sampling with Differential Equations

Jan 02, 2026Abstract:Diffusion policies have emerged as powerful generative models for offline policy learning, whose sampling process can be rigorously characterized by a score function guiding a Stochastic Differential Equation (SDE). However, the same score-based SDE modeling that grants diffusion policies the flexibility to learn diverse behavior also incurs solver and score-matching errors, large data requirements, and inconsistencies in action generation. While less critical in image generation, these inaccuracies compound and lead to failure in continuous control settings. We introduce Contractive Diffusion Policies (CDPs) to induce contractive behavior in the diffusion sampling dynamics. Contraction pulls nearby flows closer to enhance robustness against solver and score-matching errors while reducing unwanted action variance. We develop an in-depth theoretical analysis along with a practical implementation recipe to incorporate CDPs into existing diffusion policy architectures with minimal modification and computational cost. We evaluate CDPs for offline learning by conducting extensive experiments in simulation and real-world settings. Across benchmarks, CDPs often outperform baseline policies, with pronounced benefits under data scarcity.

Safe Domain Randomization via Uncertainty-Aware Out-of-Distribution Detection and Policy Adaptation

Jul 08, 2025

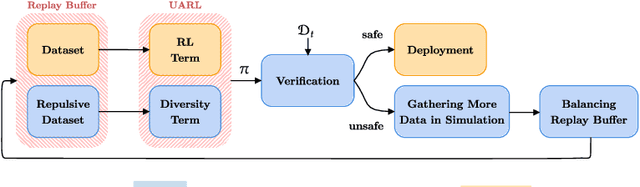

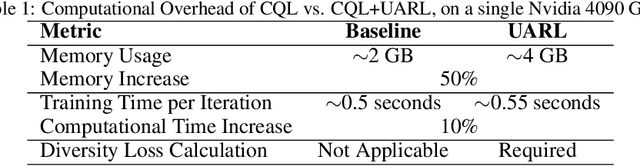

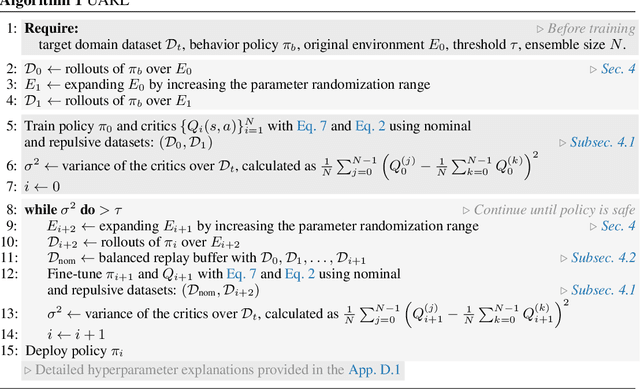

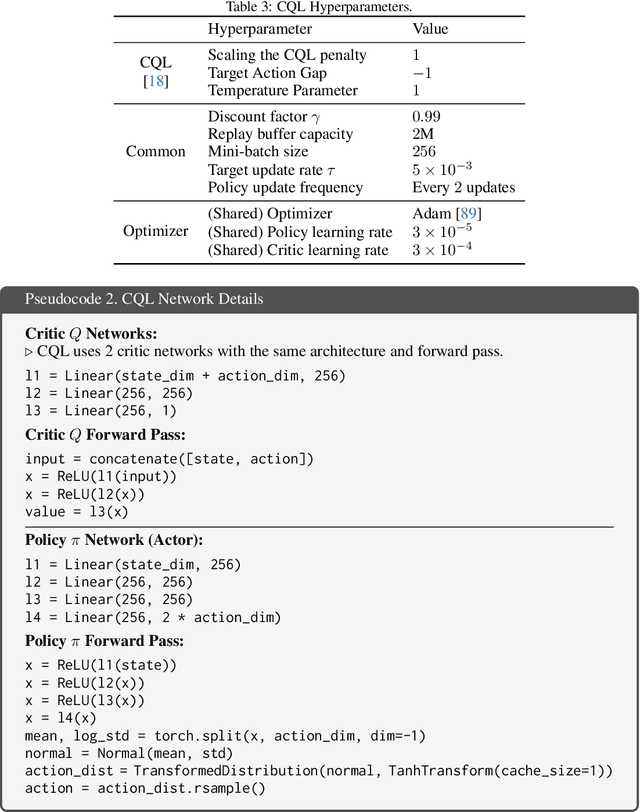

Abstract:Deploying reinforcement learning (RL) policies in real-world involves significant challenges, including distribution shifts, safety concerns, and the impracticality of direct interactions during policy refinement. Existing methods, such as domain randomization (DR) and off-dynamics RL, enhance policy robustness by direct interaction with the target domain, an inherently unsafe practice. We propose Uncertainty-Aware RL (UARL), a novel framework that prioritizes safety during training by addressing Out-Of-Distribution (OOD) detection and policy adaptation without requiring direct interactions in target domain. UARL employs an ensemble of critics to quantify policy uncertainty and incorporates progressive environmental randomization to prepare the policy for diverse real-world conditions. By iteratively refining over high-uncertainty regions of the state space in simulated environments, UARL enhances robust generalization to the target domain without explicitly training on it. We evaluate UARL on MuJoCo benchmarks and a quadrupedal robot, demonstrating its effectiveness in reliable OOD detection, improved performance, and enhanced sample efficiency compared to baselines.

Contractive Dynamical Imitation Policies for Efficient Out-of-Sample Recovery

Dec 10, 2024Abstract:Imitation learning is a data-driven approach to learning policies from expert behavior, but it is prone to unreliable outcomes in out-of-sample (OOS) regions. While previous research relying on stable dynamical systems guarantees convergence to a desired state, it often overlooks transient behavior. We propose a framework for learning policies using modeled by contractive dynamical systems, ensuring that all policy rollouts converge regardless of perturbations, and in turn, enable efficient OOS recovery. By leveraging recurrent equilibrium networks and coupling layers, the policy structure guarantees contractivity for any parameter choice, which facilitates unconstrained optimization. Furthermore, we provide theoretical upper bounds for worst-case and expected loss terms, rigorously establishing the reliability of our method in deployment. Empirically, we demonstrate substantial OOS performance improvements in robotics manipulation and navigation tasks in simulation.

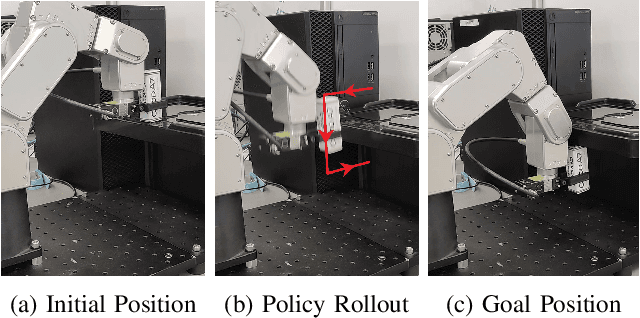

Single-Shot Learning of Stable Dynamical Systems for Long-Horizon Manipulation Tasks

Oct 01, 2024Abstract:Mastering complex sequential tasks continues to pose a significant challenge in robotics. While there has been progress in learning long-horizon manipulation tasks, most existing approaches lack rigorous mathematical guarantees for ensuring reliable and successful execution. In this paper, we extend previous work on learning long-horizon tasks and stable policies, focusing on improving task success rates while reducing the amount of training data needed. Our approach introduces a novel method that (1) segments long-horizon demonstrations into discrete steps defined by waypoints and subgoals, and (2) learns globally stable dynamical system policies to guide the robot to each subgoal, even in the face of sensory noise and random disturbances. We validate our approach through both simulation and real-world experiments, demonstrating effective transfer from simulation to physical robotic platforms. Code is available at https://github.com/Alestaubin/stable-imitation-policy-with-waypoints

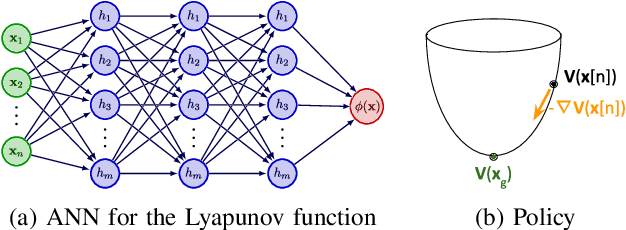

Globally Stable Neural Imitation Policies

Mar 07, 2024Abstract:Imitation learning presents an effective approach to alleviate the resource-intensive and time-consuming nature of policy learning from scratch in the solution space. Even though the resulting policy can mimic expert demonstrations reliably, it often lacks predictability in unexplored regions of the state-space, giving rise to significant safety concerns in the face of perturbations. To address these challenges, we introduce the Stable Neural Dynamical System (SNDS), an imitation learning regime which produces a policy with formal stability guarantees. We deploy a neural policy architecture that facilitates the representation of stability based on Lyapunov theorem, and jointly train the policy and its corresponding Lyapunov candidate to ensure global stability. We validate our approach by conducting extensive experiments in simulation and successfully deploying the trained policies on a real-world manipulator arm. The experimental results demonstrate that our method overcomes the instability, accuracy, and computational intensity problems associated with previous imitation learning methods, making our method a promising solution for stable policy learning in complex planning scenarios.

Learning Lyapunov-Stable Polynomial Dynamical Systems Through Imitation

Oct 31, 2023

Abstract:Imitation learning is a paradigm to address complex motion planning problems by learning a policy to imitate an expert's behavior. However, relying solely on the expert's data might lead to unsafe actions when the robot deviates from the demonstrated trajectories. Stability guarantees have previously been provided utilizing nonlinear dynamical systems, acting as high-level motion planners, in conjunction with the Lyapunov stability theorem. Yet, these methods are prone to inaccurate policies, high computational cost, sample inefficiency, or quasi stability when replicating complex and highly nonlinear trajectories. To mitigate this problem, we present an approach for learning a globally stable nonlinear dynamical system as a motion planning policy. We model the nonlinear dynamical system as a parametric polynomial and learn the polynomial's coefficients jointly with a Lyapunov candidate. To showcase its success, we compare our method against the state of the art in simulation and conduct real-world experiments with the Kinova Gen3 Lite manipulator arm. Our experiments demonstrate the sample efficiency and reproduction accuracy of our method for various expert trajectories, while remaining stable in the face of perturbations.

Improving Reinforcement Learning Training Regimes for Social Robot Navigation

Aug 29, 2023

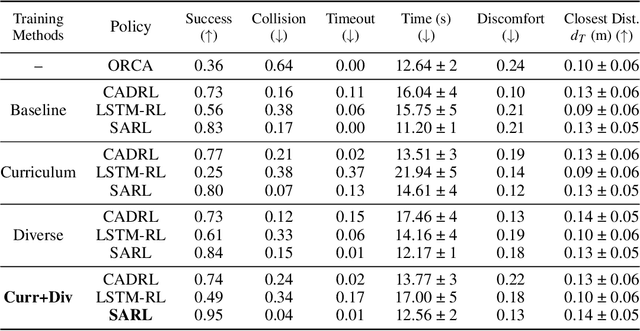

Abstract:In order for autonomous mobile robots to navigate in human spaces, they must abide by our social norms. Reinforcement learning (RL) has emerged as an effective method to train robot navigation policies that are able to respect these norms. However, a large portion of existing work in the field conducts both RL training and testing in simplistic environments. This limits the generalization potential of these models to unseen environments, and the meaningfulness of their reported results. We propose a method to improve the generalization performance of RL social navigation methods using curriculum learning. By employing multiple environment types and by modeling pedestrians using multiple dynamics models, we are able to progressively diversify and escalate difficulty in training. Our results show that the use of curriculum learning in training can be used to achieve better generalization performance than previous training methods. We also show that results presented in many existing state-of-the art RL social navigation works do not evaluate their methods outside of their training environments, and thus do not reflect their policies' failure to adequately generalize to out-of-distribution scenarios. In response, we validate our training approach on larger and more crowded testing environments than those used in training, allowing for more meaningful measurements of model performance.

Learning Agile Paths from Optimal Control

Nov 30, 2022

Abstract:Efficient motion planning algorithms are of central importance for deploying robots in the real world. Unfortunately, these algorithms often drastically reduce the dimensionality of the problem for the sake of feasibility, thereby foregoing optimal solutions. This limitation is most readily observed in agile robots, where the solution space can have multiple additional dimensions. Optimal control approaches partially solve this problem by finding optimal solutions without sacrificing the complexity of the environment, but do not meet the efficiency demands of real-world applications. This work proposes an approach to resolve these issues simultaneously by training a machine learning model on the outputs of an optimal control approach.

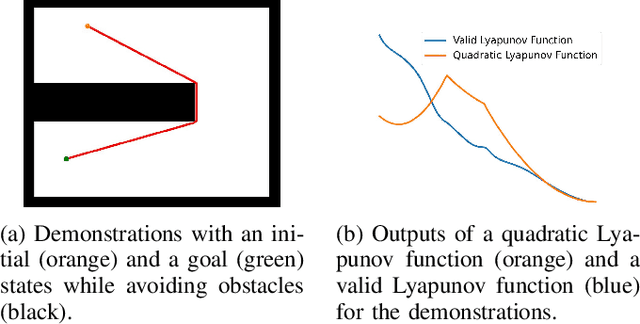

Generating Stable and Collision-Free Policies through Lyapunov Function Learning

Nov 16, 2022

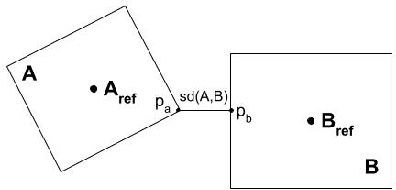

Abstract:The need for rapid and reliable robot deployment is on the rise. Imitation Learning (IL) has become popular for producing motion planning policies from a set of demonstrations. However, many methods in IL are not guaranteed to produce stable policies. The generated policy may not converge to the robot target, reducing reliability, and may collide with its environment, reducing the safety of the system. Stable Estimator of Dynamic Systems (SEDS) produces stable policies by constraining the Lyapunov stability criteria during learning, but the Lyapunov candidate function had to be manually selected. In this work, we propose a novel method for learning a Lyapunov function and a policy using a single neural network model. The method can be equipped with an obstacle avoidance module for convex object pairs to guarantee no collisions. We demonstrated our method is capable of finding policies in several simulation environments and transfer to a real-world scenario.

High Precision Real Time Collision Detection

Jul 23, 2020

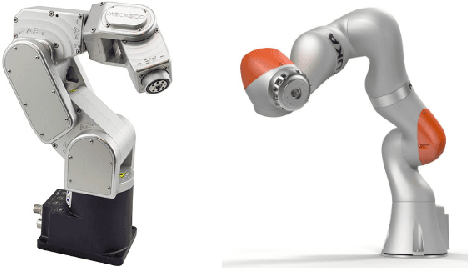

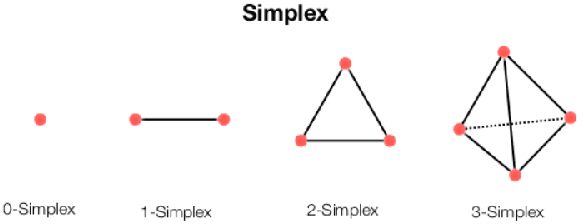

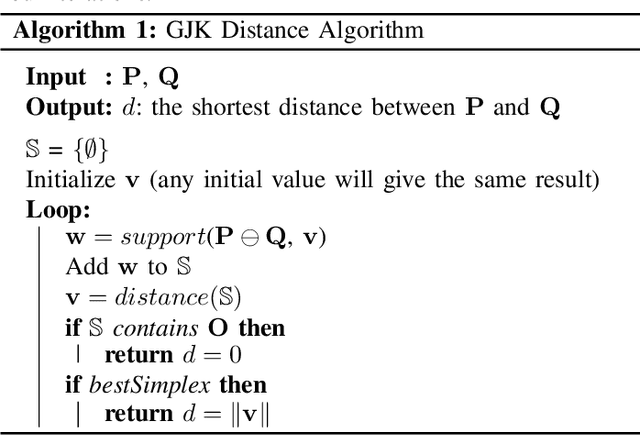

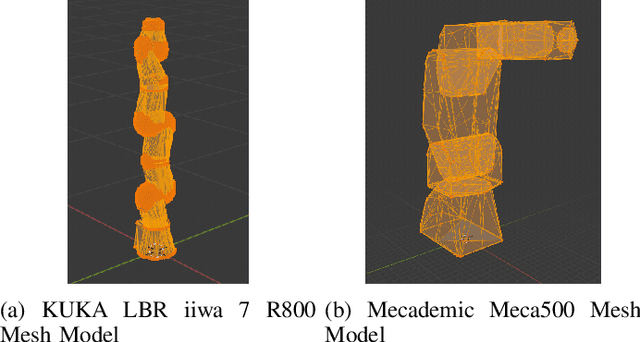

Abstract:Collision detection and collision avoidance are essential components in these systems for safe human-robot interactions. Robotics systems that can work "out-of-the-box" without excessive amount of installation and calibration from the experts is highly ideal. For this, we propose a generic, high precision, collision detect system that only requires the unified robot description format (URDF) and is capable of running in real time. We extended the Gilbert-Johnson-Keerthi (GJK) algorithm by utilizing a geometrical approach to determine the distance between each rigid body in the environment and check for collisions. The proposed system's performance is shown by checking the self-collision of the KUKA LBR iiwa 7 R800 and the Mecademic Meca500. The performance is compared to the Flexible Collision Library (FCL).

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge