Hossein Rabbani

Optical Coherence Tomography Image Enhancement via Block Hankelization and Low Rank Tensor Network Approximation

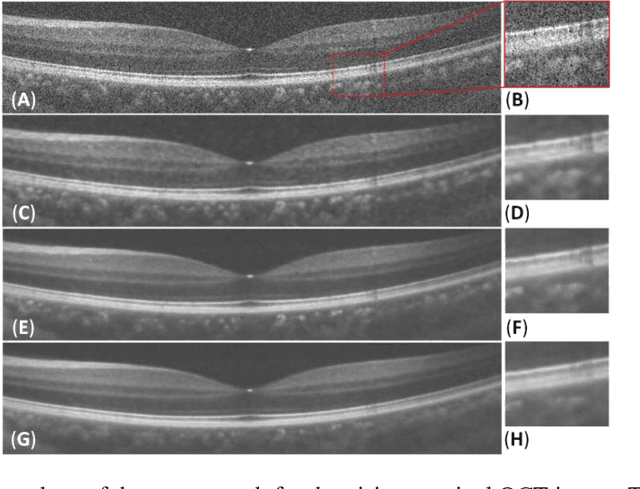

Jun 19, 2023

Abstract:This paper addresses image super-resolution for Optical Coherence Tomography (OCT). Because of motion artifacts, OCT imaging is usually done at a low sampling rate, and the resulting images are often noisy and have low resolution. Reconstructing high-resolution OCT images from their low-resolution versions is therefore an essential step toward better OCT-based diagnosis. We propose a novel OCT super-resolution technique using Tensor Ring decomposition in the embedded space. A new tensorization method based on block Hankelization with overlapped patches, called overlapped patch Hankelization, is proposed, which allows us to employ Tensor Ring decomposition. The Hankelization method enables us to better exploit the interconnection of pixels and consequently achieve better super-resolution of images. The low-resolution image is first patch Hankelized, and its Tensor Ring decomposition is then computed using a rank-incremental scheme. Simulation results confirm that the proposed approach is effective in OCT super-resolution.
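To make the Hankelization step concrete, here is a minimal sketch of plain (scalar) Hankelization and its inverse. It is an illustration only: the abstract does not specify patch size, overlap, or the tensor layout, so the function names `hankelize` and `dehankelize` and the window length are assumptions. In a block variant, the entries of `seq` would be image patches rather than scalars. The Tensor Ring decomposition itself is not shown.

```python
def hankelize(seq, window):
    """Return the Hankel matrix H with H[i][j] = seq[i + j]."""
    if not 1 <= window <= len(seq):
        raise ValueError("window must be between 1 and len(seq)")
    rows = len(seq) - window + 1
    return [[seq[i + j] for j in range(window)] for i in range(rows)]


def dehankelize(matrix):
    """Map a matrix back to a sequence by averaging each anti-diagonal."""
    rows, window = len(matrix), len(matrix[0])
    sums = [0.0] * (rows + window - 1)
    counts = [0] * (rows + window - 1)
    for i in range(rows):
        for j in range(window):
            sums[i + j] += matrix[i][j]   # entries with equal i + j share a source sample
            counts[i + j] += 1
    return [s / c for s, c in zip(sums, counts)]


signal = [1.0, 2.0, 3.0, 4.0, 5.0]
H = hankelize(signal, window=3)
# H == [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [3.0, 4.0, 5.0]]
recovered = dehankelize(H)
# recovered == [1.0, 2.0, 3.0, 4.0, 5.0]
```

Each sample appears in several rows of `H`, which is the redundancy a low-rank model can exploit. After a low-rank approximation the matrix is generally no longer exactly Hankel, and averaging the anti-diagonals is one common way to return to signal space.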

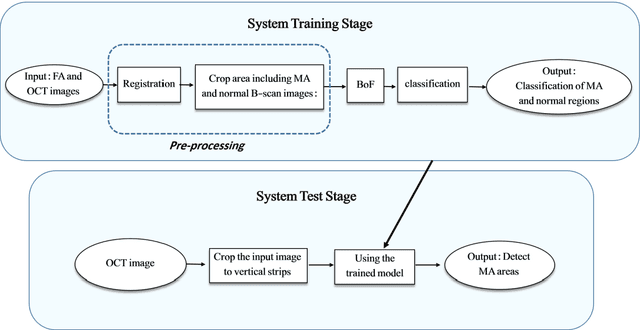

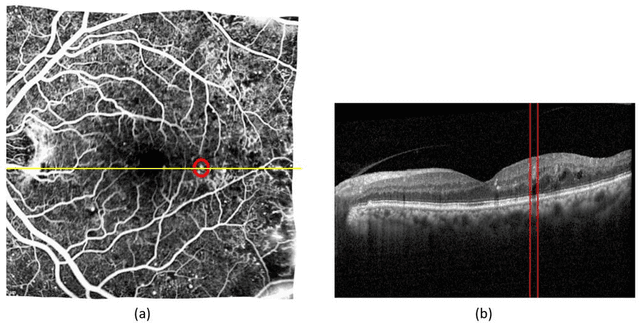

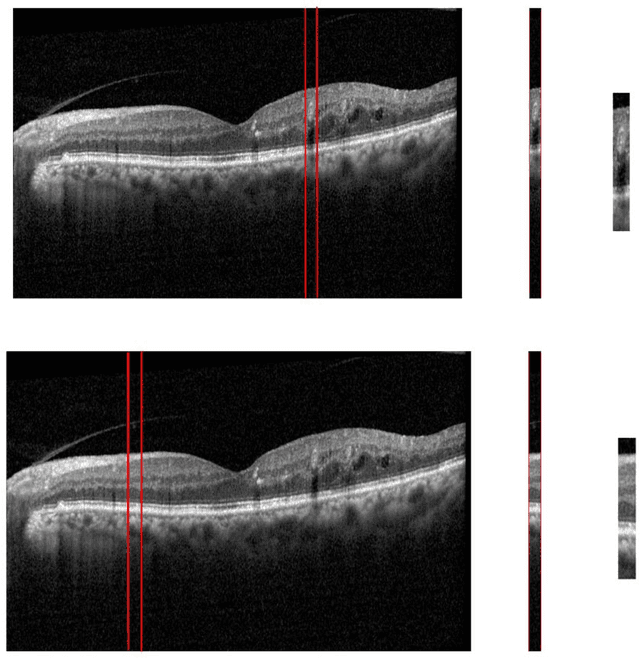

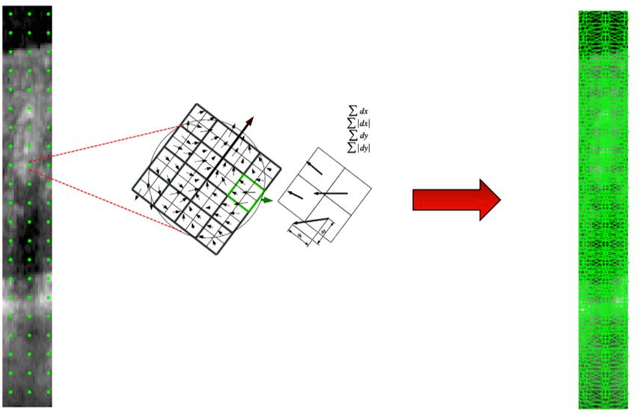

Automatic Detection of Microaneurysms in OCT Images Using Bag of Features

May 10, 2022

Abstract:Diabetic Retinopathy (DR), caused by diabetes, results from changes in the retinal vessels and leads to visual impairment. Microaneurysms (MAs) are the early clinical signs of DR, and their timely diagnosis can help detect DR in the early stages of its development. It has been observed that MAs are more common in the inner retinal layers than in the outer retinal layers in eyes suffering from DR. Optical Coherence Tomography (OCT) is a noninvasive imaging technique that provides a cross-sectional view of the retina, and it has been used in recent years to diagnose many eye diseases. This paper therefore attempts to distinguish areas with MAs from normal areas of the retina using OCT images. The work uses a dataset of Fluorescein Angiography (FA) and OCT images collected from 20 patients with DR. First, the FA and OCT images were registered. Then the MA and normal areas were separated, and the features of each area were extracted using the Bag of Features (BOF) approach with the Speeded-Up Robust Features (SURF) descriptor. Finally, classification was performed using a multilayer perceptron network. The obtained accuracy, sensitivity, specificity, and precision were 96.33%, 97.33%, 95.4%, and 95.28%, respectively. Using OCT images to detect MAs automatically is a new idea, and the results obtained as preliminary research in this field are promising.
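The Bag of Features encoding step can be sketched as follows. This is a simplified illustration, not the authors' pipeline: the two-dimensional descriptors and the three-word `codebook` below are made-up stand-ins (real SURF descriptors are much longer and the codebook would typically be learned by clustering), and the function names are hypothetical. The resulting histogram is the kind of fixed-length vector that could be passed to a classifier such as a multilayer perceptron.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_codeword(descriptor, codebook):
    """Index of the codebook entry closest to the descriptor."""
    return min(range(len(codebook)),
               key=lambda k: squared_distance(descriptor, codebook[k]))


def bof_histogram(descriptors, codebook):
    """Normalized histogram of codeword assignments for one image region."""
    counts = [0] * len(codebook)
    for d in descriptors:
        counts[nearest_codeword(d, codebook)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(codebook)


# Hypothetical toy data: 3 visual words, 4 local descriptors.
codebook = [[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]]
descriptors = [[0.1, -0.1], [0.9, 1.2], [1.1, 0.8], [3.7, 0.2]]
histogram = bof_histogram(descriptors, codebook)
# histogram == [0.25, 0.5, 0.25]
```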

Multiscale Sparsifying Transform Learning for Image Denoising

Mar 25, 2020

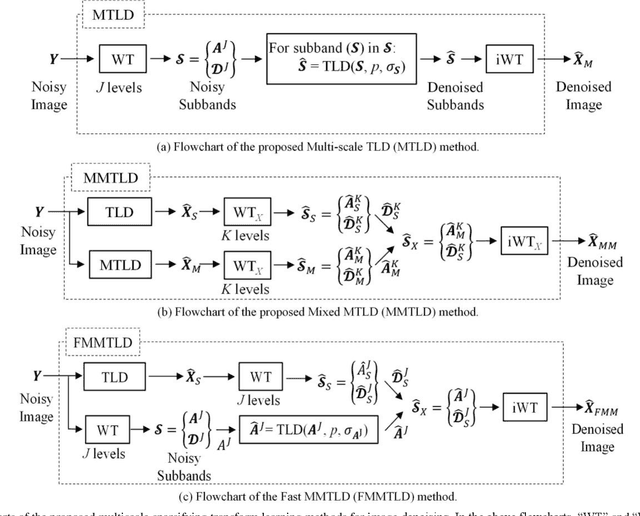

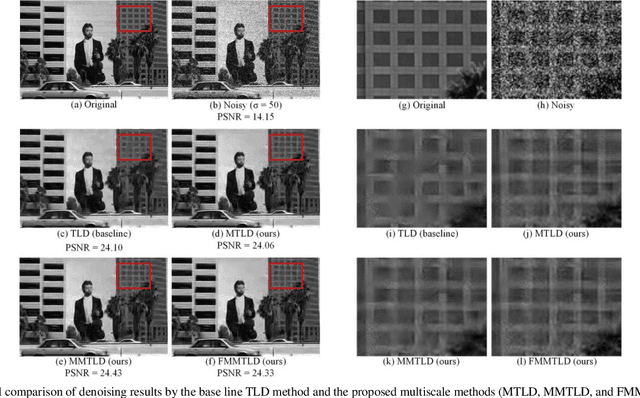

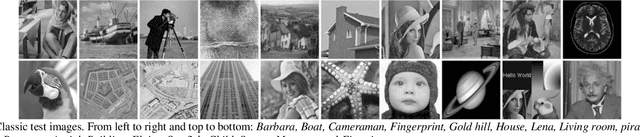

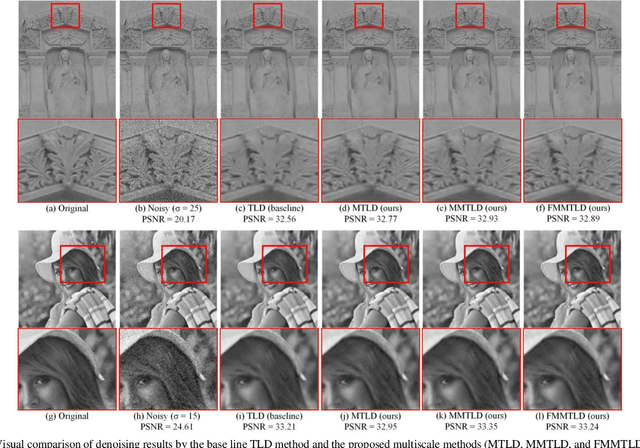

Abstract:Data-driven sparse methods such as synthesis dictionary learning and sparsifying transform learning have proven effective in image denoising. However, these methods are intrinsically single-scale and ignore the multiscale nature of images, which often leads to suboptimal results. In this paper, we propose several strategies to exploit multiscale information in image denoising through the sparsifying transform learning denoising (TLD) method. To this end, we first employ a simple method of denoising each wavelet subband independently via TLD. Then, we show that this method can be greatly enhanced using wavelet subbands mixing, a cheap fusion technique, to combine the results of single-scale and multiscale methods. Finally, we remove the need for denoising detail subbands. This simplification leads to an efficient multiscale denoising method with performance competitive with its baseline. The effectiveness of the proposed methods is shown experimentally on two datasets: 1) classic test images corrupted with Gaussian noise, and 2) fluorescence microscopy images corrupted with real Poisson-Gaussian noise. The proposed multiscale methods improve over the single-scale baseline method by an average of about 0.2 dB (in terms of PSNR) for removing synthetic Gaussian noise from classic test images and real Poisson-Gaussian noise from microscopy images. Interestingly, the proposed multiscale methods keep their superiority over the baseline even when noise is relatively weak. More importantly, we show that the proposed methods lead to visually pleasing results, in which edges and textures are better recovered. Extensive experiments over these two different datasets show that the proposed methods offer a good trade-off between performance and complexity.
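As a rough illustration of subband mixing, the sketch below uses a one-level, one-dimensional orthonormal Haar transform and builds a signal from the approximation subband of one input and the detail subband of another. Which denoised result supplies which subband, the choice of Haar, and the function names are assumptions made here for illustration; the abstract does not state them, and the TLD denoiser itself is not shown.

```python
import math


def haar_forward(signal):
    """One-level orthonormal Haar transform of an even-length signal."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    s = math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) / s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) / s for i in range(0, len(signal), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Invert haar_forward."""
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) / s)
        out.append((a - d) / s)
    return out


def mix_subbands(low_source, high_source):
    """Combine the approximation of one signal with the detail of another."""
    approx, _ = haar_forward(low_source)
    _, detail = haar_forward(high_source)
    return haar_inverse(approx, detail)


# Hypothetical stand-ins for two denoised versions of the same signal.
multiscale_result = [2.0, 2.0, 6.0, 6.0]
single_scale_result = [1.0, 3.0, 3.0, 5.0]
mixed = mix_subbands(multiscale_result, single_scale_result)
# mixed is approximately [1.0, 3.0, 5.0, 7.0]:
# pairwise means come from multiscale_result, pairwise differences from single_scale_result
```

The fusion costs only two forward transforms and one inverse transform, which is consistent with the abstract's description of mixing as a cheap technique.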

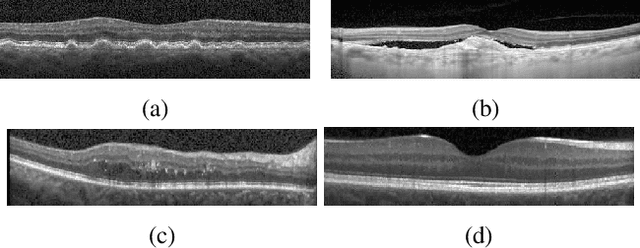

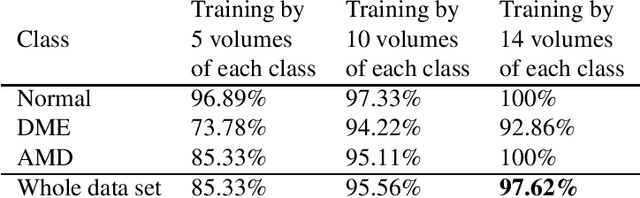

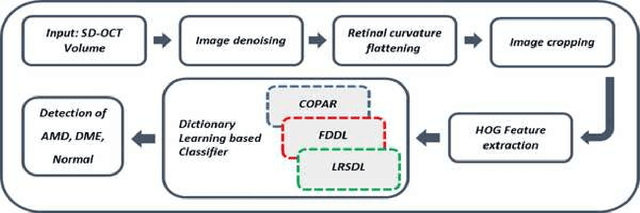

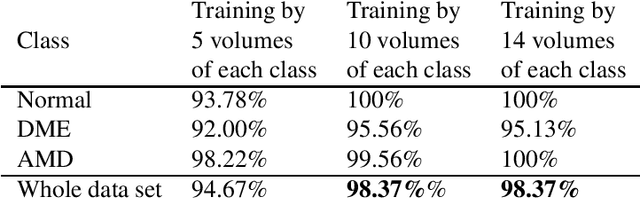

Classification of dry age-related macular degeneration and diabetic macular edema from optical coherence tomography images using dictionary learning

Mar 16, 2019

Abstract:Age-related Macular Degeneration (AMD) and Diabetic Macular Edema (DME) are the major causes of vision loss in developed countries. Alteration of retinal layer structure and the appearance of exudates are the most significant signs of these diseases. We propose a classification algorithm for automatically classifying DME, AMD, and normal subjects from Optical Coherence Tomography (OCT) images. Two considerations guide this approach: avoiding retinal layer segmentation, which is itself a challenging task, and identifying diseases in their early stages, when signs of disease appear in only a small fraction of B-Scans. We used a histogram of oriented gradients (HOG) feature descriptor to characterize the distribution of local intensity gradients and edge directions. To capture the structure of the extracted features, we employed different dictionary learning-based classifiers. Our dataset consists of 45 subjects: 15 patients with AMD, 15 patients with DME, and 15 normal subjects. The proposed classifier achieves accuracies of 95.13%, 100.00%, and 100.00% for DME, AMD, and normal OCT images, respectively, using only 4% of all B-Scans in a volume, which outperforms state-of-the-art methods.
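The core idea behind a HOG descriptor can be sketched for a single cell as below. This is a deliberately reduced version, assuming central-difference gradients, unsigned orientations, and nine bins; full HOG implementations also interpolate votes between bins and normalize over blocks of cells, and the parameters used in the paper are not given in the abstract.

```python
import math


def cell_orientation_histogram(cell, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0 to 180 degrees)."""
    height, width = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for r in range(1, height - 1):          # skip the border: central differences need neighbors
        for c in range(1, width - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]
            gy = cell[r + 1][c] - cell[r - 1][c]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# A cell whose intensity changes from left to right: gradients point along x.
left_to_right = [[0, 0, 1, 1]] * 4
# A cell whose intensity changes from top to bottom: gradients point along y.
top_to_bottom = [[0] * 4, [0] * 4, [1] * 4, [1] * 4]

hist_x = cell_orientation_histogram(left_to_right)
hist_y = cell_orientation_histogram(top_to_bottom)
# hist_x puts all its weight in bin 0 (0 to 20 degrees),
# hist_y puts all its weight in bin 4 (80 to 100 degrees).
```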

Three-dimensional Optical Coherence Tomography Image Denoising via Multi-input Fully-Convolutional Networks

Nov 22, 2018

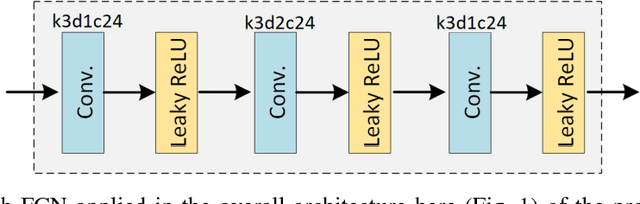

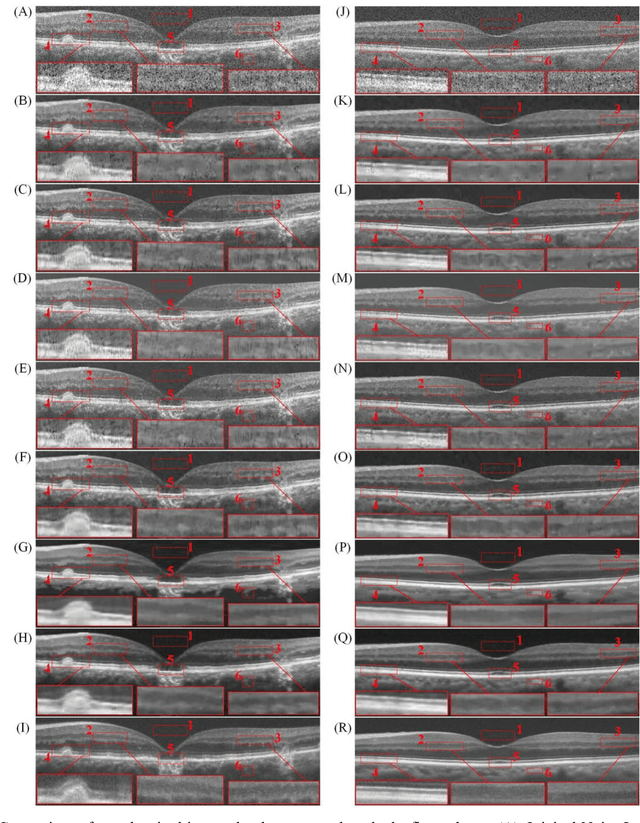

Abstract:In recent years, there has been a growing interest in applying convolutional neural networks (CNNs) to low-level vision tasks such as denoising and super-resolution. Optical coherence tomography (OCT) images are inevitably affected by noise, due to the coherent nature of the image formation process. In this paper, we take advantage of the progress in deep learning methods and propose a new method termed multi-input fully-convolutional networks (MIFCN) for denoising of OCT images. Unlike recently proposed natural image denoising CNNs, our architecture exploits the high degree of correlation and complementary information among neighboring OCT images through pixel-by-pixel fusion of multiple FCNs. We also show how the parameters of the proposed architecture can be learned by optimizing a loss function that is specifically designed to take into account consistency between the overall output and the contribution of each input image. We compare the proposed MIFCN method quantitatively and qualitatively with state-of-the-art denoising methods on OCT images of normal and age-related macular degeneration eyes.
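The abstract does not give the fusion rule or the loss, so the following is purely a hypothetical sketch of what pixel-by-pixel fusion and a loss that couples the fused output with each branch could look like. The weighted-sum fusion, the mean-squared-error terms, the balance factor `lam`, and all function names are assumptions, not the MIFCN formulation. Images are represented as flat lists of pixel values.

```python
def fuse(outputs, weights):
    """Pixel-wise weighted sum of K branch outputs (each a flat list of pixels)."""
    n_pixels = len(outputs[0])
    return [sum(w[p] * o[p] for o, w in zip(outputs, weights))
            for p in range(n_pixels)]


def mse(a, b):
    """Mean squared error between two equal-length pixel lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def combined_loss(outputs, weights, target, lam=0.5):
    """Assumed loss: error of the fused image plus the average error of each branch."""
    fused = fuse(outputs, weights)
    branch_term = sum(mse(o, target) for o in outputs) / len(outputs)
    return mse(fused, target) + lam * branch_term


# Hypothetical two-branch example on a two-pixel image.
outputs = [[1.0, 2.0], [3.0, 4.0]]           # per-branch denoised outputs
weights = [[0.5, 0.25], [0.5, 0.75]]         # per-pixel fusion weights, summing to 1 per pixel
target = [2.0, 3.0]

fused = fuse(outputs, weights)
# fused == [2.0, 3.5]
loss = combined_loss(outputs, weights, target)
# loss == 0.625
```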

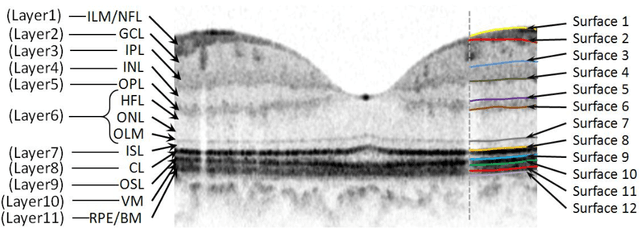

Thickness Mapping of Eleven Retinal Layers in Normal Eyes Using Spectral Domain Optical Coherence Tomography

Dec 11, 2013

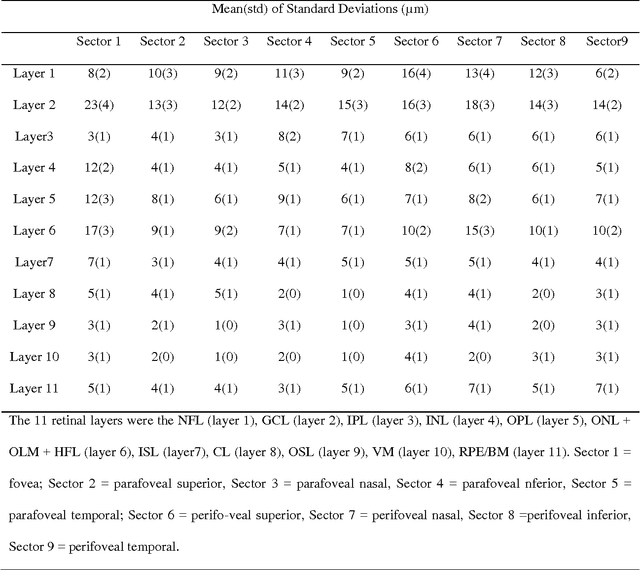

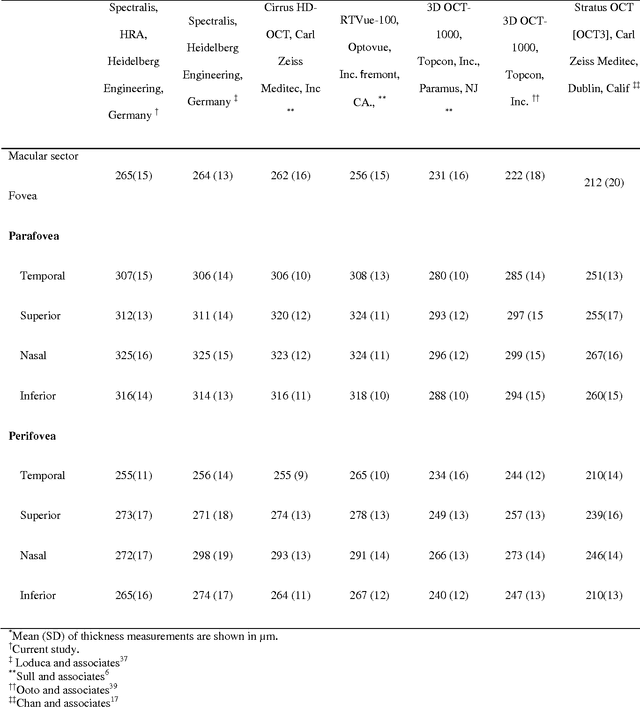

Abstract:Purpose. This study was conducted to determine the thickness map of eleven retinal layers in normal subjects by spectral domain optical coherence tomography (SD-OCT) and to evaluate their association with sex and age. Methods. Mean regional thicknesses of 11 retinal layers were obtained by an automatic three-dimensional diffusion-map-based method in 112 normal eyes of 76 Iranian subjects. Results. The thickness maps of layers 1, 3, and 4 displayed minimum thickness in the central foveal area (P<0.005 for all). Maximum thickness was observed nasal to the fovea in layer 1 (P<0.001), in a circular pattern in the parafoveal retinal area of layers 2, 3, and 4, and in the central foveal area of layer 6 (P<0.001). Temporal and inferior quadrants of the total retinal thickness and most of the other quadrants of layer 1 were significantly greater in men than in women. The surrounding eight sectors of total retinal thickness and a limited number of sectors in layers 1 and 4 correlated significantly with age. Conclusion. SD-OCT demonstrated the three-dimensional thickness distribution of retinal layers in normal eyes. Thickness of layers varied with sex and age and in different sectors. These variables should be considered when evaluating macular thickness.

Intra-Retinal Layer Segmentation of 3D Optical Coherence Tomography Using Coarse Grained Diffusion Map

Oct 08, 2012

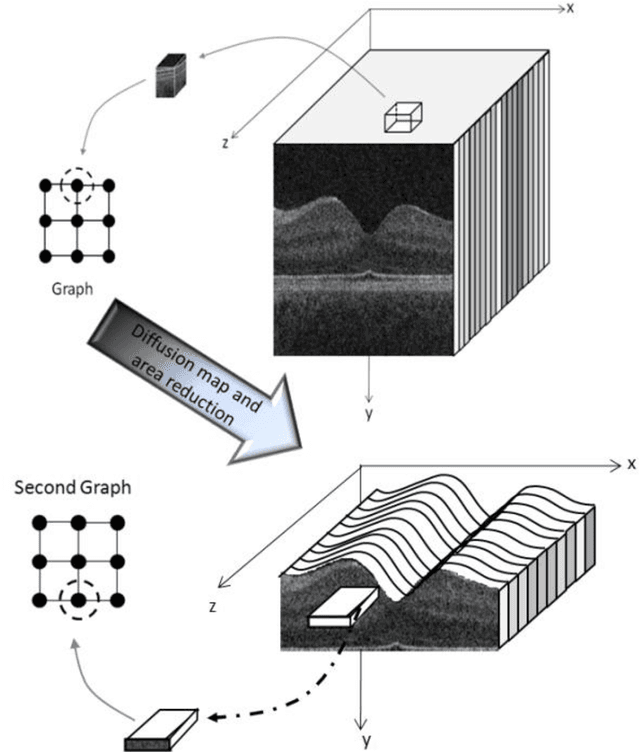

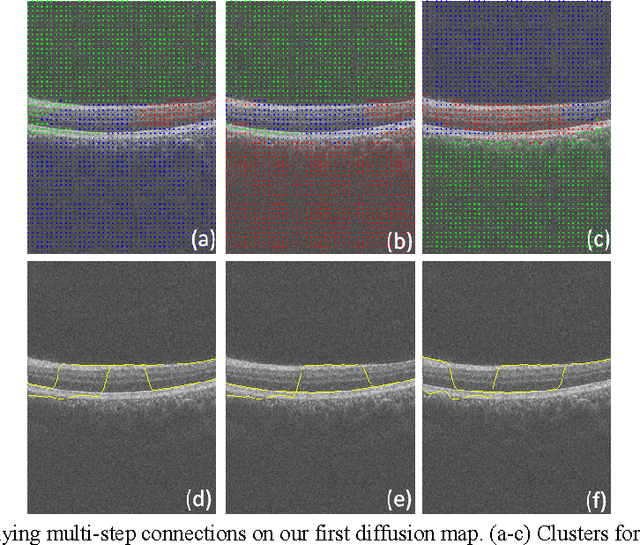

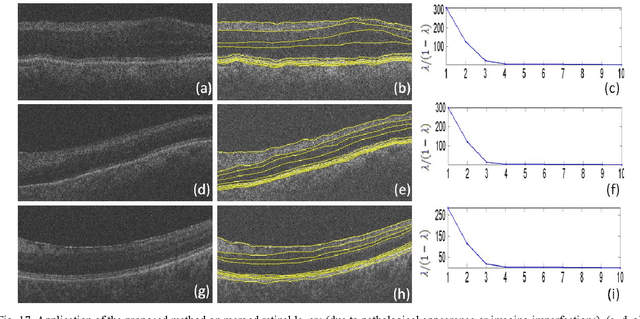

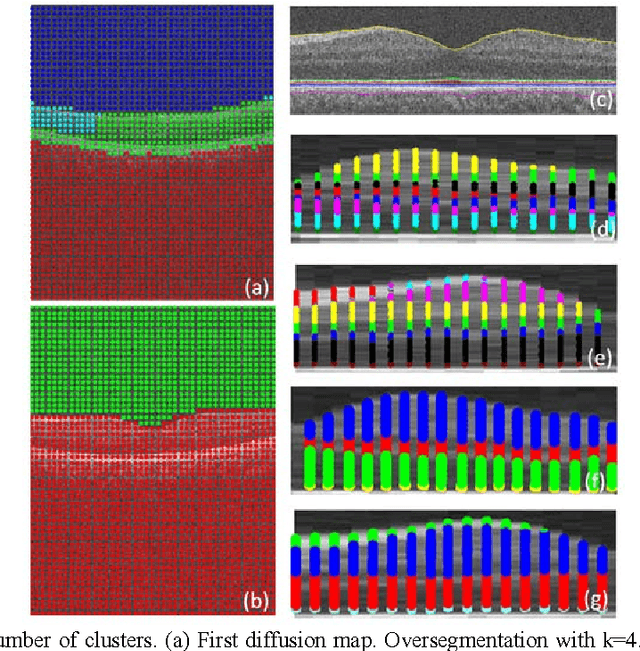

Abstract:Optical coherence tomography (OCT) is a powerful and noninvasive method for retinal imaging. In this paper, we introduce a fast segmentation method based on a new variant of spectral graph theory named diffusion maps. The research is performed on spectral domain (SD) OCT images depicting macular and optic nerve head appearance. The presented approach does not require edge-based image information and relies on regional image texture. Consequently, the proposed method demonstrates robustness in situations of low image contrast or poor layer-to-layer image gradients. Diffusion mapping is applied to 2D and 3D OCT datasets in two steps: one for partitioning the data into important and less important sections, and another for localization of internal layers. In the first step, the pixels/voxels are grouped in rectangular/cubic sets to form a graph node. The weights of the graph are calculated based on geometric distances between pixels/voxels and differences of their mean intensity. The first diffusion map clusters the data into three parts, the second of which is the area of interest. The other two sections are eliminated from the remaining calculations. In the second step, the remaining area is subjected to another diffusion map assessment and the internal layers are localized based on their textural similarities. The proposed method was tested on 23 datasets from two patient groups (glaucoma and normals). The mean unsigned border positioning error (mean ± SD) was 8.52 ± 3.13 and 7.56 ± 2.95 micrometers for the 2D and 3D methods, respectively.
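The graph construction described above can be sketched as follows. The Gaussian kernel, the scale parameters `sigma_geo` and `sigma_int`, and the function names are assumptions for illustration; the abstract only states that weights depend on geometric distance and mean-intensity difference. Row-normalizing the affinities gives the Markov transition matrix whose leading eigenvectors a diffusion map would then use as coordinates (the eigendecomposition and clustering are not shown).

```python
import math


def affinity_matrix(nodes, sigma_geo=1.0, sigma_int=1.0):
    """Pairwise weights from node positions and mean intensities.

    nodes: list of (position, mean_intensity), position being a coordinate tuple.
    """
    n = len(nodes)
    W = [[0.0] * n for _ in range(n)]
    for i, (pos_i, mean_i) in enumerate(nodes):
        for j, (pos_j, mean_j) in enumerate(nodes):
            d_geo = sum((a - b) ** 2 for a, b in zip(pos_i, pos_j))
            d_int = (mean_i - mean_j) ** 2
            W[i][j] = math.exp(-d_geo / sigma_geo ** 2 - d_int / sigma_int ** 2)
    return W


def transition_matrix(W):
    """Row-normalize affinities so that each row is a probability distribution."""
    return [[w / sum(row) for w in row] for row in W]


# Hypothetical nodes: two nearby dark blocks and one distant bright block.
nodes = [((0.0, 0.0), 0.1), ((0.0, 1.0), 0.2), ((3.0, 0.0), 0.9)]
W = affinity_matrix(nodes)
P = transition_matrix(W)
# W[0][1] > W[0][2]: the nearby, similar node is more strongly connected.
```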
