Amirhassan Monadjemi

They Look Like Each Other: Case-based Reasoning for Explainable Depression Detection on Twitter using Large Language Models

Jul 21, 2024

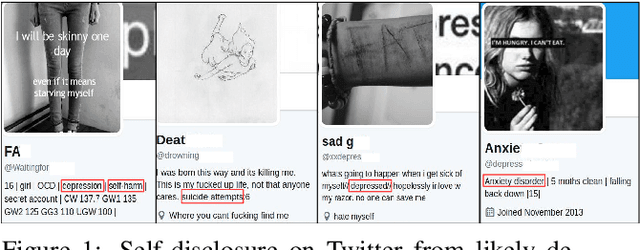

Abstract: Depression is a common mental health issue that requires prompt diagnosis and treatment. Despite the promise of social media data for depression detection, the opacity of the deep learning models employed hinders interpretability and raises concerns about bias. We address this challenge by introducing ProtoDep, a novel explainable framework for Twitter-based depression detection. ProtoDep leverages prototype learning and the generative power of Large Language Models to provide transparent explanations at three levels: (i) symptom-level explanations for each tweet and user, (ii) case-based explanations comparing the user to similar individuals, and (iii) transparent decision-making through classification weights. Evaluated on five benchmark datasets, ProtoDep achieves near state-of-the-art performance while learning meaningful prototypes. This multi-faceted approach could substantially enhance the reliability and transparency of depression detection on social media, ultimately helping mental health professionals deliver more informed care.
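As an illustration only, the prototype-based decision layer (level iii) and the case-based comparison (level ii) can be sketched as below. The sketch assumes each user or tweet has already been encoded as an embedding vector. The encoder, the prototype vectors, the weights, and the helper names `classify` and `most_similar_cases` are hypothetical, and the code follows a generic prototype-classification pattern rather than ProtoDep's actual implementation.

```python
import math
from typing import List, Tuple

Vector = List[float]

def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def classify(embedding: Vector, prototypes: List[Vector],
             weights: List[Vector], bias: Vector) -> Tuple[Vector, Vector]:
    """Score an embedding against prototypes, then apply a linear layer.

    weights[c][k] is the contribution of prototype k to class c, so every
    class score can be traced back to the prototypes that produced it.
    Returns (class probabilities, prototype similarities).
    """
    sims = [cosine(embedding, p) for p in prototypes]
    logits = [sum(w * s for w, s in zip(row, sims)) + b
              for row, b in zip(weights, bias)]
    m = max(logits)  # subtract the max for a numerically stable softmax
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps], sims

def most_similar_cases(embedding: Vector, cases: List[Vector], k: int = 1) -> List[int]:
    """Indices of the k stored cases closest to the embedding (case-based view)."""
    ranked = sorted(range(len(cases)),
                    key=lambda i: cosine(embedding, cases[i]), reverse=True)
    return ranked[:k]

# Hypothetical toy setup: prototype 0 ~ a symptom (e.g. sleep problems),
# prototype 1 ~ neutral content; class 0 = control, class 1 = depressed.
prototypes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
weights = [[-1.0, 2.0],   # control relies on the neutral prototype
           [2.0, -1.0]]   # depressed relies on the symptom prototype
bias = [0.0, 0.0]
user = [0.9, 0.1, 0.0]
probs, sims = classify(user, prototypes, weights, bias)
neighbours = most_similar_cases(user, [[0.0, 1.0, 0.2], [0.8, 0.2, 0.1]])
```

Because each class score is a weighted sum of prototype similarities, `sims` together with the rows of `weights` shows which prototypes pushed the decision, and `most_similar_cases` returns the stored users that most resemble the query.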

Multiscale Sparsifying Transform Learning for Image Denoising

Mar 25, 2020

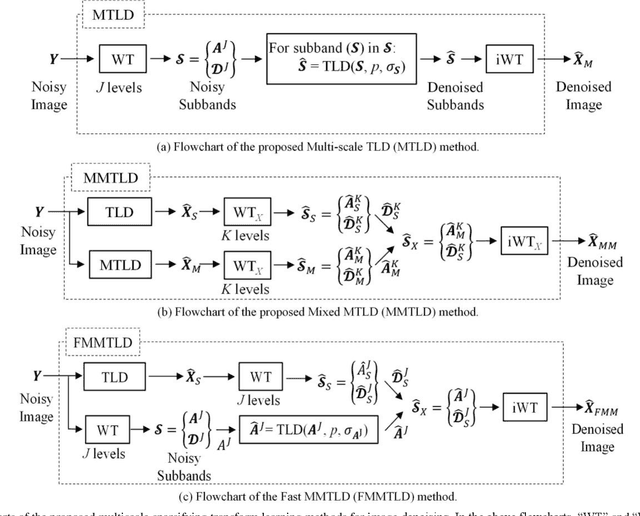

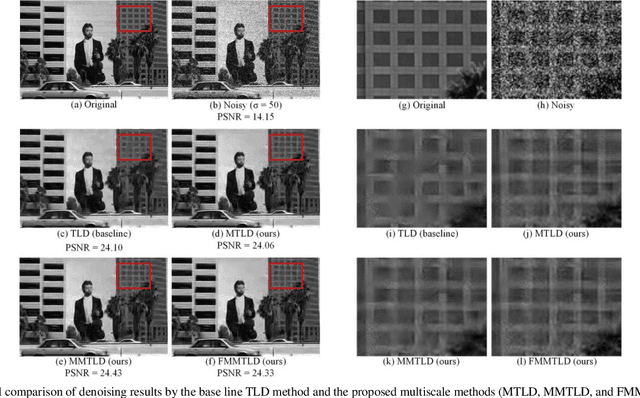

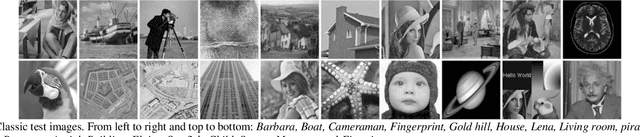

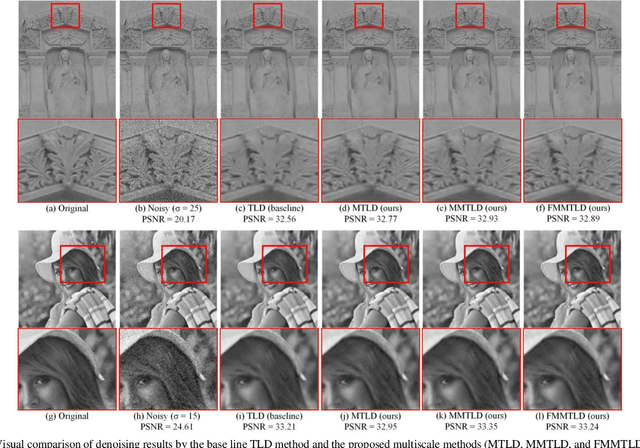

Abstract: Data-driven sparse methods, such as synthesis dictionary learning and sparsifying transform learning, have proven effective in image denoising. However, these methods are intrinsically single-scale and ignore the multiscale nature of images, which often leads to suboptimal results. In this paper, we propose several strategies to exploit multiscale information in image denoising through the sparsifying transform learning denoising (TLD) method. To this end, we first employ a simple method that denoises each wavelet subband independently via TLD. Then, we show that this method can be greatly enhanced by wavelet subbands mixing, a cheap fusion technique that combines the results of single-scale and multiscale methods. Finally, we remove the need to denoise the detail subbands. This simplification leads to an efficient multiscale denoising method whose performance is competitive with its baseline. The effectiveness of the proposed methods is shown experimentally on two datasets: 1) classic test images corrupted with Gaussian noise, and 2) fluorescence microscopy images corrupted with real Poisson-Gaussian noise. The proposed multiscale methods improve on the single-scale baseline method by an average of about 0.2 dB (in terms of PSNR) for removing synthetic Gaussian noise from classic test images and real Poisson-Gaussian noise from microscopy images. Interestingly, the proposed multiscale methods retain their advantage over the baseline even when the noise is relatively weak. More importantly, we show that the proposed methods produce visually pleasing results in which edges and textures are better recovered. Extensive experiments on these two datasets show that the proposed methods offer a good trade-off between performance and complexity.
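The first strategy in the abstract (denoise each wavelet subband independently, then invert the transform) can be sketched roughly as follows. This is a toy under several assumptions: a single-level orthonormal 2D Haar transform on an image with even sides, and soft thresholding as a stand-in for TLD, which is not reproduced here (`denoise_subband` is a hypothetical placeholder). A PSNR helper is included because the abstract reports its gains in PSNR.

```python
import math
import random

def haar2d(img):
    """Single-level orthonormal 2D Haar transform of an image with even sides.

    Returns (LL, LH, HL, HH): the approximation subband and three detail
    subbands (naming conventions for the detail bands vary), each half size.
    """
    h, w = len(img), len(img[0])
    LL, LH, HL, HH = ([[0.0] * (w // 2) for _ in range(h // 2)] for _ in range(4))
    for i in range(0, h, 2):
        for j in range(0, w, 2):
            a, b = img[i][j], img[i][j + 1]
            c, d = img[i + 1][j], img[i + 1][j + 1]
            LL[i // 2][j // 2] = (a + b + c + d) / 2
            LH[i // 2][j // 2] = (a - b + c - d) / 2
            HL[i // 2][j // 2] = (a + b - c - d) / 2
            HH[i // 2][j // 2] = (a - b - c + d) / 2
    return LL, LH, HL, HH

def ihaar2d(LL, LH, HL, HH):
    """Inverse of haar2d (exact up to floating-point error)."""
    h, w = 2 * len(LL), 2 * len(LL[0])
    img = [[0.0] * w for _ in range(h)]
    for i in range(len(LL)):
        for j in range(len(LL[0])):
            s, x, y, z = LL[i][j], LH[i][j], HL[i][j], HH[i][j]
            img[2 * i][2 * j] = (s + x + y + z) / 2
            img[2 * i][2 * j + 1] = (s - x + y - z) / 2
            img[2 * i + 1][2 * j] = (s + x - y - z) / 2
            img[2 * i + 1][2 * j + 1] = (s - x - y + z) / 2
    return img

def soft_threshold(band, t):
    """Shrink every coefficient toward zero by t (stand-in for TLD)."""
    return [[math.copysign(max(abs(v) - t, 0.0), v) for v in row] for row in band]

def denoise_subband(band, sigma, is_approximation):
    """Hypothetical placeholder for TLD applied to one subband.

    Soft thresholding would bias the low-pass band, so this stand-in leaves
    the approximation subband untouched; TLD itself would denoise it too.
    """
    return band if is_approximation else soft_threshold(band, 3.0 * sigma)

def denoise_multiscale(noisy, sigma):
    """Transform, denoise each subband independently, and invert."""
    LL, LH, HL, HH = haar2d(noisy)
    bands = [denoise_subband(LL, sigma, True)]
    bands += [denoise_subband(b, sigma, False) for b in (LH, HL, HH)]
    return ihaar2d(*bands)

def psnr(ref, est, peak=255.0):
    """Peak signal-to-noise ratio in dB."""
    n = sum(len(row) for row in ref)
    mse = sum((r - e) ** 2 for rr, er in zip(ref, est) for r, e in zip(rr, er)) / n
    return float("inf") if mse == 0 else 10 * math.log10(peak ** 2 / mse)

# Toy piecewise-constant image plus Gaussian noise; the orthonormal Haar
# transform leaves the noise standard deviation unchanged in every subband.
random.seed(0)
sigma = 10.0
clean = [[64.0 if j < 8 else 192.0 for j in range(16)] for _ in range(16)]
noisy = [[v + random.gauss(0.0, sigma) for v in row] for row in clean]
restored = denoise_multiscale(noisy, sigma)
print(f"noisy: {psnr(clean, noisy):.2f} dB, restored: {psnr(clean, restored):.2f} dB")
```

Replacing `denoise_subband` with a real transform-learning denoiser, and decomposing over more levels, would bring this toy closer to the multiscale TLD setting described above; the subband mixing and detail-free variants are not sketched here.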

Fusing Visual, Textual and Connectivity Clues for Studying Mental Health

Feb 19, 2019

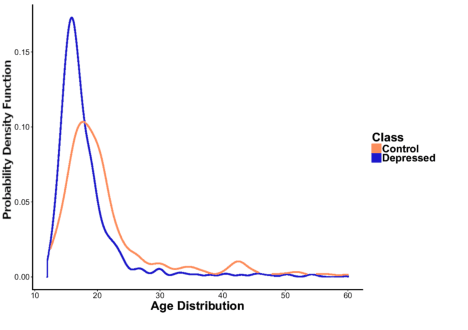

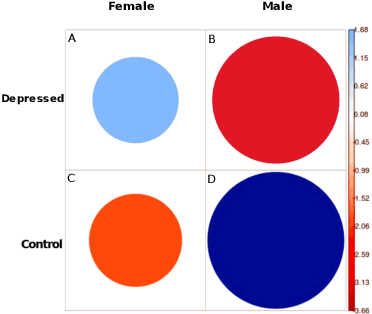

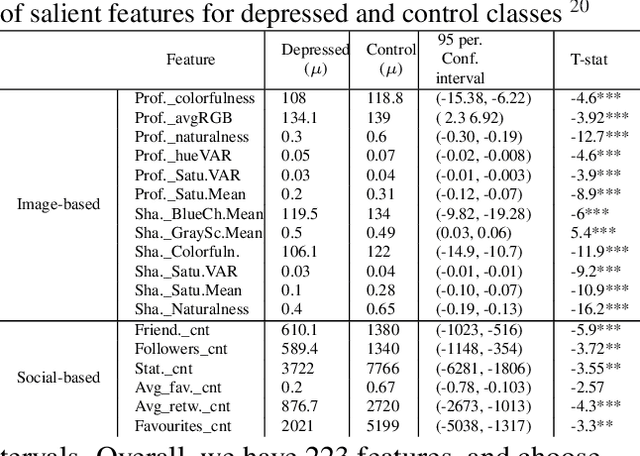

Abstract: With the ubiquity of social media platforms, millions of people voluntarily and publicly share their online personas, expressing their thoughts, moods, emotions, feelings, and even their daily struggles with mental health issues. Unlike most existing efforts, which study depression by analyzing textual content, we examine and exploit multimodal big data to discern depressive behavior using a wide variety of features, including individual-level demographics. By developing a multimodal framework and employing statistical techniques to fuse heterogeneous sets of features obtained by processing visual, textual, and user interaction data, we significantly enhance the current state-of-the-art approaches for identifying depressed individuals on Twitter (improving the average F1-score by 5 percent) and facilitate demographic inference from social media for broader applications. Besides providing insights into the relationship between demographics and mental health, our research assists in the design of a new breed of demographic-aware health interventions.
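The abstract does not spell out its fusion statistics, so the following is only a generic sketch of feature-level (early) fusion, not the paper's method: each modality's feature block is z-score standardized with its own statistics, so that no modality dominates by scale, and the blocks are then concatenated per user. The modality names and feature values are hypothetical. An F1 helper is included because the reported improvement is in F1-score.

```python
import math

def zscore_columns(rows):
    """Standardize each column of one modality's feature block (rows = users)."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    # Fall back to 1.0 for constant columns to avoid dividing by zero.
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / n) or 1.0
            for c, m in zip(cols, means)]
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]

def early_fusion(*blocks):
    """Standardize each modality block separately, then concatenate per user."""
    standardized = [zscore_columns(b) for b in blocks]
    return [sum(parts, []) for parts in zip(*standardized)]

def f1_score(y_true, y_pred, positive=1):
    """F1 = 2TP / (2TP + FP + FN) for the positive (e.g. depressed) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0

# Hypothetical per-user features for three users.
visual = [[0.2, 5.0], [0.4, 3.0], [0.9, 1.0]]          # e.g. brightness, faces
textual = [[120.0], [80.0], [40.0]]                     # e.g. negative-word count
interaction = [[3.0, 10.0], [1.0, 50.0], [0.0, 90.0]]   # e.g. replies, followers
fused = early_fusion(visual, textual, interaction)
```

A downstream classifier, such as logistic regression, would then be trained on `fused`. Late fusion, which combines per-modality predictions instead of features, is the usual alternative design.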

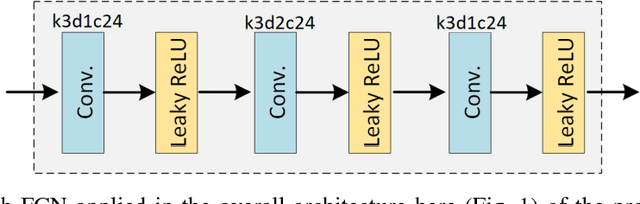

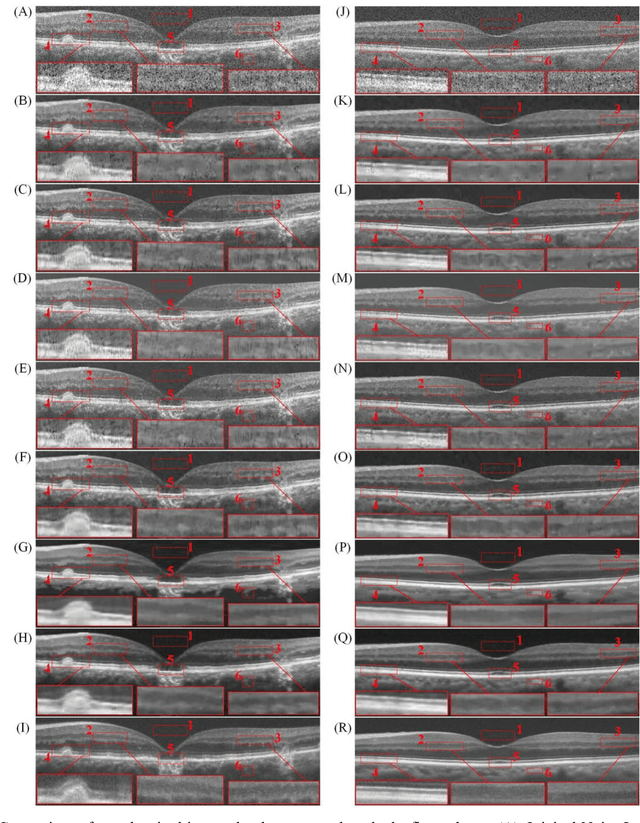

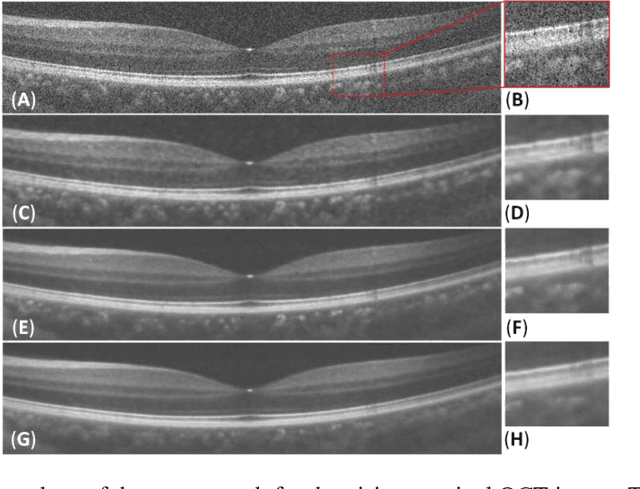

Three-dimensional Optical Coherence Tomography Image Denoising via Multi-input Fully-Convolutional Networks

Nov 22, 2018

Abstract: In recent years, there has been growing interest in applying convolutional neural networks (CNNs) to low-level vision tasks such as denoising and super-resolution. Optical coherence tomography (OCT) images are inevitably affected by noise due to the coherent nature of the image formation process. In this paper, we take advantage of progress in deep learning and propose a new method, termed multi-input fully-convolutional networks (MIFCN), for denoising OCT images. Unlike recently proposed natural image denoising CNNs, our architecture exploits the high correlation and complementary information among neighboring OCT images through pixel-by-pixel fusion of multiple FCNs. We also show how the parameters of the proposed architecture can be learned by optimizing a loss function specifically designed to account for consistency between the overall output and the contribution of each input image. We compare the proposed MIFCN method quantitatively and qualitatively with state-of-the-art denoising methods on OCT images of normal eyes and eyes with age-related macular degeneration.
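The pixel-by-pixel fusion idea can be illustrated with a toy sketch. It assumes each FCN branch has already produced a denoised estimate and a per-pixel weight logit map for its input B-scan, flattened here to short lists with hypothetical values. A softmax across branches turns the logits into per-pixel weights, and the fused image is the weighted sum. The loss shown (fidelity of the fused output plus a branch-to-output consistency term weighted by `lam`) is only a hypothetical reading of the consistency idea; the paper's actual loss is not given in this abstract.

```python
import math

def softmax_across_branches(logit_maps):
    """Per-pixel softmax over K branches: logit_maps[k][p] -> weights[k][p]."""
    K, P = len(logit_maps), len(logit_maps[0])
    weights = [[0.0] * P for _ in range(K)]
    for p in range(P):
        m = max(logit_maps[k][p] for k in range(K))  # for numerical stability
        exps = [math.exp(logit_maps[k][p] - m) for k in range(K)]
        s = sum(exps)
        for k in range(K):
            weights[k][p] = exps[k] / s
    return weights

def fuse(estimates, logit_maps):
    """Pixel-by-pixel weighted sum of the branch estimates."""
    weights = softmax_across_branches(logit_maps)
    P = len(estimates[0])
    return [sum(w[p] * e[p] for w, e in zip(weights, estimates)) for p in range(P)]

def mse(a, b):
    """Mean squared error between two flattened images."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def hypothetical_loss(estimates, logit_maps, target, lam=0.1):
    """Fused-output fidelity plus a hypothetical branch-consistency term."""
    fused = fuse(estimates, logit_maps)
    consistency = sum(mse(e, fused) for e in estimates) / len(estimates)
    return mse(fused, target) + lam * consistency

# Three neighbouring B-scans, each flattened to 4 pixels (toy values).
estimates = [[1.0, 2.0, 3.0, 4.0],
             [1.2, 1.8, 3.1, 3.9],
             [0.8, 2.2, 2.9, 4.1]]
equal_logits = [[0.0] * 4 for _ in range(3)]
target = [1.0, 2.0, 3.0, 4.0]
fused = fuse(estimates, equal_logits)
loss = hypothetical_loss(estimates, equal_logits, target)
```

With equal logits the fusion reduces to a plain average of the branches; learned logits let the network favour, pixel by pixel, whichever neighbouring image is most informative.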

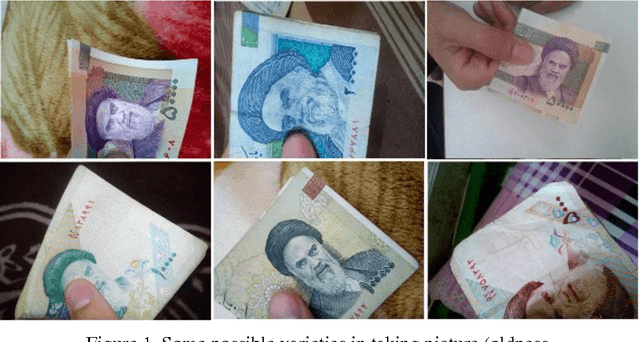

Iranian cashes recognition using mobile

Dec 17, 2014

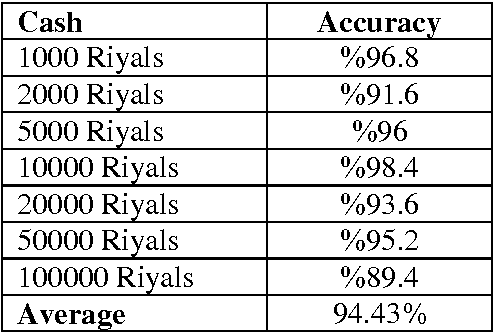

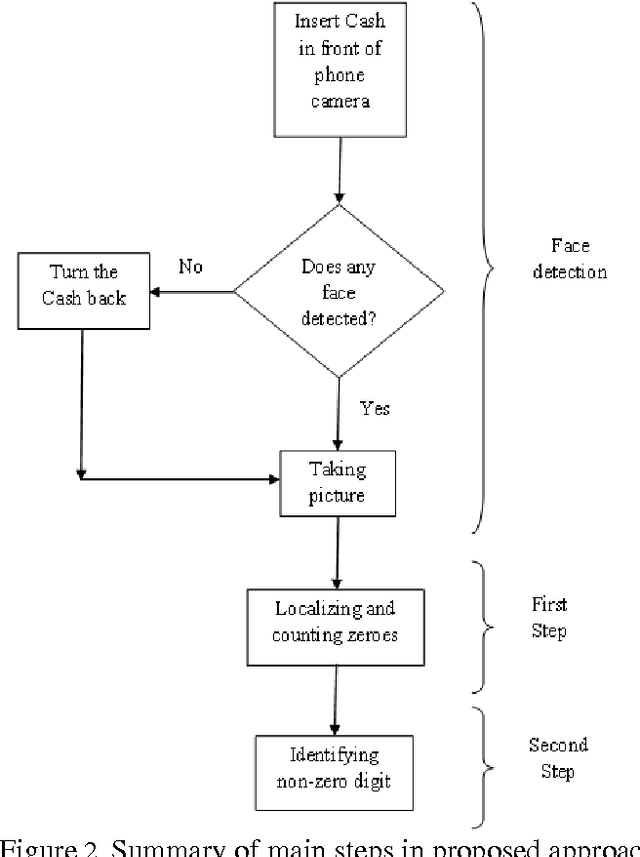

Abstract: In today's economies, cash is an inseparable part of daily life. People use cash for shopping, services, entertainment, banking, and so on. This constant handling of cash, together with the need to know its monetary value, poses one of the most challenging problems for visually impaired people. In this paper, we propose a mobile-phone-based approach that identifies the monetary value of cash from a picture of it, using image processing and machine vision techniques. The approach is very fast, recognizes the value of cash with an average accuracy of about 95%, and can overcome challenges such as rotation, scaling, collision, illumination changes, and perspective.

