Hoang-Quoc Nguyen-Son

SearchLLM: Detecting LLM Paraphrased Text by Measuring the Similarity with Regeneration of the Candidate Source via Search Engine

Jan 23, 2026

Abstract:With the advent of large language models (LLMs), it has become common practice for users to draft text and utilize LLMs to enhance its quality through paraphrasing. However, this process can sometimes result in the loss or distortion of the original intended meaning. Due to the human-like quality of LLM-generated text, traditional detection methods often fail, particularly when text is paraphrased to closely mimic original content. In response to these challenges, we propose a novel approach named SearchLLM, designed to identify LLM-paraphrased text by leveraging search engine capabilities to locate potential original text sources. By analyzing similarities between the input and regenerated versions of candidate sources, SearchLLM effectively distinguishes LLM-paraphrased content. SearchLLM is designed as a proxy layer, allowing seamless integration with existing detectors to enhance their performance. Experimental results across various LLMs demonstrate that SearchLLM consistently enhances the accuracy of recent detectors in detecting LLM-paraphrased text that closely mimics original content. Furthermore, SearchLLM also helps the detectors prevent paraphrasing attacks.
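The proxy-layer idea in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the `search`, `regenerate`, and `fallback_detector` callables, the word-level similarity measure, and the `threshold` value are all hypothetical stand-ins chosen for illustration, and the rule "flag if similar enough, otherwise defer to the existing detector" is an assumption about how such a proxy might be wired.

```python
from difflib import SequenceMatcher
from typing import Callable, Iterable


def word_similarity(a: str, b: str) -> float:
    """Word-level similarity in [0, 1] (a stand-in for the paper's measure)."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def is_llm_paraphrase(
    text: str,
    search: Callable[[str], Iterable[str]],     # hypothetical: returns candidate sources
    regenerate: Callable[[str], str],           # hypothetical: LLM paraphrase of a candidate
    fallback_detector: Callable[[str], bool],   # any existing detector
    threshold: float = 0.8,                     # assumed value, not from the paper
) -> bool:
    """Flag text that closely matches a regenerated candidate source."""
    best = 0.0
    for candidate in search(text):
        best = max(best, word_similarity(text, regenerate(candidate)))
    if best >= threshold:
        return True
    # No convincing source found: defer to the wrapped detector.
    return fallback_detector(text)


# Toy stand-ins so the sketch runs without a search engine or an LLM.
TOY_INDEX = ["the quick brown fox jumps over the lazy dog"]


def toy_search(query: str) -> list:
    return TOY_INDEX


def toy_regenerate(source: str) -> str:
    return source.replace("quick", "fast").replace("jumps", "leaps")
```

With these stand-ins, `is_llm_paraphrase("the fast brown fox leaps over the lazy dog", toy_search, toy_regenerate, lambda t: False)` flags the input, while an unrelated sentence falls through to the fallback detector.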

VoteTRANS: Detecting Adversarial Text without Training by Voting on Hard Labels of Transformations

Jun 02, 2023

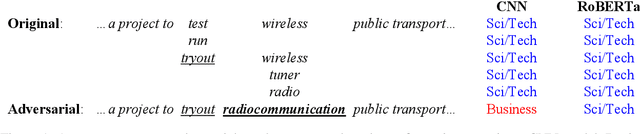

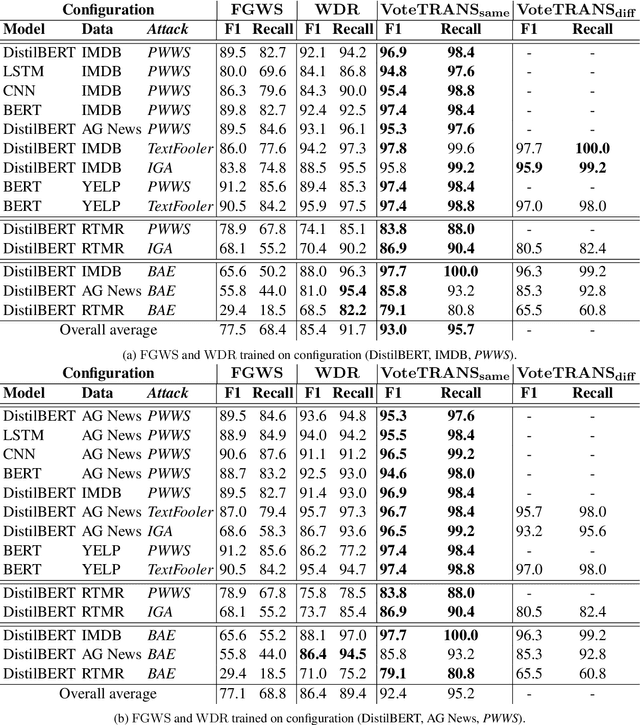

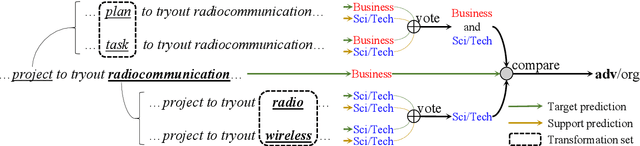

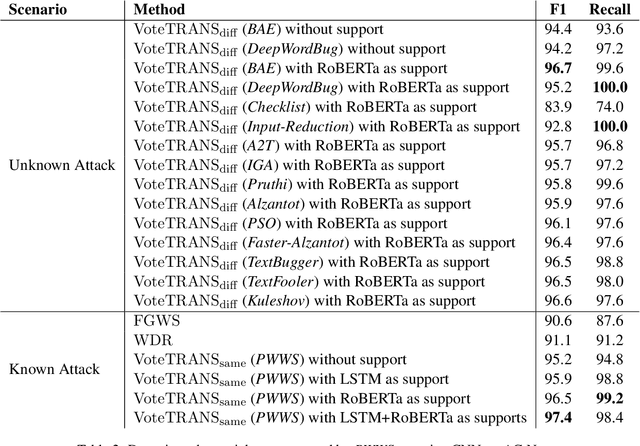

Abstract:Adversarial attacks reveal serious flaws in deep learning models. More dangerously, these attacks preserve the original meaning and escape human recognition. Existing methods for detecting these attacks need to be trained using original/adversarial data. In this paper, we propose detection without training by voting on hard labels from predictions of transformations, namely, VoteTRANS. Specifically, VoteTRANS detects adversarial text by comparing the hard labels of input text and its transformation. The evaluation demonstrates that VoteTRANS effectively detects adversarial text across various state-of-the-art attacks, models, and datasets.
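As a rough illustration of voting on hard labels, the sketch below flags an input when the majority label of its transformations disagrees with the label of the input itself. The majority-vote rule, the toy keyword classifier, and the synonym table are assumptions made for this example and are not taken from the paper.

```python
from collections import Counter
from typing import Callable, Iterable, Iterator

# Toy victim model: sums hypothetical word weights; ties are labeled "pos",
# which makes it easy to fool with words it does not know.
WEIGHTS = {"good": 1, "great": 1, "bad": -1, "poor": -1}
SYNONYMS = {"lousy": ["bad", "poor"], "good": ["great"], "bad": ["poor"]}


def toy_classify(text: str) -> str:
    score = sum(WEIGHTS.get(word, 0) for word in text.lower().split())
    return "pos" if score >= 0 else "neg"


def toy_transform(text: str) -> Iterator[str]:
    """Yield copies of the text with one word replaced by a synonym."""
    words = text.lower().split()
    for i, word in enumerate(words):
        for synonym in SYNONYMS.get(word, []):
            yield " ".join(words[:i] + [synonym] + words[i + 1:])


def looks_adversarial(
    text: str,
    classify: Callable[[str], str],
    transform: Callable[[str], Iterable[str]],
) -> bool:
    """True when the majority hard label of the transformations differs from the input's."""
    original_label = classify(text)
    votes = Counter(classify(variant) for variant in transform(text))
    if not votes:
        return False  # nothing to vote with
    majority_label, _ = votes.most_common(1)[0]
    return majority_label != original_label
```

Here "lousy plot" is labeled "pos" by the toy classifier, but both of its transformations ("bad plot", "poor plot") are labeled "neg", so the input is flagged. "good plot" keeps its label under transformation and is not flagged. No training data is involved, only predictions.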

SEPP: Similarity Estimation of Predicted Probabilities for Defending and Detecting Adversarial Text

Oct 13, 2021

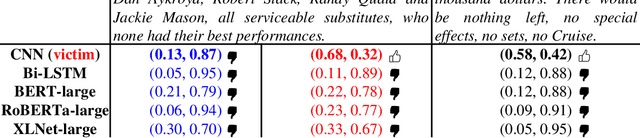

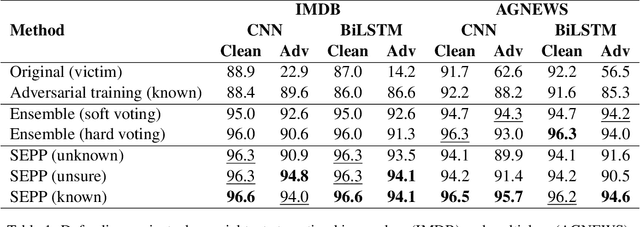

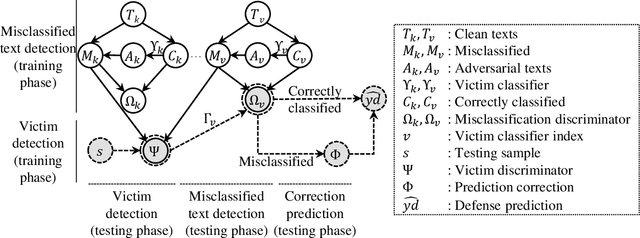

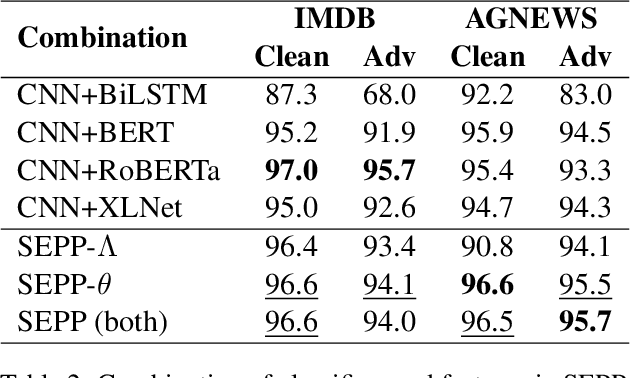

Abstract:There are two cases describing how a classifier processes input text, namely, misclassification and correct classification. Misclassified texts include both ordinary texts that receive incorrect predictions and adversarial texts, which are generated to fool the classifier (called the victim). Both types are misunderstood by the victim, but they can still be recognized by other classifiers. This induces large gaps in predicted probabilities between the victim and the other classifiers. In contrast, text correctly classified by the victim is often successfully predicted by the others and induces small gaps. In this paper, we propose an ensemble model based on similarity estimation of predicted probabilities (SEPP) to exploit the large gaps in the misclassified predictions in contrast to small gaps in the correct classification. SEPP then corrects the incorrect predictions of the misclassified texts. We demonstrate the resilience of SEPP in defending and detecting adversarial texts through different types of victim classifiers, classification tasks, and adversarial attacks.
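The gap intuition can be made concrete with a small sketch. It assumes a mean absolute difference as the gap measure and a fixed threshold of 0.5. Both are illustrative choices, not the similarity estimation described in the paper, and the function name is hypothetical.

```python
from typing import List, Sequence, Tuple


def correct_with_support(
    victim_probs: Sequence[float],
    support_probs: List[Sequence[float]],
    gap_threshold: float = 0.5,  # assumed value for illustration
) -> Tuple[int, bool]:
    """Return (label, flagged).

    The gap is the mean absolute difference between the victim's predicted
    probabilities and the average of the other classifiers' probabilities.
    A large gap flags the input and takes the label from the other classifiers.
    """
    n_classes = len(victim_probs)
    average = [
        sum(probs[c] for probs in support_probs) / len(support_probs)
        for c in range(n_classes)
    ]
    gap = sum(abs(v - a) for v, a in zip(victim_probs, average)) / n_classes
    if gap > gap_threshold:
        return max(range(n_classes), key=lambda c: average[c]), True
    return max(range(n_classes), key=lambda c: victim_probs[c]), False
```

For example, a victim output of `[0.9, 0.1]` against supporting outputs `[0.2, 0.8]` and `[0.1, 0.9]` gives a gap of about 0.75, so the prediction is flagged and corrected to class 1. When the supporting classifiers roughly agree with the victim, the victim's label is kept.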

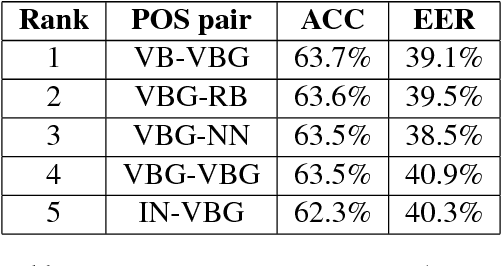

Identifying Adversarial Sentences by Analyzing Text Complexity

Dec 19, 2019

Abstract:Attackers create adversarial text to deceive both human perception and current AI systems for malicious purposes such as spam product reviews and fake political posts. We investigate the differences between adversarial and original text to mitigate this risk. We prove that text written by a human is more coherent and fluent. Moreover, a human can express an idea through flexible text with modern words, while a machine focuses on optimizing the generated text with simple and common words. We also suggest a method to identify adversarial text by extracting features related to our findings. The proposed method achieves high performance, with 82.0% accuracy and an 18.4% equal error rate, which is better than existing methods, whose best accuracy is 77.0% with a corresponding error rate of 22.8%.
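The equal error rate reported above is the error at the decision threshold where the false acceptance rate and the false rejection rate coincide. The sketch below shows one simple way to estimate it from detector scores by sweeping the observed score values. The function name and the sample scores are illustrative and do not come from the paper.

```python
from typing import Sequence


def equal_error_rate(
    positive_scores: Sequence[float],
    negative_scores: Sequence[float],
) -> float:
    """Estimate the EER by sweeping thresholds over the observed scores.

    A sample is predicted positive when its score is >= the threshold.
    Returns the mean of the two error rates at the threshold where they are closest.
    """
    thresholds = sorted(set(positive_scores) | set(negative_scores))
    best_gap, eer = float("inf"), 1.0
    for t in thresholds:
        # False rejection: positives scored below the threshold.
        frr = sum(s < t for s in positive_scores) / len(positive_scores)
        # False acceptance: negatives scored at or above the threshold.
        far = sum(s >= t for s in negative_scores) / len(negative_scores)
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer
```

With positive scores `[0.9, 0.8, 0.7, 0.3]` and negative scores `[0.1, 0.2, 0.4, 0.6]`, both error rates equal 0.25 at threshold 0.6, so the estimate is 0.25.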

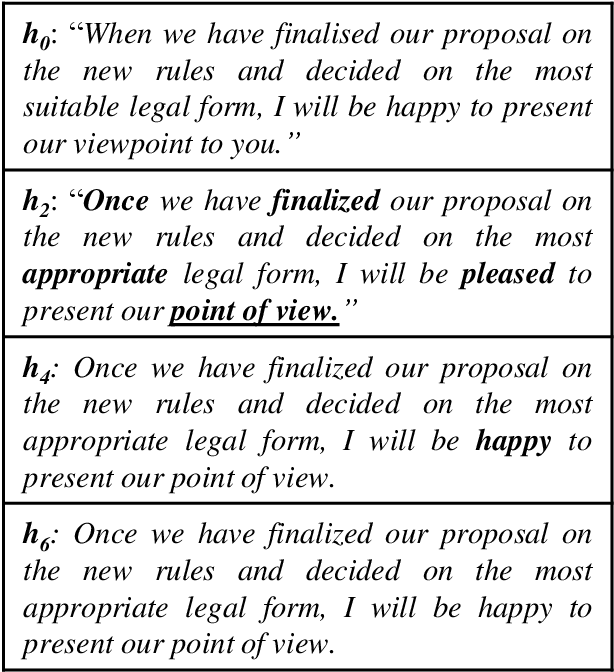

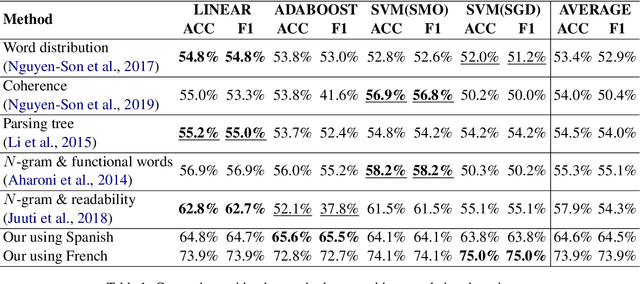

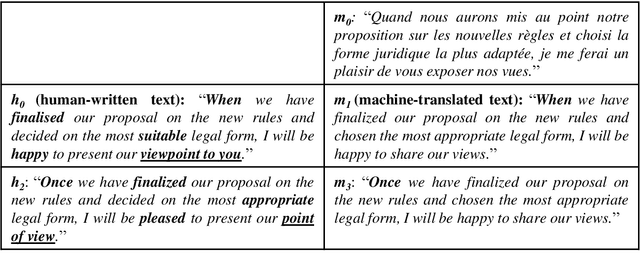

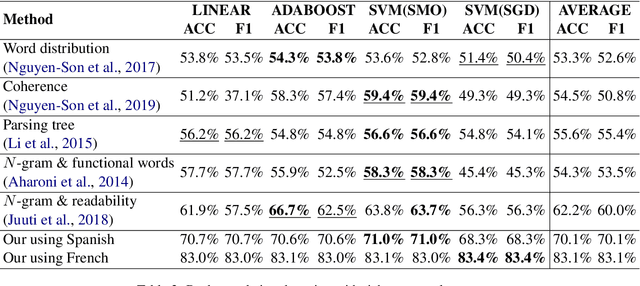

Detecting Machine-Translated Text using Back Translation

Oct 15, 2019

Abstract:Machine-translated text plays a crucial role in communication between people who use different languages. However, adversaries can use such text for malicious purposes such as plagiarism and fake reviews. Existing methods detect machine-translated text using only the text's intrinsic content, but they are unsuitable for distinguishing machine-translated from human-written texts with the same meaning. We have proposed a method that extracts features for distinguishing machine from human text based on the similarity between the input text and its back-translation. The evaluation of detecting translated sentences with French shows that our method achieves 75.0% in both accuracy and F-score. It outperforms existing methods, whose best accuracy is 62.8% and best F-score is 62.7%. The proposed method detects back-translated text even more effectively, with 83.4% accuracy, which is higher than the best previous accuracy of 66.7%. We also achieve similar results for F-score and in similar experiments with Japanese. Moreover, we prove that our detector can recognize both machine-translated and machine-back-translated texts without knowing the language used to generate them. This demonstrates the persistence of our method in various applications in both low- and rich-resource languages.
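A minimal sketch of a back-translation similarity feature follows. The `back_translate` callable is a hypothetical placeholder for a round trip through a machine translation system, and the word-level `difflib` ratio is only a stand-in for whatever similarity features the paper extracts. How the feature is turned into a decision (for example, by a trained classifier) is left open here.

```python
from difflib import SequenceMatcher
from typing import Callable


def round_trip_similarity(text: str, back_translate: Callable[[str], str]) -> float:
    """Word-level similarity in [0, 1] between a text and its back-translation."""
    original = text.lower().split()
    round_trip = back_translate(text).lower().split()
    return SequenceMatcher(None, original, round_trip).ratio()


# Stand-in translators for demonstration only. A real pipeline would translate
# into a pivot language and back with an MT system.
def identity_round_trip(text: str) -> str:
    return text


def lossy_round_trip(text: str) -> str:
    return text.replace("purchased", "bought").replace("automobile", "car")
```

For the sentence "she purchased an automobile", the identity stand-in gives a similarity of 1.0, and the lossy stand-in, which changes two of the four words, gives 0.5.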

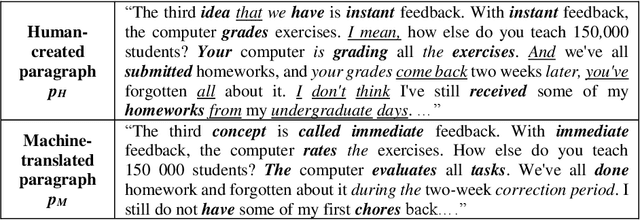

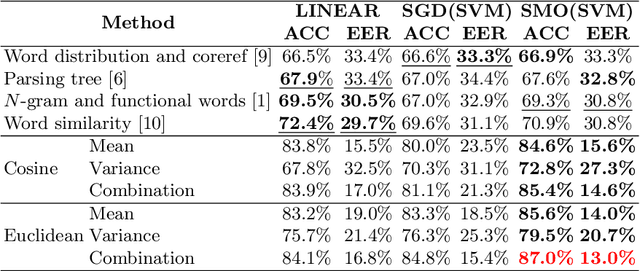

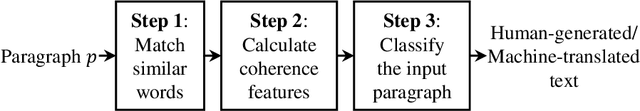

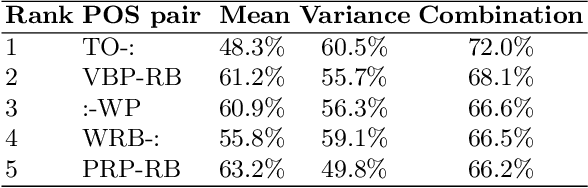

Detecting Machine-Translated Paragraphs by Matching Similar Words

Apr 24, 2019

Abstract:Machine-translated text plays an important role in modern life by smoothing communication between communities that use different languages. However, unnatural translation may lead to misunderstanding, so a detector is needed to avoid such mistakes. While one previous method measured the naturalness of continuous words using an N-gram language model, another matched noncontinuous words across sentences but ignored such words within an individual sentence. We have developed a method that matches similar words throughout the paragraph and estimates paragraph-level coherence to identify machine-translated text. An experiment on 2000 English human-generated paragraphs and 2000 English paragraphs machine-translated from German shows that the coherence-based method achieves high performance (accuracy = 87.0%; equal error rate = 13.0%). It outperforms previous methods (best accuracy = 72.4%; equal error rate = 29.7%). Similar experiments on Dutch and Japanese obtain 89.2% and 97.9% accuracy, respectively. The results demonstrate the persistence of the proposed method in various languages with different resource levels.
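To give a feel for matching similar words throughout a paragraph, the sketch below pairs every word with its most similar other word anywhere in the paragraph and averages those best-match scores. This is only a toy stand-in: the character-level `difflib` similarity, the averaging, and the function names are assumptions, and the paper's actual word similarity and coherence features are not specified in the abstract.

```python
from difflib import SequenceMatcher
from typing import Callable


def char_similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a crude proxy for word similarity."""
    return SequenceMatcher(None, a, b).ratio()


def paragraph_coherence(
    paragraph: str,
    word_similarity: Callable[[str, str], float] = char_similarity,
) -> float:
    """Average, over all words, of the best similarity to any other word.

    Matches are searched across the whole paragraph, including within the
    same sentence. Punctuation is not stripped in this simplified version.
    """
    words = paragraph.lower().split()
    if len(words) < 2:
        return 0.0
    best_matches = [
        max(word_similarity(word, other) for j, other in enumerate(words) if j != i)
        for i, word in enumerate(words)
    ]
    return sum(best_matches) / len(best_matches)
```

A paragraph that reuses or echoes its own vocabulary, such as "the dog chased the dog", scores higher than a string of unrelated tokens such as "abc xyz qrs", which scores 0.0 under this proxy.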

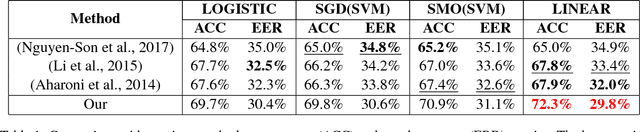

Identifying Computer-Translated Paragraphs using Coherence Features

Dec 28, 2018

Abstract:We have developed a method for extracting coherence features from a paragraph by matching similar words in its sentences. We conducted an experiment with a parallel German corpus containing 2000 human-created and 2000 machine-translated paragraphs. The results showed that our method achieved the best performance (accuracy = 72.3%, equal error rate = 29.8%) compared with previous methods for various kinds of computer-generated text, including translation and paper generation (best accuracy = 67.9%, equal error rate = 32.0%). Experiments on Dutch, another rich-resource language, and on a low-resource one (Japanese) attained similar performance. This demonstrates the effectiveness of the coherence features at distinguishing computer-translated from human-created paragraphs in diverse languages.

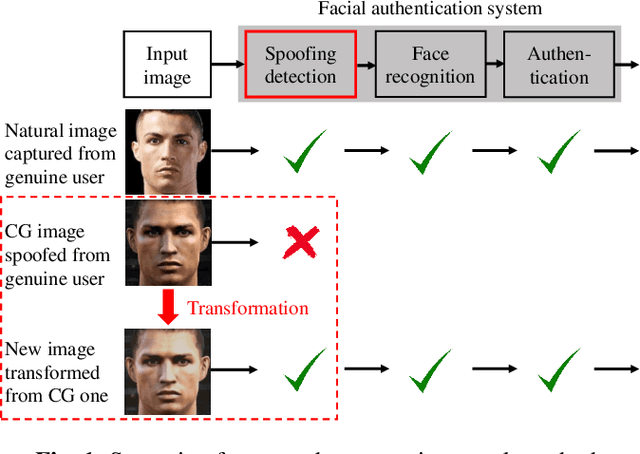

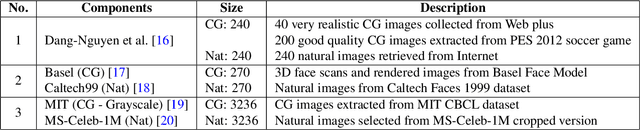

Transformation on Computer-Generated Facial Image to Avoid Detection by Spoofing Detector

Apr 12, 2018

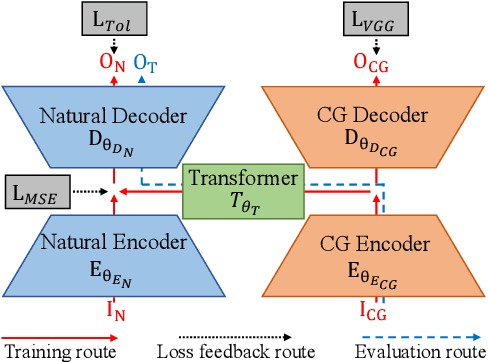

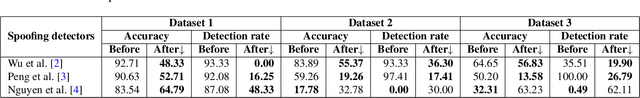

Abstract:Making computer-generated (CG) images more difficult to detect is an interesting problem in computer graphics and security. While most approaches focus on the image rendering phase, this paper presents a method based on increasing the naturalness of CG facial images from the perspective of spoofing detectors. The proposed method is implemented using a convolutional neural network (CNN) comprising two autoencoders and a transformer and is trained using a black-box discriminator without gradient information. Over 50% of the transformed CG images were not detected by three state-of-the-art spoofing detectors. This capability raises an alarm regarding the reliability of facial authentication systems, which are becoming widely used in daily life.
