Hessam Sokooti

Joint Registration and Segmentation via Multi-Task Learning for Adaptive Radiotherapy of Prostate Cancer

May 05, 2021

Abstract: Medical image registration and segmentation are two of the most frequent tasks in medical image analysis. As these tasks are complementary and correlated, it would be beneficial to apply them simultaneously in a joint manner. In this paper, we formulate registration and segmentation as a joint problem via a Multi-Task Learning (MTL) setting, allowing these tasks to leverage their strengths and mitigate their weaknesses through the sharing of beneficial information. We propose to merge these tasks not only on the loss level, but on the architectural level as well. We studied this approach in the context of adaptive image-guided radiotherapy for prostate cancer, where planning and follow-up CT images as well as their corresponding contours are available for training. The study involves two datasets from different manufacturers and institutes. The first dataset was divided into training (12 patients) and validation (6 patients) sets and was used to optimize and validate the methodology, while the second dataset (14 patients) was used as an independent test set. We carried out an extensive quantitative comparison of the quality of the automatically generated contours across different network architectures and loss weighting methods. Moreover, we evaluated the quality of the generated deformation vector field (DVF). We show that MTL algorithms outperform their Single-Task Learning (STL) counterparts and achieve better generalization on the independent test set. The best algorithm achieved a mean surface distance of $1.06 \pm 0.3$ mm, $1.27 \pm 0.4$ mm, $0.91 \pm 0.4$ mm, and $1.76 \pm 0.8$ mm on the validation set for the prostate, seminal vesicles, bladder, and rectum, respectively. The high accuracy of the proposed method, combined with its fast inference speed, makes it a promising method for automatic re-contouring of follow-up scans for adaptive radiotherapy.

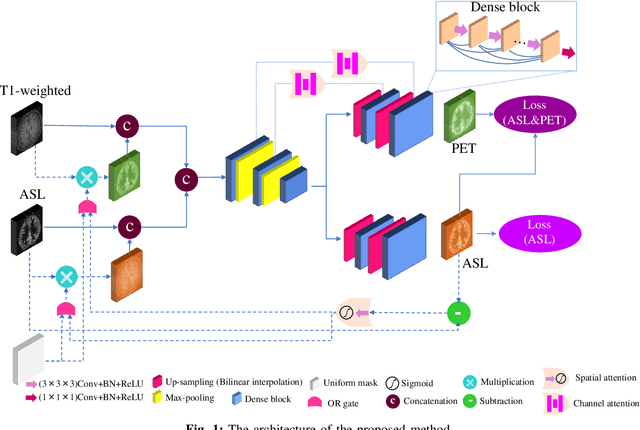

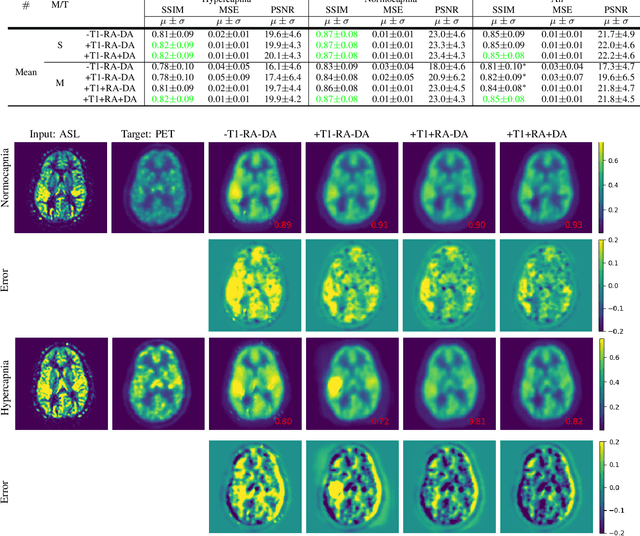

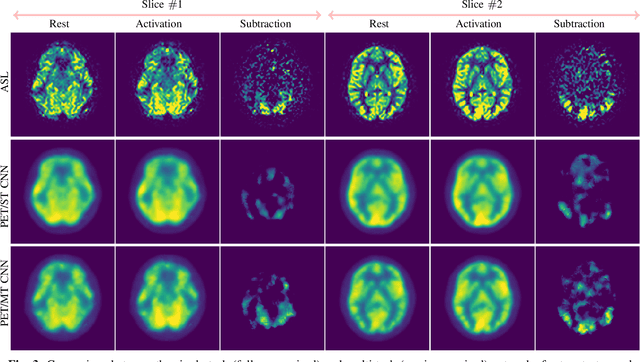

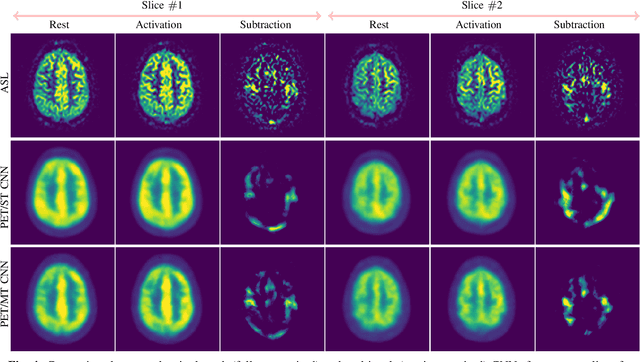

ASL to PET Translation by a Semi-supervised Residual-based Attention-guided Convolutional Neural Network

Mar 08, 2021

Abstract: Positron Emission Tomography (PET) is an imaging method that can assess physiological function rather than structural disturbances by measuring cerebral perfusion or glucose consumption. However, this imaging technique relies on injection of radioactive tracers and is expensive. In contrast, Arterial Spin Labeling (ASL) MRI is a non-invasive, non-radioactive, and relatively cheap imaging technique for brain hemodynamic measurements, which allows quantification to some extent. In this paper we propose a convolutional neural network (CNN) based model for translating ASL to PET images, which could benefit patients as well as the healthcare system in terms of expenses and adverse side effects. However, acquiring a sufficient number of paired ASL-PET scans for training a CNN is prohibitive for many reasons. To tackle this problem, we present a new semi-supervised multitask CNN which is trained on both paired data, i.e. ASL and PET scans, and unpaired data, i.e. only ASL scans, which alleviates the problem of training a network on limited paired data. Moreover, we present a new residual-based attention-guided mechanism to improve the contextual features during the training process. We also show that incorporating T1-weighted scans as an input improves the results, owing to their high resolution and the anatomical information they provide. We performed a two-stage evaluation: quantitative image metrics computed in a 7-fold cross validation, followed by a double-blind observer study. The proposed network achieved structural similarity index measure (SSIM), mean squared error (MSE) and peak signal-to-noise ratio (PSNR) values of $0.85\pm0.08$, $0.01\pm0.01$, and $21.8\pm4.5$, respectively, for translating from 2D ASL and T1-weighted images to PET data. The proposed model is publicly available via https://github.com/yousefis/ASL2PET.
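For readers unfamiliar with the image metrics quoted above, the following is a minimal sketch of MSE and PSNR for two small 2D images. It is not taken from the ASL2PET repository; the function names, the toy images, and the assumption that intensities are normalized to a maximum of 1.0 are all illustrative.

```python
import math


def mse(image_a, image_b):
    """Mean squared error between two equally sized 2D images (lists of rows)."""
    squared_diffs = [
        (a - b) ** 2
        for row_a, row_b in zip(image_a, image_b)
        for a, b in zip(row_a, row_b)
    ]
    return sum(squared_diffs) / len(squared_diffs)


def psnr(image_a, image_b, max_value=1.0):
    """Peak signal-to-noise ratio in dB; max_value is the assumed intensity peak."""
    error = mse(image_a, image_b)
    if error == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / error)


# Hypothetical toy data: a reference image and a synthesized image.
reference = [[0.0, 0.5], [1.0, 0.25]]
predicted = [[0.1, 0.5], [0.9, 0.25]]

print(f"MSE:  {mse(reference, predicted):.4f}")
print(f"PSNR: {psnr(reference, predicted):.2f} dB")
```

PSNR depends on the assumed peak intensity, so values are only comparable between studies that normalize images in the same way.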

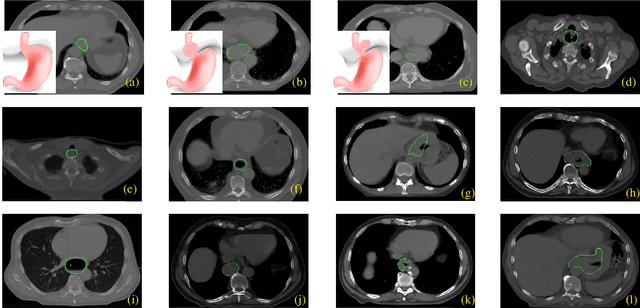

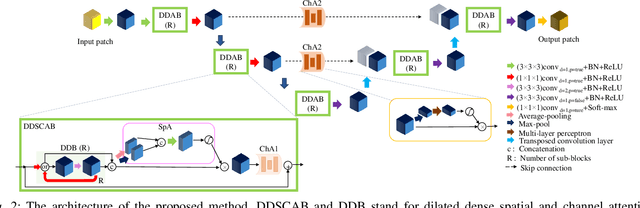

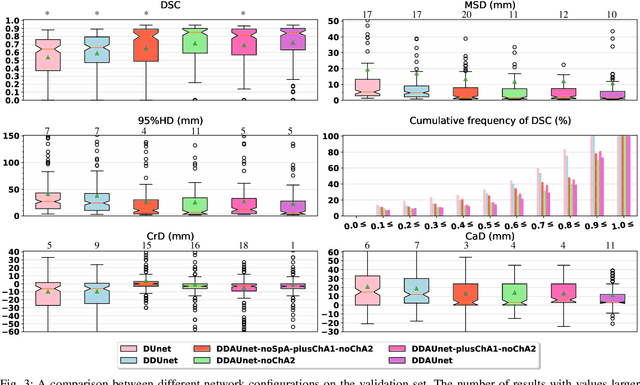

Esophageal Tumor Segmentation in CT Images using Dilated Dense Attention Unet (DDAUnet)

Dec 20, 2020

Abstract: Manual or automatic delineation of the esophageal tumor in CT images is known to be very challenging. This is due to the low contrast between the tumor and adjacent tissues, the anatomical variation of the esophagus, as well as the occasional presence of foreign bodies (e.g. feeding tubes). Physicians therefore usually exploit additional knowledge such as endoscopic findings, clinical history, and additional imaging modalities like PET scans. Acquiring this additional information is time-consuming, while the results are error-prone and might lead to non-deterministic results. In this paper we aim to investigate if and to what extent a simplified clinical workflow based on CT alone allows one to automatically segment the esophageal tumor with sufficient quality. For this purpose, we present a fully automatic end-to-end esophageal tumor segmentation method based on convolutional neural networks (CNNs). The proposed network, called Dilated Dense Attention Unet (DDAUnet), leverages spatial and channel attention gates in each dense block to selectively concentrate on determinant feature maps and regions. Dilated convolutional layers are used to manage GPU memory and increase the network receptive field. We collected a dataset of 792 scans from 288 distinct patients including varying anatomies with air pockets, feeding tubes and proximal tumors. Repeatability and reproducibility studies were conducted for three distinct splits of training and validation sets. The proposed network achieved a DSC value of $0.79 \pm 0.20$, a mean surface distance of $5.4 \pm 20.2$ mm and a 95% Hausdorff distance of $14.7 \pm 25.0$ mm for 287 test scans, demonstrating promising results with a simplified clinical workflow based on CT alone. Our code is publicly available via https://github.com/yousefis/DenseUnet_Esophagus_Segmentation.
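The DSC reported above is the Dice similarity coefficient, $2|A \cap B| / (|A| + |B|)$ for a predicted mask $A$ and a reference mask $B$. Below is a minimal sketch on flattened binary masks; the function name, the toy masks, and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not the authors' evaluation code.

```python
def dice_coefficient(predicted, reference):
    """Dice similarity coefficient of two binary masks given as flat 0/1 sequences."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, r in zip(predicted, reference) if p and r)
    total = sum(1 for p in predicted if p) + sum(1 for r in reference if r)
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / total


# Hypothetical flattened masks (1 = tumor voxel, 0 = background).
predicted_mask = [1, 1, 0, 0, 1]
reference_mask = [1, 0, 0, 1, 1]

print(f"DSC: {dice_coefficient(predicted_mask, reference_mask):.3f}")
```

Because DSC only counts overlapping voxels, it is usually reported together with surface-based measures such as the mean surface distance and Hausdorff distance, as in the abstract.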

Fast Dynamic Perfusion and Angiography Reconstruction using an end-to-end 3D Convolutional Neural Network

Sep 04, 2019

Abstract: Hadamard time-encoded pseudo-continuous arterial spin labeling (te-pCASL) is a signal-to-noise ratio (SNR)-efficient MRI technique for acquiring dynamic pCASL signals that encodes the temporal information into the labeling according to a Hadamard matrix. In the decoding step, the contribution of each sub-bolus can be isolated, resulting in dynamic perfusion scans. When acquiring te-ASL both with and without flow-crushing, the ASL signal in the arteries can be isolated, resulting in 4D angiographic information. However, obtaining multi-timepoint perfusion and angiographic data requires two acquisitions. In this study, we propose a 3D Dense-Unet convolutional neural network with a multi-level loss function for reconstructing multi-timepoint perfusion and angiographic information from interleaved $50\%$-sampled crushed and $50\%$-sampled non-crushed data, thereby negating the additional scan time. We present a framework to generate dynamic pCASL training and validation data, based on models of the intravascular and extravascular te-pCASL signals. The proposed network achieved SSIM values of $97.3 \pm 1.1$ and $96.2 \pm 11.1$, respectively, for 4D perfusion and angiographic data reconstruction on 313 test datasets.
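The decoding step mentioned above relies on the orthogonality of Hadamard matrices ($H^\top H = nI$). The sketch below is a toy model only: it uses a full $\pm 1$ Sylvester-type matrix and made-up sub-bolus values, and the actual labeling scheme and matrix used in the paper may differ. All function names are hypothetical.

```python
def hadamard(n):
    """Sylvester construction of an n x n Hadamard matrix; n must be a power of two."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    matrix = [[1]]
    while len(matrix) < n:
        # Build [[H, H], [H, -H]] from the current matrix H.
        matrix = [row + row for row in matrix] + [
            row + [-value for value in row] for row in matrix
        ]
    return matrix


def encode(matrix, sub_boli):
    """Each acquisition is a signed sum of the sub-bolus signals (one matrix row)."""
    return [sum(h * s for h, s in zip(row, sub_boli)) for row in matrix]


def decode(matrix, acquisitions):
    """Recover sub-bolus signals via H^T applied to the acquisitions, divided by n."""
    n = len(matrix)
    return [
        sum(matrix[i][j] * acquisitions[i] for i in range(n)) / n for j in range(n)
    ]


# Hypothetical per-voxel signal contributions of four sub-boli.
true_signals = [3.0, 1.0, 4.0, 1.5]
h4 = hadamard(4)
measured = encode(h4, true_signals)
recovered = decode(h4, measured)
print(recovered)
```

Every decoded sub-bolus uses all acquisitions, which is the intuition behind the SNR efficiency of time-encoded labeling.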

3D Convolutional Neural Networks Image Registration Based on Efficient Supervised Learning from Artificial Deformations

Aug 27, 2019

Abstract: We propose a supervised nonrigid image registration method, trained using artificial displacement vector fields (DVFs), for which we propose and compare three network architectures. The artificial DVFs allow training in a fully supervised and voxel-wise dense manner, but without the cost usually associated with the creation of densely labeled data. We propose a scheme to artificially generate DVFs, and for chest CT registration we augment these with simulated respiratory motion. The proposed architectures are embedded in a multi-stage approach, to increase the capture range of the proposed networks in order to more accurately predict larger displacements. The proposed method, RegNet, is evaluated on multiple databases of chest CT scans and achieved a target registration error of 2.32 $\pm$ 5.33 mm and 1.86 $\pm$ 2.12 mm on SPREAD and DIR-Lab-4DCT studies, respectively. The average inference time of RegNet with two stages is about 2.2 s.
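The target registration error (TRE) quoted above is commonly computed as the Euclidean distance between corresponding landmarks after applying the estimated displacement. The following is a minimal sketch under that assumption; the landmark coordinates, the convention that the displacement maps fixed-image points into the moving image, and the use of the population standard deviation are illustrative choices, not RegNet's evaluation code.

```python
import math


def target_registration_error(fixed_points, moving_points, displacements):
    """Return (mean, std) of landmark distances in mm after applying displacements.

    Each fixed landmark p is mapped to p + u(p) and compared with its
    corresponding moving landmark q.
    """
    distances = []
    for p, q, u in zip(fixed_points, moving_points, displacements):
        mapped = [pi + ui for pi, ui in zip(p, u)]
        distances.append(math.dist(mapped, q))
    mean = sum(distances) / len(distances)
    variance = sum((d - mean) ** 2 for d in distances) / len(distances)
    return mean, math.sqrt(variance)


# Hypothetical landmarks (mm) and predicted displacements at those landmarks.
fixed_landmarks = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
moving_landmarks = [(1.0, 3.0, 4.0), (10.0, 0.0, 1.0)]
predicted_displacements = [(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]

tre_mean, tre_std = target_registration_error(
    fixed_landmarks, moving_landmarks, predicted_displacements
)
print(f"TRE: {tre_mean:.2f} +/- {tre_std:.2f} mm")
```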

Adversarial optimization for joint registration and segmentation in prostate CT radiotherapy

Jun 28, 2019

Abstract: Joint image registration and segmentation has long been an active area of research in medical imaging. Here, we reformulate this problem in a deep learning setting using adversarial learning. We consider the case in which fixed and moving images as well as their segmentations are available for training, while segmentations are not available during testing; a common scenario in radiotherapy. The proposed framework consists of a 3D end-to-end generator network that estimates the deformation vector field (DVF) between fixed and moving images in an unsupervised fashion and applies this DVF to the moving image and its segmentation. A discriminator network is trained to evaluate how well the moving image and segmentation align with the fixed image and segmentation. The proposed network was trained and evaluated on follow-up prostate CT scans for image-guided radiotherapy, where the planning CT contours are propagated to the daily CT images using the estimated DVF. A quantitative comparison with conventional registration using elastix showed that the proposed method improved performance and substantially reduced computation time, thus enabling real-time contour propagation necessary for online-adaptive radiotherapy.

Quantitative Error Prediction of Medical Image Registration using Regression Forests

May 18, 2019

Abstract: Predicting registration error can be useful for evaluation of registration procedures, which is important for the adoption of registration techniques in the clinic. In addition, quantitative error prediction can be helpful in improving the registration quality. The task of predicting registration error is demanding due to the lack of a ground truth in medical images. This paper proposes a new automatic method to predict the registration error in a quantitative manner and applies it to chest CT scans. A random regression forest is utilized to predict the registration error locally. The forest is built with features related to the transformation model and features related to the dissimilarity after registration. The forest is trained and tested using manually annotated corresponding points between pairs of chest CT scans in two experiments: SPREAD (trained and tested on SPREAD) and inter-database (including three databases: SPREAD, DIR-Lab-4DCT and DIR-Lab-COPDgene). The results show that the mean absolute errors of regression are 1.07 $\pm$ 1.86 mm and 1.76 $\pm$ 2.59 mm for the SPREAD and inter-database experiments, respectively. The overall accuracy of classification in three classes (correct, poor and wrong registration) is 90.7% and 75.4% for the SPREAD and inter-database experiments, respectively. The good performance of the proposed method enables important applications such as automatic quality control in large-scale image analysis.

A Deep Learning Framework for Unsupervised Affine and Deformable Image Registration

Sep 17, 2018

Abstract: Image registration, the process of aligning two or more images, is the core technique of many (semi-)automatic medical image analysis tasks. Recent studies have shown that deep learning methods, notably convolutional neural networks (ConvNets), can be used for image registration. Thus far, training of ConvNets for registration was supervised using predefined example registrations. However, obtaining example registrations is not trivial. To circumvent the need for predefined examples, and thereby to increase convenience of training ConvNets for image registration, we propose the Deep Learning Image Registration (DLIR) framework for unsupervised affine and deformable image registration. In the DLIR framework, ConvNets are trained for image registration by exploiting image similarity, analogous to conventional intensity-based image registration. After a ConvNet has been trained with the DLIR framework, it can be used to register pairs of unseen images in one shot. We propose flexible ConvNet designs for affine image registration and for deformable image registration. By stacking several of these ConvNets into a larger architecture, we are able to perform coarse-to-fine image registration. We show for registration of cardiac cine MRI and registration of chest CT that performance of the DLIR framework is comparable to conventional image registration while being several orders of magnitude faster.
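Training on image similarity alone means the loss is computed directly from the fixed image and the warped moving image, with no reference transformation. As one illustration, the sketch below computes normalized cross-correlation (NCC) on flattened intensity lists and turns it into a loss. The choice of NCC and of `1 - NCC` as the loss is an assumption made here for illustration; the abstract does not state which similarity metric the framework uses.

```python
import math


def normalized_cross_correlation(fixed, warped):
    """NCC between two equally sized images given as flat intensity sequences."""
    mean_f = sum(fixed) / len(fixed)
    mean_w = sum(warped) / len(warped)
    numerator = sum((f - mean_f) * (w - mean_w) for f, w in zip(fixed, warped))
    denominator = math.sqrt(
        sum((f - mean_f) ** 2 for f in fixed)
        * sum((w - mean_w) ** 2 for w in warped)
    )
    if denominator == 0:
        raise ValueError("NCC is undefined for a constant image")
    return numerator / denominator


def similarity_loss(fixed, warped):
    """Hypothetical unsupervised loss: 0 when the images are perfectly correlated."""
    return 1.0 - normalized_cross_correlation(fixed, warped)


# Hypothetical flattened intensities; the second image is a rescaled copy.
fixed_image = [1.0, 2.0, 3.0, 4.0]
well_aligned = [2.0, 4.0, 6.0, 8.0]
misaligned = [4.0, 3.0, 2.0, 1.0]

print(similarity_loss(fixed_image, well_aligned))
print(similarity_loss(fixed_image, misaligned))
```

NCC is invariant to linear intensity rescaling, which is why the rescaled copy yields a loss of zero.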
