Matthias J. P. van Osch

UPCMR: A Universal Prompt-guided Model for Random Sampling Cardiac MRI Reconstruction

Feb 18, 2025

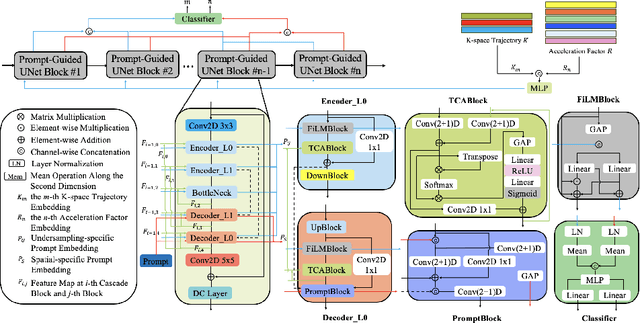

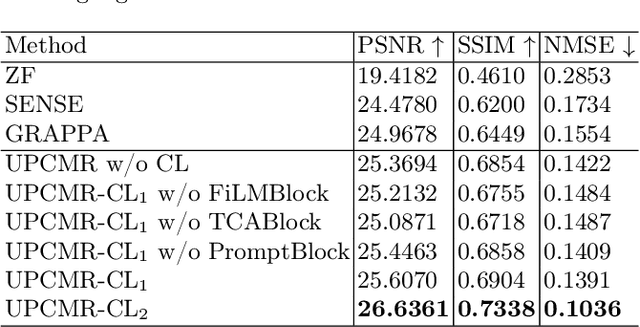

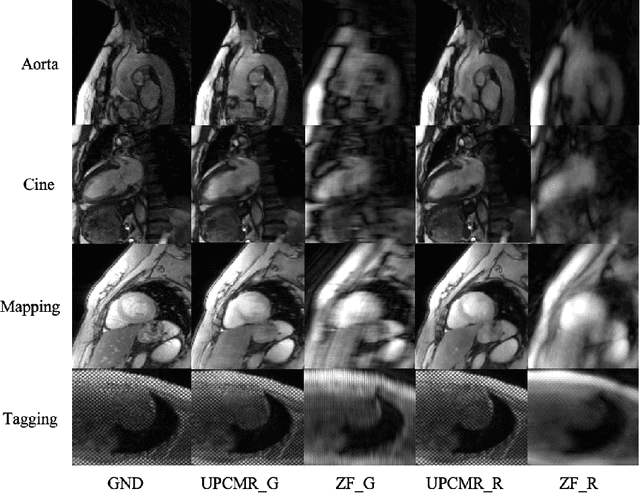

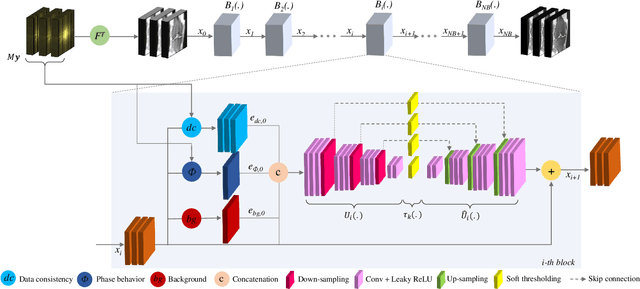

Abstract: Cardiac magnetic resonance imaging (CMR) is vital for diagnosing heart diseases, but long scan times remain a major drawback. To address this, accelerated imaging techniques undersample k-space, which shortens acquisition but reduces the quality of the resulting images. Recent deep learning methods aim to speed up scanning while preserving quality, but adapting to various sampling modes and undersampling factors remains challenging. Building a universal model is therefore a promising direction. In this work, we introduce UPCMR, a universal unrolled model designed for CMR reconstruction. The model incorporates two kinds of learnable prompts, an undersampling-specific prompt and a spatial-specific prompt, and integrates them with a UNet structure in each block. Trained and validated on the CMRxRecon2024 challenge dataset with an effective training strategy, UPCMR substantially improves reconstructed image quality across all random sampling scenarios compared to some traditional methods, demonstrating strong adaptability potential for this task.
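The random k-space undersampling that the abstract refers to can be illustrated with a minimal NumPy sketch. This is not the UPCMR pipeline or the CMRxRecon2024 sampling scheme: the function name `random_undersample`, the row-wise (phase-encode) mask, and the `acceleration`, `center_lines`, and `seed` parameters are assumptions chosen only for illustration.

```python
import numpy as np


def random_undersample(image, acceleration=4, center_lines=8, seed=0):
    """Retrospectively undersample a 2D image along its row axis.

    Hypothetical helper for illustration: keeps a fully sampled central
    band of k-space rows plus randomly chosen outer rows.
    """
    rng = np.random.default_rng(seed)
    n_rows = image.shape[0]

    # Centered k-space of the fully sampled image.
    kspace = np.fft.fftshift(np.fft.fft2(image))

    # Always keep the low-frequency band around the k-space center.
    mask = np.zeros(n_rows, dtype=bool)
    start = n_rows // 2 - center_lines // 2
    mask[start:start + center_lines] = True

    # Fill up with random rows until n_rows / acceleration rows are kept.
    n_keep = max(n_rows // acceleration, center_lines)
    remaining = np.flatnonzero(~mask)
    extra = rng.choice(remaining, size=n_keep - center_lines, replace=False)
    mask[extra] = True

    # Zero out the unsampled rows and reconstruct by zero-filling.
    undersampled = kspace * mask[:, None]
    zero_filled = np.fft.ifft2(np.fft.ifftshift(undersampled))
    return mask, undersampled, zero_filled
```

The zero-filled image returned here is the aliased input that a learned reconstruction model would then have to restore; changing `acceleration` or the mask pattern is what produces the different sampling scenarios a universal model must handle.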

ASL to PET Translation by a Semi-supervised Residual-based Attention-guided Convolutional Neural Network

Mar 08, 2021

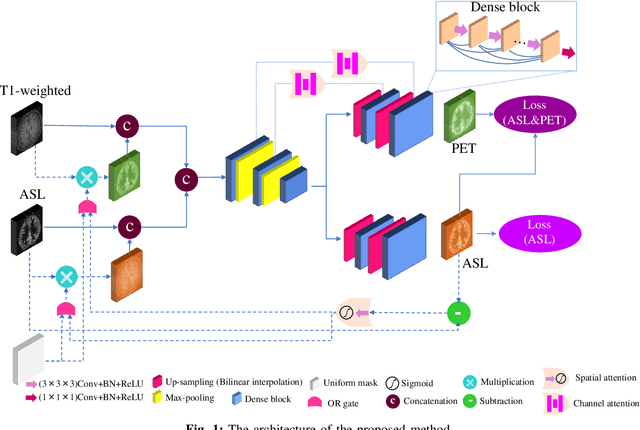

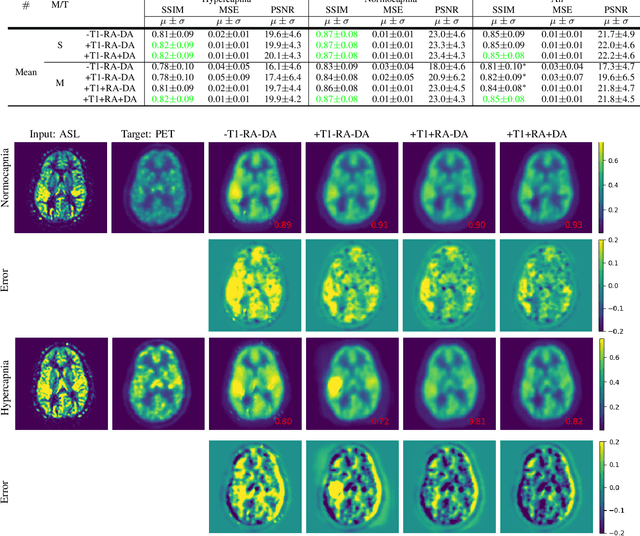

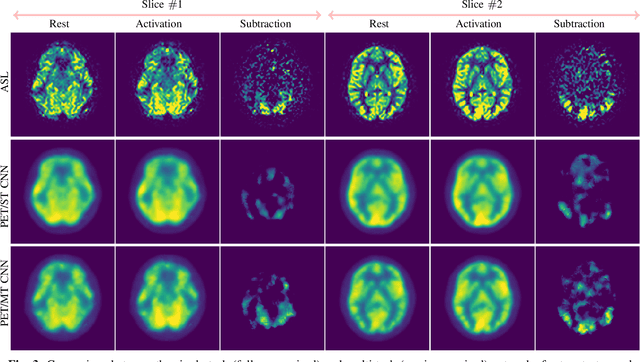

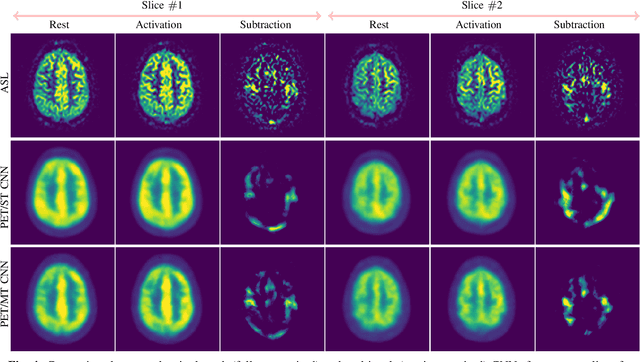

Abstract: Positron Emission Tomography (PET) is an imaging method that can assess physiological function rather than structural disturbances by measuring cerebral perfusion or glucose consumption. However, this imaging technique relies on the injection of radioactive tracers and is expensive. In contrast, Arterial Spin Labeling (ASL) MRI is a non-invasive, non-radioactive, and relatively cheap imaging technique for brain hemodynamic measurements, which allows quantification to some extent. In this paper we propose a convolutional neural network (CNN) based model for translating ASL to PET images, which could benefit patients as well as the healthcare system in terms of expenses and adverse side effects. However, acquiring a sufficient number of paired ASL-PET scans for training a CNN is prohibitive for many reasons. To tackle this problem, we present a new semi-supervised multitask CNN that is trained on both paired data, i.e. ASL and PET scans, and unpaired data, i.e. only ASL scans, which alleviates the problem of training a network on limited paired data. Moreover, we present a new residual-based attention-guided mechanism to improve the contextual features during the training process. We also show that incorporating T1-weighted scans as an input improves the results, owing to their high resolution and the anatomical information they provide. We performed a two-stage evaluation: a 7-fold cross-validation based on quantitative image metrics, followed by a double-blind observer study. The proposed network achieved structural similarity index measure (SSIM), mean squared error (MSE) and peak signal-to-noise ratio (PSNR) values of $0.85\pm0.08$, $0.01\pm0.01$, and $21.8\pm4.5$, respectively, for translating from 2D ASL and T1-weighted images to PET data. The proposed model is publicly available via https://github.com/yousefis/ASL2PET.
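Two of the image metrics named in the abstract, MSE and PSNR, can be sketched in a few lines of NumPy. This is a generic illustration of the standard definitions, not the evaluation code from the ASL2PET repository; the function names and the `data_range` parameter (the assumed maximum intensity span, which the abstract does not state) are assumptions.

```python
import numpy as np


def mse(reference, estimate):
    """Mean squared error between two images of the same shape."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    return float(np.mean((reference - estimate) ** 2))


def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB.

    data_range is the assumed intensity span of the images
    (1.0 for images normalized to [0, 1]).
    """
    err = mse(reference, estimate)
    if err == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / err))
```

Because PSNR depends on `data_range`, values are only comparable between studies that normalize intensities in the same way.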

An Adaptive Intelligence Algorithm for Undersampled Knee MRI Reconstruction: Application to the 2019 fastMRI Challenge

Apr 15, 2020

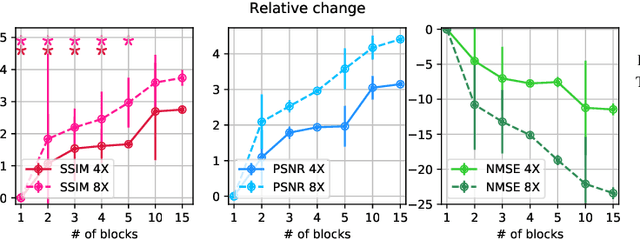

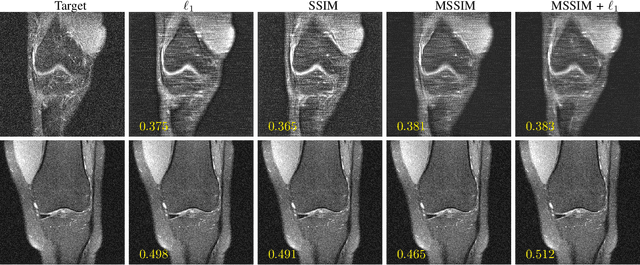

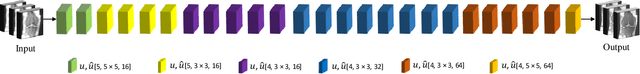

Abstract: Adaptive intelligence aims at empowering machine learning techniques with the additional use of domain knowledge. In this work, we present the application of adaptive intelligence to accelerate MR acquisition. Starting from undersampled k-space data, an iterative learning-based reconstruction scheme inspired by compressed sensing theory is used to reconstruct the images. We adopt deep neural networks to refine and correct prior reconstruction assumptions given the training data. The network was trained and tested on a knee MRI dataset from the 2019 fastMRI challenge organized by Facebook AI Research and NYU Langone Health. All submissions to the challenge were initially ranked based on similarity with a known ground truth, after which the top 4 submissions were evaluated radiologically. Our method was evaluated by the fastMRI organizers on an independent challenge dataset. It ranked #1, shared #1, and #3 on the 8x accelerated multi-coil, 4x multi-coil, and 4x single-coil tracks, respectively. This demonstrates the superior performance and wide applicability of the method.
