Hessah Albanwan

Deep Learning Meets Satellite Images -- An Evaluation on Handcrafted and Learning-based Features for Multi-date Satellite Stereo Images

Sep 04, 2024

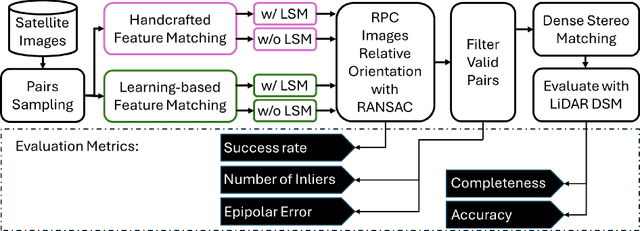

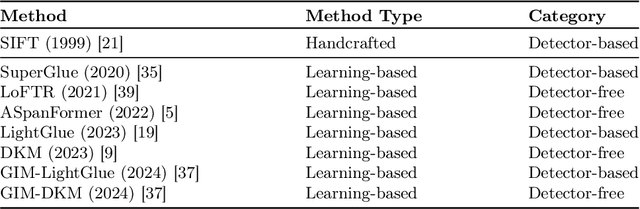

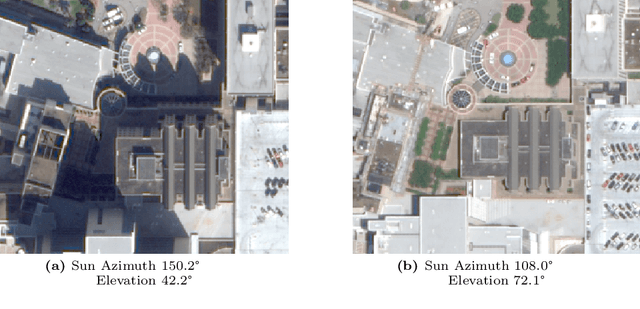

Abstract: Feature matching is a critical step in digital surface model (DSM) generation. Off-track (or multi-date) satellite stereo images, in particular, can challenge the performance of feature matching due to spectral distortions between images, long baselines, and wide intersection angles. Feature matching methods have evolved over the years from handcrafted methods (e.g., SIFT) to learning-based methods (e.g., SuperPoint and SuperGlue). In this paper, we compare the performance of different features, also known as feature extraction and matching methods, applied to satellite imagery. A wide range of stereo pairs (~500) covering two separate study sites is used. SIFT, a widely used classic feature extraction and matching algorithm, is compared with seven deep-learning matching methods: SuperGlue, LightGlue, LoFTR, ASpanFormer, DKM, GIM-LightGlue, and GIM-DKM. Results demonstrate that traditional matching methods are still competitive in this age of deep learning, although learning-based methods are very promising in particular scenarios.
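For readers unfamiliar with handcrafted matching, the sketch below shows the nearest-neighbor ratio test that is commonly paired with SIFT descriptors. It is only an illustration: the abstract does not state the matching settings used in the paper, and the function name `ratio_test_matches`, the `ratio=0.8` default, and the toy 2-D descriptors are assumptions (real SIFT descriptors have 128 dimensions).

```python
import math


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Match descriptors from image A to image B with a ratio test.

    desc_a, desc_b: lists of equal-length numeric tuples (hypothetical
    descriptors). A match (i, j) is kept only if the nearest neighbor j
    is clearly closer than the second-nearest one.
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Euclidean distance from descriptor i to every descriptor in B.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the ratio test needs two candidates
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches
```

An ambiguous descriptor, one that is equally close to two candidates, is rejected rather than matched. This is the behavior one would want on repetitive textures such as rooftops or fields.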

Spatial, Temporal, and Geometric Fusion for Remote Sensing Images

Apr 27, 2024

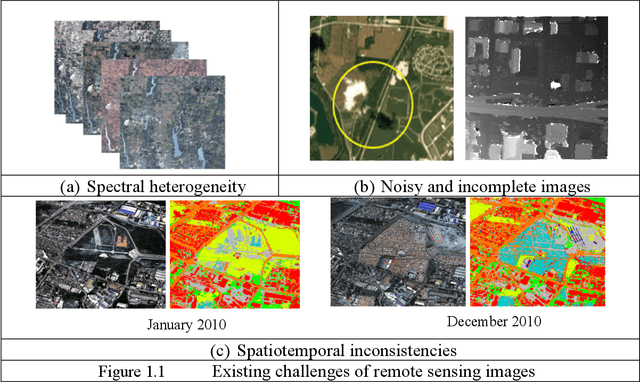

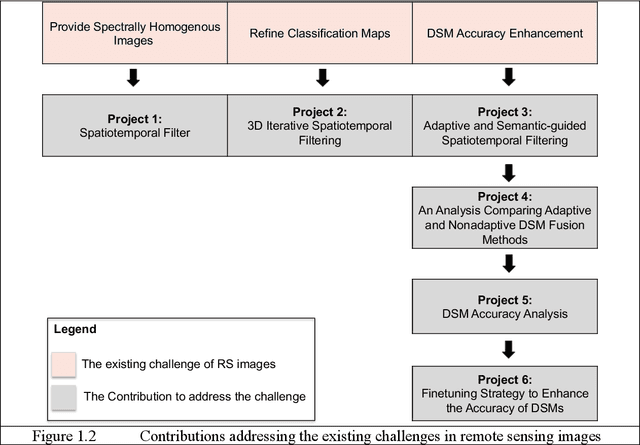

Abstract: Remote sensing (RS) images are important for monitoring and surveying the Earth at varying spatial scales. Continuous observations from various RS sources complement single observations to improve applications. Fusion into single or multiple images provides more informative, accurate, complete, and coherent data. Studies have intensively investigated spatial-temporal fusion for specific applications like pan-sharpening and spatial-temporal fusion for time-series analysis. Fusion methods can process different images, modalities, and tasks and are expected to be robust and adaptive to various types of images (e.g., spectral images, classification maps, and elevation maps) and scene complexities. This work presents solutions to improve existing fusion methods that process gridded data and consider their type-specific uncertainties. The contributions include: 1) A spatial-temporal filter that addresses the spectral heterogeneity of multitemporal images. 2) A 3D iterative spatiotemporal filter that reduces spatiotemporal inconsistencies in classification maps. 3) An adaptive semantic-guided fusion that enhances the accuracy of DSMs, compared against traditional fusion approaches to show the significance of adaptive methods. 4) A comprehensive analysis of DL stereo matching methods against traditional Census-SGM to obtain detailed knowledge of the accuracy of DSMs at the stereo matching level. We analyze the overall performance, robustness, and generalization capability, which helps identify the limitations of current DSM generation methods. 5) Based on this analysis, a novel finetuning strategy that enhances the transferability of DL stereo matching methods and, hence, the accuracy of DSMs. Our work shows the importance of spatial, temporal, and geometric fusion in enhancing RS applications. It shows that the fusion problem is case-specific and depends on the image type, scene content, and application.

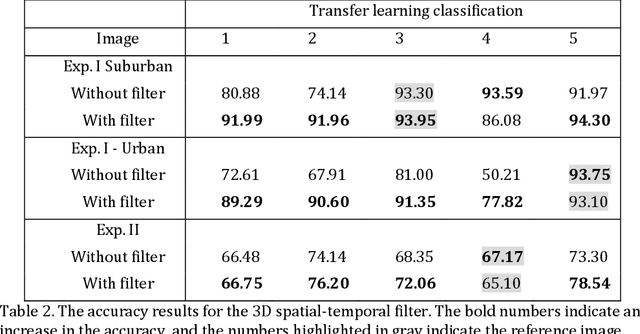

Remote Sensing Image Enhancement through Spatiotemporal Filtering

Apr 27, 2024

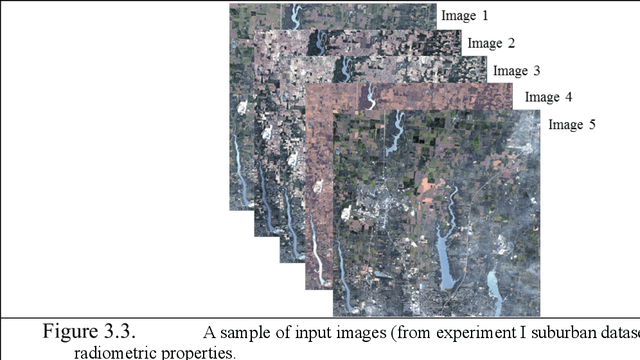

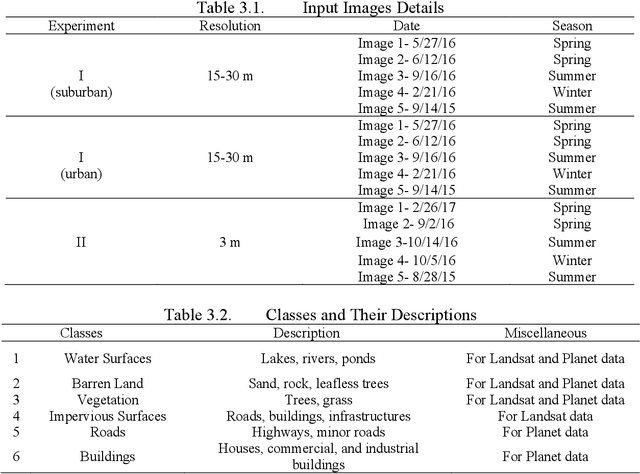

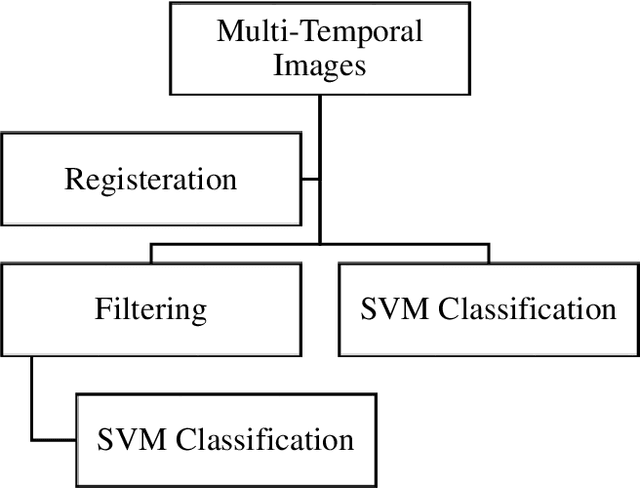

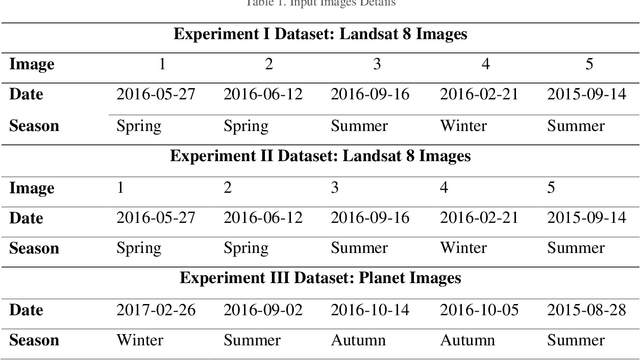

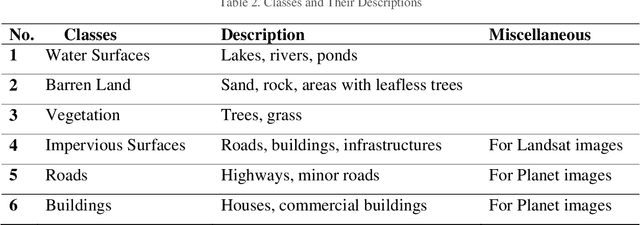

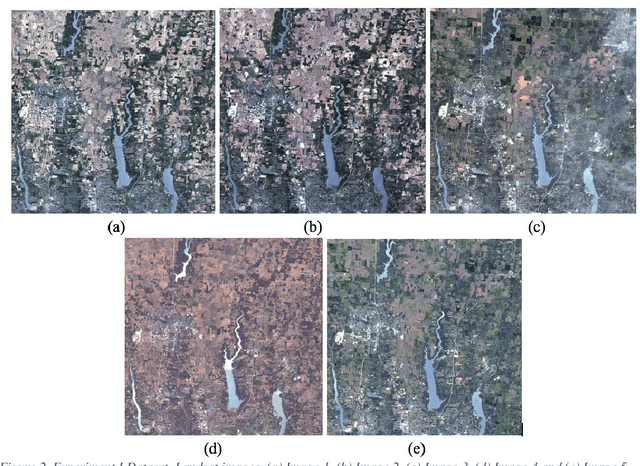

Abstract: The analysis of time-sequence satellite images is a powerful tool in remote sensing; it is used to explore the statics and dynamics of the surface of the earth. Usually, the quality of multitemporal images is influenced by meteorological conditions, high reflectance of surfaces, illumination, and satellite sensor conditions. These negative influences may produce noise and differing radiances and appearances between the images, which can affect the applications that process them. Thus, a spatiotemporal bilateral filter has been adopted in this research to enhance the quality of an image before using it in any application. The filter takes advantage of the temporal information provided by multitemporal images and attempts to reduce the differences between them to improve transfer learning used in classification. The classification method used here is the support vector machine (SVM). Three experiments were conducted in this research: two on Landsat 8 images with low-to-medium resolution, and the third on high-resolution images from the Planet satellite. The newly developed filter enhanced the accuracy of classification using transfer learning by about 5%, 15%, and 2% for the three experiments, respectively.
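The general idea of a bilateral filter that also averages across dates can be sketched as follows. This is a minimal single-band illustration under stated assumptions, not the filter from the paper: the function name `spatiotemporal_bilateral`, the Gaussian weights, and the parameters `radius`, `sigma_s`, and `sigma_r` are all hypothetical choices, and images are plain nested lists to keep the example self-contained.

```python
import math


def spatiotemporal_bilateral(stack, t_ref, radius=1, sigma_s=1.0, sigma_r=10.0):
    """Filter stack[t_ref] using neighbors in space and across all dates.

    stack: list of T co-registered images (lists of rows of numbers).
    Each neighbor is weighted by its spatial distance to the pixel
    (sigma_s) and by its intensity difference to the reference pixel
    (sigma_r), so dissimilar observations contribute little.
    """
    ref = stack[t_ref]
    rows, cols = len(ref), len(ref[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            center = ref[r][c]
            num = den = 0.0
            for img in stack:  # temporal dimension
                for dr in range(-radius, radius + 1):
                    for dc in range(-radius, radius + 1):
                        rr, cc = r + dr, c + dc
                        if not (0 <= rr < rows and 0 <= cc < cols):
                            continue  # skip neighbors outside the image
                        value = img[rr][cc]
                        w_space = math.exp(-(dr * dr + dc * dc) / (2 * sigma_s ** 2))
                        w_range = math.exp(-((value - center) ** 2) / (2 * sigma_r ** 2))
                        num += w_space * w_range * value
                        den += w_space * w_range
            # den >= 1 because the reference pixel itself has weight 1.
            out[r][c] = num / den
    return out
```

With a small `sigma_r`, pixels whose radiance differs strongly from the reference date are effectively ignored, which keeps real changes from being blurred away. A larger `sigma_r` pulls the dates closer together.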

Image Fusion in Remote Sensing: An Overview and Meta Analysis

Jan 16, 2024

Abstract: Image fusion in Remote Sensing (RS) has been in consistent demand due to its ability to turn raw images of different resolutions, sources, and modalities into accurate, complete, and spatio-temporally coherent images. It greatly facilitates downstream applications such as pan-sharpening, change detection, and land-cover classification. Yet, image fusion solutions vary widely across remote sensing problems and thus are often narrowly defined in existing reviews as topical applications, such as pan-sharpening and spatial-temporal image fusion. Considering that image fusion can theoretically be applied to any gridded data through pixel-level operations, in this paper we expand its scope by comprehensively surveying relevant works with a simple taxonomy: 1) many-to-one image fusion; 2) many-to-many image fusion. This simple taxonomy defines image fusion as a mapping problem that turns either a single image or a set of images into another single image or set of images, depending on the desired coherence, e.g., spectral or spatial/resolution coherence. We show that this simple taxonomy, despite the significant modality difference it covers, can be presented by a conceptually easy framework. In addition, we provide a meta-analysis to review the major papers studying the various types of image fusion and their applications over the years (from the 1980s to date), covering 5,926 peer-reviewed papers. Finally, we discuss the main benefits and emerging challenges to provide open research directions and potential future works.

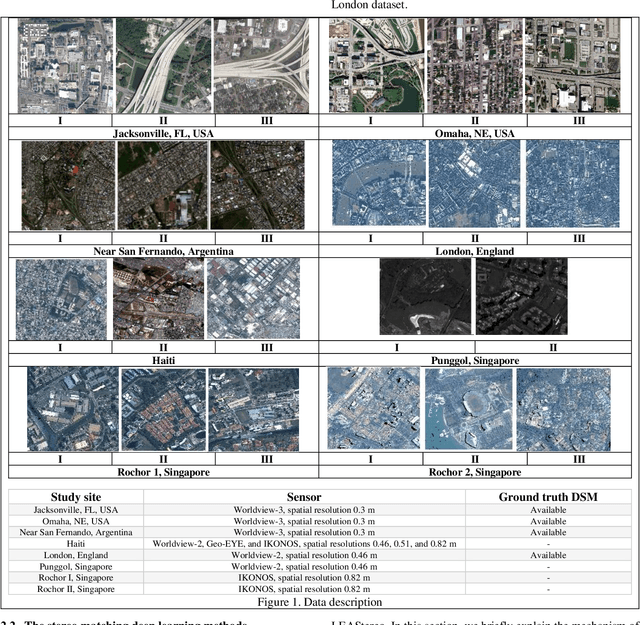

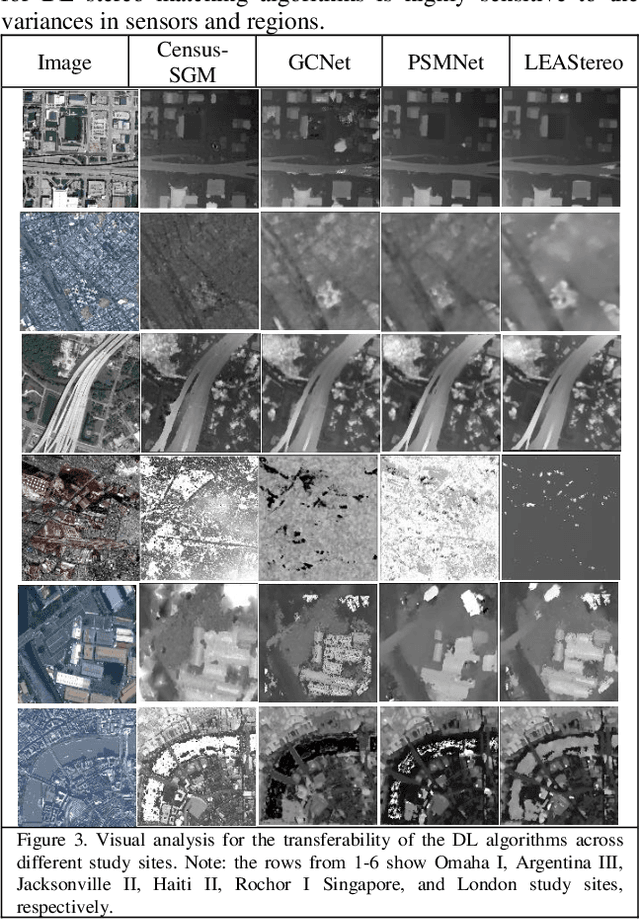

A Comparative Study on Deep-Learning Methods for Dense Image Matching of Multi-angle and Multi-date Remote Sensing Stereo Images

Oct 25, 2022

Abstract: Deep learning (DL) stereo matching methods have gained great attention in remote sensing satellite datasets. However, most existing studies base their assessments on only one or a few stereo images and lack a systematic evaluation of how robust DL methods are on satellite stereo images with varying radiometric and geometric configurations. This paper provides an evaluation of four DL stereo matching methods through hundreds of multi-date multi-site satellite stereo pairs with varying geometric configurations, against the traditional well-practiced Census-SGM (semi-global matching), to comprehensively understand their accuracy, robustness, generalization capabilities, and practical potential. The DL methods include a learning-based cost metric through convolutional neural networks (MC-CNN) followed by SGM, and three end-to-end (E2E) learning models: Geometry and Context Network (GCNet), Pyramid Stereo Matching Network (PSMNet), and LEAStereo. Our experiments show that E2E algorithms can reach the upper limits of geometric accuracy but may not generalize well to unseen data. The learning-based cost metric and Census-SGM are rather robust and can consistently achieve acceptable results. All DL algorithms are robust to the geometric configurations of stereo pairs and are less sensitive to them than Census-SGM, while learning-based cost metrics can generalize to satellite images when trained on different datasets (airborne or ground-view).
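As background on the Census-SGM baseline, the Census matching cost can be sketched in a few lines. The example below is a generic illustration of the Census transform and its Hamming-distance cost, not the implementation evaluated in the paper. The function names, the 3x3 default window, and the border handling (clamping to the nearest valid pixel) are assumptions.

```python
def census_transform(img, radius=1):
    """Encode each pixel as a bit string of neighbor-vs-center comparisons.

    img: list of rows of numbers. A bit is 1 where the neighbor is
    darker than the center pixel. Border neighbors are clamped to the
    nearest valid pixel (an assumption made for this sketch).
    """
    rows, cols = len(img), len(img[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            bits = 0
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    if dr == 0 and dc == 0:
                        continue  # the center is not compared with itself
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    bits = (bits << 1) | int(img[rr][cc] < img[r][c])
            out[r][c] = bits
    return out


def hamming_cost(a, b):
    """Matching cost between two Census codes: number of differing bits."""
    return bin(a ^ b).count("1")
```

Because only the ordering of intensities matters, the codes are unchanged by any monotonically increasing brightness change. That property is one reason Census-based costs are commonly regarded as tolerant of radiometric differences between images.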

A Review of Mobile Mapping Systems: From Sensors to Applications

May 31, 2022

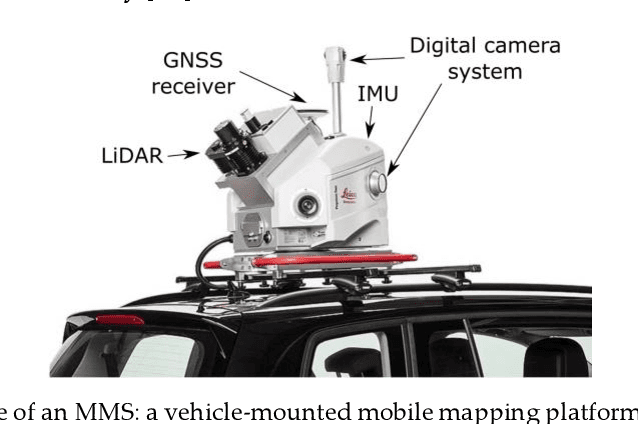

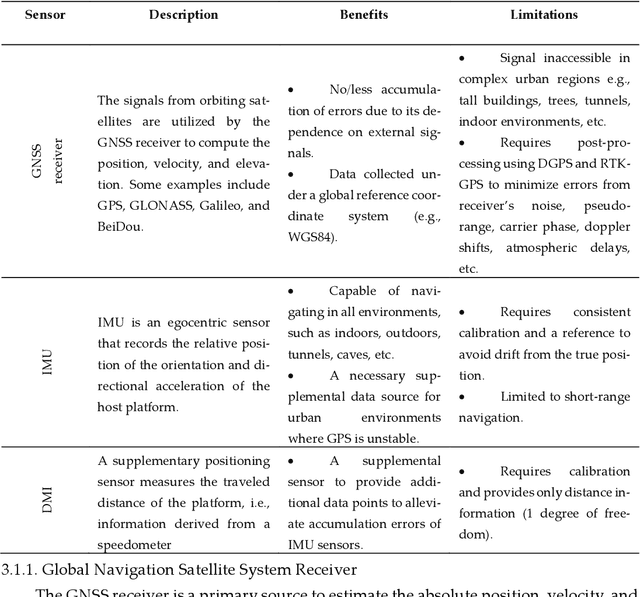

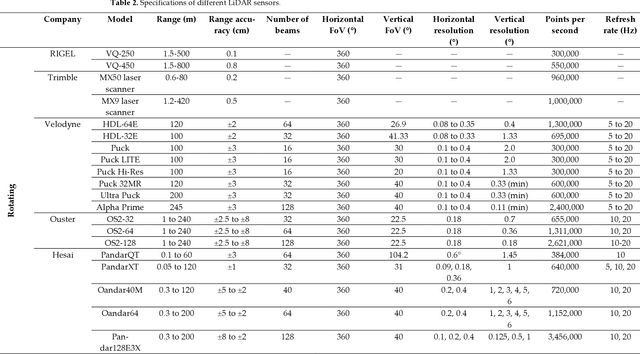

Abstract: The evolution of mobile mapping systems (MMSs) has gained more attention in the past few decades. MMSs have been widely used to provide valuable assets in different applications. This has been facilitated by the wide availability of low-cost sensors, advances in computational resources, the maturity of mapping algorithms, and the need for accurate and on-demand geographic information system (GIS) data and digital maps. Many MMSs combine hybrid sensors that complement each other to provide a more informative, robust, and stable solution. In this paper, we present a comprehensive review of modern MMSs by focusing on 1) the types of sensors and platforms, discussing their capabilities and limitations and providing a comprehensive overview of recent MMS technologies available in the market; 2) the general workflow to process MMS data; 3) the different use cases of mobile mapping technology, reviewing some common applications; and 4) a discussion of the benefits and challenges, together with our views on potential research directions.

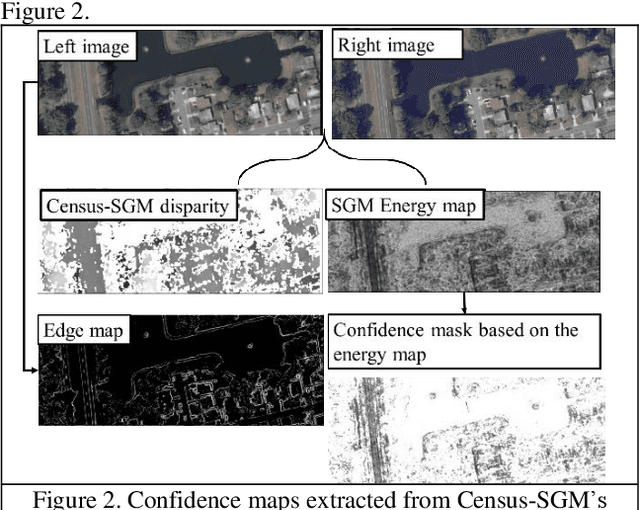

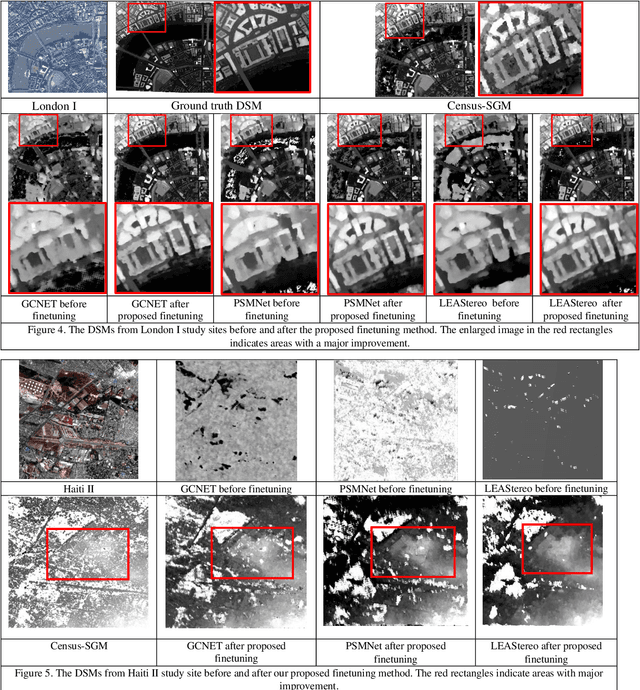

Fine-tuning deep learning models for stereo matching using results from semi-global matching

May 27, 2022

Abstract: Deep learning (DL) methods are widely investigated for stereo image matching tasks due to their reported high accuracies. However, their transferability/generalization capabilities are limited by the instances seen in the training data. With satellite images covering large-scale areas with variances in locations, content, land covers, and spatial patterns, we expect their performance to be impacted. Increasing the number and diversity of training data is always an option, but because ground-truth disparity is limited in remote sensing due to its high cost, it is almost impossible to obtain the ground truth for all locations. Knowing that classical stereo matching methods such as Census-based semi-global matching (SGM) are widely adopted to process different types of stereo data, we therefore propose a finetuning method that takes advantage of disparity maps derived from SGM on target stereo data. Our proposed method adopts a simple scheme that uses the energy map derived from the SGM algorithm to select high-confidence disparity measurements, at the same time using the images to limit these selected disparity measurements to texture-rich regions. Our approach aims to investigate the possibility of improving the transferability of current DL methods to unseen target data without requiring their ground truth. To perform a comprehensive study, we select 20 study sites around the world to cover a variety of complexities and densities. We choose well-established DL methods, namely the geometry and context network (GCNet), pyramid stereo matching network (PSMNet), and LEAStereo, for evaluation. Our results indicate an improvement in the transferability of the DL methods across different regions, both visually and numerically.
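The selection scheme described in the abstract, keeping SGM disparities only where the energy indicates high confidence and the image is textured, might look roughly like the sketch below. Everything specific here is an assumption for illustration: the abstract gives no thresholds or texture measure, so `max_energy`, `min_texture`, the reading of low energy as high confidence, and the simple gradient-magnitude texture score are hypothetical.

```python
def select_pseudo_labels(disparity, energy, image, max_energy, min_texture):
    """Keep SGM disparities that are both confident and well textured.

    disparity, energy, image: same-sized lists of rows of numbers.
    Returns a grid holding the disparity where it is kept and None
    elsewhere, so a training loss can skip the unlabeled pixels.
    Assumes lower energy means higher confidence.
    """
    rows, cols = len(image), len(image[0])
    labels = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Simple texture score: sum of absolute horizontal and
            # vertical intensity differences, clamped at the borders.
            gx = image[r][min(c + 1, cols - 1)] - image[r][max(c - 1, 0)]
            gy = image[min(r + 1, rows - 1)][c] - image[max(r - 1, 0)][c]
            texture = abs(gx) + abs(gy)
            if energy[r][c] <= max_energy and texture >= min_texture:
                labels[r][c] = disparity[r][c]
    return labels
```

The resulting sparse labels could then serve as supervision when finetuning a network on the target area, with the loss evaluated only at pixels that are not `None`.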

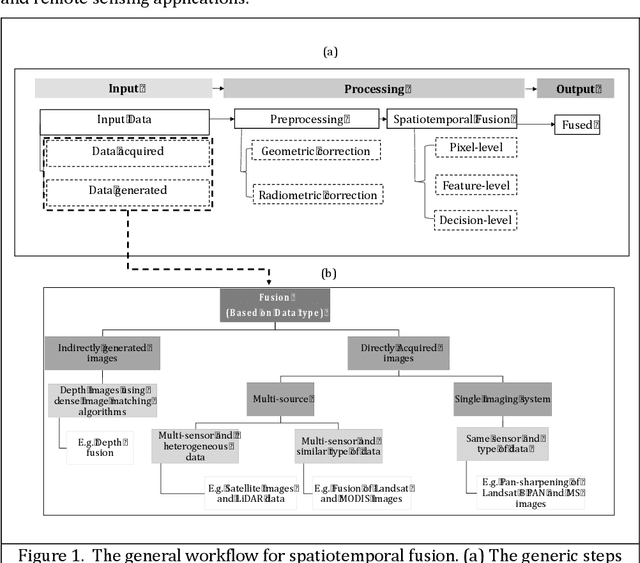

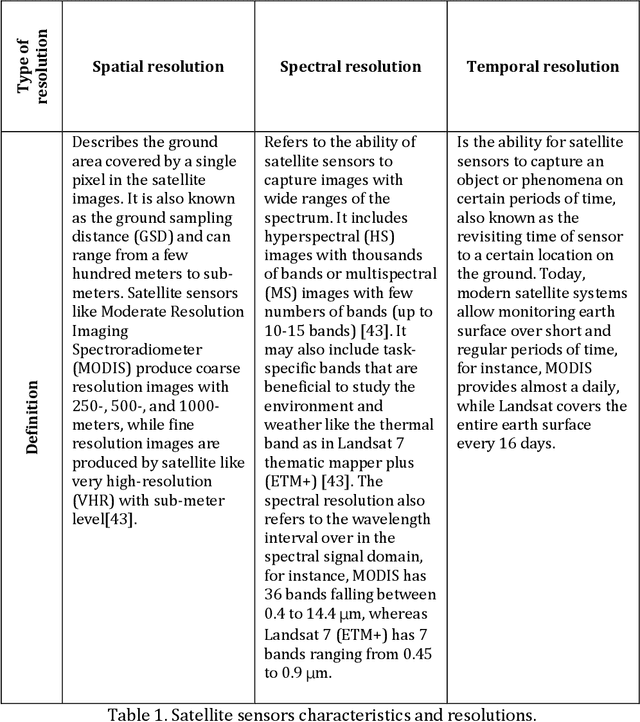

Spatiotemporal Fusion in Remote Sensing

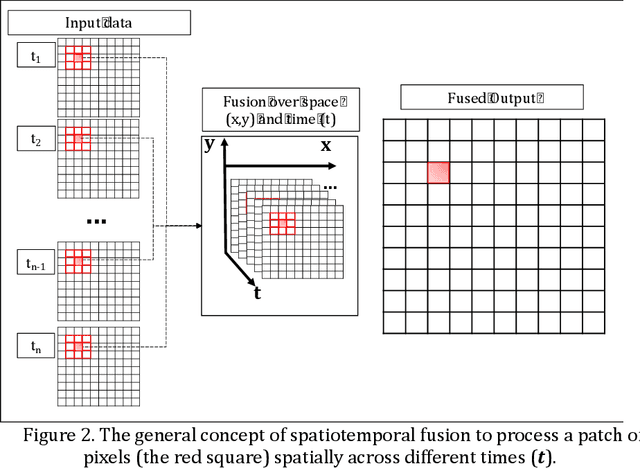

Jul 06, 2021

Abstract: Remote sensing images and techniques are powerful tools for investigating the Earth's surface. Data quality is the key to enhancing remote sensing applications, yet obtaining a clear and noise-free set of data is very difficult in most situations due to the varying acquisition (e.g., atmosphere and season), sensor, and platform (e.g., satellite angles and sensor characteristics) conditions. With the increasing development of satellites, terabytes of remote sensing images can now be acquired every day. Therefore, information and data fusion can be particularly important in the remote sensing community. Fusion integrates data from various sources acquired asynchronously for information extraction, analysis, and quality improvement. In this chapter, we discuss the theory of spatiotemporal fusion by investigating previous works, and we describe the basic concepts and some of its applications by summarizing our prior and ongoing works.

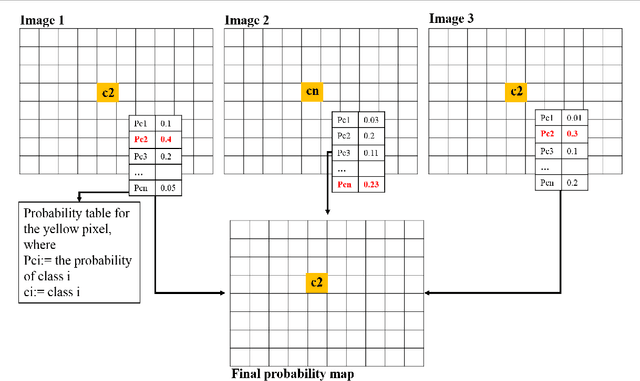

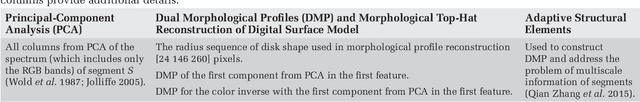

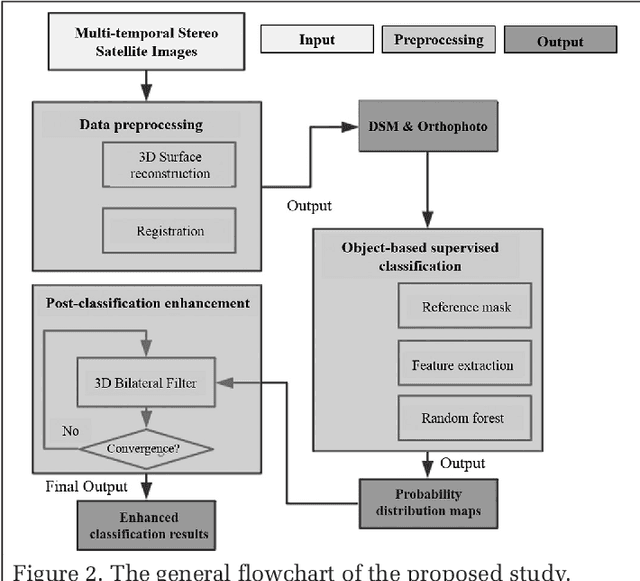

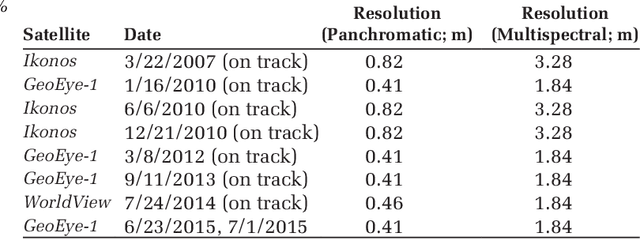

3D Iterative Spatiotemporal Filtering for Classification of Multitemporal Satellite Data Sets

Jul 01, 2021

Abstract: The current practice in land cover/land use change analysis relies heavily on the individually classified maps of the multitemporal data set. Due to varying acquisition conditions (e.g., illumination, sensors, seasonal differences), the resulting classification maps are often too inconsistent through time for robust statistical analysis. 3D geometric features have been shown to be stable for assessing differences across the temporal data set. Therefore, in this article we investigate the use of multitemporal orthophotos and digital surface models derived from satellite data for spatiotemporal classification. Our approach consists of two major steps: generating per-class probability distribution maps using the random-forest classifier with limited training samples, and making spatiotemporal inferences using an iterative 3D spatiotemporal filter operating on the per-class probability maps. Our experimental results demonstrate that the proposed methods can consistently improve the individual classification results by 2%-6% and thus can be an important postclassification refinement approach.
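The second step, filtering per-class probabilities across dates with weights driven by 3D information, can be illustrated for a single pixel as follows. This is a deliberately reduced sketch and not the filter from the article: it omits the spatial neighborhood entirely, and the Gaussian height-similarity weight, `sigma_h`, the iteration count, and both function names are assumptions.

```python
import math


def temporal_filter_probs(probs, heights, sigma_h=1.0, iterations=3):
    """Iteratively smooth one pixel's class probabilities across dates.

    probs[t][k]: probability of class k at date t (e.g., from a
    random-forest classifier). heights[t]: DSM height at date t.
    Dates with similar heights share information; dates whose height
    differs strongly (a likely real change) barely influence each other.
    """
    n_dates, n_classes = len(probs), len(probs[0])
    current = [list(p) for p in probs]
    for _ in range(iterations):
        updated = []
        for t in range(n_dates):
            weights = [
                math.exp(-((heights[t] - heights[s]) ** 2) / (2 * sigma_h ** 2))
                for s in range(n_dates)
            ]
            total = sum(weights)  # >= 1, since the weight for s == t is 1
            updated.append([
                sum(weights[s] * current[s][k] for s in range(n_dates)) / total
                for k in range(n_classes)
            ])
        current = updated
    return current


def hard_labels(probs):
    """Pick the most probable class index at each date."""
    return [max(range(len(p)), key=p.__getitem__) for p in probs]
```

For example, a pixel whose height stays constant over three dates and then rises sharply keeps its final-date label, while an isolated misclassification among the first three dates is voted out by its height-consistent neighbors in time.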
