Mostafa Elhashash

Select-and-Combine: A Novel Multi-Stereo Depth Fusion Algorithm for Point Cloud Generation via Efficient Local Markov Netlets

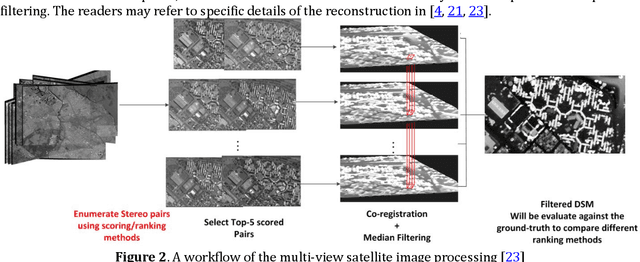

Aug 23, 2023

Abstract: Many practical systems for image-based surface reconstruction employ a stereo/multi-stereo paradigm, due to its ability to scale to large scenes and its ease of implementation for out-of-core operations. In this process, multiple and abundant depth maps from stereo matching must be combined and fused into a single, consistent, and clean point cloud. However, the noise and outliers caused by stereo matching and the heterogeneous geometric errors of the poses present a challenge for existing fusion algorithms, since they mostly assume Gaussian errors and predict fused results based on data from local spatial neighborhoods, which may inherit uncertainties from multiple depths, resulting in lowered accuracy. In this paper, we propose a novel depth fusion paradigm that, instead of numerically fusing points from multiple depth maps, selects the best depth map per point and combines them into a single, clean point cloud. This paradigm, called select-and-combine (SAC), is achieved by modeling the point-level fusion using local Markov Netlets, micro-networks over points across neighboring views for depth/view selection, followed by a Netlets collapse process for point combination. The Markov Netlets are optimized such that they inherently leverage spatial consistencies among depth maps of neighboring views, so they can address errors beyond Gaussian ones. Our experimental results show that our approach outperforms existing depth fusion approaches, increasing the F1 score, which considers both accuracy and completeness, by 2.07% compared to the best existing method. Finally, our approach generates clearer point clouds that are 18% less redundant while having a higher accuracy before fusion.
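To make the contrast between numerically fusing depths and selecting one of them concrete, here is a minimal toy sketch. It is not the Markov Netlet optimization described in the abstract: it swaps in a simple consensus vote, and the function names, the tolerance `tol`, and the sample depths are all hypothetical.

```python
from statistics import mean


def average_fusion(depths):
    """Numerically fuse candidate depths by averaging; outliers leak into the result."""
    return mean(depths)


def select_by_consensus(depths, tol=0.05):
    """Select the single candidate that the most candidates agree with.

    Hypothetical stand-in for depth/view selection: two candidates "agree"
    when their absolute difference is within `tol` (same units as the depths).
    """
    def support(d):
        # Count the candidates (including d itself) that lie within tol of d.
        return sum(1 for other in depths if abs(other - d) <= tol)

    # max() returns the first candidate with the highest support.
    return max(depths, key=support)


# Depth of one surface point as seen from four neighboring views (assumed values);
# the last view is an outlier from a stereo mismatch.
candidates = [10.02, 9.98, 10.01, 14.50]

fused = average_fusion(candidates)          # pulled toward the outlier
selected = select_by_consensus(candidates)  # one of the original candidates
```

In this toy case the averaged depth is dragged away from the three consistent observations, while the selected depth is always one of the measured values, so a non-Gaussian outlier cannot contaminate it.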

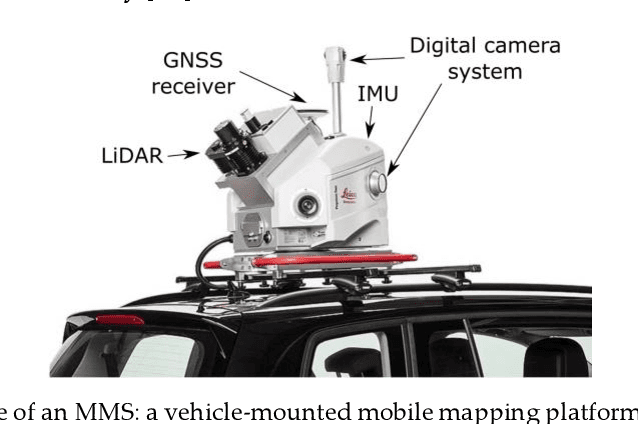

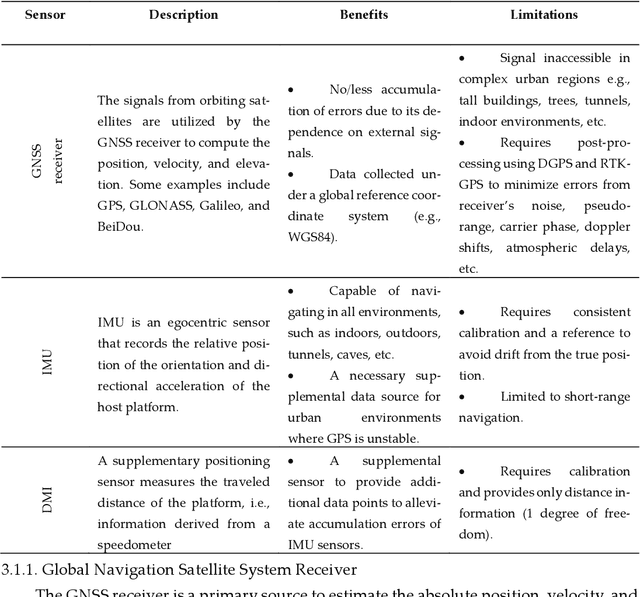

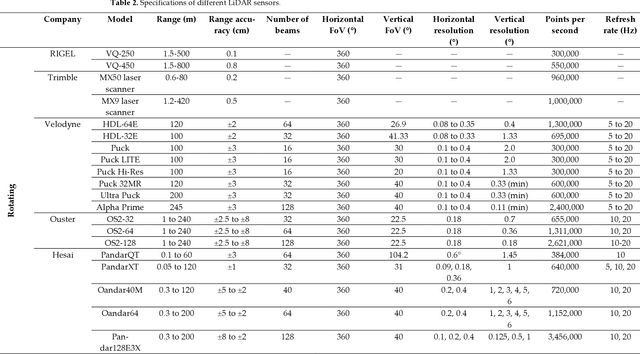

A Review of Mobile Mapping Systems: From Sensors to Applications

May 31, 2022

Abstract: The evolution of mobile mapping systems (MMSs) has gained more attention in the past few decades. MMSs have been widely used to provide valuable assets in different applications. This has been facilitated by the wide availability of low-cost sensors, the advances in computational resources, the maturity of the mapping algorithms, and the need for accurate and on-demand geographic information system (GIS) data and digital maps. Many MMSs combine hybrid sensors that complement each other to provide a more informative, robust, and stable solution. In this paper, we present a comprehensive review of modern MMSs by 1) discussing the types of sensors and platforms, including their capabilities and limitations, and providing a comprehensive overview of recent MMS technologies available on the market; 2) highlighting the general workflow for processing MMS data; 3) identifying the different use cases of mobile mapping technology by reviewing some common applications; and 4) discussing the benefits and challenges and sharing our views on potential research directions.

Constrained Bundle Adjustment for Structure From Motion Using Uncalibrated Multi-Camera Systems

Apr 08, 2022

Abstract: Structure from motion using uncalibrated multi-camera systems is a challenging task. This paper proposes a bundle adjustment solution that implements a baseline constraint, respecting the fact that these cameras are static relative to each other. We assume these cameras are mounted on a mobile platform, uncalibrated, and coarsely synchronized. To this end, we propose a baseline constraint formulated for the scenario in which the cameras have overlapping views. The constraint is incorporated into the bundle adjustment solution to keep the relative motion of the different cameras static. Experiments were conducted using video frames from two collocated GoPro cameras mounted on a vehicle with no system calibration. The two cameras were placed so that they captured overlapping content. We performed our bundle adjustment using the proposed constraint and then produced dense 3D point clouds. Evaluations were performed by comparing these dense point clouds against LiDAR reference data. We showed that, compared to traditional bundle adjustment, our proposed method achieved an improvement of 29.38%.
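As a rough illustration of what a baseline constraint can look like as an extra cost term, the sketch below penalizes frame-to-frame variation in the distance between the two camera centers. The abstract does not give the paper's formulation, so this is an assumption: the function names, the `weight` parameter, and the sample coordinates are hypothetical, and a full rigidity constraint would also cover relative rotation.

```python
import math


def baseline_residuals(centers_a, centers_b):
    """Per-frame deviation of the camera-to-camera distance from its mean.

    centers_a[i] and centers_b[i] are the estimated 3D centers of the two
    cameras at frame i. For a rigid rig all residuals should be near zero.
    """
    lengths = [math.dist(a, b) for a, b in zip(centers_a, centers_b)]
    mean_length = sum(lengths) / len(lengths)
    return [length - mean_length for length in lengths]


def baseline_penalty(centers_a, centers_b, weight=1.0):
    """Weighted sum of squared baseline residuals, to be added to the
    reprojection error that bundle adjustment already minimizes."""
    residuals = baseline_residuals(centers_a, centers_b)
    return weight * sum(r * r for r in residuals)


# Camera A moves along x; camera B should stay 0.5 units to its side.
cam_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
rigid_b = [(0.0, 0.5, 0.0), (1.0, 0.5, 0.0), (2.0, 0.5, 0.0)]
drift_b = [(0.0, 0.5, 0.0), (1.0, 0.5, 0.0), (2.0, 0.7, 0.0)]

rigid_cost = baseline_penalty(cam_a, rigid_b)  # consistent baseline
drift_cost = baseline_penalty(cam_a, drift_b)  # baseline drifts in the last frame
```

A pose estimate in which the two cameras drift apart receives a higher cost than one with a consistent baseline, which is the behavior such a constraint is meant to encourage during optimization.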

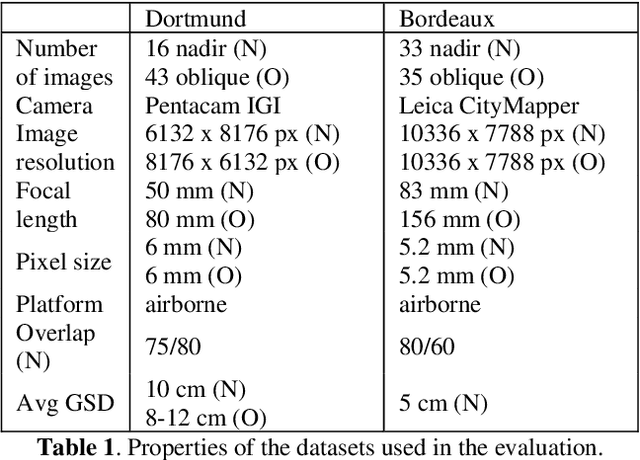

Investigating Spherical Epipolar Rectification for Multi-View Stereo 3D Reconstruction

Apr 08, 2022

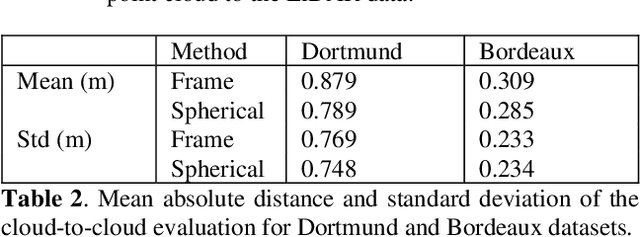

Abstract: Multi-view stereo (MVS) reconstruction is essential for creating 3D models. The approach involves applying epipolar rectification followed by dense matching for disparity estimation. However, existing approaches face challenges in applying dense matching to images with different viewpoints, primarily due to large differences in object scale. In this paper, we propose a spherical model for epipolar rectification to minimize distortions caused by differences in principal rays. We evaluate the proposed approach using two aerial datasets captured with multi-camera head systems. We show through qualitative and quantitative evaluation that the proposed approach performs better than frame-based epipolar correction, enhancing the completeness of point clouds by up to 4.05% while improving the accuracy by up to 10.23%, using LiDAR data as ground truth.

3D Reconstruction through Fusion of Cross-View Images

Jun 27, 2021

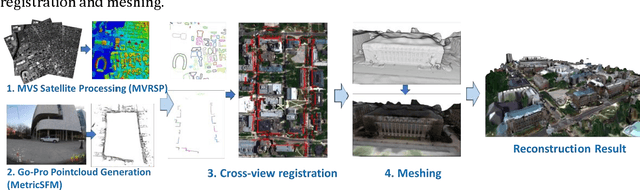

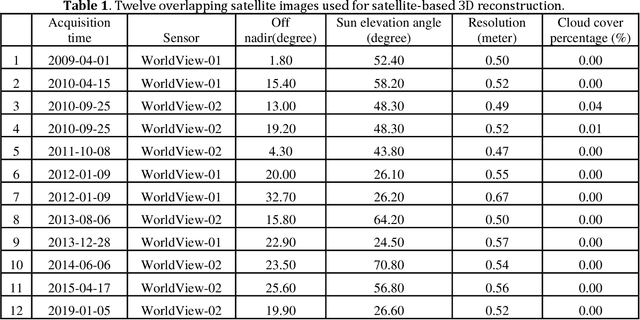

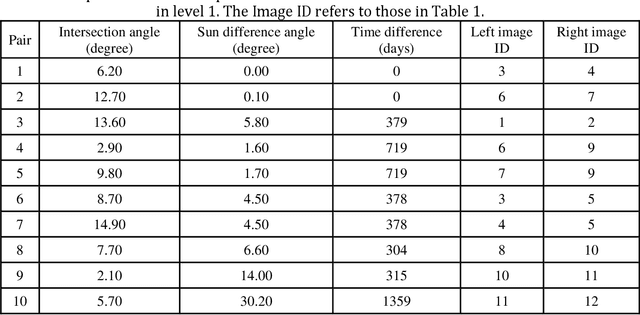

Abstract: 3D recovery from multi-stereo and stereo images, as an important application of image-based perspective geometry, serves many applications in computer vision, remote sensing, and geomatics. In this chapter, the authors utilize the imaging geometry and present approaches that perform 3D reconstruction from cross-view images that are drastically different in their viewpoints. We introduce our framework, which takes ground-view images and satellite images for full 3D recovery and includes the necessary methods for satellite and ground-based point cloud generation from images, 3D data co-registration, fusion, and mesh generation. We demonstrate our proposed framework and its reconstruction results on a dataset consisting of twelve satellite images and 150k video frames acquired with a vehicle-mounted GoPro camera. We have also compared our results with results generated from an intuitive processing pipeline that involves typical geo-registration and meshing methods.
