Spatial, Temporal, and Geometric Fusion for Remote Sensing Images


Apr 27, 2024

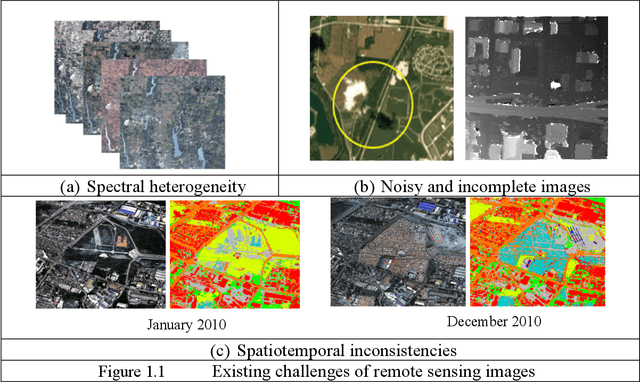

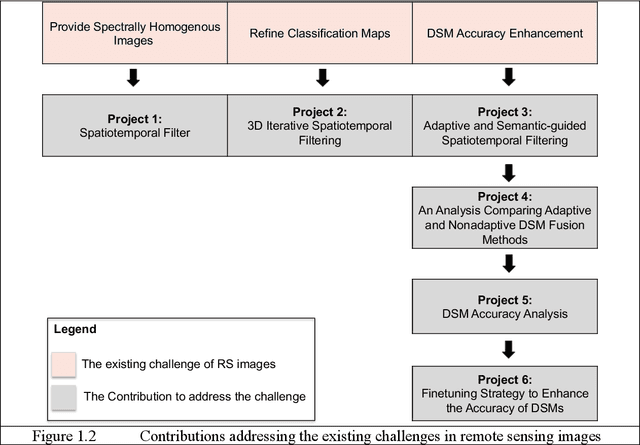

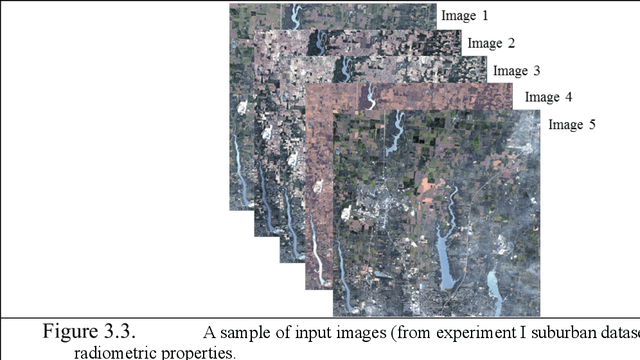

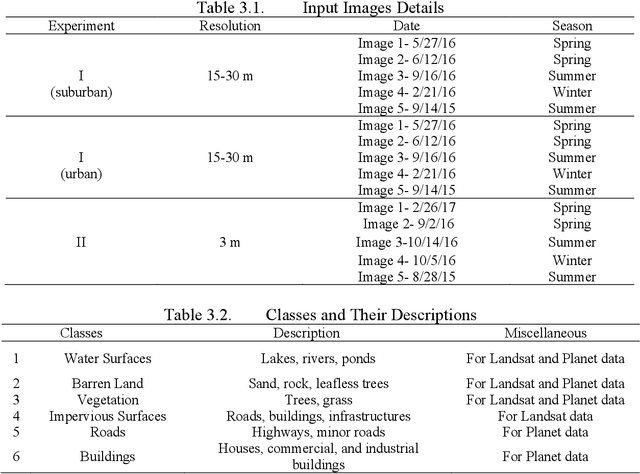

Remote sensing (RS) images are important for monitoring and surveying the Earth at varying spatial scales. Continuous observations from various RS sources complement single observations and improve downstream applications. Fusing them into single or multiple images provides more informative, accurate, complete, and coherent data. Prior studies have intensively investigated fusion for specific applications, such as pan-sharpening and spatial-temporal fusion for time-series analysis. Fusion methods, however, must handle different images, modalities, and tasks, and are expected to be robust and adaptive to various image types (e.g., spectral images, classification maps, and elevation maps) and scene complexities. This work presents solutions that improve existing fusion methods for gridded data by accounting for their type-specific uncertainties. The contributions are: 1) A spatial-temporal filter that addresses the spectral heterogeneity of multitemporal images. 2) A 3D iterative spatiotemporal filter that reduces spatiotemporal inconsistencies in classification maps. 3) An adaptive semantic-guided fusion method that improves the accuracy of digital surface models (DSMs), compared against traditional fusion approaches to show the significance of adaptive methods. 4) A comprehensive analysis of deep learning (DL) stereo matching methods against traditional Census-SGM, giving detailed knowledge of DSM accuracy at the stereo matching level. The analysis covers overall performance, robustness, and generalization capability, which helps identify the limitations of current DSM generation methods. 5) Building on this analysis, a novel fine-tuning strategy that enhances the transferability of DL stereo matching methods and, in turn, the accuracy of DSMs. Our work shows the importance of spatial, temporal, and geometric fusion in enhancing RS applications. It also shows that the fusion problem is case-specific and depends on the image type, scene content, and application.
