He Yu

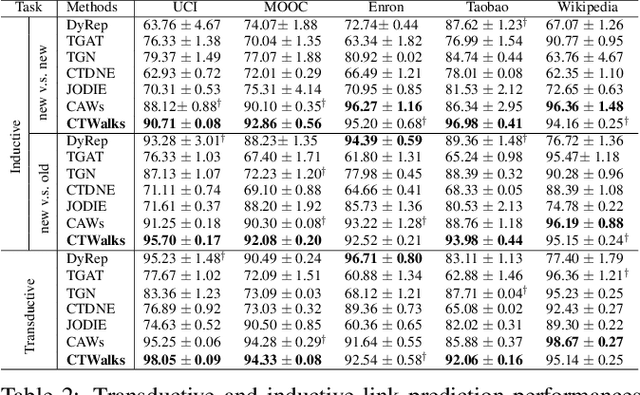

Community-Aware Temporal Walks: Parameter-Free Representation Learning on Continuous-Time Dynamic Graphs

Jan 21, 2025

Abstract: Dynamic graph representation learning plays a crucial role in understanding evolving behaviors. However, existing methods often struggle with flexibility, adaptability, and the preservation of temporal and structural dynamics. To address these issues, we propose Community-aware Temporal Walks (CTWalks), a novel framework for representation learning on continuous-time dynamic graphs. CTWalks integrates three key components: a community-based parameter-free temporal walk sampling mechanism, an anonymization strategy enriched with community labels, and an encoding process that leverages continuous temporal dynamics modeled via ordinary differential equations (ODEs). This design enables precise modeling of both intra- and inter-community interactions, offering a fine-grained representation of evolving temporal patterns in continuous-time dynamic graphs. CTWalks theoretically overcomes locality bias in walks and establishes its connection to matrix factorization. Experiments on benchmark datasets demonstrate that CTWalks outperforms established methods in temporal link prediction tasks, achieving higher accuracy while maintaining robustness.

Two Layer Walk: A Community-Aware Graph Embedding

Dec 18, 2024

Abstract: Community structures are critical for understanding the mesoscopic organization of networks, bridging local and global patterns. While methods such as DeepWalk and node2vec capture local positional information through random walks, they fail to preserve community structures. Other approaches like modularized nonnegative matrix factorization and evolutionary algorithms address this gap but are computationally expensive and unsuitable for large-scale networks. To overcome these limitations, we propose Two Layer Walk (TLWalk), a novel graph embedding algorithm that incorporates hierarchical community structures. TLWalk balances intra- and inter-community relationships through a community-aware random walk mechanism without requiring additional parameters. Theoretical analysis demonstrates that TLWalk effectively mitigates locality bias. Experiments on benchmark datasets show that TLWalk outperforms state-of-the-art methods, achieving up to 3.2% accuracy gains for link prediction tasks. By encoding dense local and sparse global structures, TLWalk proves robust and scalable across diverse networks, offering an efficient solution for network analysis.

Deep Insights into Automated Optimization with Large Language Models and Evolutionary Algorithms

Oct 28, 2024

Abstract: Designing optimization approaches, whether heuristic or meta-heuristic, usually demands extensive manual intervention and has difficulty generalizing across diverse problem domains. The combination of Large Language Models (LLMs) and Evolutionary Algorithms (EAs) offers a promising new approach to overcome these limitations and make optimization more automated. In this setup, LLMs act as dynamic agents that can generate, refine, and interpret optimization strategies, while EAs efficiently explore complex solution spaces through evolutionary operators. Since this synergy enables a more efficient and creative search process, we first conduct an extensive review of recent research on the application of LLMs in optimization. We focus on LLMs' dual functionality as solution generators and algorithm designers. Then, we summarize the common and valuable designs in existing work and propose a novel LLM-EA paradigm for automated optimization. Furthermore, centered on this paradigm, we conduct an in-depth analysis of innovative methods for three key components: individual representation, variation operators, and fitness evaluation. We address challenges related to heuristic generation and solution exploration, especially from the LLM prompts' perspective. Our systematic review and thorough analysis of the paradigm can assist researchers in better understanding the current research and promoting the development of combining LLMs with EAs for automated optimization.

AutoRNet: Automatically Optimizing Heuristics for Robust Network Design via Large Language Models

Oct 23, 2024

Abstract: Achieving robust networks is a challenging problem due to its NP-hard nature and complex solution space. Current methods, from handcrafted feature extraction to deep learning, have made progress but remain rigid, requiring manual design and large labeled datasets. To address these issues, we propose AutoRNet, a framework that integrates large language models (LLMs) with evolutionary algorithms to generate heuristics for robust network design. We design network optimization strategies to provide domain-specific prompts for LLMs, utilizing domain knowledge to generate advanced heuristics. Additionally, we introduce an adaptive fitness function to balance convergence and diversity while maintaining degree distributions. AutoRNet is evaluated on sparse and dense scale-free networks, outperforming current methods by reducing the need for manual design and large datasets.

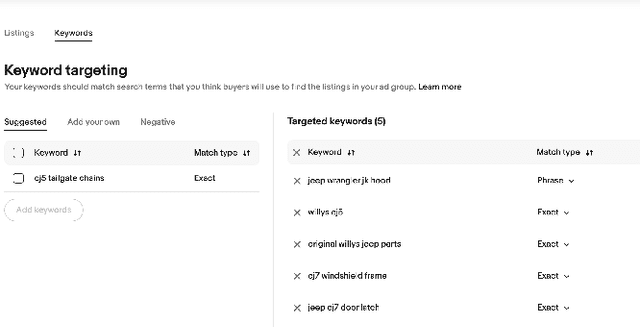

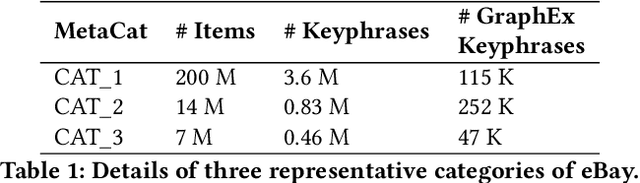

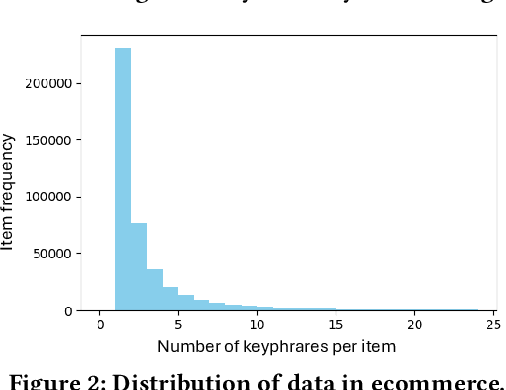

GraphEx: A Graph-based Extraction Method for Advertiser Keyphrase Recommendation

Sep 05, 2024

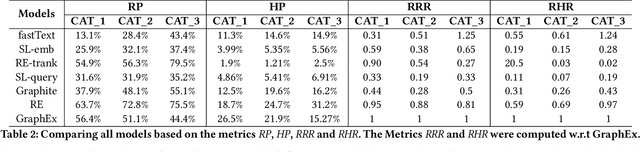

Abstract: Online sellers and advertisers are recommended keyphrases for their listed products, which they bid on to enhance their sales. One popular paradigm that generates such recommendations is Extreme Multi-Label Classification (XMC), which involves tagging/mapping keyphrases to items. We outline the limitations of using traditional item-query based tagging or mapping techniques for keyphrase recommendations on E-Commerce platforms. We introduce GraphEx, an innovative graph-based approach that recommends keyphrases to sellers using extraction of token permutations from item titles. Additionally, we demonstrate that relying on traditional metrics such as precision/recall can be misleading in practical applications, thereby necessitating a combination of metrics to evaluate performance in real-world scenarios. These metrics are designed to assess the relevance of keyphrases to items and the potential for buyer outreach. GraphEx outperforms production models at eBay, achieving the objectives mentioned above. It supports near real-time inferencing in resource-constrained production environments and scales effectively for billions of items.

Exploring Knowledge Transfer in Evolutionary Many-task Optimization: A Complex Network Perspective

Jul 12, 2024

Abstract: The field of evolutionary many-task optimization (EMaTO) is increasingly recognized for its ability to streamline the resolution of optimization challenges with repetitive characteristics, thereby conserving computational resources. This paper tackles the challenge of crafting efficient knowledge transfer mechanisms within EMaTO, a task complicated by the computational demands of individual task evaluations. We introduce a novel framework that employs a complex network to comprehensively analyze the dynamics of knowledge transfer between tasks within EMaTO. By extracting and scrutinizing the knowledge transfer network from existing EMaTO algorithms, we evaluate the influence of network modifications on overall algorithmic efficacy. Our findings indicate that these networks are diverse, displaying community-structured directed graph characteristics, with their network density adapting to different task sets. This research underscores the viability of integrating complex network concepts into EMaTO to refine knowledge transfer processes, paving the way for future advancements in the domain.

$R^3$-NL2GQL: A Hybrid Models Approach for Accuracy Enhancing and Hallucinations Mitigation

Nov 03, 2023

Abstract: While current NL2SQL tasks constructed using Foundation Models have achieved commendable results, their direct application to Natural Language to Graph Query Language (NL2GQL) tasks poses challenges due to the significant differences between GQL and SQL expressions, as well as the numerous types of GQL. Our extensive experiments reveal that in NL2GQL tasks, larger Foundation Models demonstrate superior cross-schema generalization abilities, while smaller Foundation Models struggle to improve their GQL generation capabilities through fine-tuning. However, after fine-tuning, smaller models exhibit better intent comprehension and higher grammatical accuracy. Diverging from rule-based and slot-filling techniques, we introduce R3-NL2GQL, which employs both smaller and larger Foundation Models as reranker, rewriter, and refiner. The approach harnesses the comprehension ability of smaller models for information reranking and rewriting, and the exceptional generalization and generation capabilities of larger models to transform input natural language queries and code structure schema into any form of GQLs. Recognizing the lack of established datasets in this nascent domain, we have created a bilingual dataset derived from graph database documentation and some open-source Knowledge Graphs (KGs). We tested our approach on this dataset, and the experimental results showed that it delivers promising performance and robustness. Our code and dataset are available at https://github.com/zhiqix/NL2GQL
