Hatem Abou-zeid

The Internet of Senses: Building on Semantic Communications and Edge Intelligence

Dec 21, 2022

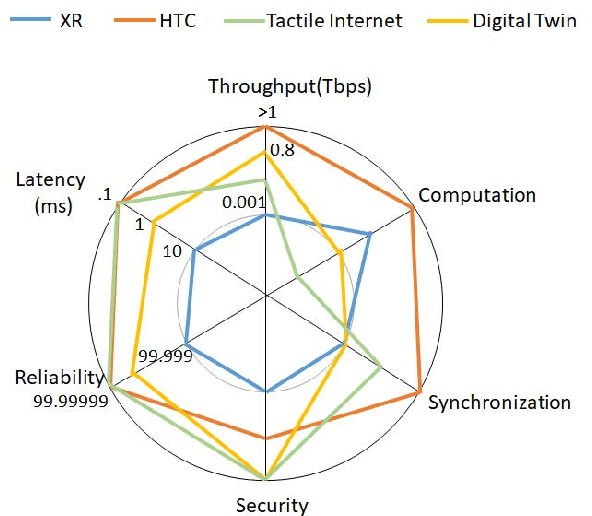

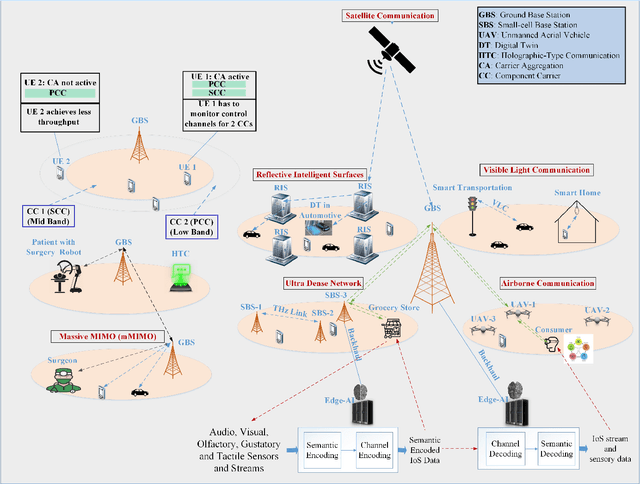

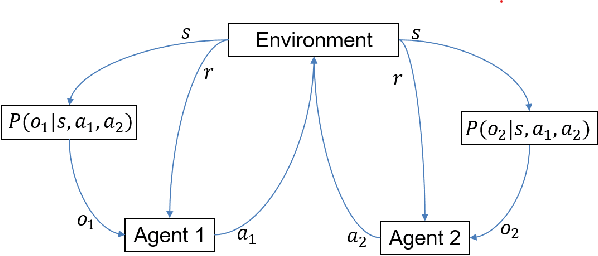

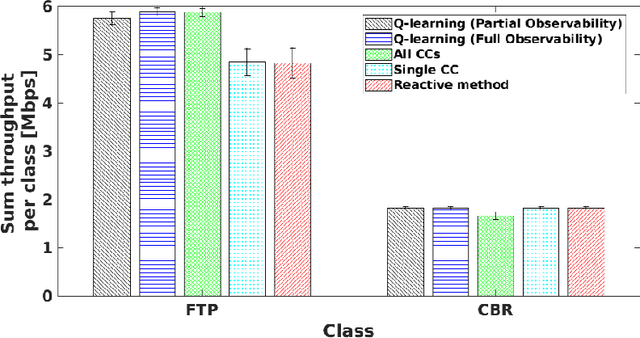

Abstract: The Internet of Senses (IoS) holds the promise of flawless telepresence-style communication for all human 'receptors' and therefore blurs the difference between virtual and real environments. We commence by highlighting the compelling use cases empowered by the IoS and also the key network requirements. We then elaborate on how the emerging semantic communications and Artificial Intelligence (AI)/Machine Learning (ML) paradigms along with 6G technologies may satisfy the requirements of IoS use cases. On one hand, semantic communications can be applied for extracting meaningful and significant information, and hence efficiently exploiting the resources, and for harnessing a priori information at the receiver to satisfy IoS requirements. On the other hand, AI/ML facilitates frugal network resource management by making use of the enormous amount of data generated in IoS edge nodes and devices, as well as by optimizing the IoS performance via intelligent agents. However, the intelligent agents deployed at the edge are not completely aware of each other's decisions and environments, hence they operate in a partially rather than fully observable environment. Therefore, we present a case study of Partially Observable Markov Decision Processes (POMDP) for improving the User Equipment (UE) throughput and energy consumption, as they are imperative for IoS use cases, using Reinforcement Learning for astutely activating and deactivating the component carriers in carrier aggregation. Finally, we outline the challenges and open issues in implementing the IoS and in employing semantic communications, edge intelligence, and learning under partial observability in the IoS context.

Toward Safe and Accelerated Deep Reinforcement Learning for Next-Generation Wireless Networks

Sep 16, 2022

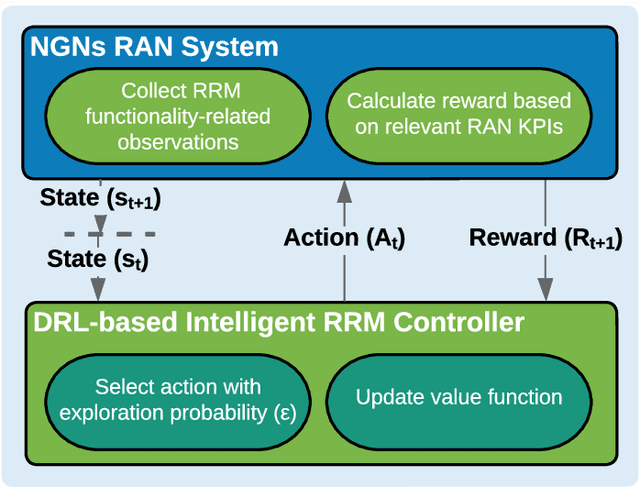

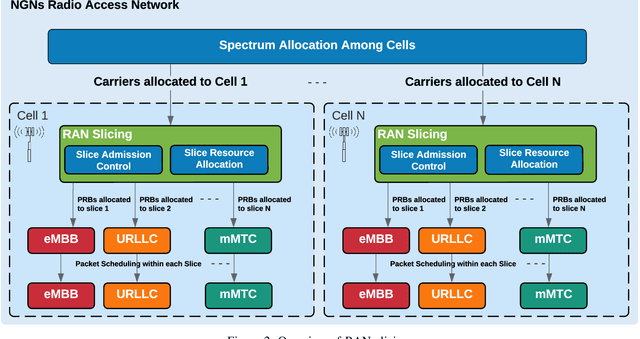

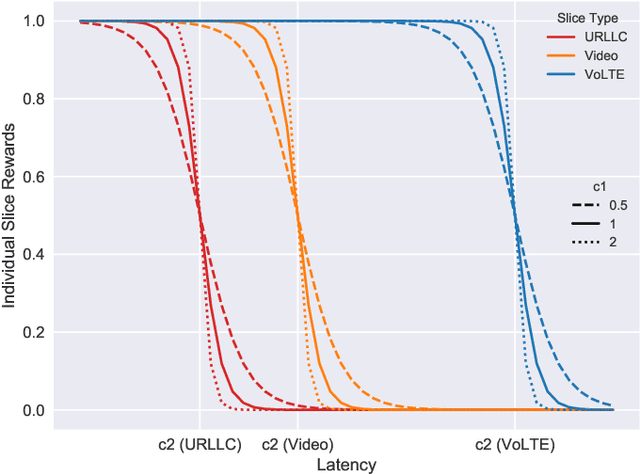

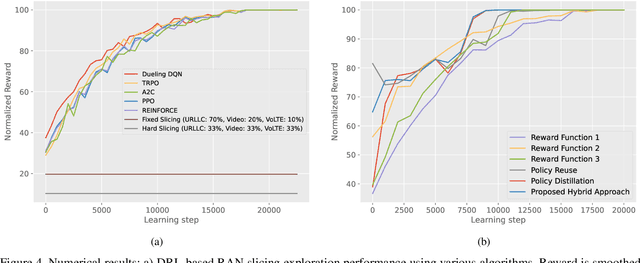

Abstract: Deep reinforcement learning (DRL) algorithms have recently gained wide attention in the wireless networks domain. They are considered promising approaches for solving dynamic radio resource management (RRM) problems in next-generation networks. Given their capabilities to build an approximate and continuously updated model of the wireless network environments, DRL algorithms can deal with the multifaceted complexity of such environments. Nevertheless, several challenges hinder the practical adoption of DRL in commercial networks. In this article, we first discuss two key practical challenges that are faced but rarely tackled when developing DRL-based RRM solutions. We argue that it is essential to address these DRL-related challenges for DRL to find its way into commercial RRM solutions. In particular, we discuss the need to have safe and accelerated DRL-based RRM solutions that mitigate the slow convergence and performance instability exhibited by DRL algorithms. We then review and categorize the main approaches used in the RRM domain to develop safe and accelerated DRL-based solutions. Finally, a case study is conducted to demonstrate the importance of having safe and accelerated DRL-based RRM solutions. We employ multiple variants of transfer learning (TL) techniques to accelerate the convergence of intelligent radio access network (RAN) slicing DRL-based controllers. We also propose a hybrid TL-based approach and sigmoid function-based rewards as examples of safe exploration in DRL-based RAN slicing.
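
The abstract mentions sigmoid function-based rewards without giving their form. As a rough illustration only, a reward of this kind could map a measured latency to a bounded value that falls off smoothly around a target. The function name `sigmoid_reward`, the latency metric, and the `target_ms` and `steepness` parameters are all assumptions made for this sketch and are not taken from the paper.

```python
import math


def sigmoid_reward(latency_ms: float, target_ms: float, steepness: float = 0.5) -> float:
    """Hypothetical bounded reward: close to 1 well below the target latency,
    0.5 exactly at the target, and close to 0 well above it."""
    z = steepness * (latency_ms - target_ms)
    # Clamp the exponent so math.exp cannot overflow for extreme inputs.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(z))


if __name__ == "__main__":
    for latency in (5.0, 10.0, 15.0, 30.0):
        print(latency, round(sigmoid_reward(latency, target_ms=10.0), 4))
```

Because the output is bounded and changes smoothly, a single bad action cannot produce an arbitrarily large penalty, which is one plausible reason such a shape might be attractive for stable learning.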

Segmented Learning for Class-of-Service Network Traffic Classification

Aug 03, 2022

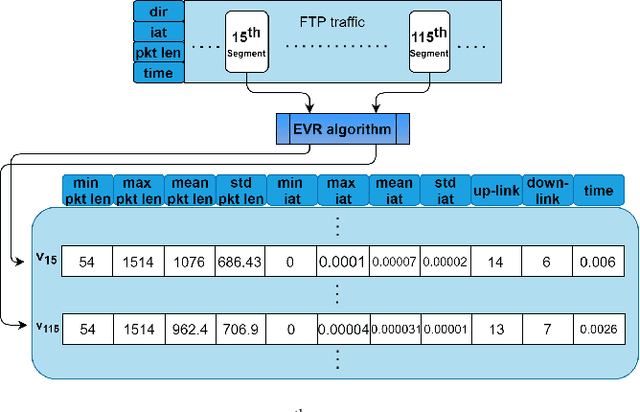

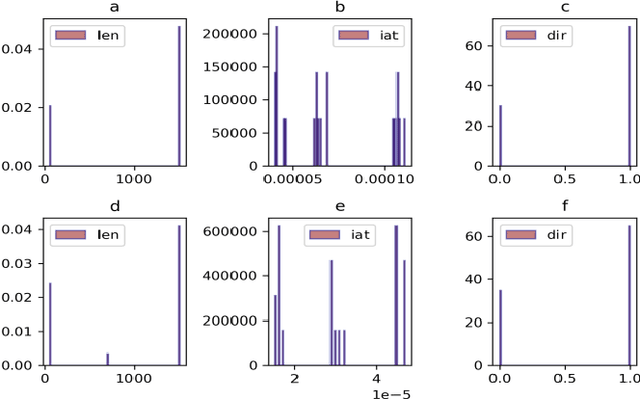

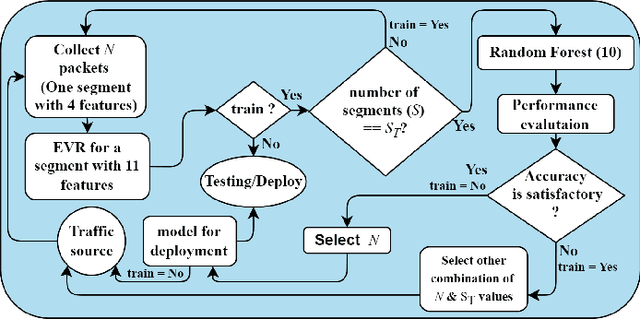

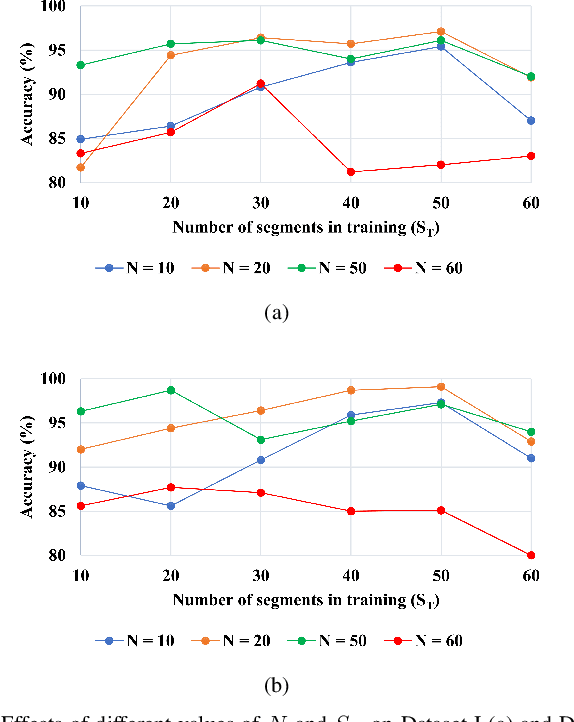

Abstract: Class-of-service (CoS) network traffic classification (NTC) classifies a group of similar traffic applications. The CoS classification is advantageous in resource scheduling for Internet service providers and avoids the necessity of remodelling. Our goal is to find a robust, lightweight, and fast-converging CoS classifier that uses less data in modelling and does not require specialized tools in feature extraction. The commonality of statistical features among the network flow segments motivates us to propose novel segmented learning that includes essential vector representation (EVR) and a simple-segment method of classification. We represent the segmented traffic in vector form using the EVR. Then, the segmented traffic is modelled for classification using random forest. Our solution's success relies on finding the optimal segment size and the minimum number of segments required in modelling. The solution is validated on multiple datasets for various CoS services, including virtual reality (VR). Significant findings of the research work are: (i) synchronous services that require acknowledgment and request to continue communication are classified with 99% accuracy, (ii) the initial 1,000 packets in any session are good enough to model CoS traffic for promising results, and we can therefore quickly deploy a CoS classifier, and (iii) test results remain consistent even when trained on one dataset and tested on a different dataset. In summary, our solution is the first to propose segmentation learning NTC that uses fewer features to classify most CoS traffic with an accuracy of 99%. The implementation of our solution is available on GitHub.

Virtual Reality Gaming on the Cloud: A Reality Check

Sep 21, 2021

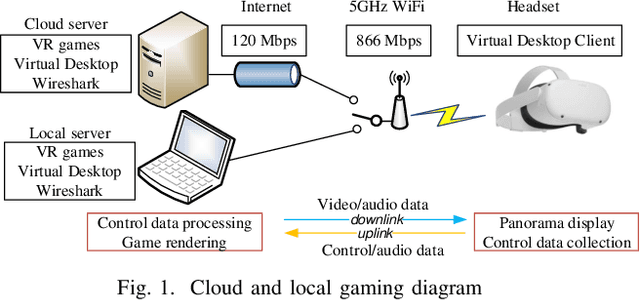

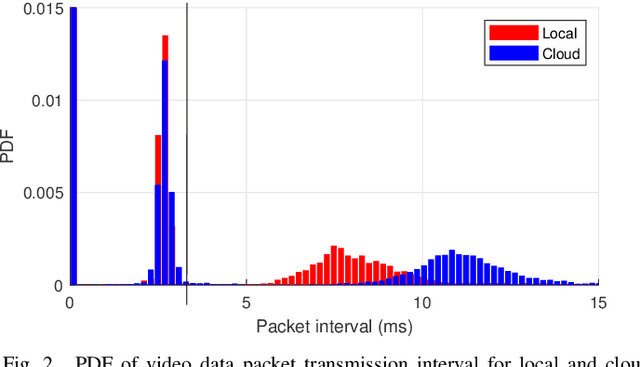

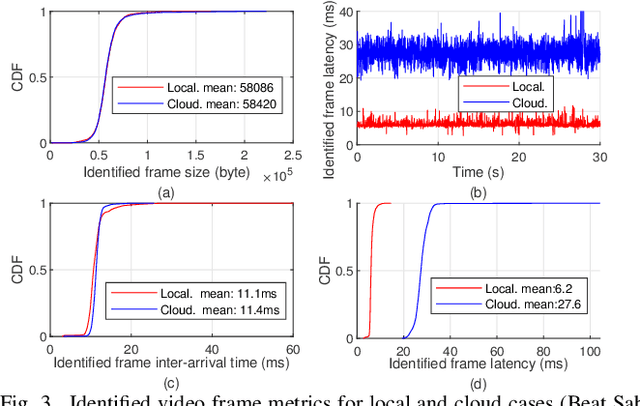

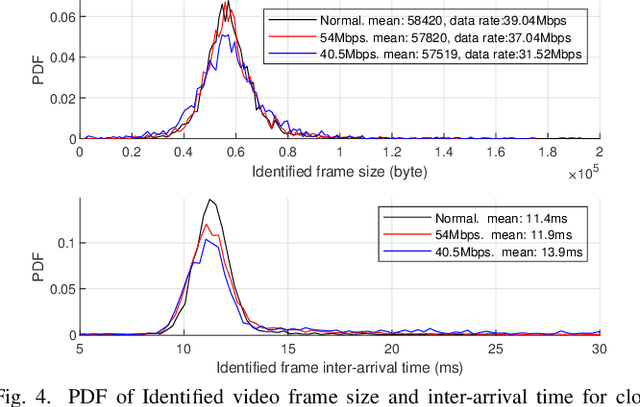

Abstract: Cloud virtual reality (VR) gaming traffic characteristics such as frame size, inter-arrival time, and latency need to be carefully studied as a first step toward scalable VR cloud service provisioning. To this end, in this paper we analyze the behavior of VR gaming traffic and Quality of Service (QoS) when VR rendering is conducted remotely in the cloud. We first build a VR testbed utilizing a cloud server, a commercial VR headset, and an off-the-shelf WiFi router. Using this testbed, we collect and process cloud VR gaming traffic data from different games under a number of network conditions and under fixed and adaptive video encoding schemes. To analyze application-level characteristics such as video frame size, frame inter-arrival time, frame loss, and frame latency, we develop an interval-threshold-based identification method for video frames. Based on the frame identification results, we present two statistical models that capture the behaviour of the VR gaming video traffic. The models can be used by researchers and practitioners to generate VR traffic models for simulations and experiments, and are paramount in designing advanced radio resource management (RRM) and network optimization for cloud VR gaming services. To the best of the authors' knowledge, this is the first measurement study and analysis conducted using a commercial cloud VR gaming platform, and under both fixed and adaptive bitrate streaming. We make our VR traffic datasets publicly available for further research by the community.
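
The abstract does not spell out the frame identification method. One common reading of an interval-threshold approach is that packets arriving in a tight burst belong to the same video frame, and a gap longer than some threshold marks the start of the next frame. The sketch below follows that reading; the function name `group_packets_into_frames`, the 2 ms default threshold, and the `(timestamp, size)` packet format are assumptions for illustration, not details from the paper.

```python
from typing import List, Tuple

Packet = Tuple[float, int]        # (arrival time in seconds, size in bytes)
Frame = Tuple[float, int, int]    # (start time in seconds, total bytes, packet count)


def group_packets_into_frames(packets: List[Packet],
                              gap_threshold_s: float = 0.002) -> List[Frame]:
    """Group time-sorted packets into frames: a new frame starts whenever the
    gap to the previous packet exceeds gap_threshold_s (hypothetical value)."""
    frames: List[Frame] = []
    start, total, count = 0.0, 0, 0
    prev_ts = None
    for ts, size in packets:
        if prev_ts is None or ts - prev_ts > gap_threshold_s:
            if count:  # close the frame that was being built
                frames.append((start, total, count))
            start, total, count = ts, size, 1
        else:
            total += size
            count += 1
        prev_ts = ts
    if count:
        frames.append((start, total, count))
    return frames


if __name__ == "__main__":
    trace = [(0.0, 1000), (0.0005, 1000), (0.001, 500), (0.0167, 1200), (0.0170, 300)]
    for frame in group_packets_into_frames(trace):
        print(frame)
```

Frame sizes and frame inter-arrival times can then be read directly from consecutive entries of the returned list. In practice the threshold would have to be tuned against the frame rate and the burstiness of the capture.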

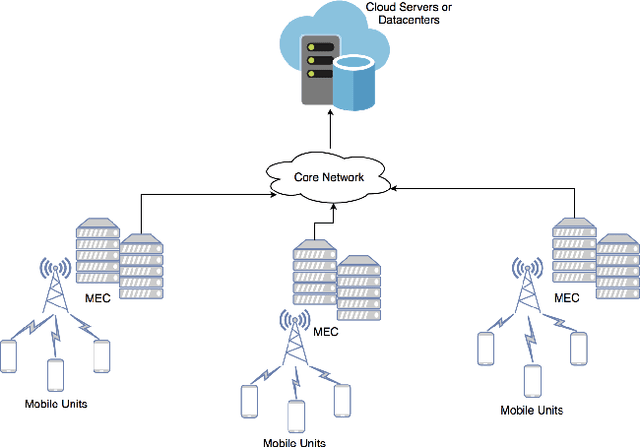

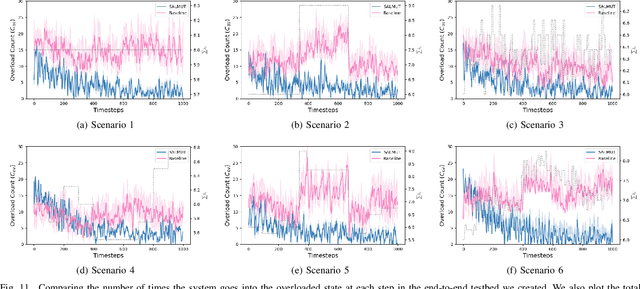

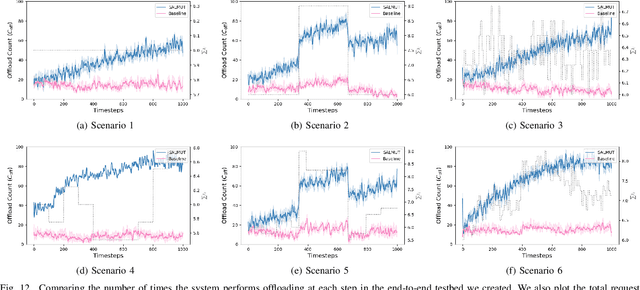

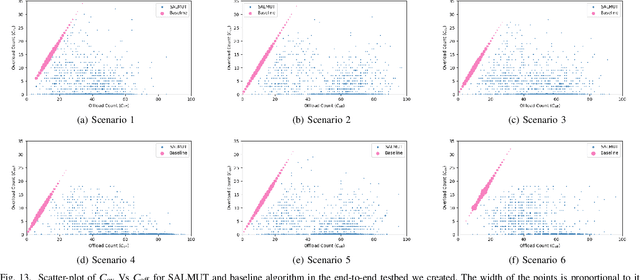

Structure-aware reinforcement learning for node-overload protection in mobile edge computing

Jun 29, 2021

Abstract: Mobile Edge Computing (MEC) refers to the concept of placing computational capability and applications at the edge of the network, providing benefits such as reduced latency in handling client requests, reduced network congestion, and improved performance of applications. The performance and reliability of MEC are degraded significantly when one or several edge servers in the cluster are overloaded. In particular, when a server crashes due to overload, it causes service failures in MEC. In this work, an adaptive admission control policy to prevent edge nodes from becoming overloaded is presented. This approach is based on a recently proposed low-complexity RL (Reinforcement Learning) algorithm called SALMUT (Structure-Aware Learning for Multiple Thresholds), which exploits the structure of the optimal admission control policy in multi-class queues for an average-cost setting. We extend the framework to the node overload-protection problem in a discounted-cost setting. The proposed solution is validated using several scenarios mimicking real-world deployments in two different settings: computer simulations and a Docker testbed. Our empirical evaluations show that the total discounted cost incurred by SALMUT is similar to that of state-of-the-art deep RL algorithms such as PPO (Proximal Policy Optimization) and A2C (Advantage Actor Critic), but SALMUT requires an order of magnitude less time to train, outputs an easily interpretable policy, and can be deployed in an online manner.
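
To make the idea of a multiple-threshold admission policy concrete, the sketch below shows only the policy structure: each request class has its own load threshold, and a request is admitted when the current load is below the threshold for its class. This is not SALMUT itself, since the thresholds here are fixed by hand rather than learned. The class names, threshold values, event format, and the use of a simple request count as the load measure are all assumptions for illustration.

```python
from typing import Dict, Hashable, Iterable, Optional, Tuple

Event = Tuple[str, Optional[Hashable]]  # ("arrive", class_id) or ("depart", None)


def admit(request_class: Hashable, load: int, thresholds: Dict[Hashable, int]) -> bool:
    """Threshold rule: admit only while the load is below the class threshold."""
    return load < thresholds[request_class]


def simulate(events: Iterable[Event], thresholds: Dict[Hashable, int]):
    """Replay arrivals and departures under fixed (hypothetical) thresholds and
    return per-class admitted and rejected counts plus the final load."""
    load = 0
    admitted = {c: 0 for c in thresholds}
    rejected = {c: 0 for c in thresholds}
    for kind, cls in events:
        if kind == "depart":
            load = max(0, load - 1)
        elif admit(cls, load, thresholds):
            load += 1
            admitted[cls] += 1
        else:
            rejected[cls] += 1
    return admitted, rejected, load


if __name__ == "__main__":
    thresholds = {"high": 3, "low": 2}  # low-priority traffic is shed first
    events = [("arrive", "low"), ("arrive", "low"), ("arrive", "low"),
              ("arrive", "high"), ("arrive", "high"), ("depart", None),
              ("arrive", "low")]
    print(simulate(events, thresholds))
```

A policy of this shape is easy to interpret, because its entire behaviour is captured by one number per class. That is consistent with the interpretability benefit the abstract reports.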

Delay-Tolerant Constrained OCO with Application to Network Resource Allocation

May 09, 2021

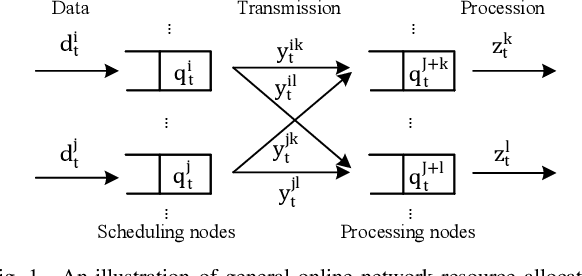

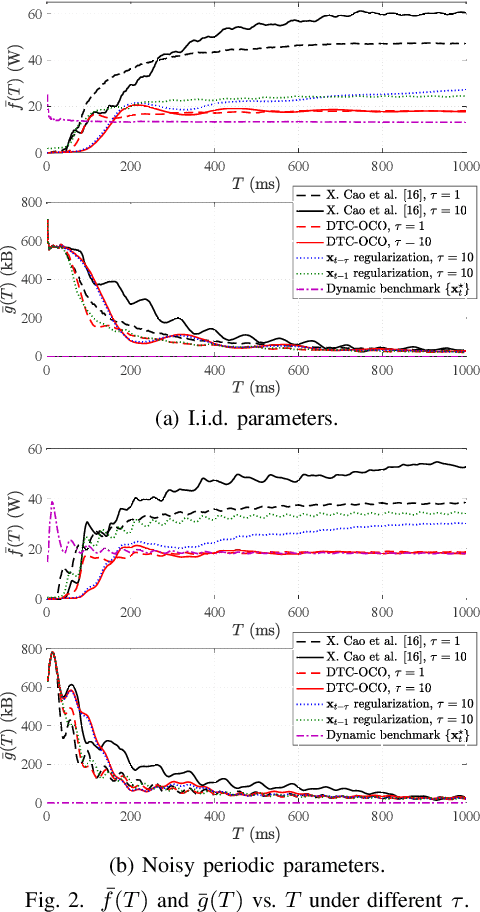

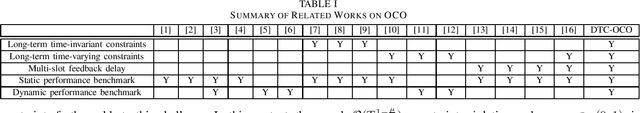

Abstract: We consider online convex optimization (OCO) with multi-slot feedback delay, where an agent makes a sequence of online decisions to minimize the accumulation of time-varying convex loss functions, subject to short-term and long-term constraints that are possibly time-varying. The current convex loss function and the long-term constraint function are revealed to the agent only after the decision is made, and they may be delayed for multiple time slots. Existing work on OCO under this general setting has focused on the static regret, which measures the gap of losses between the online decision sequence and an offline benchmark that is fixed over time. In this work, we consider both the static regret and the more practically meaningful dynamic regret, where the benchmark is a time-varying sequence of per-slot optimizers. We propose an efficient algorithm, termed Delay-Tolerant Constrained-OCO (DTC-OCO), which uses a novel constraint penalty with double regularization to tackle the asynchrony between information feedback and decision updates. We derive upper bounds on its dynamic regret, static regret, and constraint violation, proving them to be sublinear under mild conditions. We further apply DTC-OCO to a general network resource allocation problem, which arises in many systems such as data networks and cloud computing. Simulation results demonstrate a substantial performance gain of DTC-OCO over the best known alternative.
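
For readers unfamiliar with the two regret notions, they can be written in the standard way as follows. The notation is assumed here rather than taken from the paper: $f_t$ is the loss at slot $t$, $x_t$ the online decision, $T$ the horizon, and $\mathcal{X}$ a feasible set, which is held fixed over time to keep the expressions simple.

```latex
% Static regret: compare against the single best fixed decision in hindsight.
\mathrm{Reg}_{\mathrm{s}}(T) = \sum_{t=1}^{T} f_t(x_t) - \min_{x \in \mathcal{X}} \sum_{t=1}^{T} f_t(x)

% Dynamic regret: compare against the sequence of per-slot optimizers.
\mathrm{Reg}_{\mathrm{d}}(T) = \sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(x_t^{\star}),
\qquad x_t^{\star} \in \arg\min_{x \in \mathcal{X}} f_t(x)
```

With a common feasible set, the sum of per-slot minima is never larger than the minimum of the sum, so $\mathrm{Reg}_{\mathrm{d}}(T) \ge \mathrm{Reg}_{\mathrm{s}}(T)$. This is why a sublinear bound on dynamic regret is the stronger guarantee.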
