Min Dong

SegOTA: Accelerating Over-the-Air Federated Learning with Segmented Transmission

Apr 13, 2025

Abstract:Federated learning (FL) with over-the-air computation efficiently utilizes the communication resources, but it can still experience significant latency when each device transmits a large number of model parameters to the server. This paper proposes the Segmented Over-The-Air (SegOTA) method for FL, which reduces latency by partitioning devices into groups and letting each group transmit only one segment of the model parameters in each communication round. Considering a multi-antenna server, we model the SegOTA transmission and reception process to establish an upper bound on the expected model learning optimality gap. We minimize this upper bound by formulating the per-round online optimization of device grouping and joint transmit-receive beamforming, for which we derive efficient closed-form solutions. Simulation results show that our proposed SegOTA substantially outperforms the conventional full-model OTA approach and other common alternatives.
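The segmentation idea can be illustrated with a minimal pure-Python sketch of one idealized round. The assumptions are all hypothetical: device k joins group k mod G, the groups transmit their segments concurrently and the multi-antenna server separates them perfectly (so no beamforming design appears), and each segment is normalized by its own group size. The sketch shows only why per-round latency shrinks. It does not reproduce the SegOTA grouping or beamforming solutions.

```python
import random


def segment_indices(dim, num_groups):
    """Split parameter indices 0..dim-1 into num_groups contiguous segments."""
    base, extra = divmod(dim, num_groups)
    bounds, start = [], 0
    for g in range(num_groups):
        stop = start + base + (1 if g < extra else 0)
        bounds.append((start, stop))
        start = stop
    return bounds


def segmented_round(updates, num_groups, noise_std=0.0, rng=None):
    """One idealized segmented over-the-air round (illustrative, not SegOTA itself).

    updates: per-device local updates, equal-length lists of floats.
    Device k is placed in group k % num_groups (a hypothetical grouping rule),
    and group g transmits only segment g of its update.
    """
    if len(updates) < num_groups:
        raise ValueError("need at least one device per group")
    rng = random.Random(0) if rng is None else rng
    dim = len(updates[0])
    bounds = segment_indices(dim, num_groups)
    aggregate = [0.0] * dim
    for g, (start, stop) in enumerate(bounds):
        group = [u for k, u in enumerate(updates) if k % num_groups == g]
        for i in range(start, stop):
            # Analog superposition of the group's entries plus receiver noise,
            # assuming perfect inter-group separation at the server.
            noise = rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0
            aggregate[i] = (sum(u[i] for u in group) + noise) / len(group)
    # Groups transmit concurrently, so a round lasts as long as the longest segment.
    channel_uses = max(stop - start for start, stop in bounds)
    return aggregate, channel_uses
```

With two groups and a 4-parameter update, this idealized round occupies 2 channel uses rather than the 4 that a full-model OTA round would need. The trade-off is that each parameter is averaged over only one group's devices in that round.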

Improving Wireless Federated Learning via Joint Downlink-Uplink Beamforming over Analog Transmission

Feb 04, 2025

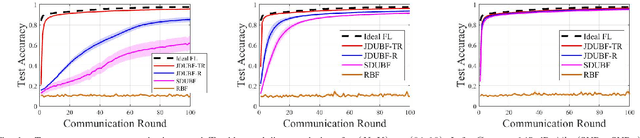

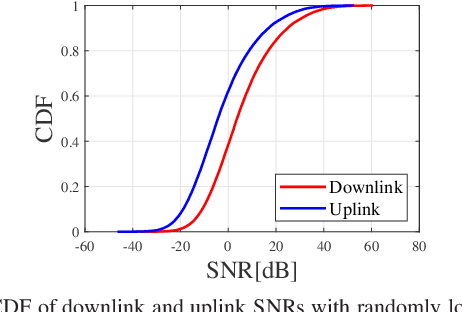

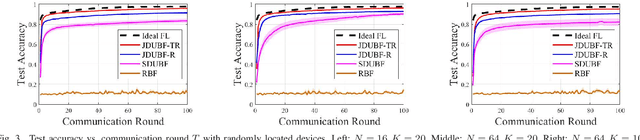

Abstract:Federated learning (FL) over wireless networks using analog transmission can efficiently utilize the communication resource but is susceptible to errors caused by noisy wireless links. In this paper, assuming a multi-antenna base station, we jointly design downlink-uplink beamforming to maximize the FL training convergence rate over time-varying wireless channels. We derive the round-trip model updating equation and use it to analyze the FL training convergence to capture the effects of downlink and uplink beamforming and the local model training on the global model update. Aiming to maximize the FL training convergence rate, we propose a low-complexity joint downlink-uplink beamforming (JDUBF) algorithm, which adopts a greedy approach to decompose the multi-round joint optimization and convert it into per-round online joint optimization problems. The per-round problem is further decomposed into three subproblems over a block coordinate descent framework, where we show that each subproblem can be efficiently solved by projected gradient descent with fast closed-form updates. An efficient initialization method that leads to a closed-form initial point is also proposed to accelerate the convergence of JDUBF. Simulation demonstrates that JDUBF substantially outperforms the conventional separate-link beamforming design.

Power-Efficient Over-the-Air Aggregation with Receive Beamforming for Federated Learning

Jan 29, 2025

Abstract:This paper studies power-efficient uplink transmission design for federated learning (FL) that employs over-the-air analog aggregation and multi-antenna beamforming at the server. We jointly optimize device transmit weights and receive beamforming at each FL communication round to minimize the total device transmit power while ensuring convergence in FL training. Through our convergence analysis, we establish sufficient conditions on the aggregation error to guarantee FL training convergence. Utilizing these conditions, we reformulate the power minimization problem into a unique bi-convex structure that contains a transmit beamforming optimization subproblem and a receive beamforming feasibility subproblem. Despite this unconventional structure, we propose a novel alternating optimization approach that guarantees monotonic decrease of the objective value, to allow convergence to a partial optimum. We further consider imperfect channel state information (CSI), which requires accounting for the channel estimation errors in the power minimization problem and FL convergence analysis. We propose a CSI-error-aware joint beamforming algorithm, which can substantially outperform one that does not account for channel estimation errors. Simulation with canonical classification datasets demonstrates that our proposed methods achieve significant power reduction compared to existing benchmarks across a wide range of parameter settings, while attaining the same target accuracy under the same convergence rate.
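As a reference point for the coupling between device transmit weights and receive beamforming described above, the standard channel-inversion form of over-the-air aggregation can be sketched in pure Python. This is a minimal sketch under stated assumptions: a fixed receive beamformer `m`, perfectly known channels, unit-power symbols, and weights chosen so that each device's effective gain `m^H h_k b_k` equals 1. It is not the paper's alternating optimization, and all function names are hypothetical.

```python
def hermitian_inner(m, h):
    """Return m^H h for two equal-length lists of complex numbers."""
    return sum(mi.conjugate() * hi for mi, hi in zip(m, h))


def channel_inversion_weights(m, channels):
    """Transmit weights b_k = 1 / (m^H h_k), so every device arrives with unit gain."""
    return [1 / hermitian_inner(m, h) for h in channels]


def total_transmit_power(weights):
    """Sum of |b_k|^2, assuming unit-power transmit symbols."""
    return sum(abs(b) ** 2 for b in weights)


def over_the_air_sum(m, channels, weights, symbols, noise=None):
    """Superimpose all device signals at the antennas, then apply receive beamformer m."""
    n_ant = len(m)
    if noise is None:
        noise = [0j] * n_ant
    received = [
        sum(h[a] * b * s for h, b, s in zip(channels, weights, symbols)) + noise[a]
        for a in range(n_ant)
    ]
    # Without noise this equals sum(symbols), because m^H h_k b_k = 1 for every k.
    return hermitian_inner(m, received)
```

For instance, with `m = [1, 0]` and channels `[2, 1j]` and `[0.5, 1]`, the weights are 0.5 and 2, so the total power is 4.25 and the weaker device accounts for 4 of it. Choosing `m` to raise the weakest `|m^H h_k|` lowers power, while the residual noise `m^H n` grows with `||m||^2`. That tension is why the receive beamformer and the transmit weights are worth designing jointly.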

Uplink Over-the-Air Aggregation for Multi-Model Wireless Federated Learning

Sep 02, 2024

Abstract:We propose an uplink over-the-air aggregation (OAA) method for wireless federated learning (FL) that simultaneously trains multiple models. To maximize the multi-model training convergence rate, we derive an upper bound on the optimality gap of the global model update, and then, formulate an uplink joint transmit-receive beamforming optimization problem to minimize this upper bound. We solve this problem using the block coordinate descent approach, which admits low-complexity closed-form updates. Simulation results show that our proposed multi-model FL with fast OAA substantially outperforms sequentially training multiple models under the conventional single-model approach.

Joint Group Scheduling and Multicast Beamforming for Downlink Large-Scale Multi-Group Multicast

Mar 15, 2024

Abstract:Next-generation wireless networks need to handle massive user access effectively. This paper addresses the problem of joint group scheduling and multicast beamforming for downlink multicast with many active groups. Aiming to maximize the minimum user throughput, we propose a three-phase approach to tackle this difficult joint optimization problem efficiently. In Phase 1, we utilize the optimal multicast beamforming structure obtained recently to find the group-channel directions for all groups. We propose two low-complexity scheduling algorithms in Phase 2, which determine the subset of groups in each time slot sequentially and the total number of time slots required for all groups. The first algorithm measures the level of spatial separation among groups and selects the dissimilar groups that maximize the minimum user rate into the same time slot. In contrast, the second algorithm first identifies the spatially correlated groups via a learning-based clustering method based on the group-channel directions, and then separates spatially similar groups into different time slots. Finally, the multicast beamformers for the scheduled groups are obtained in each time slot by a computationally efficient method. Simulation results show that our proposed approaches can effectively capture the level of spatial separation among groups for scheduling to improve the minimum user throughput over the conventional approach that serves all groups in a single time slot or one group per time slot, and can be executed with low computational complexity.
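A toy version of the spatial-separation idea in Phase 2 can be sketched as a first-fit scheduler. In this scheduler, a group joins an existing time slot only if its group-channel direction is weakly correlated with every group already in that slot. This is a hypothetical illustration with an arbitrary correlation threshold. It does not maximize the minimum user rate the way the paper's algorithms do, and the function names are made up.

```python
import math


def correlation(u, v):
    """Normalized correlation |u^H v| / (||u|| ||v||) between two channel directions."""
    num = abs(sum(a.conjugate() * b for a, b in zip(u, v)))
    den = math.sqrt(sum(abs(a) ** 2 for a in u) * sum(abs(b) ** 2 for b in v))
    return num / den


def first_fit_schedule(directions, threshold):
    """Place each group in the first slot where it is nearly orthogonal to all members."""
    slots = []
    for g, d in enumerate(directions):
        for slot in slots:
            if all(correlation(d, directions[j]) < threshold for j in slot):
                slot.append(g)
                break
        else:  # no compatible slot found: open a new time slot
            slots.append([g])
    return slots
```

With directions `[1, 0]`, `[0, 1]`, `[1, 1]`, `[1, 0.1]` and a threshold of 0.5, the two orthogonal groups share the first slot. The other two groups each need their own slot, because their correlation with the members of every existing slot exceeds 0.5.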

Multi-Model Wireless Federated Learning with Downlink Beamforming

Jan 15, 2024

Abstract:This paper studies the design of wireless federated learning (FL) for simultaneously training multiple machine learning models. We consider round-robin device-model assignment and downlink beamforming for concurrent multiple model updates. After formulating the joint downlink-uplink transmission process, we derive the per-model global update expression over communication rounds, capturing the effect of beamforming and noisy reception. To maximize the multi-model training convergence rate, we derive an upper bound on the optimality gap of the global model update and use it to formulate a multi-group multicast beamforming problem. We show that this problem can be converted to minimizing the sum of inverse received signal-to-interference-plus-noise ratios, which can be solved efficiently by projected gradient descent. Simulation shows that our proposed multi-model FL solution outperforms other alternatives, including conventional single-model sequential training and multi-model zero-forcing beamforming.
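The abstract does not spell out the exact rotation rule, so the following sketch assumes one simple round-robin device-model assignment for illustration. Each device moves to the next model every round. The function name is hypothetical.

```python
def round_robin_assignment(num_devices, num_models, round_idx):
    """Map each model to its devices for one round; the mapping shifts by one each round."""
    assignment = {model: [] for model in range(num_models)}
    for k in range(num_devices):
        # Device k trains model (k + round) mod M in this round.
        assignment[(k + round_idx) % num_models].append(k)
    return assignment
```

Under this rule, every model receives roughly `num_devices / num_models` devices in each round. Over any `num_models` consecutive rounds, each device also contributes once to every model.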

Efficient Design of Multi-group Multicast Beamforming via Reconfigurable Intelligent Surface

Dec 31, 2023

Abstract:This paper considers a multi-group multicasting scenario facilitated by a reconfigurable intelligent surface (RIS). We propose a fast and scalable algorithm for the joint design of the base station (BS) multicast beamforming and the RIS passive beamforming to minimize the transmit power subject to the quality-of-service (QoS) constraints. By exploiting the structure of the joint optimization problem, we show that this QoS problem can be broken into a BS multicast QoS subproblem and an RIS max-min-fair (MMF) multicast subproblem, which are solved alternatingly. In our proposed algorithm, we utilize the optimal multicast beamforming structure to obtain the BS beamformers efficiently. Furthermore, we reformulate the challenging RIS multicast subproblem and employ a first-order projected subgradient algorithm (PSA) to solve it, which yields closed-form updates. Simulation results show the efficacy of our proposed algorithm in performance and computational cost compared to other alternative methods.
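In a projected subgradient method on RIS phases, the update can be closed-form because projection onto the unit-modulus set simply normalizes each element. The sketch below applies that step to a simplified max-min-fair objective, min_i |c_i^H theta|^2, where the cascaded channel vectors c_i are hypothetical and there is no direct BS-user link. It is not the paper's reformulated RIS subproblem. The step size and iteration count are arbitrary, and the function names are made up.

```python
import cmath
import random


def hermitian_inner(c, theta):
    """Return c^H theta."""
    return sum(ci.conjugate() * ti for ci, ti in zip(c, theta))


def project_unit_modulus(theta):
    """Closed-form projection onto |theta_n| = 1: keep each element's phase."""
    return [t / abs(t) if abs(t) > 0 else 1 + 0j for t in theta]


def min_gain(cascaded, theta):
    """Worst-user gain min_i |c_i^H theta|^2 (the max-min-fair objective)."""
    return min(abs(hermitian_inner(c, theta)) ** 2 for c in cascaded)


def mmf_projected_subgradient(cascaded, steps=200, step_size=0.1, seed=0):
    rng = random.Random(seed)
    n = len(cascaded[0])
    theta = [cmath.exp(1j * rng.uniform(0.0, 2 * cmath.pi)) for _ in range(n)]
    best_theta, best_val = theta, min_gain(cascaded, theta)
    for _ in range(steps):
        # Subgradient of the min: gradient of the currently weakest user's gain.
        gains = [abs(hermitian_inner(c, theta)) ** 2 for c in cascaded]
        c = cascaded[gains.index(min(gains))]
        # Ascent direction for |c^H theta|^2 w.r.t. conj(theta) is c (c^H theta).
        s = hermitian_inner(c, theta)
        theta = project_unit_modulus([t + step_size * ci * s for t, ci in zip(theta, c)])
        val = min_gain(cascaded, theta)
        if val > best_val:  # the iterates are not monotone, so keep the best one
            best_theta, best_val = theta, val
    return best_theta, best_val
```

The max-min objective is nonsmooth and the unit-modulus set is nonconvex, so the iterates need not improve at every step. For that reason, the sketch returns the best iterate it has seen.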

Joint Beamforming and Device Selection in Federated Learning with Over-the-air Aggregation

Feb 28, 2023

Abstract:Federated Learning (FL) with over-the-air computation is susceptible to analog aggregation error due to channel conditions and noise. Excluding devices with weak channels can reduce the aggregation error, but also decreases the amount of training data in FL. In this work, we jointly design the uplink receiver beamforming and device selection in over-the-air FL to maximize the training convergence rate. We propose a new method termed JBFDS, which takes into account the impact of receiver beamforming and device selection on the global loss function at each training round. Our simulation results with real-world image classification demonstrate that the proposed method achieves faster convergence with significantly lower computational complexity than existing alternatives.

Resource Allocation for Massive MIMO HetNets with Quantize-Forward Relaying

May 24, 2021

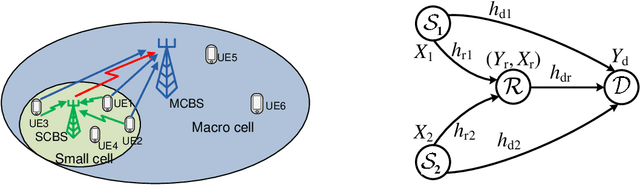

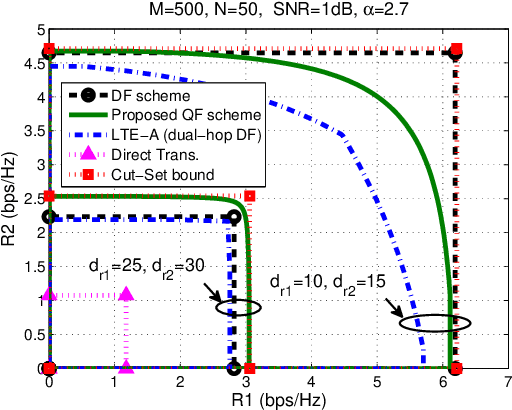

Abstract:We investigate how massive MIMO impacts the uplink transmission design in a heterogeneous network (HetNet) where multiple users communicate with a macro-cell base station (MCBS) with the help of a small-cell BS (SCBS) with zero-forcing (ZF) detection at each BS. We first analyze the quantize-forward (QF) relaying scheme with joint decoding (JD) at the MCBS. To maximize the rate region, we optimize the quantization of all user data streams at the SCBS by developing a novel water-filling algorithm that is based on the Descartes' rule of signs. Our result shows that as a user link to the SCBS becomes stronger than that to the MCBS, the SCBS deploys finer quantization to that user data stream. We further propose a new simplified scheme through Wyner-Ziv (WZ) binning and time-division (TD) transmission at the SCBS, which allows not only sequential but also separate decoding of each user message at the MCBS. For this new QF-WZTD scheme, the optimal quantization parameters are identical to those of the QF-JD scheme while the phase durations are conveniently optimized as functions of the quantization parameters. Despite its simplicity, the QF-WZTD scheme achieves the same rate performance as the QF-JD scheme, making it an attractive option for future HetNets.

Delay-Tolerant Constrained OCO with Application to Network Resource Allocation

May 09, 2021

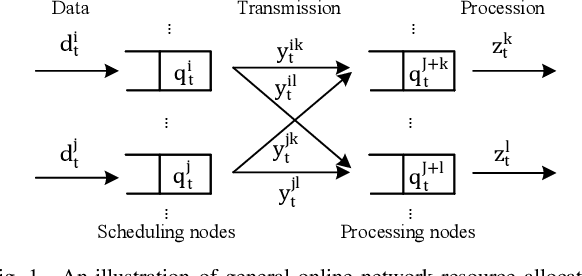

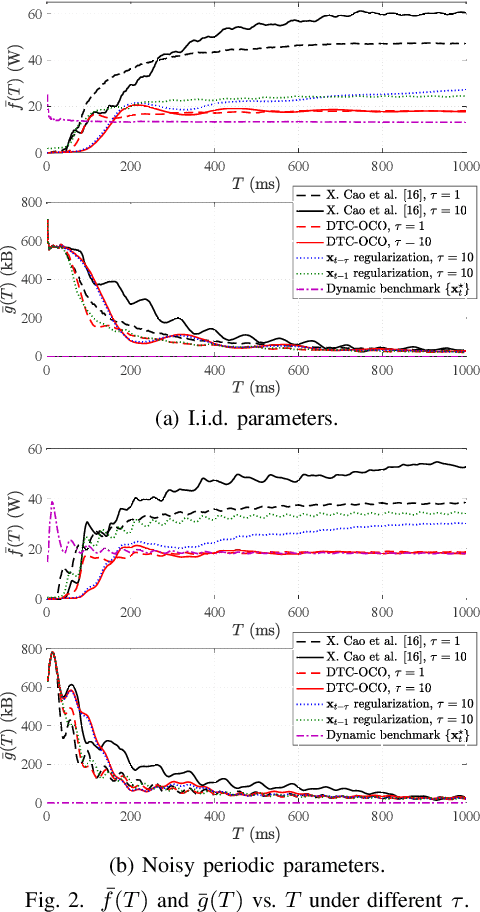

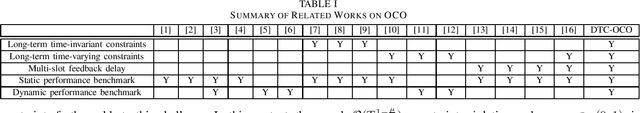

Abstract:We consider online convex optimization (OCO) with multi-slot feedback delay, where an agent makes a sequence of online decisions to minimize the accumulation of time-varying convex loss functions, subject to short-term and long-term constraints that are possibly time-varying. The current convex loss function and the long-term constraint function are revealed to the agent only after the decision is made, and they may be delayed for multiple time slots. Existing work on OCO under this general setting has focused on the static regret, which measures the gap of losses between the online decision sequence and an offline benchmark that is fixed over time. In this work, we consider both the static regret and the more practically meaningful dynamic regret, where the benchmark is a time-varying sequence of per-slot optimizers. We propose an efficient algorithm, termed Delay-Tolerant Constrained-OCO (DTC-OCO), which uses a novel constraint penalty with double regularization to tackle the asynchrony between information feedback and decision updates. We derive upper bounds on its dynamic regret, static regret, and constraint violation, proving them to be sublinear under mild conditions. We further apply DTC-OCO to a general network resource allocation problem, which arises in many systems such as data networks and cloud computing. Simulation results demonstrate substantial performance gain of DTC-OCO over the best known alternative.
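The asynchrony between feedback and decisions can be made concrete with a plain delayed projected online gradient descent baseline, sketched here for contrast. The sketch assumes a fixed delay and handles only a box (short-term) constraint. It omits the long-term constraints and the double-regularized constraint penalty that define DTC-OCO, and all names are hypothetical.

```python
def project_box(x, lo, hi):
    """Euclidean projection onto the box [lo, hi]^n (a simple short-term constraint)."""
    return [min(max(xi, lo), hi) for xi in x]


def delayed_ogd(grad_fns, delay, step_size, x0, lo, hi):
    """Projected online gradient descent with a fixed feedback delay.

    grad_fns[t](x) returns the gradient of the slot-t loss at x; that loss is only
    revealed at slot t + delay, after the slot-t decision has been played.
    """
    x = list(x0)
    decisions = []
    pending = {}  # arrival slot -> (gradient oracle, decision it is evaluated at)
    for t, grad in enumerate(grad_fns):
        decisions.append(list(x))  # commit the slot-t decision before any feedback
        pending[t + delay] = (grad, list(x))
        if t in pending:
            grad_fn, x_past = pending.pop(t)
            g = grad_fn(x_past)  # stale gradient, evaluated at the old decision
            x = project_box([xi - step_size * gi for xi, gi in zip(x, g)], lo, hi)
    return decisions
```

With a delay of 2, the first three decisions are made with no feedback at all. Every later update then applies a gradient computed at a decision that is two slots old, which is the mismatch that delay-tolerant designs have to control.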
