Ali Afana

Foundation Model-Aided Hierarchical Control for Robust RIS-Assisted Near-Field Communications

Jan 06, 2026

Abstract:The deployment of extremely large aperture arrays (ELAAs) in sixth-generation (6G) networks could shift communication into the near-field communication (NFC) regime. In this regime, signals exhibit spherical wave propagation, unlike the planar waves in conventional far-field systems. Reconfigurable intelligent surfaces (RISs) can dynamically adjust phase shifts to support NFC beamfocusing, concentrating signal energy at specific spatial coordinates. However, effective RIS utilization depends on both rapid channel state information (CSI) estimation and proactive blockage mitigation, which occur on inherently different timescales. CSI varies at millisecond intervals due to small-scale fading, while blockage events evolve over seconds, posing challenges for conventional single-level control algorithms. To address this issue, we propose a dual-transformer (DT) hierarchical framework that integrates two specialized transformer models within a hierarchical deep reinforcement learning (HDRL) architecture, referred to as the DT-HDRL framework. A fast-timescale transformer processes ray-tracing data for rapid CSI estimation, while a vision transformer (ViT) analyzes visual data to predict impending blockages. In HDRL, the high-level controller selects line-of-sight (LoS) or RIS-assisted non-line-of-sight (NLoS) transmission paths and sets goals, while the low-level controller optimizes base station (BS) beamfocusing and RIS phase shifts using instantaneous CSI. This dual-timescale coordination maximizes spectral efficiency (SE) while ensuring robust performance under dynamic conditions. Simulation results demonstrate that our approach improves SE by approximately 18% compared to single-timescale baselines, while the proposed blockage predictor achieves an F1-score of 0.92, providing a 769 ms advance warning window in dynamic scenarios.

Multi-Modal Data-Enhanced Foundation Models for Prediction and Control in Wireless Networks: A Survey

Jan 06, 2026

Abstract:Foundation models (FMs) are recognized as a transformative breakthrough that has started to reshape the future of artificial intelligence (AI) across both academia and industry. The integration of FMs into wireless networks is expected to enable the development of general-purpose AI agents capable of handling diverse network management requests and highly complex wireless-related tasks involving multi-modal data. Inspired by these ideas, this work discusses the utilization of FMs, especially multi-modal FMs, in wireless networks. We focus on two important types of tasks in wireless network management: prediction tasks and control tasks. In particular, we first discuss FM-enabled multi-modal contextual information understanding in wireless networks. Then, we explain how FMs can be applied to prediction and control tasks, respectively. Following this, we introduce the development of wireless-specific FMs from two perspectives: available datasets for development and the methodologies used. Finally, we conclude with a discussion of the challenges and future directions for FM-enhanced wireless networks.

Foundation Model-Aided Deep Reinforcement Learning for RIS-Assisted Wireless Communication

Jun 11, 2025

Abstract:Reconfigurable intelligent surfaces (RIS) have emerged as a promising technology for enhancing wireless communication by dynamically controlling signal propagation in the environment. However, their efficient deployment relies on accurate channel state information (CSI), which leads to high channel estimation overhead due to their passive nature and the large number of reflective elements. In this work, we solve this challenge by proposing a novel framework that leverages a pre-trained open-source foundation model (FM) named large wireless model (LWM) to process wireless channels and generate versatile and contextualized channel embeddings. These embeddings are then used for the joint optimization of the BS beamforming and RIS configurations. To be more specific, for joint optimization, we design a deep reinforcement learning (DRL) model to automatically select the BS beamforming vector and RIS phase-shift matrix, aiming to maximize the spectral efficiency (SE). This work shows that a pre-trained FM for radio signal understanding can be fine-tuned and integrated with DRL for effective decision-making in wireless networks. It highlights the potential of modality-specific FMs in real-world network optimization. According to the simulation results, the proposed method outperforms the DRL-based approach and beam sweeping-based approach, achieving 9.89% and 43.66% higher SE, respectively.

Conditional Denoising Diffusion for ISAC Enhanced Channel Estimation in Cell-Free 6G

Jun 07, 2025

Abstract:Cell-free Integrated Sensing and Communication (ISAC) aims to revolutionize 6th Generation (6G) networks. By combining distributed access points with ISAC capabilities, it boosts spectral efficiency, situational awareness, and communication reliability. Channel estimation is a critical step in cell-free ISAC systems to ensure reliable communication, but its performance is usually limited by challenges such as pilot contamination and noisy channel estimates. This paper presents a novel framework leveraging sensing information as a key input within a Conditional Denoising Diffusion Model (CDDM). In this framework, we integrate CDDM with a Multimodal Transformer (MMT) to enhance channel estimation in ISAC-enabled cell-free systems. The MMT encoder effectively captures inter-modal relationships between sensing and location data, enabling the CDDM to iteratively denoise and refine channel estimates. Simulation results demonstrate that the proposed approach achieves significant performance gains. As compared with Least Squares (LS) and Minimum Mean Squared Error (MMSE) estimators, the proposed model achieves normalized mean squared error (NMSE) improvements of 8 dB and 9 dB, respectively. Moreover, we achieve a 27.8% NMSE improvement compared to the traditional denoising diffusion model (TDDM), which does not incorporate sensing channel information. Additionally, the model exhibits higher robustness against pilot contamination and maintains high accuracy under challenging conditions, such as low signal-to-noise ratios (SNRs). According to the simulation results, the model performs well for users near sensing targets by leveraging the correlation between sensing and communication channels.
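The NMSE gains in this abstract are quoted on a decibel scale. As a reading aid, here is a minimal sketch assuming the common definition NMSE = ||h_est - h||^2 / ||h||^2; the abstract does not state the exact definition used, and the function names `nmse_db` and `db_gain_to_ratio` are hypothetical.

```python
import math


def nmse_db(h_true, h_est):
    """NMSE in dB, assuming NMSE = ||h_est - h_true||^2 / ||h_true||^2."""
    err = sum(abs(e - t) ** 2 for t, e in zip(h_true, h_est))
    ref = sum(abs(t) ** 2 for t in h_true)
    return 10.0 * math.log10(err / ref)


def db_gain_to_ratio(gain_db):
    """Convert a dB improvement into a linear reduction factor."""
    return 10.0 ** (gain_db / 10.0)


# Toy complex channel with a small estimation error on the first tap.
h = [1 + 0j, 0 + 1j]
h_hat = [1.1 + 0j, 0 + 1j]
print(round(nmse_db(h, h_hat), 2))
print(round(db_gain_to_ratio(8.0), 2))
```

Under this definition, an 8 dB improvement corresponds to the linear NMSE shrinking by a factor of roughly 6.3.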

SegOTA: Accelerating Over-the-Air Federated Learning with Segmented Transmission

Apr 13, 2025

Abstract:Federated learning (FL) with over-the-air computation efficiently utilizes the communication resources, but it can still experience significant latency when each device transmits a large number of model parameters to the server. This paper proposes the Segmented Over-The-Air (SegOTA) method for FL, which reduces latency by partitioning devices into groups and letting each group transmit only one segment of the model parameters in each communication round. Considering a multi-antenna server, we model the SegOTA transmission and reception process to establish an upper bound on the expected model learning optimality gap. We minimize this upper bound by formulating the per-round online optimization of device grouping and joint transmit-receive beamforming, for which we derive efficient closed-form solutions. Simulation results show that our proposed SegOTA substantially outperforms the conventional full-model OTA approach and other common alternatives.
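The segmentation idea can be illustrated with a toy sketch. It makes several assumptions that are not from the paper: devices are grouped round-robin (the paper optimizes the grouping), the channel is ideal and noise-free (plain averaging stands in for over-the-air aggregation with beamforming), and all function names are hypothetical.

```python
def split_segments(num_params, num_segments):
    """Return (start, end) index pairs that partition range(num_params)."""
    base, extra = divmod(num_params, num_segments)
    bounds, start = [], 0
    for s in range(num_segments):
        end = start + base + (1 if s < extra else 0)
        bounds.append((start, end))
        start = end
    return bounds


def round_robin_groups(num_devices, num_groups):
    """Assumed grouping rule: device d joins group d mod num_groups."""
    if num_devices < num_groups:
        raise ValueError("need at least one device per group")
    groups = [[] for _ in range(num_groups)]
    for d in range(num_devices):
        groups[d % num_groups].append(d)
    return groups


def segmented_aggregate(local_models, num_segments):
    """Each group contributes only its own segment; the server averages it."""
    num_params = len(local_models[0])
    bounds = split_segments(num_params, num_segments)
    groups = round_robin_groups(len(local_models), num_segments)
    aggregate = [0.0] * num_params
    for (start, end), group in zip(bounds, groups):
        for i in range(start, end):
            aggregate[i] = sum(local_models[d][i] for d in group) / len(group)
    return aggregate


models = [[1.0] * 4, [3.0] * 4, [5.0] * 4, [7.0] * 4]
print(segmented_aggregate(models, num_segments=2))  # [3.0, 3.0, 5.0, 5.0]
```

In this sketch each device sends only about 1/`num_segments` of the parameters per round, which is the source of the latency reduction described above.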

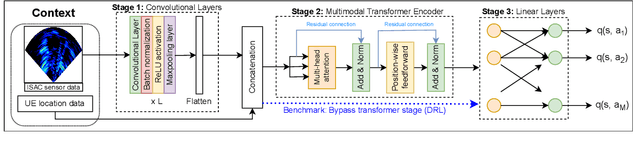

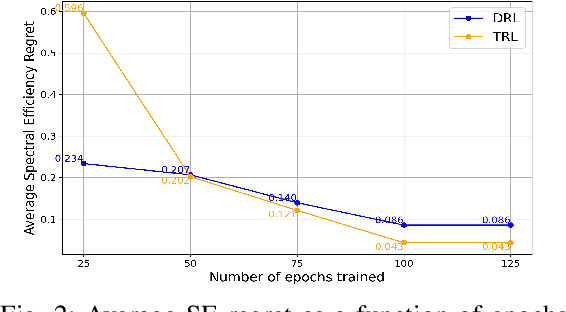

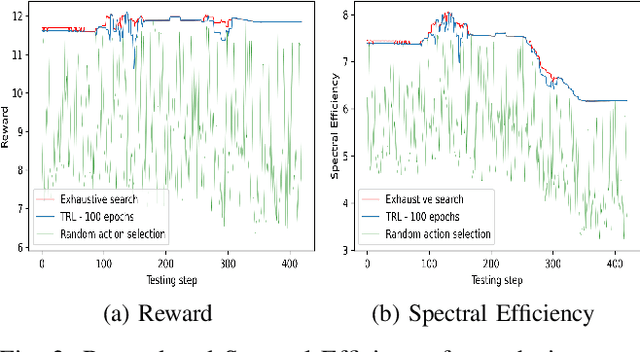

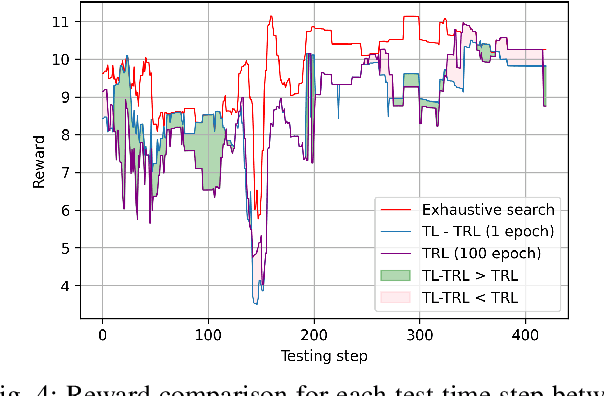

Beam Selection in ISAC using Contextual Bandit with Multi-modal Transformer and Transfer Learning

Mar 11, 2025

Abstract:Sixth generation (6G) wireless technology is anticipated to introduce Integrated Sensing and Communication (ISAC) as a transformative paradigm. ISAC unifies wireless communication and RADAR or other forms of sensing to optimize spectral and hardware resources. This paper presents a pioneering framework that leverages ISAC sensing data to enhance beam selection processes in complex indoor environments. By integrating multi-modal transformer models with a multi-agent contextual bandit algorithm, our approach utilizes ISAC sensing data to improve communication performance and achieves high spectral efficiency (SE). Specifically, the multi-modal transformer can capture inter-modal relationships, enhancing model generalization across diverse scenarios. Experimental evaluations on the DeepSense 6G dataset demonstrate that our model outperforms traditional deep reinforcement learning (DRL) methods, achieving superior beam prediction accuracy and adaptability. In the single-user scenario, we achieve an average SE regret improvement of 49.6% as compared to DRL. Furthermore, we employ transfer reinforcement learning to reduce training time and improve model performance in multi-user environments. In the multi-user scenario, this approach improves the average SE regret, a measure of how far the learned policy is from the optimal SE policy, by 19.7% compared to training from scratch, even when the latter is trained 100 times longer.
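The notion of average SE regret can be made concrete with a small sketch. It assumes the per-step regret is the gap between the best achievable SE and the SE of the chosen beam; the paper's exact definition may differ, and `average_se_regret` is a hypothetical name.

```python
def average_se_regret(se_per_beam, chosen_beams):
    """Mean gap between the best beam's SE and the chosen beam's SE.

    se_per_beam[t][b] is the SE of beam b at step t (assumed known here
    for evaluation); chosen_beams[t] is the beam index the policy picked.
    """
    regrets = [max(se_t) - se_t[b] for se_t, b in zip(se_per_beam, chosen_beams)]
    return sum(regrets) / len(regrets)


# Two steps, two beams: the first choice is suboptimal, the second is optimal.
se = [[1.0, 3.0], [2.0, 2.5]]
print(average_se_regret(se, [0, 1]))  # 1.0
```

A lower value means the learned policy sits closer to the optimal SE policy, so an "improvement" in regret is a reduction.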

Improving Wireless Federated Learning via Joint Downlink-Uplink Beamforming over Analog Transmission

Feb 04, 2025

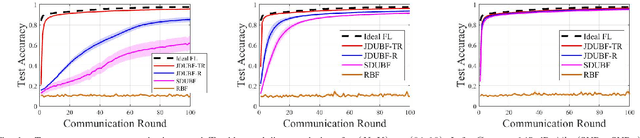

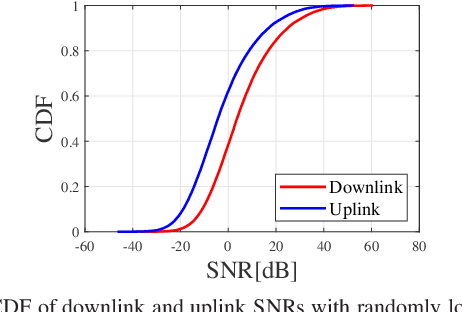

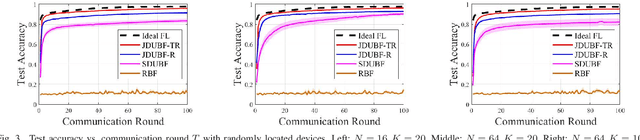

Abstract:Federated learning (FL) over wireless networks using analog transmission can efficiently utilize the communication resource but is susceptible to errors caused by noisy wireless links. In this paper, assuming a multi-antenna base station, we jointly design downlink-uplink beamforming to maximize FL training convergence over time-varying wireless channels. We derive the round-trip model updating equation and use it to analyze the FL training convergence to capture the effects of downlink and uplink beamforming and the local model training on the global model update. Aiming to maximize the FL training convergence rate, we propose a low-complexity joint downlink-uplink beamforming (JDUBF) algorithm, which adopts a greedy approach to decompose the multi-round joint optimization and convert it into per-round online joint optimization problems. The per-round problem is further decomposed into three subproblems over a block coordinate descent framework, where we show that each subproblem can be efficiently solved by projected gradient descent with fast closed-form updates. An efficient initialization method that leads to a closed-form initial point is also proposed to accelerate the convergence of JDUBF. Simulation demonstrates that JDUBF substantially outperforms the conventional separate-link beamforming design.

Generative AI-enabled Blockage Prediction for Robust Dual-Band mmWave Communication

Jan 20, 2025

Abstract:In mmWave wireless networks, signal blockages present a significant challenge due to the susceptibility to environmental moving obstructions. Recently, the availability of visual data has been leveraged to enhance blockage prediction accuracy in mmWave networks. In this work, we propose a Vision Transformer (ViT)-based approach for visual-aided blockage prediction that intelligently switches between mmWave and Sub-6 GHz frequencies to maximize network throughput and maintain reliable connectivity. Given the computational demands of processing visual data, we implement our solution within a hierarchical fog-cloud computing architecture, where fog nodes collaborate with cloud servers to efficiently manage computational tasks. This structure incorporates a generative AI-based compression technique that significantly reduces the volume of visual data transmitted between fog nodes and cloud centers. Our proposed method is tested with the real-world DeepSense 6G dataset, and according to the simulation results, it achieves a blockage prediction accuracy of 92.78% while reducing bandwidth usage by 70.31%.

Regularized Top-$k$: A Bayesian Framework for Gradient Sparsification

Jan 10, 2025

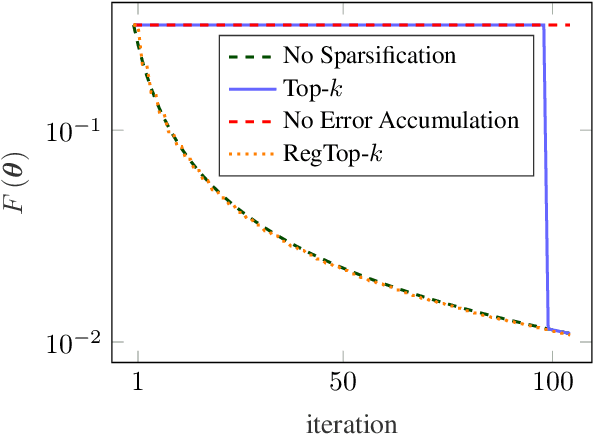

Abstract:Error accumulation is effective for gradient sparsification in distributed settings: initially-unselected gradient entries are eventually selected as their accumulated error exceeds a certain level. The accumulation essentially behaves as a scaling of the learning rate for the selected entries. Although this property prevents the slow-down of lateral movements in distributed gradient descent, it can deteriorate convergence in some settings. This work proposes a novel sparsification scheme that controls the learning rate scaling of error accumulation. The development of this scheme follows two major steps: first, gradient sparsification is formulated as an inverse probability (inference) problem, and the Bayesian optimal sparsification mask is derived as a maximum-a-posteriori estimator. Using the prior distribution inherited from Top-$k$, we derive a new sparsification algorithm which can be interpreted as a regularized form of Top-$k$. We call this algorithm regularized Top-$k$ (RegTop-$k$). It utilizes past aggregated gradients to evaluate posterior statistics of the next aggregation. It then prioritizes the local accumulated gradient entries based on these posterior statistics. We validate our derivation through numerical experiments. In distributed linear regression, it is observed that while Top-$k$ remains at a fixed distance from the global optimum, RegTop-$k$ converges to the global optimum at significantly higher compression ratios. We further demonstrate the generalization of this observation by employing RegTop-$k$ in distributed training of ResNet-18 on CIFAR-10, where it noticeably outperforms Top-$k$.
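For orientation, the baseline that RegTop-$k$ regularizes can be sketched as follows. This is plain Top-$k$ sparsification with error accumulation for a single worker, not the RegTop-$k$ algorithm itself, and the function name is hypothetical.

```python
def top_k_with_error_accumulation(gradient, error, k):
    """One step of Top-k sparsification with error feedback.

    Returns the sparse vector to transmit and the residual error that is
    carried over and added to the next local gradient.
    """
    accumulated = [g + e for g, e in zip(gradient, error)]
    order = sorted(range(len(accumulated)),
                   key=lambda i: abs(accumulated[i]), reverse=True)
    selected = set(order[:k])
    sparse = [a if i in selected else 0.0 for i, a in enumerate(accumulated)]
    new_error = [0.0 if i in selected else a for i, a in enumerate(accumulated)]
    return sparse, new_error


# Step 1: only the largest entry is sent; the rest is stored as error.
sparse, err = top_k_with_error_accumulation([1.0, 0.4, 0.3], [0.0, 0.0, 0.0], k=1)
print(sparse, err)  # [1.0, 0.0, 0.0] [0.0, 0.4, 0.3]

# Step 2: the accumulated error lets a previously unselected entry win.
sparse, err = top_k_with_error_accumulation([0.1, 0.4, 0.3], err, k=1)
print(sparse, err)  # [0.0, 0.8, 0.0] [0.1, 0.0, 0.6]
```

The second step shows the effect described in the abstract: the entry that was skipped earlier is sent with a doubled magnitude, which acts like a scaled learning rate for that coordinate.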

Multi-Modal Transformer and Reinforcement Learning-based Beam Management

Oct 22, 2024

Abstract:Beam management is an important technique to improve signal strength and reduce interference in wireless communication systems. Recently, there has been increasing interest in using diverse sensing modalities for beam management. However, it remains a big challenge to process multi-modal data efficiently and extract useful information. On the other hand, the recently emerging multi-modal transformer (MMT) is a promising technique that can process multi-modal data by capturing long-range dependencies. While MMT is highly effective in handling multi-modal data and providing robust beam management, integrating reinforcement learning (RL) further enhances its adaptability in dynamic environments. In this work, we propose a two-step beam management method by combining MMT with RL for dynamic beam index prediction. In the first step, we divide available beam indices into several groups and leverage MMT to process diverse data modalities to predict the optimal beam group. In the second step, we employ RL for fast beam decision-making within each group, which in turn maximizes throughput. Our proposed framework is tested on a 6G dataset. In this testing scenario, it achieves higher beam prediction accuracy and system throughput compared to both the MMT-only based method and the RL-only based method.
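The two-step selection can be sketched structurally as below. The sketch assumes contiguous, equal-size beam groups, treats the MMT output as a list of per-group scores, and replaces the RL agent with a greedy pick over per-beam value estimates; none of these details are specified in the abstract, and all names are hypothetical.

```python
def make_beam_groups(num_beams, group_size):
    """Assumed grouping: contiguous blocks of beam indices."""
    return [list(range(s, min(s + group_size, num_beams)))
            for s in range(0, num_beams, group_size)]


def select_beam(group_scores, q_values, groups):
    """Step 1: pick the highest-scoring group (stand-in for the MMT).
    Step 2: pick the best beam inside that group (stand-in for RL)."""
    best_group = max(range(len(groups)), key=lambda g: group_scores[g])
    return max(groups[best_group], key=lambda b: q_values[b])


groups = make_beam_groups(num_beams=8, group_size=4)
group_scores = [0.2, 0.8]                               # hypothetical MMT output
q_values = [9.0, 0.0, 0.0, 0.0, 1.0, 5.0, 2.0, 0.0]     # hypothetical RL values
print(select_beam(group_scores, q_values, groups))      # 5
```

Beam 0 has the largest value estimate overall but is never considered, because the first step restricts the search to the predicted group; this is what keeps the second-step decision small and fast.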
