Hossam S. Hassanein

SafeSlice: Enabling SLA-Compliant O-RAN Slicing via Safe Deep Reinforcement Learning

Mar 17, 2025

Abstract:Deep reinforcement learning (DRL)-based slicing policies have shown significant success in simulated environments but face challenges in physical systems such as open radio access networks (O-RANs) due to simulation-to-reality gaps. These policies often lack safety guarantees to ensure compliance with service level agreements (SLAs), such as the strict latency requirements of immersive applications. As a result, a deployed DRL slicing agent may make resource allocation (RA) decisions that degrade system performance, particularly in previously unseen scenarios. Real-world immersive applications require maintaining SLA constraints throughout deployment to prevent risky DRL exploration. In this paper, we propose SafeSlice to address both the cumulative (trajectory-wise) and instantaneous (state-wise) latency constraints of O-RAN slices. We incorporate the cumulative constraints by designing a sigmoid-based risk-sensitive reward function that reflects the slices' latency requirements. Moreover, we build a supervised learning cost model as part of a safety layer that projects the slicing agent's RA actions to the nearest safe actions, fulfilling instantaneous constraints. We conduct an exhaustive experiment that supports multiple services, including real virtual reality (VR) gaming traffic, to investigate the performance of SafeSlice under extreme and changing deployment conditions. SafeSlice achieves reductions of up to 83.23% in average cumulative latency, 93.24% in instantaneous latency violations, and 22.13% in resource consumption compared to the baselines. The results also indicate SafeSlice's robustness to changing the threshold configurations of latency constraints, a vital deployment scenario that will be realized by the O-RAN paradigm to empower mobile network operators (MNOs).
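The two mechanisms named in this abstract, a sigmoid-shaped latency reward and a projection onto the nearest safe action, can be illustrated with a minimal sketch. It is not the SafeSlice implementation: the function names, the `steepness` parameter, and the assumption that the learned cost model is linear in the action (which gives a closed-form projection) are all hypothetical choices made here for illustration.

```python
import math


def sigmoid_latency_reward(latency_ms, threshold_ms, steepness=0.5):
    """Hypothetical sigmoid reward: near 1 well below the latency threshold,
    exactly 0.5 at the threshold, and near 0 well above it."""
    # Clamp the exponent so math.exp cannot overflow for extreme latencies.
    z = max(-60.0, min(60.0, steepness * (latency_ms - threshold_ms)))
    return 1.0 / (1.0 + math.exp(z))


def project_to_safe_action(action, grad, offset, limit):
    """Project `action` onto the half-space {a : grad . a + offset <= limit}.

    Assumes (for illustration only) that the predicted latency cost is linear
    in the action. Returns the closest action, in Euclidean distance, whose
    predicted cost does not exceed `limit`. Resource-budget bounds on the
    action are deliberately ignored in this sketch.
    """
    cost = sum(g * a for g, a in zip(grad, action)) + offset
    excess = cost - limit
    if excess <= 0:
        return list(action)  # already predicted safe, leave unchanged
    norm_sq = sum(g * g for g in grad)
    if norm_sq == 0:
        raise ValueError("cost model does not depend on the action")
    scale = excess / norm_sq
    return [a - scale * g for a, g in zip(action, grad)]


if __name__ == "__main__":
    print(sigmoid_latency_reward(5.0, 10.0), sigmoid_latency_reward(15.0, 10.0))
    # Predicted latency falls as each slice receives more resources.
    print(project_to_safe_action([0.2, 0.8], [-10.0, -5.0], 20.0, 12.0))
```

In this sketch the reward shapes long-run behaviour, while the projection acts on every single decision, which mirrors the abstract's split between cumulative and instantaneous constraints.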

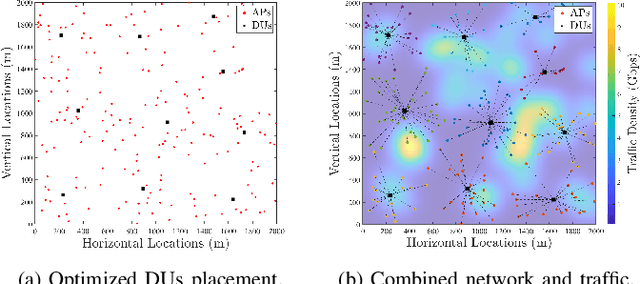

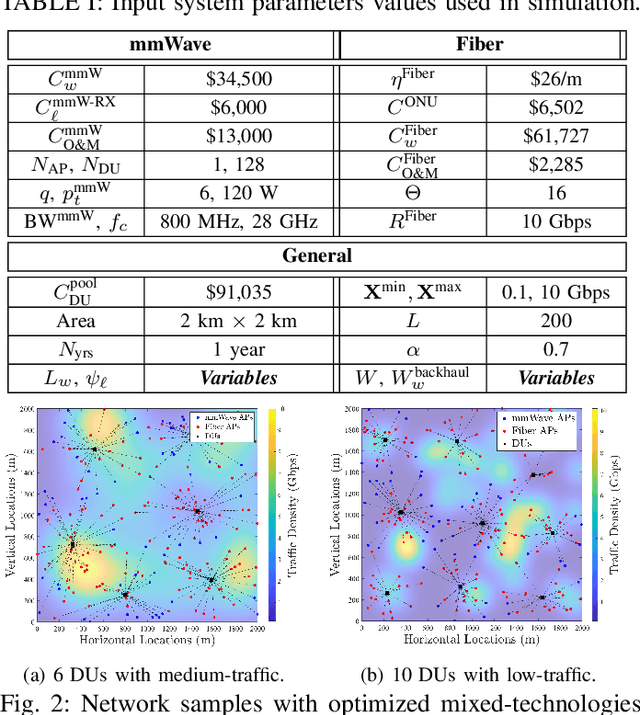

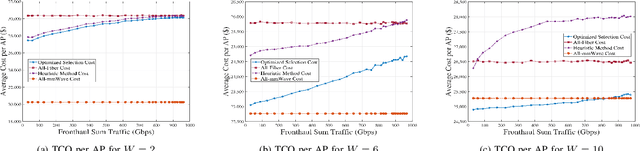

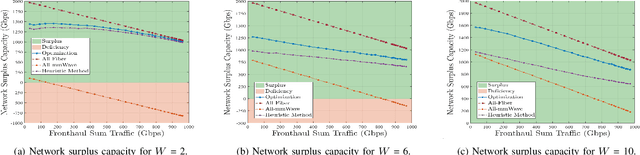

Traffic-Aware Cost-Optimized Fronthaul Planning for Ultra-Dense Networks

Dec 20, 2024

Abstract:The cost and limited capacity of fronthaul links pose significant challenges for the deployment of ultra-dense networks (UDNs), specifically for cell-free massive MIMO systems. Hence, cost-effective planning of reliable fronthaul networks is crucial for the future deployment of UDNs. We propose an optimization framework for traffic-aware hybrid fronthaul network planning, aimed at minimizing total costs through a mixed-integer linear program (MILP) that considers fiber optics and mmWave, along with optimizing key performance metrics. The results demonstrate the superiority of the proposed approach, highlighting its cost-effectiveness and performance advantages when compared to different deployment schemes. Moreover, our results reveal trends that are critical for Service Providers (SPs) during the fronthaul planning phase of future-proof networks that can adapt to evolving traffic demands.

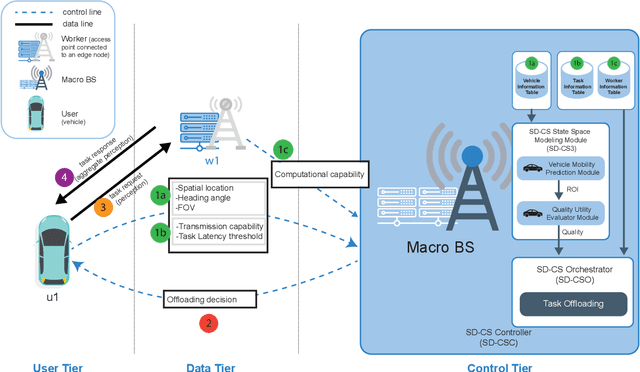

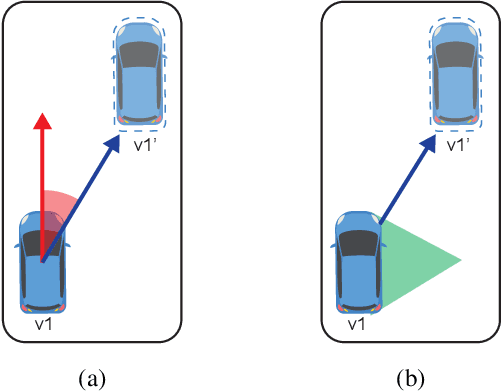

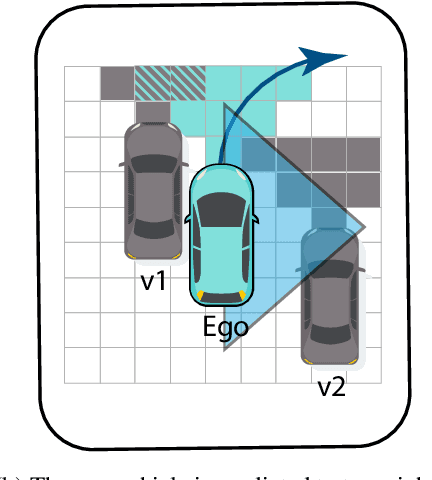

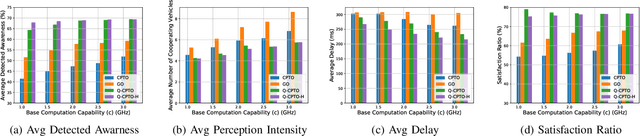

Quality-Aware Task Offloading for Cooperative Perception in Vehicular Edge Computing

May 31, 2024

Abstract:Task offloading in Vehicular Edge Computing (VEC) can advance cooperative perception (CP) to improve traffic awareness in Autonomous Vehicles. In this paper, we propose the Quality-aware Cooperative Perception Task Offloading (Q-CPTO) scheme. Q-CPTO is the first task offloading scheme that enhances traffic awareness by prioritizing the quality rather than the quantity of cooperative perception. Q-CPTO improves the quality of CP by curtailing perception redundancy and increasing the Value of Information (VOI) procured by each user. We use Kalman filters (KFs) for VOI assessment, predicting the next movement of each vehicle to estimate its region of interest. The estimated VOI is then integrated into the task offloading problem. We formulate the task offloading problem as an Integer Linear Program (ILP) that maximizes the VOI of users and reduces perception redundancy by leveraging the spatially diverse fields of view (FOVs) of vehicles, while adhering to strict latency requirements. We also propose the Q-CPTO-Heuristic (Q-CPTO-H) scheme to solve the task offloading problem in a time-efficient manner. Extensive evaluations show that Q-CPTO significantly outperforms prominent task offloading schemes by up to 14% and 20% in terms of response delay and traffic awareness, respectively. Furthermore, Q-CPTO-H closely approaches the optimal solution, with marginal gaps of up to 1.4% and 2.1% in terms of traffic awareness and the number of collaborating users, respectively, while reducing the runtime by up to 84%.
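As a rough illustration of how a Kalman filter can predict a vehicle's next movement, the sketch below implements only the standard prediction step for a one-dimensional constant-velocity model. The motion model, the simplified diagonal process noise `q`, and the function name are assumptions made here; the abstract does not specify the filter design used in Q-CPTO.

```python
def kf_predict(state, cov, dt, q=0.1):
    """Kalman prediction step for a 1-D constant-velocity model.

    state: (position, velocity)
    cov:   2x2 covariance as nested lists
    dt:    time step in seconds
    q:     simplified process noise added to the diagonal (an assumption)

    Implements x' = F x and P' = F P F^T + Q with F = [[1, dt], [0, 1]].
    """
    pos, vel = state
    predicted_state = (pos + dt * vel, vel)

    p00, p01 = cov[0]
    p10, p11 = cov[1]
    # F P
    fp00, fp01 = p00 + dt * p10, p01 + dt * p11
    fp10, fp11 = p10, p11
    # (F P) F^T, then add Q = q * I
    predicted_cov = [
        [fp00 + fp01 * dt + q, fp01],
        [fp10 + fp11 * dt, fp11 + q],
    ]
    return predicted_state, predicted_cov


if __name__ == "__main__":
    x, P = kf_predict((10.0, 2.0), [[1.0, 0.0], [0.0, 1.0]], dt=0.5)
    print(x)  # predicted position and velocity after 0.5 s
    print(P)  # uncertainty grows until the next measurement update
```

The predicted position, together with its growing uncertainty, is the kind of quantity that could bound a vehicle's region of interest before the next measurement arrives.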

Risk-Aware Accelerated Wireless Federated Learning with Heterogeneous Clients

Jan 17, 2024

Abstract:Wireless Federated Learning (FL) is an emerging distributed machine learning paradigm, particularly gaining momentum in domains with confidential and private data on mobile clients. However, the location-dependent performance, in terms of transmission rates and susceptibility to transmission errors, poses major challenges for wireless FL's convergence speed and accuracy. The challenge is more acute for hostile environments without a metric that authenticates the data quality and security profile of the clients. In this context, this paper proposes a novel risk-aware accelerated FL framework that accounts for the clients' heterogeneity in the amount of possessed data, transmission rates, transmission errors, and trustworthiness. Classifying clients according to their location-dependent performance and trustworthiness profiles, we propose a dynamic risk-aware global model aggregation scheme that allows clients to participate in descending order of their transmission rates and an ascending trustworthiness constraint. In particular, the transmission rate is the dominant participation criterion for initial rounds to accelerate the convergence speed. Our model then progressively relaxes the transmission rate restriction to explore more training data at cell-edge clients. The aggregation rounds incorporate a debiasing factor that accounts for transmission errors. Risk-awareness is enabled by a validation set, where the base station eliminates non-trustworthy clients at the fine-tuning stage. The proposed scheme is benchmarked against a conservative scheme (i.e., only allowing trustworthy devices) and an aggressive scheme (i.e., oblivious to the trust metric). The numerical results highlight the superiority of the proposed scheme in terms of accuracy and convergence speed when compared to both benchmarks.
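One common way to debias an aggregate when some client uploads are lost is inverse-probability weighting: each update that does arrive is scaled by the reciprocal of its success probability, so the aggregate matches the error-free weighted average in expectation. The sketch below shows that generic idea only. Whether the paper's debiasing factor takes this exact form is not stated in the abstract, and all names here are hypothetical.

```python
def debiased_aggregate(updates, data_sizes, success_probs, received):
    """Aggregate client updates with inverse-probability debiasing.

    updates:       list of equal-length update vectors, one per client
    data_sizes:    number of local samples per client (aggregation weights)
    success_probs: probability that each client's upload arrives intact
    received:      booleans, True if the upload arrived this round

    Each received update is weighted by (n_k / n_total) / p_k, so the result
    equals the error-free weighted average in expectation.
    """
    total = float(sum(data_sizes))
    aggregate = [0.0] * len(updates[0])
    for update, n, p, ok in zip(updates, data_sizes, success_probs, received):
        if not ok:
            continue  # lost upload contributes nothing this round
        weight = (n / total) / p
        for i, value in enumerate(update):
            aggregate[i] += weight * value
    return aggregate


if __name__ == "__main__":
    updates = [[1.0, 2.0], [3.0, 4.0]]
    print(debiased_aggregate(updates, [1, 3], [0.5, 1.0], [True, True]))
    print(debiased_aggregate(updates, [1, 3], [0.5, 1.0], [False, True]))
```

A cell-edge client with a low success probability is upweighted when it does get through, which compensates for the rounds in which its data is missing.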

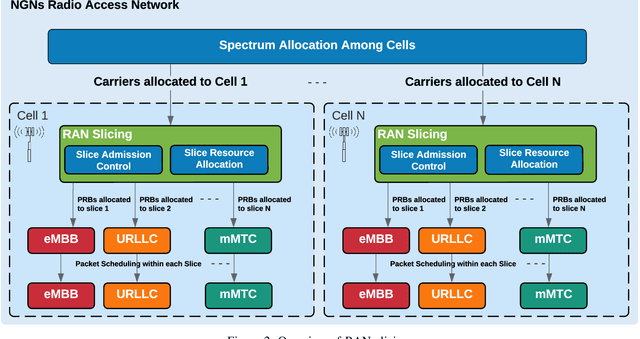

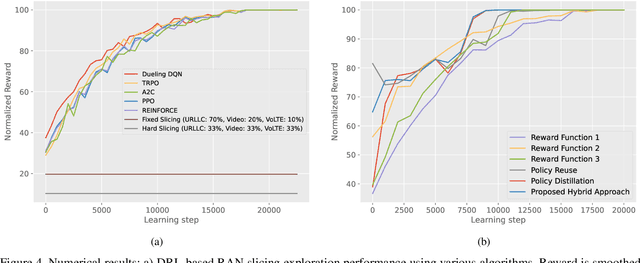

Safe and Accelerated Deep Reinforcement Learning-based O-RAN Slicing: A Hybrid Transfer Learning Approach

Sep 18, 2023

Abstract:The open radio access network (O-RAN) architecture supports intelligent network control algorithms as one of its core capabilities. Data-driven applications incorporate such algorithms to optimize radio access network (RAN) functions via RAN intelligent controllers (RICs). Deep reinforcement learning (DRL) algorithms are among the main approaches adopted in the O-RAN literature to solve dynamic radio resource management problems. However, despite the benefits introduced by the O-RAN RICs, the practical adoption of DRL algorithms in real network deployments falls behind. This is primarily due to the slow convergence and unstable performance exhibited by DRL agents upon deployment and when encountering previously unseen network conditions. In this paper, we address these challenges by proposing transfer learning (TL) as a core component of the training and deployment workflows for the DRL-based closed-loop control of O-RAN functionalities. To this end, we propose and design a hybrid TL-aided approach that leverages the advantages of both policy reuse and distillation TL methods to provide safe and accelerated convergence in DRL-based O-RAN slicing. We conduct a thorough experiment that accommodates multiple services, including real VR gaming traffic, to reflect practical scenarios of O-RAN slicing. We also propose and implement policy reuse and distillation-aided DRL and non-TL-aided DRL as three separate baselines. The proposed hybrid approach shows at least 7.7% and 20.7% improvements in the average initial reward value and the percentage of converged scenarios, and a 64.6% decrease in reward variance, while maintaining fast convergence and enhancing the generalizability compared with the baselines.

How Does Forecasting Affect the Convergence of DRL Techniques in O-RAN Slicing?

Sep 01, 2023

Abstract:The success of immersive applications such as virtual reality (VR) gaming and metaverse services depends on low latency and reliable connectivity. To provide seamless user experiences, the open radio access network (O-RAN) architecture and 6G networks are expected to play a crucial role. RAN slicing, a critical component of the O-RAN paradigm, enables network resources to be allocated based on the needs of immersive services, creating multiple virtual networks on a single physical infrastructure. In the O-RAN literature, deep reinforcement learning (DRL) algorithms are commonly used to optimize resource allocation. However, the practical adoption of DRL in live deployments has been sluggish. This is primarily due to the slow convergence and performance instabilities suffered by the DRL agents both upon initial deployment and when there are significant changes in network conditions. In this paper, we investigate the impact of time series forecasting of traffic demands on the convergence of the DRL-based slicing agents. For that, we conduct an exhaustive experiment that supports multiple services including real VR gaming traffic. We then propose a novel forecasting-aided DRL approach and its respective O-RAN practical deployment workflow to enhance DRL convergence. Our approach shows up to 22.8%, 86.3%, and 300% improvements in the average initial reward value, convergence rate, and number of converged scenarios, respectively, enhancing the generalizability of the DRL agents compared with the implemented baselines. The results also indicate that our approach is robust against forecasting errors and that forecasting models do not have to be ideal.

Toward Safe and Accelerated Deep Reinforcement Learning for Next-Generation Wireless Networks

Sep 16, 2022

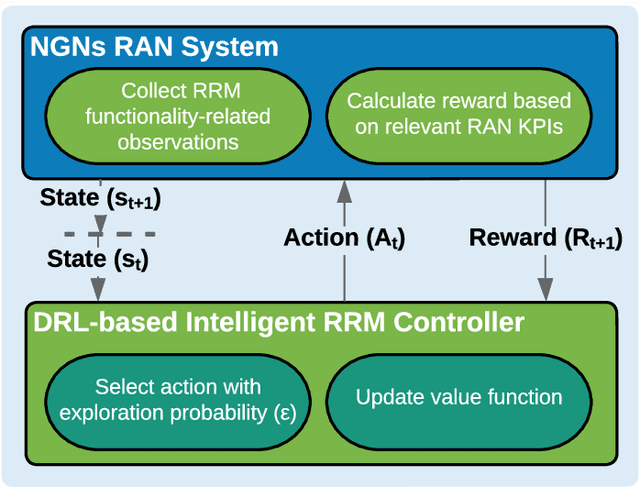

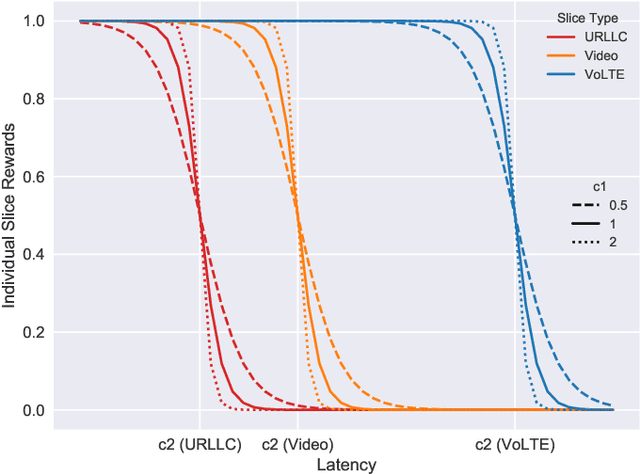

Abstract:Deep reinforcement learning (DRL) algorithms have recently gained wide attention in the wireless networks domain. They are considered promising approaches for solving dynamic radio resource management (RRM) problems in next-generation networks. Given their capabilities to build an approximate and continuously updated model of the wireless network environments, DRL algorithms can deal with the multifaceted complexity of such environments. Nevertheless, several challenges hinder the practical adoption of DRL in commercial networks. In this article, we first discuss two key practical challenges that are faced but rarely tackled when developing DRL-based RRM solutions. We argue that it is inevitable to address these DRL-related challenges for DRL to find its way to RRM commercial solutions. In particular, we discuss the need to have safe and accelerated DRL-based RRM solutions that mitigate the slow convergence and performance instability exhibited by DRL algorithms. We then review and categorize the main approaches used in the RRM domain to develop safe and accelerated DRL-based solutions. Finally, a case study is conducted to demonstrate the importance of having safe and accelerated DRL-based RRM solutions. We employ multiple variants of transfer learning (TL) techniques to accelerate the convergence of intelligent radio access network (RAN) slicing DRL-based controllers. We also propose a hybrid TL-based approach and sigmoid function-based rewards as examples of safe exploration in DRL-based RAN slicing.

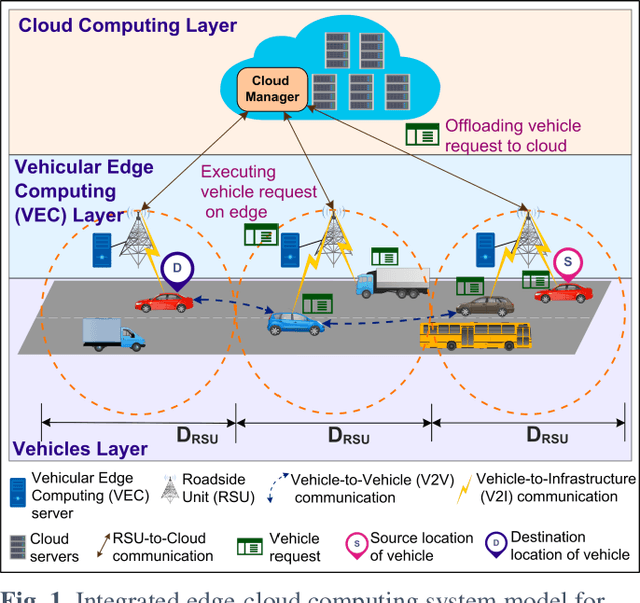

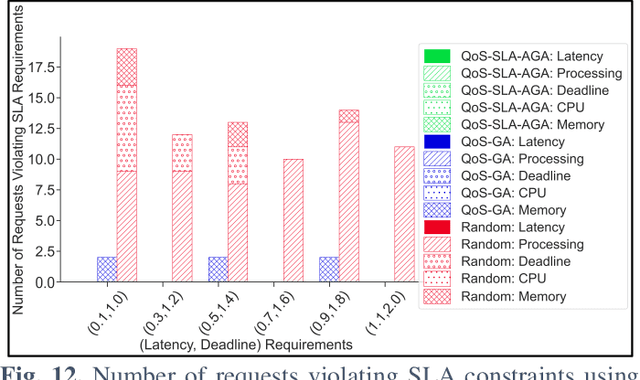

QoS-SLA-Aware Artificial Intelligence Adaptive Genetic Algorithm for Multi-Request Offloading in Integrated Edge-Cloud Computing System for the Internet of Vehicles

Jan 21, 2022

Abstract:Internet of Vehicles (IoV) over Vehicular Ad-hoc Networks (VANETs) is an emerging technology enabling the development of smart city applications for safer, more efficient, and more pleasant travel. These applications have stringent requirements expressed in Service Level Agreements (SLAs). Considering vehicles' limited computational and storage capabilities, application requests are offloaded into an integrated edge-cloud computing system. Existing offloading solutions focus on optimizing application Quality of Service (QoS) while respecting a single SLA constraint. They do not consider the impact of overlapping request processing. Very few contemplate the varying speed of a vehicle. This paper proposes a novel Artificial Intelligence (AI) QoS-SLA-aware genetic algorithm (GA) for multi-request offloading in a heterogeneous edge-cloud computing system, considering the impact of overlapping request processing and dynamic vehicle speed. The objective of the optimization algorithm is to improve the applications' QoS by minimizing the total execution time. The proposed algorithm integrates an adaptive penalty function to incorporate the SLA constraints in terms of latency, processing time, deadline, CPU, and memory requirements. Numerical experiments and a comparative analysis are conducted across our proposed QoS-SLA-aware GA, random, and GA baseline approaches. The results show that the proposed algorithm executes the requests 1.22 times faster on average compared to the random approach, with 59.9% fewer SLA violations. While the GA baseline approach increases the performance of the requests by 1.14 times, it has 19.8% more SLA violations than our approach.
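The idea of folding SLA constraints into a genetic algorithm's objective through an adaptive penalty can be sketched as follows. This is a generic penalty-method illustration, not the paper's function: the linear growth of the penalty weight with the generation count, the parameter names, and the convention that a positive entry in `violations` measures how far a constraint is exceeded are all assumptions made here.

```python
def penalized_fitness(exec_time, violations, generation,
                      base_weight=1.0, growth=0.1):
    """Fitness to minimize: execution time plus an adaptive SLA penalty.

    exec_time:  total execution time of the candidate offloading plan
    violations: per-constraint excess (e.g. latency, deadline, CPU, memory);
                values <= 0 mean the constraint is satisfied
    generation: current GA generation; the penalty weight grows with it so
                early generations can explore infeasible plans while later
                ones are pushed toward SLA-compliant plans (an assumed
                schedule, chosen here for illustration)
    """
    weight = base_weight * (1.0 + growth * generation)
    penalty = sum(max(0.0, v) for v in violations)
    return exec_time + weight * penalty


if __name__ == "__main__":
    # A feasible plan is scored by its execution time alone.
    print(penalized_fitness(10.0, [0.0, -1.0], generation=5))
    # The same violation costs more in later generations.
    print(penalized_fitness(10.0, [2.0], generation=0))
    print(penalized_fitness(10.0, [2.0], generation=10))
```

Because satisfied constraints contribute nothing, the penalty never rewards over-provisioning, so minimizing execution time remains the only objective among feasible plans.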

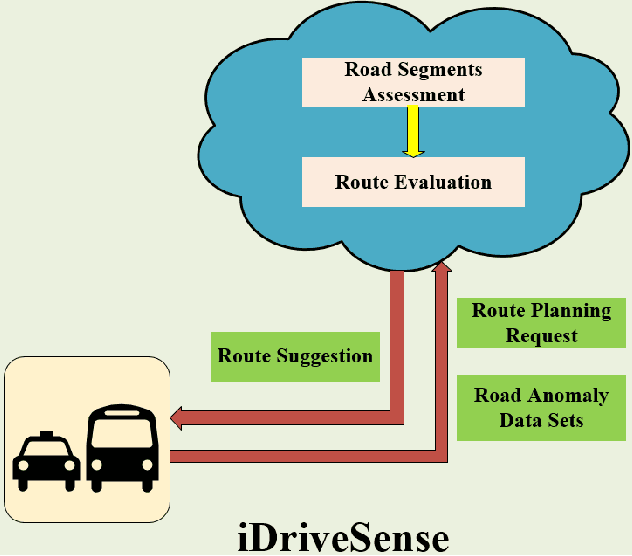

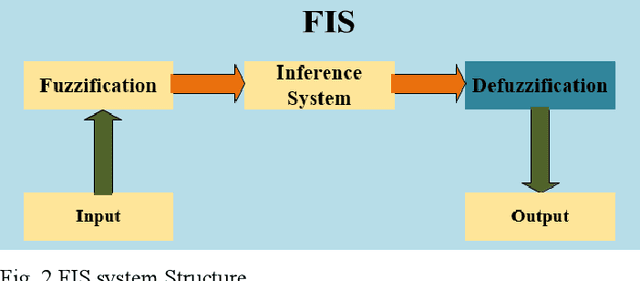

iDriveSense: Dynamic Route Planning Involving Roads Quality Information

Sep 08, 2018

Abstract:Owing to the rapid growth in information and communication technologies, smart cities have raised expectations in terms of efficient functioning and management. One key aspect of residents' daily comfort is assured through reliable traffic management and route planning. The majority of present trip planning applications and service providers base their recommendations on shortest paths and/or fastest routes. However, such suggestions may discount drivers' preferences with respect to safe and less disturbing trips. Road anomalies such as cracks, potholes, and manholes induce risky driving scenarios and can lead to vehicle damage and costly repairs. Accordingly, in this paper, we propose a crowdsensing-based dynamic route planning system. Leveraging both the vehicle motion sensors and the inertial sensors within smart devices, road surface types and anomalies are detected and categorized. In addition, the monitored events are geo-referenced utilizing GPS receivers on both vehicles and smart devices. Consequently, road segment assessments are conducted using fuzzy system models based on aspects such as the number of anomalies and their severity levels in each road segment. Afterward, another fuzzy model is adopted to recommend the best trip routes based on the road segment quality in each potential route. Extensive road experiments are held to build the proposed system and show its potential.
