Huned Materwala

From Conception to Deployment: Intelligent Stroke Prediction Framework using Machine Learning and Performance Evaluation

Apr 01, 2023

Abstract: Stroke is the second leading cause of death worldwide. Machine learning classification algorithms have been widely adopted for stroke prediction. However, these algorithms were evaluated using different datasets and evaluation metrics. Moreover, there is no comprehensive framework for stroke data analytics. This paper proposes an intelligent stroke prediction framework based on a critical examination of machine learning prediction algorithms in the literature. The five most used machine learning algorithms for stroke prediction are evaluated using a unified setup for objective comparison. Comparative analysis and numerical results reveal that the Random Forest algorithm is best suited for stroke prediction.

Forecasting COVID-19 Infections in Gulf Cooperation Council (GCC) Countries using Machine Learning

Mar 14, 2023

Abstract: COVID-19 has infected more than 68 million people worldwide since it was first detected about a year ago. Machine learning time series models have been implemented to forecast COVID-19 infections. In this paper, we develop time series models for the Gulf Cooperation Council (GCC) countries using the public COVID-19 dataset from Johns Hopkins. The dataset includes one year of cumulative COVID-19 cases, from 22/01/2020 to 22/01/2021. We developed different models for the countries under study based on the spatial distribution of the infection data. Our experimental results show that the developed models can forecast COVID-19 infections with high precision.

HealthEdge: A Machine Learning-Based Smart Healthcare Framework for Prediction of Type 2 Diabetes in an Integrated IoT, Edge, and Cloud Computing System

Jan 25, 2023

Abstract: Diabetes Mellitus has no permanent cure to date and is one of the leading causes of death globally. The alarming increase in diabetes calls for precautionary measures to avoid/predict its occurrence. This paper proposes HealthEdge, a machine learning-based smart healthcare framework for type 2 diabetes prediction in an integrated IoT-edge-cloud computing system. Numerical experiments and comparative analysis were carried out between the two most used machine learning algorithms in the literature, Random Forest (RF) and Logistic Regression (LR), using two real-life diabetes datasets. The results show that RF predicts diabetes with 6% more accuracy on average compared to LR.

Secure and Privacy-Preserving Automated End-to-End Integrated IoT-Edge-Artificial Intelligence-Blockchain Monitoring System for Diabetes Mellitus Prediction

Nov 13, 2022

Abstract: Diabetes Mellitus, one of the leading causes of death worldwide, has no cure to date and can lead to severe health complications, such as retinopathy, limb amputation, cardiovascular diseases, and neuronal disease, if left untreated. Consequently, it becomes crucial to take precautionary measures to avoid/predict the occurrence of diabetes. Machine learning approaches have been proposed and evaluated in the literature for diabetes prediction. This paper proposes an IoT-edge-Artificial Intelligence (AI)-blockchain system for diabetes prediction based on risk factors. The proposed system is underpinned by the blockchain to obtain a cohesive view of the risk factor data from patients across different hospitals and to ensure the security and privacy of the user data. Furthermore, we provide a comparative analysis of different medical sensors, devices, and methods to measure and collect the risk factor values in the system. Numerical experiments and comparative analysis were carried out between our proposed system, using the most accurate random forest (RF) model, and the two most used state-of-the-art machine learning approaches, Logistic Regression (LR) and Support Vector Machine (SVM), using three real-life diabetes datasets. The results show that the proposed system using RF predicts diabetes with 4.57% more accuracy on average compared to LR and SVM, with 2.87 times more execution time. Data balancing without feature selection does not show significant improvement. After feature selection, the performance improves by 1.14% and 0.02% for the PIMA Indian and Sylhet datasets respectively, while it decreases by 0.89% for MIMIC III.

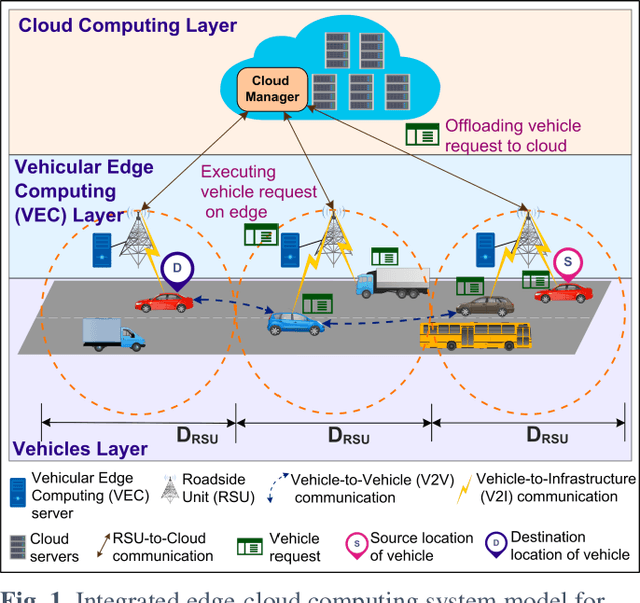

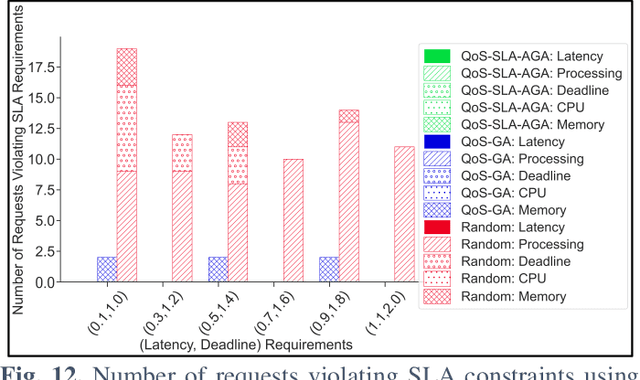

QoS-SLA-Aware Artificial Intelligence Adaptive Genetic Algorithm for Multi-Request Offloading in Integrated Edge-Cloud Computing System for the Internet of Vehicles

Jan 21, 2022

Abstract: Internet of Vehicles (IoV) over Vehicular Ad-hoc Networks (VANETs) is an emerging technology enabling the development of smart city applications for safer, more efficient, and more pleasant travel. These applications have stringent requirements expressed in Service Level Agreements (SLAs). Considering vehicles' limited computational and storage capabilities, application requests are offloaded into an integrated edge-cloud computing system. Existing offloading solutions focus on optimizing applications' Quality of Service (QoS) while respecting a single SLA constraint. They do not consider the impact of overlapping request processing. Very few contemplate the varying speed of a vehicle. This paper proposes a novel Artificial Intelligence (AI) QoS-SLA-aware genetic algorithm (GA) for multi-request offloading in a heterogeneous edge-cloud computing system, considering the impact of overlapping request processing and dynamic vehicle speed. The objective of the optimization algorithm is to improve the applications' QoS by minimizing the total execution time. The proposed algorithm integrates an adaptive penalty function to incorporate the SLA constraints in terms of latency, processing time, deadline, CPU, and memory requirements. Numerical experiments and comparative analysis are carried out between our proposed QoS-SLA-aware GA, random, and GA baseline approaches. The results show that the proposed algorithm executes the requests 1.22 times faster on average compared to the random approach, with 59.9% fewer SLA violations. While the GA baseline approach increases the performance of the requests by 1.14 times, it has 19.8% more SLA violations than our approach.
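The abstract does not give the form of the adaptive penalty function, so the following is only a minimal sketch of the general idea, not the paper's algorithm. It assumes a toy model in which requests assigned to the same server run one after another, the only SLA constraint is a per-request deadline, and the penalty weight grows when fewer than half of the population is feasible and shrinks otherwise. The request data, server speeds, and all names (`REQUESTS`, `SERVER_SPEED`, `evaluate`, `adapt_penalty`, `run_ga`) are hypothetical.

```python
import random

# Hypothetical workload: work units and deadline (seconds) per request.
REQUESTS = [
    {"work": 8.0, "deadline": 5.0},
    {"work": 4.0, "deadline": 3.0},
    {"work": 6.0, "deadline": 4.0},
    {"work": 2.0, "deadline": 2.0},
]
# Hypothetical servers: work units processed per second.
SERVER_SPEED = [1.0, 2.0, 4.0]


def evaluate(assignment):
    """Return (sum of completion times, number of missed deadlines).

    Assumption: requests on the same server are processed sequentially,
    so a request finishes when the server's queue up to it is done.
    """
    busy = [0.0] * len(SERVER_SPEED)
    total, violations = 0.0, 0
    for req, server in zip(REQUESTS, assignment):
        busy[server] += req["work"] / SERVER_SPEED[server]
        total += busy[server]
        if busy[server] > req["deadline"]:
            violations += 1
    return total, violations


def penalized_fitness(assignment, penalty):
    """Objective to minimize: execution time plus weighted violations."""
    total, violations = evaluate(assignment)
    return total + penalty * violations


def adapt_penalty(penalty, feasible_fraction, target=0.5, factor=1.5):
    """Raise the penalty when too few individuals are feasible, else lower it."""
    if feasible_fraction < target:
        return penalty * factor
    return max(penalty / factor, 1e-3)


def run_ga(generations=40, pop_size=20, seed=0):
    rng = random.Random(seed)
    n_servers = len(SERVER_SPEED)
    pop = [[rng.randrange(n_servers) for _ in REQUESTS] for _ in range(pop_size)]
    penalty = 1.0
    for _ in range(generations):
        # Adapt the penalty weight from the current share of feasible individuals.
        feasible = sum(1 for ind in pop if evaluate(ind)[1] == 0)
        penalty = adapt_penalty(penalty, feasible / pop_size)
        # Truncation selection: keep the better half as parents.
        pop.sort(key=lambda ind: penalized_fitness(ind, penalty))
        parents = pop[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, len(REQUESTS))  # one-point crossover
            child = a[:cut] + b[cut:]
            if rng.random() < 0.2:  # mutation: reassign one request
                child[rng.randrange(len(child))] = rng.randrange(n_servers)
            children.append(child)
        pop = parents + children
    best = min(pop, key=lambda ind: penalized_fitness(ind, penalty))
    return best, evaluate(best)


if __name__ == "__main__":
    best, (total, violations) = run_ga()
    print("assignment:", best, "total time:", total, "violations:", violations)
```

Folding violations into the objective, rather than discarding infeasible individuals, lets the search pass through infeasible assignments early on while the growing penalty weight pushes later generations toward SLA-compliant ones.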

Performance and Energy-Aware Bi-objective Tasks Scheduling for Cloud Data Centers

Apr 25, 2021

Abstract: Cloud computing enables remote execution of users' tasks. The pervasive adoption of cloud computing in smart city services and applications requires timely execution of tasks adhering to Quality of Service (QoS). However, the increasing use of computing servers exacerbates the issues of high energy consumption, operating costs, and environmental pollution. Maximizing the performance and minimizing the energy in a cloud data center is challenging. In this paper, we propose a performance and energy optimization bi-objective algorithm to trade off the conflicting performance and energy objectives. An evolutionary algorithm-based multi-objective optimization using system performance counters is proposed for the first time. The performance of the proposed model is evaluated using a realistic cloud dataset in a cloud computing environment. Our experimental results show that the proposed algorithm achieves higher performance and lower energy consumption compared to a state-of-the-art algorithm.
