Leila Ismail

ADAT: Time-Series-Aware Adaptive Transformer Architecture for Sign Language Translation

Apr 16, 2025

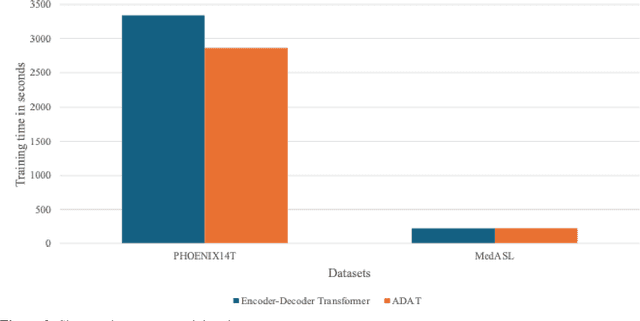

Abstract: Current sign language machine translation systems rely on recognizing hand movements, facial expressions and body postures, and natural language processing, to convert signs into text. Recent approaches use Transformer architectures to model long-range dependencies via positional encoding. However, they lack accuracy in recognizing fine-grained, short-range temporal dependencies between gestures captured at high frame rates. Moreover, their high computational complexity leads to inefficient training. To mitigate these issues, we propose an Adaptive Transformer (ADAT), which incorporates components for enhanced feature extraction and adaptive feature weighting through a gating mechanism to emphasize contextually relevant features while reducing training overhead and maintaining translation accuracy. To evaluate ADAT, we introduce MedASL, the first public medical American Sign Language dataset. In sign-to-gloss-to-text experiments, ADAT outperforms the encoder-decoder transformer, improving BLEU-4 accuracy by 0.1% while reducing training time by 14.33% on PHOENIX14T and 3.24% on MedASL. In sign-to-text experiments, it improves accuracy by 8.7% and reduces training time by 2.8% on PHOENIX14T and achieves 4.7% higher accuracy and 7.17% faster training on MedASL. Compared to encoder-only and decoder-only baselines in sign-to-text, ADAT is at least 6.8% more accurate despite being up to 12.1% slower due to its dual-stream structure.

GLoT: A Novel Gated-Logarithmic Transformer for Efficient Sign Language Translation

Feb 17, 2025

Abstract: Machine Translation has played a critical role in reducing language barriers, but its adaptation for Sign Language Machine Translation (SLMT) has been less explored. Existing work on SLMT mostly uses the Transformer neural network, which performs poorly because of the dynamic nature of sign language. In this paper, we propose a novel Gated-Logarithmic Transformer (GLoT) that captures the long-term temporal dependencies of sign language as time-series data. We perform a comprehensive evaluation of GLoT against the Transformer and Transformer-fusion models as baselines for Sign-to-Gloss-to-Text translation. Our results demonstrate that GLoT consistently outperforms the other models across all metrics. These findings underscore its potential to address the communication challenges faced by the Deaf and Hard of Hearing community.

From Rule-Based Models to Deep Learning Transformers Architectures for Natural Language Processing and Sign Language Translation Systems: Survey, Taxonomy and Performance Evaluation

Aug 27, 2024

Abstract: With the growing Deaf and Hard of Hearing population worldwide and the persistent shortage of certified sign language interpreters, there is a pressing need for an efficient, signs-driven, integrated end-to-end translation system, from sign to gloss to text and vice versa. There has been a wealth of research on machine translation and related reviews. However, few works address sign language machine translation while accounting for the continuous and dynamic nature of the language. This paper aims to address this void, providing a retrospective analysis of the temporal evolution of sign language machine translation algorithms and a taxonomy of Transformer architectures, the most used approach in language translation. We also present the requirements of a real-time Quality-of-Service sign language machine translation system underpinned by accurate deep learning algorithms. We propose future research directions for sign language translation systems.

ChatGPT, Let us Chat Sign Language: Experiments, Architectural Elements, Challenges and Research Directions

Jan 10, 2024

Abstract: ChatGPT is a language model based on Generative AI. Existing research on ChatGPT has focused on its use in various domains. However, its potential for Sign Language Translation (SLT) is yet to be explored. This paper addresses this void. We present GPT's evolution through a retrospective analysis of the improvements to its architecture for SLT. We explore ChatGPT's capabilities in translating different sign languages, paving the way to better accessibility for the deaf and hard-of-hearing community. Our experimental results indicate that ChatGPT can accurately translate from English to American (ASL), Australian (AUSLAN), and British (BSL) sign languages and from Arabic Sign Language (ArSL) to English with only one prompt iteration. However, the model failed to translate from Arabic to ArSL and from ASL, AUSLAN, and BSL to Arabic. Consequently, we present challenges and derive insights for future research directions.

Metaverse: A Vision, Architectural Elements, and Future Directions for Scalable and Realtime Virtual Worlds

Aug 24, 2023

Abstract: With the emergence of Cloud computing, Internet of Things-enabled Human-Computer Interfaces, Generative Artificial Intelligence, and highly accurate Machine and Deep-learning recognition and predictive models, along with the post-COVID-19 proliferation of social networking and remote communications, the Metaverse has gained considerable popularity. The Metaverse has the potential to extend the physical world using virtual and augmented reality so that users can interact seamlessly with the real and virtual worlds using avatars and holograms. It could change the way people interact on social media, collaborate in their work, perform marketing and business, teach, learn, and even access personalized healthcare. Several works in the literature examine the Metaverse in terms of wearable hardware devices and virtual reality gaming applications. However, the requirements of realizing the Metaverse in realtime and at a large scale have yet to be examined for the technology to be usable. To address this limitation, this paper presents the temporal evolution of Metaverse definitions and captures its evolving requirements. Consequently, we provide insights into Metaverse requirements. In addition to enabling technologies, we lay out architectural elements for scalable, reliable, and efficient Metaverse systems, and a classification of existing Metaverse applications, and we propose required future research directions.

From Conception to Deployment: Intelligent Stroke Prediction Framework using Machine Learning and Performance Evaluation

Apr 01, 2023

Abstract: Stroke is the second leading cause of death worldwide. Machine learning classification algorithms have been widely adopted for stroke prediction. However, these algorithms were evaluated using different datasets and evaluation metrics. Moreover, there is no comprehensive framework for stroke data analytics. This paper proposes an intelligent stroke prediction framework based on a critical examination of machine learning prediction algorithms in the literature. The five most used machine learning algorithms for stroke prediction are evaluated using a unified setup for objective comparison. Comparative analysis and numerical results reveal that the Random Forest algorithm is best suited for stroke prediction.

Towards a Deep Learning Pain-Level Detection Deployment at UAE for Patient-Centric-Pain Management and Diagnosis Support: Framework and Performance Evaluation

Mar 14, 2023

Abstract: The outbreak of the COVID-19 pandemic revealed the criticality of timely intervention in a situation exacerbated by a shortage of medical staff and equipment. Pain-level screening is the initial step toward identifying the severity of patient conditions. Automatic recognition of state and feelings helps in identifying patient symptoms so that immediate, adequate action can be taken and a patient-centric medical plan tailored to the patient's state can be provided. In this paper, we propose a framework for pain-level detection for deployment in the United Arab Emirates and assess its performance using the most used approaches in the literature. Our results show that deploying a deep learning pain-level detection framework is promising for identifying the pain level accurately.

Forecasting COVID-19 Infections in Gulf Cooperation Council (GCC) Countries using Machine Learning

Mar 14, 2023

Abstract: COVID-19 has infected more than 68 million people worldwide since it was first detected about a year ago. Machine learning time series models have been implemented to forecast COVID-19 infections. In this paper, we develop time series models for the Gulf Cooperation Council (GCC) countries using the public COVID-19 dataset from Johns Hopkins. The dataset includes the one-year cumulative COVID-19 cases between 22/01/2020 and 22/01/2021. We developed different models for the countries under study based on the spatial distribution of the infection data. Our experimental results show that the developed models can forecast COVID-19 infections with high precision.

HealthEdge: A Machine Learning-Based Smart Healthcare Framework for Prediction of Type 2 Diabetes in an Integrated IoT, Edge, and Cloud Computing System

Jan 25, 2023

Abstract: Diabetes Mellitus has no permanent cure to date and is one of the leading causes of death globally. The alarming increase in diabetes calls for the need to take precautionary measures to avoid/predict the occurrence of diabetes. This paper proposes HealthEdge, a machine learning-based smart healthcare framework for type 2 diabetes prediction in an integrated IoT-edge-cloud computing system. Numerical experiments and comparative analysis were carried out between the two most used machine learning algorithms in the literature, Random Forest (RF) and Logistic Regression (LR), using two real-life diabetes datasets. The results show that RF predicts diabetes with 6% more accuracy on average compared to LR.

Secure and Privacy-Preserving Automated End-to-End Integrated IoT-Edge-Artificial Intelligence-Blockchain Monitoring System for Diabetes Mellitus Prediction

Nov 13, 2022

Abstract: Diabetes Mellitus, one of the leading causes of death worldwide, has no cure to date and can lead to severe health complications, such as retinopathy, limb amputation, cardiovascular diseases, and neuronal disease, if left untreated. Consequently, it becomes crucial to take precautionary measures to avoid/predict the occurrence of diabetes. Machine learning approaches have been proposed and evaluated in the literature for diabetes prediction. This paper proposes an IoT-edge-Artificial Intelligence (AI)-blockchain system for diabetes prediction based on risk factors. The proposed system is underpinned by the blockchain to obtain a cohesive view of the risk-factor data from patients across different hospitals and to ensure security and privacy of the user data. Furthermore, we provide a comparative analysis of different medical sensors, devices, and methods to measure and collect the risk-factor values in the system. Numerical experiments and comparative analysis were carried out between our proposed system, using the most accurate random forest (RF) model, and the two most used state-of-the-art machine learning approaches, Logistic Regression (LR) and Support Vector Machine (SVM), using three real-life diabetes datasets. The results show that the proposed system using RF predicts diabetes with 4.57% more accuracy on average compared to LR and SVM, with 2.87 times more execution time. Data balancing without feature selection does not show significant improvement. After feature selection, performance improves by 1.14% and 0.02% for the PIMA Indian and Sylhet datasets respectively, while it decreases by 0.89% for MIMIC III.
