Nada Shahin

ADAT: Time-Series-Aware Adaptive Transformer Architecture for Sign Language Translation

Apr 16, 2025

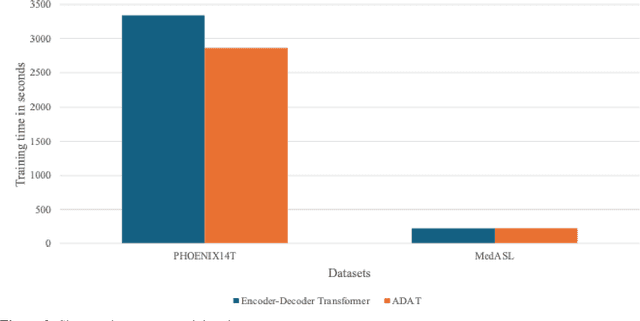

Abstract: Current sign language machine translation systems rely on recognizing hand movements, facial expressions and body postures, and natural language processing, to convert signs into text. Recent approaches use Transformer architectures to model long-range dependencies via positional encoding. However, they lack accuracy in recognizing fine-grained, short-range temporal dependencies between gestures captured at high frame rates. Moreover, their high computational complexity leads to inefficient training. To mitigate these issues, we propose an Adaptive Transformer (ADAT), which incorporates components for enhanced feature extraction and adaptive feature weighting through a gating mechanism to emphasize contextually relevant features while reducing training overhead and maintaining translation accuracy. To evaluate ADAT, we introduce MedASL, the first public medical American Sign Language dataset. In sign-to-gloss-to-text experiments, ADAT outperforms the encoder-decoder transformer, improving BLEU-4 accuracy by 0.1% while reducing training time by 14.33% on PHOENIX14T and 3.24% on MedASL. In sign-to-text experiments, it improves accuracy by 8.7% and reduces training time by 2.8% on PHOENIX14T and achieves 4.7% higher accuracy and 7.17% faster training on MedASL. Compared to encoder-only and decoder-only baselines in sign-to-text, ADAT is at least 6.8% more accurate despite being up to 12.1% slower due to its dual-stream structure.

GLoT: A Novel Gated-Logarithmic Transformer for Efficient Sign Language Translation

Feb 17, 2025

Abstract: Machine Translation has played a critical role in reducing language barriers, but its adaptation for Sign Language Machine Translation (SLMT) has been less explored. Existing works on SLMT mostly use the Transformer neural network, which exhibits low performance due to the dynamic nature of sign language. In this paper, we propose a novel Gated-Logarithmic Transformer (GLoT) that captures the long-term temporal dependencies of sign language as time-series data. We perform a comprehensive evaluation of GLoT, with the transformer and transformer-fusion models as baselines, for Sign-to-Gloss-to-Text translation. Our results demonstrate that GLoT consistently outperforms the other models across all metrics. These findings underscore its potential to address the communication challenges faced by the Deaf and Hard of Hearing community.

From Rule-Based Models to Deep Learning Transformers Architectures for Natural Language Processing and Sign Language Translation Systems: Survey, Taxonomy and Performance Evaluation

Aug 27, 2024

Abstract: With the growing Deaf and Hard of Hearing population worldwide and the persistent shortage of certified sign language interpreters, there is a pressing need for an efficient, signs-driven, integrated end-to-end translation system, from sign to gloss to text and vice versa. There has been a wealth of research on machine translation and related reviews. However, few works address sign language machine translation while considering the particularity of the language being continuous and dynamic. This paper aims to address this void, providing a retrospective analysis of the temporal evolution of sign language machine translation algorithms and a taxonomy of the Transformer architectures, the most used approach in language translation. We also present the requirements of a real-time Quality-of-Service sign language machine translation system underpinned by accurate deep learning algorithms. We propose future research directions for sign language translation systems.

ChatGPT, Let us Chat Sign Language: Experiments, Architectural Elements, Challenges and Research Directions

Jan 10, 2024

Abstract: ChatGPT is a language model based on Generative AI. Existing research on ChatGPT has focused on its use in various domains. However, its potential for Sign Language Translation (SLT) is yet to be explored. This paper addresses this void. We present GPT's evolution, aiming at a retrospective analysis of the improvements to its architecture for SLT. We explore ChatGPT's capabilities in translating different sign languages, paving the way to better accessibility for the deaf and hard-of-hearing community. Our experimental results indicate that ChatGPT can accurately translate from English to American (ASL), Australian (AUSLAN), and British (BSL) sign languages and from Arabic Sign Language (ArSL) to English with only one prompt iteration. However, the model failed to translate from Arabic to ArSL and from ASL, AUSLAN, and BSL to Arabic. Consequently, we present challenges and derive insights for future research directions.
