Harshvardhan Sikka

MultiNet: An Open-Source Software Toolkit & Benchmark Suite for the Evaluation and Adaptation of Multimodal Action Models

Jun 10, 2025

Abstract:Recent innovations in multimodal action models represent a promising direction for developing general-purpose agentic systems, combining visual understanding, language comprehension, and action generation. We introduce MultiNet - a novel, fully open-source benchmark and surrounding software ecosystem designed to rigorously evaluate and adapt models across vision, language, and action domains. We establish standardized evaluation protocols for assessing vision-language models (VLMs) and vision-language-action models (VLAs), and provide open source software to download relevant data, models, and evaluations. Additionally, we provide a composite dataset with over 1.3 trillion tokens of image captioning, visual question answering, commonsense reasoning, robotic control, digital game-play, simulated locomotion/manipulation, and many more tasks. The MultiNet benchmark, framework, toolkit, and evaluation harness have been used in downstream research on the limitations of VLA generalization.

A4L: An Architecture for AI-Augmented Learning

May 08, 2025

Abstract:AI promises personalized learning and scalable education. As AI agents increasingly permeate education in support of teaching and learning, there is a critical and urgent need for data architectures for collecting and analyzing data on learning, and feeding the results back to teachers, learners, and the AI agents for personalization of learning at scale. At the National AI Institute for Adult Learning and Online Education, we are developing an Architecture for AI-Augmented Learning (A4L) for supporting adult learning through online education. We present the motivations, goals, and requirements of the A4L architecture. We describe preliminary applications of A4L and discuss how it advances the goals of making learning more personalized and scalable.

Benchmarking Vision, Language, & Action Models in Procedurally Generated, Open Ended Action Environments

May 08, 2025

Abstract:Vision-language-action (VLA) models represent an important step toward general-purpose robotic systems by integrating visual perception, language understanding, and action execution. However, systematic evaluation of these models, particularly their zero-shot generalization capabilities in out-of-distribution (OOD) environments, remains limited. In this paper, we introduce MultiNet v0.2, a comprehensive benchmark designed to evaluate and analyze the generalization performance of state-of-the-art VLM and VLA models (including GPT-4o, GPT-4.1, OpenVLA, Pi0 Base, and Pi0 FAST) on diverse procedural tasks from the Procgen benchmark. Our analysis reveals several critical insights: (1) all evaluated models exhibit significant limitations in zero-shot generalization to OOD tasks, with performance heavily influenced by factors such as action representation and task complexity; (2) VLAs generally outperform other models due to their robust architectural design; and (3) VLM variants demonstrate substantial improvements when constrained appropriately, highlighting the sensitivity of model performance to precise prompt engineering.

Designing a Communication Bridge between Communities: Participatory Design for a Question-Answering AI Agent

Aug 01, 2023

Abstract:How do we design an AI system that is intended to act as a communication bridge between two user communities with different mental models and vocabularies? Skillsync is an interactive environment that engages employers (companies) and training providers (colleges) in a sustained dialogue to help them achieve the goal of building a training proposal that successfully meets the needs of the employers and employees. We used a variation of participatory design to elicit requirements for developing AskJill, a question-answering agent that explains how Skillsync works and thus acts as a communication bridge between company and college users. Our study finds that participatory design was useful in guiding the requirements gathering and eliciting user questions for the development of AskJill. Our results also suggest that the two Skillsync user communities perceived glossary assistance as a key feature that AskJill needs to offer, and they would benefit from such a shared vocabulary.

Human-AI Interaction Design in Machine Teaching

Jun 10, 2022

Abstract:Machine Teaching (MT) is an interactive process where a human and a machine interact with the goal of training a machine learning (ML) model for a specified task. The human teacher communicates their task expertise and the machine student gathers the required data and knowledge to produce an ML model. MT systems are developed to jointly minimize the time spent on teaching and the learner's error rate. The design of human-AI interaction in an MT system not only impacts the teaching efficiency, but also indirectly influences the ML performance by affecting the teaching quality. In this paper, we build upon our previous work where we proposed an MT framework with three components, viz., the teaching interface, the machine learner, and the knowledge base, and focus on the human-AI interaction design involved in realizing the teaching interface. We outline design decisions that need to be addressed in developing an MT system beginning from an ML task. The paper follows the Socratic method entailing a dialogue between a curious student and a wise teacher.

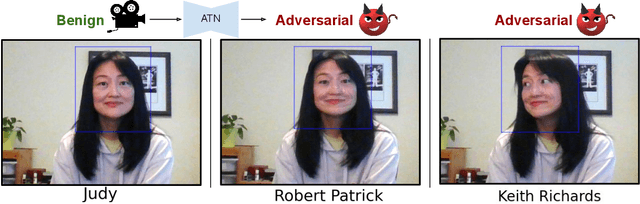

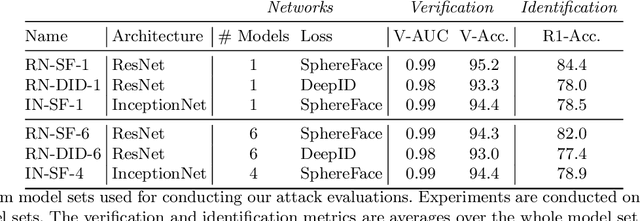

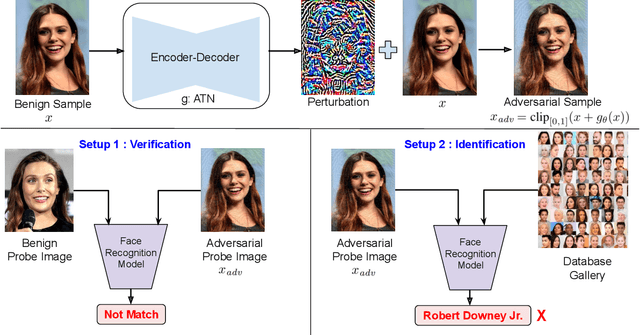

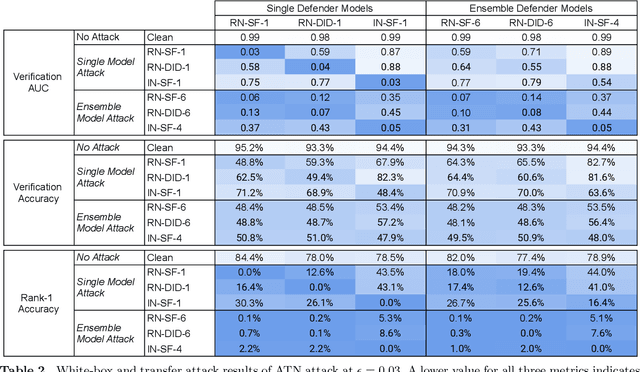

ReFace: Real-time Adversarial Attacks on Face Recognition Systems

Jun 09, 2022

Abstract:Deep neural network based face recognition models have been shown to be vulnerable to adversarial examples. However, many of the past attacks require the adversary to solve an input-dependent optimization problem using gradient descent which makes the attack impractical in real-time. These adversarial examples are also tightly coupled to the attacked model and are not as successful in transferring to different models. In this work, we propose ReFace, a real-time, highly-transferable attack on face recognition models based on Adversarial Transformation Networks (ATNs). ATNs model adversarial example generation as a feed-forward neural network. We find that the white-box attack success rate of a pure U-Net ATN falls substantially short of gradient-based attacks like PGD on large face recognition datasets. We therefore propose a new architecture for ATNs that closes this gap while maintaining a 10000x speedup over PGD. Furthermore, we find that at a given perturbation magnitude, our ATN adversarial perturbations are more effective in transferring to new face recognition models than PGD. ReFace attacks can successfully deceive commercial face recognition services in a transfer attack setting and reduce face identification accuracy from 82% to 16.4% for AWS SearchFaces API and Azure face verification accuracy from 91% to 50.1%.

Explanation as Question Answering based on a Task Model of the Agent's Design

Jun 08, 2022

Abstract:We describe a stance towards the generation of explanations in AI agents that is both human-centered and design-based. We collect questions about the working of an AI agent through participatory design by focus groups. We capture an agent's design through a Task-Method-Knowledge model that explicitly specifies the agent's tasks and goals, as well as the mechanisms, knowledge and vocabulary it uses for accomplishing the tasks. We illustrate our approach through the generation of explanations in Skillsync, an AI agent that links companies and colleges for worker upskilling and reskilling. In particular, we embed a question-answering agent called AskJill in Skillsync, where AskJill contains a TMK model of Skillsync's design. AskJill presently answers human-generated questions about Skillsync's tasks and vocabulary, and thereby helps explain how it produces its recommendations.

A Framework for Interactive Knowledge-Aided Machine Teaching

Apr 21, 2022

Abstract:Machine Teaching (MT) is an interactive process where humans train a machine learning model by playing the role of a teacher. The process of designing an MT system involves decisions that can impact both efficiency of human teachers and performance of machine learners. Previous research has proposed and evaluated specific MT systems but there is limited discussion on a general framework for designing them. We propose a framework for designing MT systems and also detail a system for the text classification problem as a specific instance. Our framework focuses on three components i.e. teaching interface, machine learner, and knowledge base; and their relations describe how each component can benefit the others. Our preliminary experiments show how MT systems can reduce both human teaching time and machine learner error rate.

Agent Smith: Teaching Question Answering to Jill Watson

Dec 22, 2021

Abstract:Building AI agents can be costly. Consider a question answering agent such as Jill Watson that automatically answers students' questions on the discussion forums of online classes based on their syllabi and other course materials. Training a Jill on the syllabus of a new online class can take a hundred hours or more. Machine teaching - interactive teaching of an AI agent using synthetic data sets - can reduce the training time because it combines the advantages of knowledge-based AI, machine learning using large data sets, and interactive human-in-loop training. We describe Agent Smith, an interactive machine teaching agent that reduces the time taken to train a Jill for a new online class by an order of magnitude.

WeightScale: Interpreting Weight Change in Neural Networks

Jul 07, 2021

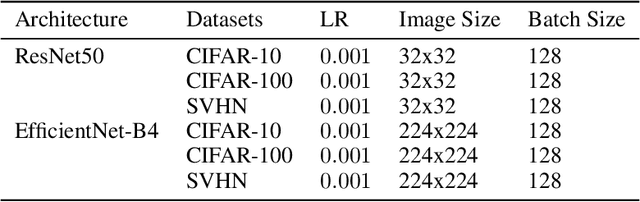

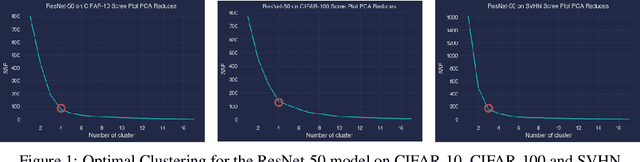

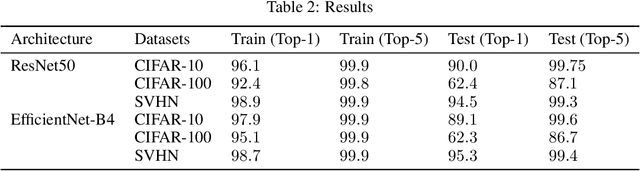

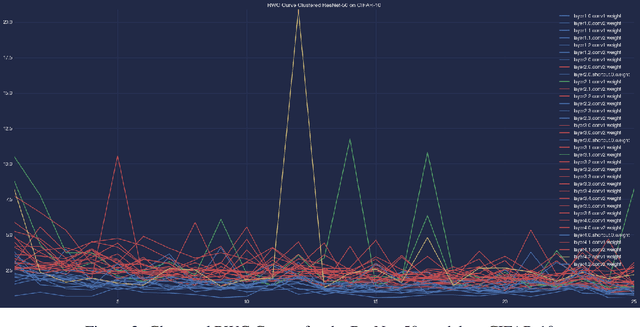

Abstract:Interpreting the learning dynamics of neural networks can provide useful insights into how networks learn and the development of better training and design approaches. We present an approach to interpret learning in neural networks by measuring relative weight change on a per-layer basis and dynamically aggregating emerging trends through a combination of dimensionality reduction and clustering, which allows us to scale to very deep networks. We use this approach to investigate learning in the context of vision tasks across a variety of state-of-the-art networks and provide insights into the learning behavior of these networks, including how task complexity affects layer-wise learning in deeper layers of networks.
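
As a rough illustration of what a per-layer relative weight change measurement can look like, here is a minimal Python sketch. The abstract does not give the formula, so the definition used here (L1 norm of the weight update divided by the L1 norm of the previous weights) is an assumption, and the function names, layer names, and toy arrays are hypothetical.

```python
import numpy as np

def relative_weight_change(prev, curr, eps=1e-12):
    """Assumed definition: ||curr - prev||_1 / ||prev||_1 for one layer."""
    prev = np.asarray(prev, dtype=float)
    curr = np.asarray(curr, dtype=float)
    # eps guards against division by zero for an all-zero layer
    return float(np.abs(curr - prev).sum() / (np.abs(prev).sum() + eps))

def layerwise_rwc(prev_layers, curr_layers):
    """Apply the measure to every layer in two weight snapshots (dicts of arrays)."""
    return {
        name: relative_weight_change(prev_layers[name], curr_layers[name])
        for name in prev_layers
    }

# Toy snapshots of two hypothetical layers before and after one training step
prev_layers = {"conv1": np.array([1.0, -2.0, 1.0]), "fc": np.array([4.0, 4.0])}
curr_layers = {"conv1": np.array([1.5, -2.0, 0.5]), "fc": np.array([4.0, 5.0])}

rwc = layerwise_rwc(prev_layers, curr_layers)
for name, value in rwc.items():
    print(f"{name}: {value:.3f}")
```

Tracking these values per layer across epochs would give the kind of trend that could then be passed to dimensionality reduction and clustering; that aggregation step is not sketched here.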
