Spencer Rugaber

A4L: An Architecture for AI-Augmented Learning

May 08, 2025

Abstract: AI promises personalized learning and scalable education. As AI agents increasingly permeate education in support of teaching and learning, there is a critical and urgent need for data architectures for collecting and analyzing data on learning, and feeding the results back to teachers, learners, and the AI agents for personalization of learning at scale. At the National AI Institute for Adult Learning and Online Education, we are developing an Architecture for AI-Augmented Learning (A4L) for supporting adult learning through online education. We present the motivations, goals, and requirements of the A4L architecture. We describe preliminary applications of A4L and discuss how it advances the goals of making learning more personalized and scalable.

Designing a Communication Bridge between Communities: Participatory Design for a Question-Answering AI Agent

Aug 01, 2023

Abstract: How do we design an AI system that is intended to act as a communication bridge between two user communities with different mental models and vocabularies? Skillsync is an interactive environment that engages employers (companies) and training providers (colleges) in a sustained dialogue to help them achieve the goal of building a training proposal that successfully meets the needs of the employers and employees. We used a variation of participatory design to elicit requirements for developing AskJill, a question-answering agent that explains how Skillsync works and thus acts as a communication bridge between company and college users. Our study finds that participatory design was useful in guiding the requirements gathering and eliciting user questions for the development of AskJill. Our results also suggest that the two Skillsync user communities perceived glossary assistance as a key feature that AskJill needs to offer, and they would benefit from such a shared vocabulary.

Explanation as Question Answering based on a Task Model of the Agent's Design

Jun 08, 2022

Abstract: We describe a stance towards the generation of explanations in AI agents that is both human-centered and design-based. We collect questions about the working of an AI agent through participatory design by focus groups. We capture an agent's design through a Task-Method-Knowledge model that explicitly specifies the agent's tasks and goals, as well as the mechanisms, knowledge and vocabulary it uses for accomplishing the tasks. We illustrate our approach through the generation of explanations in Skillsync, an AI agent that links companies and colleges for worker upskilling and reskilling. In particular, we embed a question-answering agent called AskJill in Skillsync, where AskJill contains a TMK model of Skillsync's design. AskJill presently answers human-generated questions about Skillsync's tasks and vocabulary, and thereby helps explain how it produces its recommendations.

Understanding Self-Directed Learning in an Online Laboratory

Jun 06, 2022

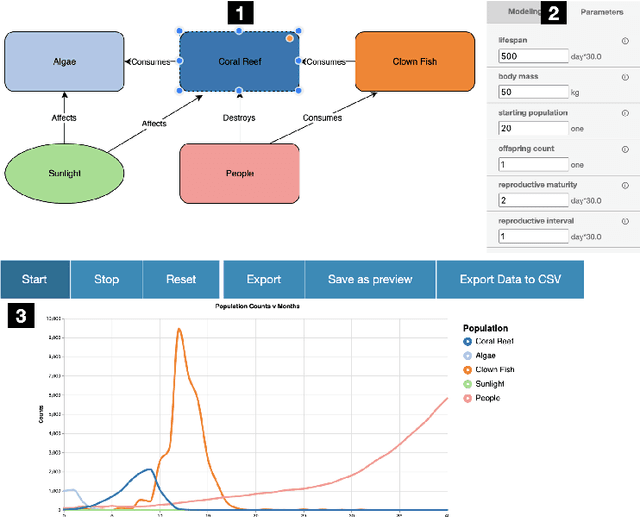

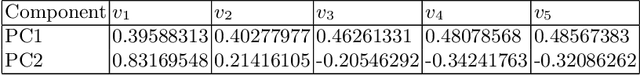

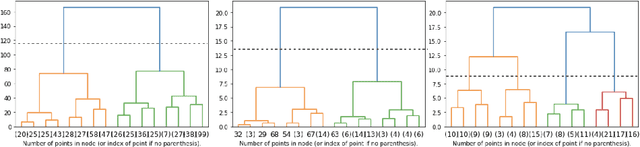

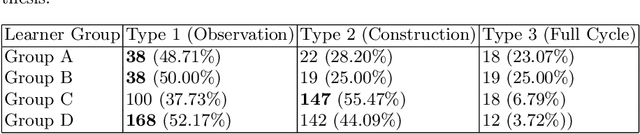

Abstract: We describe a study on the use of an online laboratory for self-directed learning by constructing and simulating conceptual models of ecological systems. In this study, we could observe only the modeling behaviors and outcomes; the learning goals and outcomes were unknown. We used machine learning techniques to analyze the modeling behaviors of 315 learners and 822 conceptual models they generated. We derive three main conclusions from the results. First, learners manifest three types of modeling behaviors: observation (simulation focused), construction (construction focused), and full exploration (model construction, evaluation and revision). Second, while observation was the most common behavior among all learners, construction without evaluation was more common for less engaged learners and full exploration occurred mostly for more engaged learners. Third, learners who explored the full cycle of model construction, evaluation and revision generated models of higher quality. These modeling behaviors provide insights into self-directed learning at large.

Explanation as Question Answering based on Design Knowledge

Dec 16, 2021

Abstract: Explanation of an AI agent requires knowledge of its design and operation. An open question is how to identify, access and use this design knowledge for generating explanations. Many AI agents used in practice, such as intelligent tutoring systems fielded in educational contexts, typically come with a User Guide that explains what the agent does, how it works and how to use the agent. However, few humans actually read the User Guide in detail. Instead, most users seek answers to their questions on demand. In this paper, we describe a question answering agent (AskJill) that uses the User Guide for an interactive learning environment (VERA) to automatically answer questions and thereby explains the domain, functioning, and operation of VERA. We present a preliminary assessment of AskJill in VERA.
