Han Song

ETA-INIT: Enhancing the Translation Accuracy for Stereo Visual-Inertial SLAM Initialization

May 23, 2024

Abstract: The initialization method of ORB-SLAM3, the state-of-the-art stereo visual-inertial SLAM framework, has limitations. Its success depends on the performance of the pure stereo SLAM system and rests on the underlying assumption that pure visual SLAM can accurately estimate the camera trajectory, which is essential for inertial parameter estimation. Meanwhile, Stereo-NEC, an improved initialization method for ORB-SLAM3, is time-consuming because it applies keypoint tracking to estimate the gyroscope bias with normal epipolar constraints. To address the limitations of previous methods, this paper proposes a method aimed at enhancing translation accuracy during the initialization stage. The core idea of our method is to refine the translation estimate independently with a 3 Degree-of-Freedom (DoF) Bundle Adjustment (BA) while the rotation estimate is held fixed, instead of using ORB-SLAM3's 6-DoF BA. Additionally, the rotation estimate is updated by considering IMU measurements and gyroscope bias, unlike ORB-SLAM3's rotation, which is obtained directly from stereo visual odometry and may yield inferior results in challenging scenarios. We also conduct extensive evaluations on the public EuRoC benchmark dataset, demonstrating that our method excels in accuracy.

BundledSLAM: An Accurate Visual SLAM System Using Multiple Cameras

Apr 01, 2024

Abstract: Multi-camera SLAM systems offer a plethora of advantages, primarily stemming from their capacity to amalgamate information from a broader field of view, thereby resulting in heightened robustness and improved localization accuracy. In this research, we present a significant extension and refinement of the state-of-the-art stereo SLAM system, known as ORB-SLAM2, with the objective of attaining even higher precision. To accomplish this objective, we commence by mapping measurements from all cameras onto a virtual camera termed BundledFrame. This virtual camera is meticulously engineered to seamlessly adapt to multi-camera configurations, facilitating the effective fusion of data captured from multiple cameras. Additionally, we harness extrinsic parameters in the bundle adjustment (BA) process to achieve precise trajectory estimation. Furthermore, we conduct an extensive analysis of the role of bundle adjustment (BA) in the context of multi-camera scenarios, delving into its impact on tracking, local mapping, and global optimization. Our experimental evaluation entails comprehensive comparisons between ground truth data and the state-of-the-art SLAM system. To rigorously assess the system's performance, we utilize the EuRoC datasets. The consistent results of our evaluations demonstrate the superior accuracy of our system in comparison to existing approaches.

Advancing the State of the Art in Open Domain Dialog Systems through the Alexa Prize

Dec 27, 2018

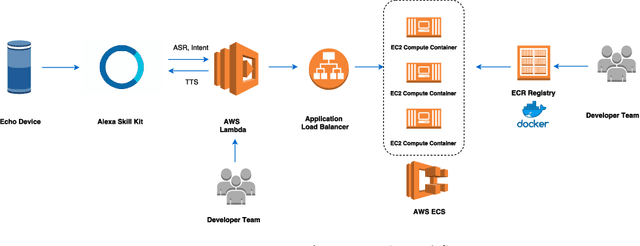

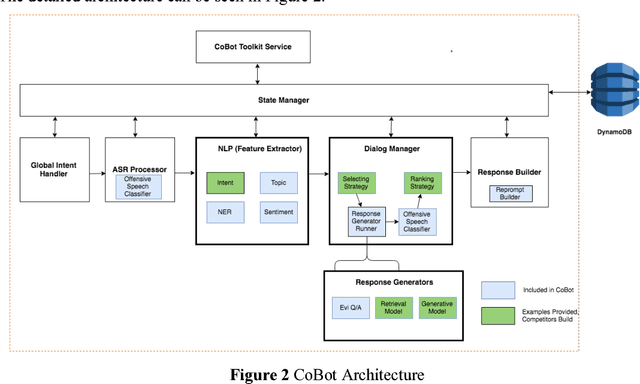

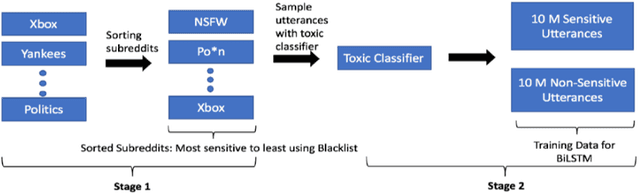

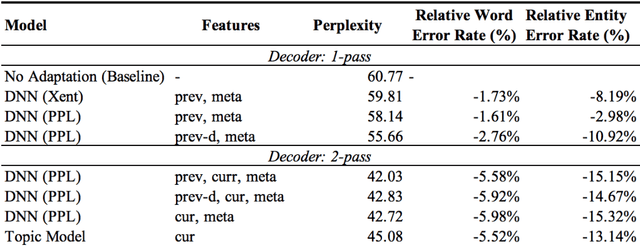

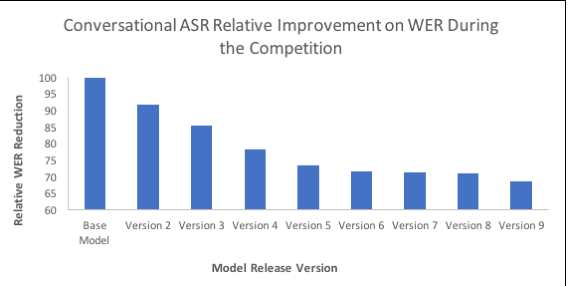

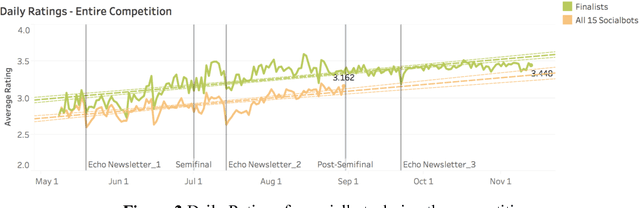

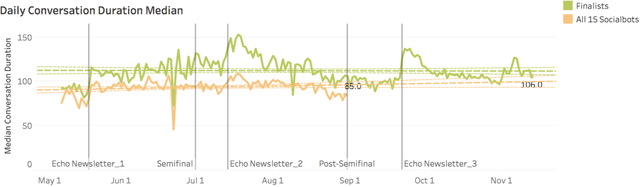

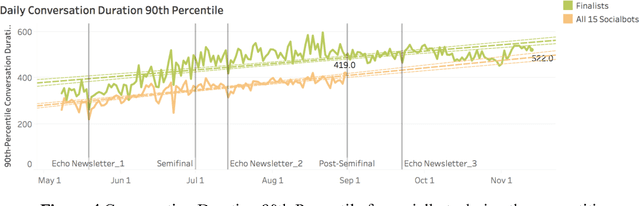

Abstract: Building open domain conversational systems that allow users to have engaging conversations on topics of their choice is a challenging task. Alexa Prize was launched in 2016 to tackle the problem of achieving natural, sustained, coherent and engaging open-domain dialogs. In the second iteration of the competition in 2018, university teams advanced the state of the art by using context in dialog models, leveraging knowledge graphs for language understanding, handling complex utterances, building statistical and hierarchical dialog managers, and leveraging model-driven signals from user responses. The 2018 competition also provided competitors with a suite of tools and models, including the CoBot (conversational bot) toolkit, topic and dialog act detection models, conversation evaluators, and a sensitive content detection model, so that the competing teams could focus on building knowledge-rich, coherent and engaging multi-turn dialog systems. This paper outlines the advances developed by the university teams as well as the Alexa Prize team to achieve the common goal of advancing the science of Conversational AI. We address several key open-ended problems such as conversational speech recognition, open domain natural language understanding, commonsense reasoning, statistical dialog management, and dialog evaluation. These collaborative efforts have improved the experience of Alexa users to an average rating of 3.61, a median duration of 2 mins 18 seconds, and an average of 14.6 turns, increases of 14%, 92%, and 54% respectively since the launch of the 2018 competition. For conversational speech recognition, we have improved our relative Word Error Rate by 55% and our relative Entity Error Rate by 34% since the launch of the Alexa Prize. Socialbots improved in quality significantly more rapidly in 2018, in part due to the release of the CoBot toolkit.

Reconstruction and Registration of Large-Scale Medical Scene Using Point Clouds Data from Different Modalities

Sep 05, 2018

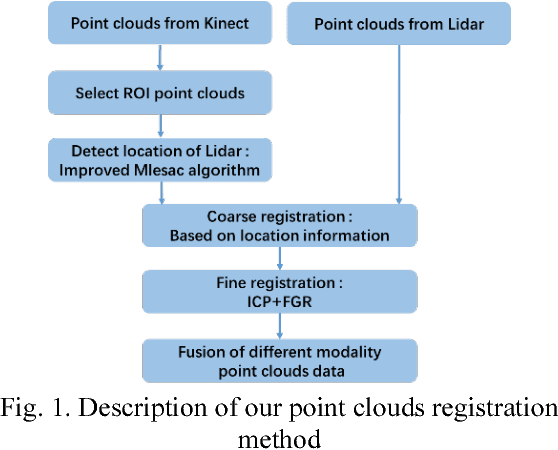

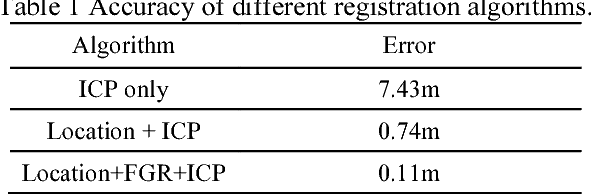

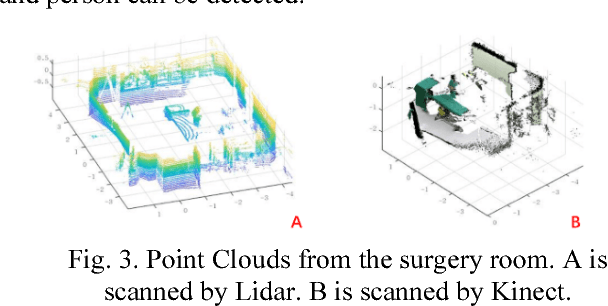

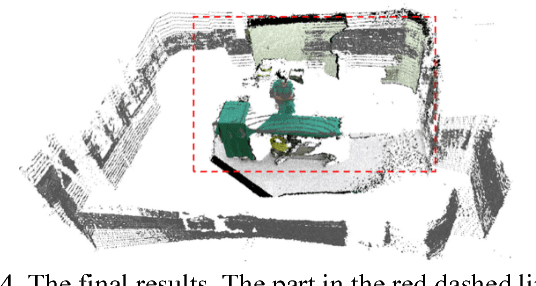

Abstract: Sensing the medical scene can help ensure safety during surgical operations. A monitoring platform that can obtain accurate location information about the operating room is therefore urgently needed. Compared to 2D camera images, 3D data contains more information about distance and direction, so 3D sensors are better suited to surgical scene monitoring. However, each 3D sensor has its own limitations. For example, Lidar (Light Detection and Ranging) can sense large-scale environments with high precision, but its point clouds or depth maps are very sparse. Commodity RGBD sensors such as Kinect can accurately capture denser data, but only within a small range of 0.5 to 4.5 m. A proper method for fusing data from different modalities that addresses these problems is therefore important. In this paper, we propose a method that fuses 3D data from different modalities to obtain a large-scale, dense point cloud. The key contributions of our work are as follows. First, we propose a 3D data collection system to reconstruct medical scenes. By fusing the Lidar and Kinect data, a large-scale medical scene with more details can be reconstructed. Second, we propose a location-based fast point cloud registration algorithm to deal with datasets from different modalities.

Conversational AI: The Science Behind the Alexa Prize

Jan 11, 2018

Abstract: Conversational agents are exploding in popularity. However, much work remains in the area of social conversation as well as free-form conversation over a broad range of domains and topics. To advance the state of the art in conversational AI, Amazon launched the Alexa Prize, a 2.5-million-dollar university competition where sixteen selected university teams were challenged to build conversational agents, known as socialbots, to converse coherently and engagingly with humans on popular topics such as Sports, Politics, Entertainment, Fashion and Technology for 20 minutes. The Alexa Prize offers the academic community a unique opportunity to perform research with a live system used by millions of users. The competition provided university teams with real user conversational data at scale, along with the user-provided ratings and feedback augmented with annotations by the Alexa team. This enabled teams to effectively iterate and make improvements throughout the competition while being evaluated in real-time through live user interactions. To build their socialbots, university teams combined state-of-the-art techniques with novel strategies in the areas of Natural Language Understanding, Context Modeling, Dialog Management, Response Generation, and Knowledge Acquisition. To support the efforts of participating teams, the Alexa Prize team made significant scientific and engineering investments to build and improve Conversational Speech Recognition, Topic Tracking, Dialog Evaluation, Voice User Experience, and tools for traffic management and scalability. This paper outlines the advances created by the university teams as well as the Alexa Prize team to achieve the common goal of solving the problem of Conversational AI.

* 18 pages, 5 figures, Alexa Prize Proceedings Paper (https://developer.amazon.com/alexaprize/proceedings), Alexa Prize University Competition to advance Conversational AI
