Hamid Soltanian-Zadeh

Improving Generalization in MRI-Based Deep Learning Models for Total Knee Replacement Prediction

Apr 29, 2025

Abstract:Knee osteoarthritis (KOA) is a common joint disease that causes pain and mobility issues. While MRI-based deep learning models have demonstrated superior performance in predicting total knee replacement (TKR) and disease progression, their generalizability remains challenging, particularly when applied to imaging data from different sources. In this study, we have shown that replacing batch normalization with instance normalization, using data augmentation, and applying contrastive loss improves model generalization in a baseline deep learning model for knee osteoarthritis (KOA) prediction. We trained and evaluated our model using MRI data from the Osteoarthritis Initiative (OAI) database, considering sagittal fat-suppressed intermediate-weighted turbo spin-echo (FS-IW-TSE) images as the source domain and sagittal fat-suppressed three-dimensional (3D) dual-echo in steady state (DESS) images as the target domain. The results demonstrate a statistically significant improvement in classification accuracy across both domains, with our approach outperforming the baseline model.
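To illustrate why swapping batch normalization for instance normalization can help across MRI sequences, here is a minimal sketch in plain Python. It is not the authors' model: the one-channel "images", the intensity shift, and the function names `instance_norm` and `batch_norm` are assumptions made for illustration. Instance normalization uses each sample's own statistics, so a global scale or offset in intensity largely cancels out. Statistics pooled over a mixed batch do not cancel it.

```python
import math

def instance_norm(sample, eps=1e-5):
    """Normalize one single-channel sample with its own mean and variance."""
    mean = sum(sample) / len(sample)
    var = sum((x - mean) ** 2 for x in sample) / len(sample)
    return [(x - mean) / math.sqrt(var + eps) for x in sample]

def batch_norm(batch, eps=1e-5):
    """Normalize every sample with statistics pooled over the whole batch."""
    values = [x for sample in batch for x in sample]
    mean = sum(values) / len(values)
    var = sum((x - mean) ** 2 for x in values) / len(values)
    return [[(x - mean) / math.sqrt(var + eps) for x in sample] for sample in batch]

# Hypothetical intensities: the second sample is the first one with a
# different scale and offset, standing in for a different MRI sequence.
source_like = [1.0, 2.0, 3.0, 4.0]
target_like = [10.0 * x + 50.0 for x in source_like]

per_instance = (instance_norm(source_like), instance_norm(target_like))
pooled = batch_norm([source_like, target_like])

print(per_instance[0][0], per_instance[1][0])  # nearly identical values
print(pooled[0][0], pooled[1][0])              # clearly different values
```

In a real network the normalization is applied per channel over the spatial dimensions and is followed by learned scale and shift parameters. The sketch leaves those out to keep the contrast between the two schemes visible.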

QuatE-D: A Distance-Based Quaternion Model for Knowledge Graph Embedding

Apr 18, 2025

Abstract:Knowledge graph embedding (KGE) methods aim to represent entities and relations in a continuous space while preserving their structural and semantic properties. Quaternion-based KGEs have demonstrated strong potential in capturing complex relational patterns. In this work, we propose QuatE-D, a novel quaternion-based model that employs a distance-based scoring function instead of traditional inner-product approaches. By leveraging Euclidean distance, QuatE-D enhances interpretability and provides a more flexible representation of relational structures. Experimental results demonstrate that QuatE-D achieves competitive performance while maintaining an efficient parameterization, particularly excelling in Mean Rank reduction. These findings highlight the effectiveness of distance-based scoring in quaternion embeddings, offering a promising direction for knowledge graph completion.
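The abstract does not give the QuatE-D scoring function, so the following is only a sketch of the general idea. It assumes a QuatE-style rotation of the head by a unit relation quaternion (Hamilton product), then contrasts an inner-product score with a negative Euclidean distance score. The single-quaternion embeddings and the function names are hypothetical; real models use vectors of quaternions per entity.

```python
import math

def hamilton(p, q):
    """Hamilton product of two quaternions given as (a, b, c, d)."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )

def normalize(q):
    """Scale a quaternion to unit norm so it acts as a pure rotation."""
    norm = math.sqrt(sum(x * x for x in q))
    return tuple(x / norm for x in q)

def inner_product_score(h, r, t):
    """Inner-product style score: higher means more plausible."""
    rotated = hamilton(h, normalize(r))
    return sum(x * y for x, y in zip(rotated, t))

def distance_score(h, r, t):
    """Assumed distance-based variant: negative Euclidean distance."""
    rotated = hamilton(h, normalize(r))
    return -math.dist(rotated, t)

# Toy triple: head i, relation 2j (normalized to j), so the rotated head is k.
head = (0, 1, 0, 0)
relation = (0, 0, 2, 0)
true_tail = (0, 0, 0, 1)
false_tail = (1, 0, 0, 0)

print(distance_score(head, relation, true_tail))   # distance zero for the matching tail
print(distance_score(head, relation, false_tail))  # negative for the mismatched tail
```

A distance score has a direct geometric reading: a score of zero means the rotated head lands exactly on the tail, which is one way to understand the interpretability claim in the abstract.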

Single-Layer Learnable Activation for Implicit Neural Representation (SL$^{2}$A-INR)

Sep 18, 2024

Abstract:Implicit Neural Representation (INR), leveraging a neural network to transform coordinate input into corresponding attributes, has recently driven significant advances in several vision-related domains. However, the performance of INR is heavily influenced by the choice of the nonlinear activation function used in its multilayer perceptron (MLP) architecture. Multiple nonlinearities have been investigated; yet, current INRs face limitations in capturing high-frequency components, handling diverse signal types, and solving inverse problems. We have identified that these problems can be greatly alleviated by introducing a paradigm shift in INRs. We find that an architecture with learnable activations in initial layers can represent fine details in the underlying signals. Specifically, we propose SL$^{2}$A-INR, a hybrid network for INR with a single-layer learnable activation function, boosting the effectiveness of traditional ReLU-based MLPs. Our method achieves superior performance across diverse tasks, including image representation, 3D shape reconstruction, inpainting, single image super-resolution, CT reconstruction, and novel view synthesis. Through comprehensive experiments, SL$^{2}$A-INR sets new benchmarks in accuracy, quality, and convergence rates for INR.

Multi-Scale Convolutional Neural Network for Automated AMD Classification using Retinal OCT Images

Oct 06, 2021

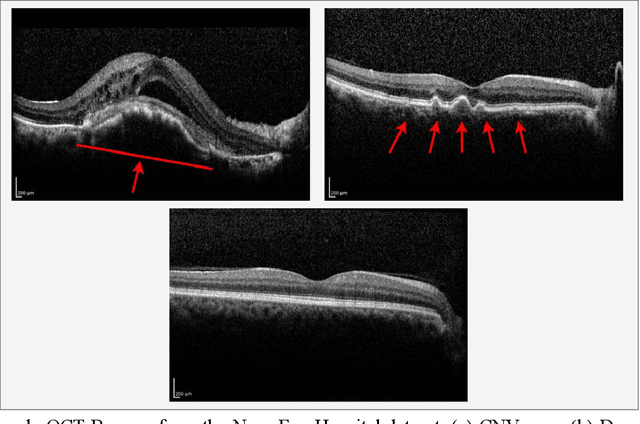

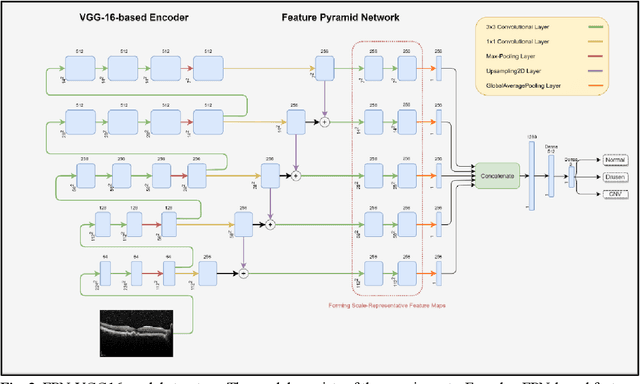

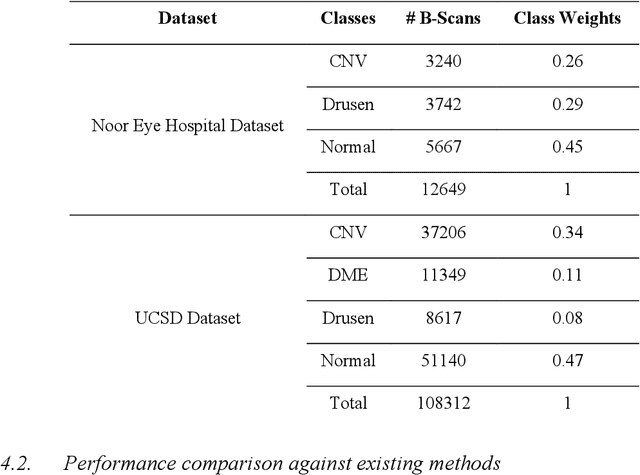

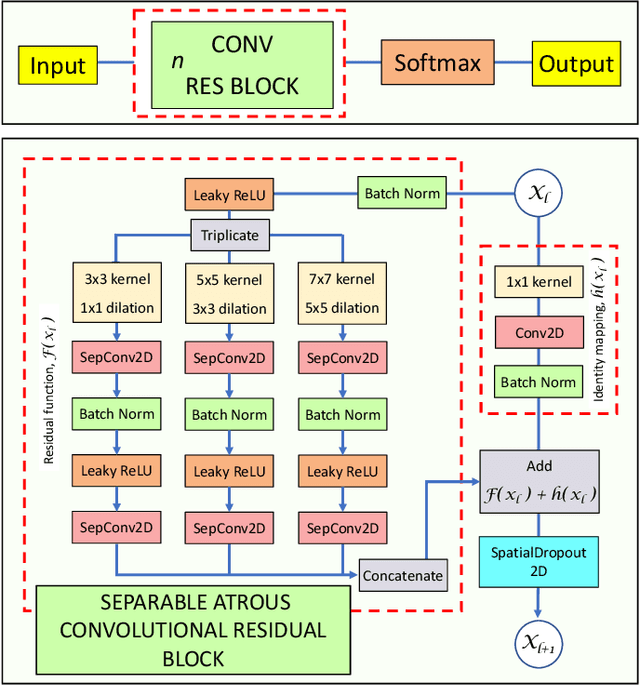

Abstract:Age-related macular degeneration (AMD) is the most common cause of blindness in developed countries, especially in people over 60 years of age. The workload of specialists and the healthcare system in this field has increased in recent years mainly due to three reasons: 1) increased use of the retinal optical coherence tomography (OCT) imaging technique, 2) prevalence of population aging worldwide, and 3) the chronic nature of AMD. Recent developments in deep learning have provided a unique opportunity for the development of fully automated diagnosis frameworks. Considering the presence of AMD-related retinal pathologies in varying sizes in OCT images, our objective was to propose a multi-scale convolutional neural network (CNN) capable of distinguishing pathologies using receptive fields with various sizes. The multi-scale CNN was designed based on the feature pyramid network (FPN) structure and was used to diagnose normal and two common clinical characteristics of dry and wet AMD, namely drusen and choroidal neovascularization (CNV). The proposed method was evaluated on a national dataset gathered at Noor Eye Hospital (NEH), consisting of 12649 retinal OCT images from 441 patients, and a UCSD public dataset, consisting of 108312 OCT images. The results show that the multi-scale FPN-based structure was able to improve the base model's overall accuracy by 0.4% to 3.3% for different backbone models. In addition, gradual learning improved the performance in two phases from 87.2% ± 2.5% to 93.4% ± 1.4% by pre-training the base model on ImageNet weights in the first phase and fine-tuning the resulting model on a dataset of OCT images in the second phase. The promising quantitative and qualitative results of the proposed architecture prove the suitability of the proposed method to be used as a screening tool in healthcare centers assisting ophthalmologists in making better diagnostic decisions.

CXR-Net: An Artificial Intelligence Pipeline for Quick Covid-19 Screening of Chest X-Rays

Feb 26, 2021

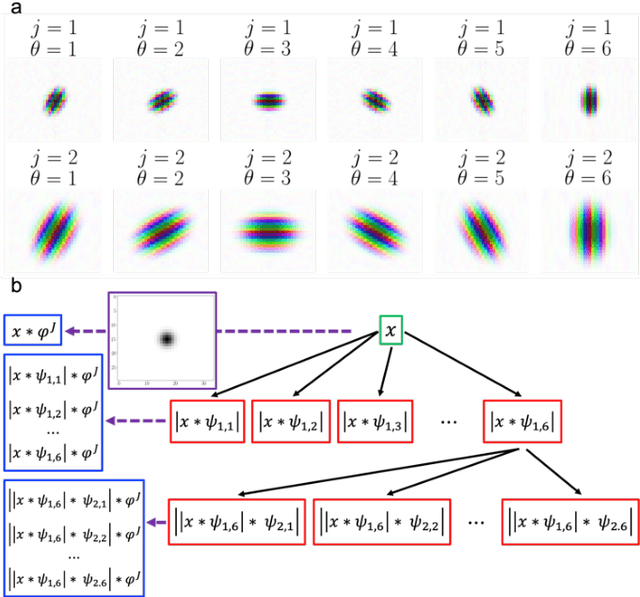

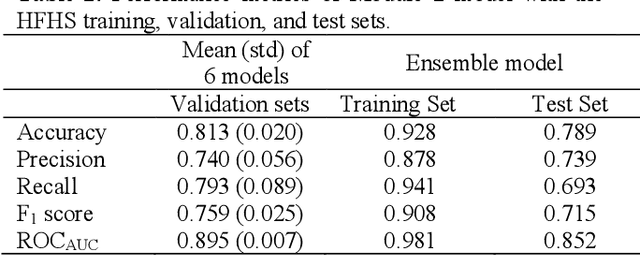

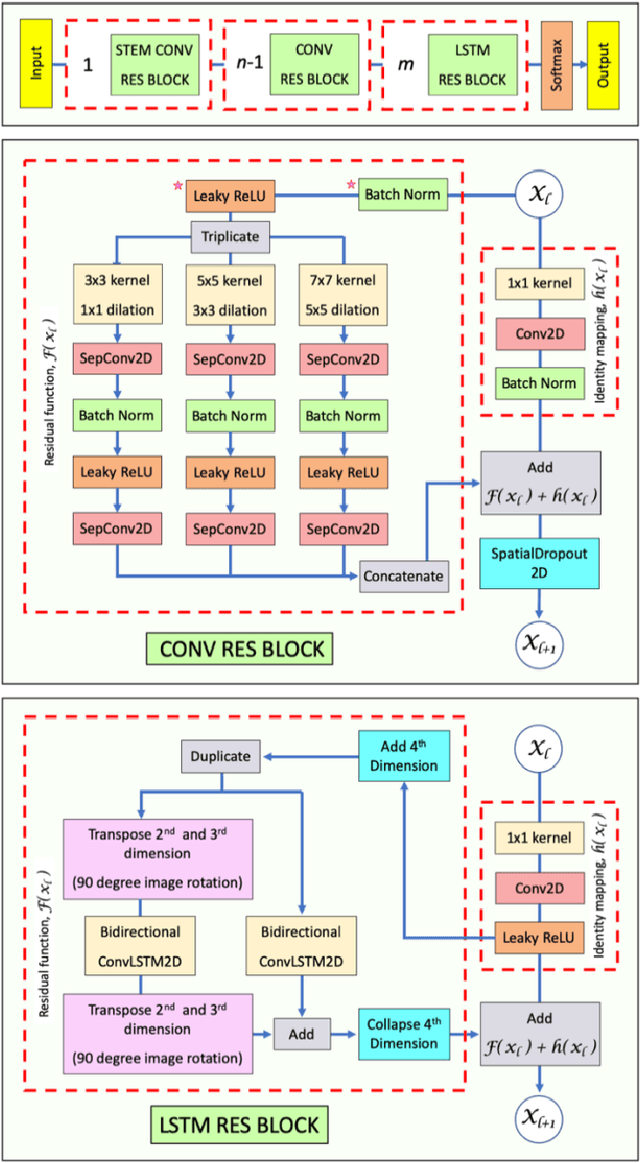

Abstract:CXR-Net is a two-module Artificial Intelligence pipeline for the quick detection of SARS-CoV-2 from chest X-rays (CXRs). Module 1 was trained on a public dataset of 6395 CXRs with radiologist-annotated lung contours to generate masks of the lungs that overlap the heart and large vasa. Module 2 is a hybrid convnet in which the first convolutional layer with learned coefficients is replaced by a layer with fixed coefficients provided by the Wavelet Scattering Transform (WST). Module 2 takes as inputs the patients' CXRs and corresponding lung masks calculated by Module 1, and produces as outputs a class assignment (Covid vs. non-Covid) and high-resolution heat maps that identify the SARS-associated lung regions. Module 2 was trained on a dataset of CXRs from non-Covid and RT-PCR-confirmed Covid patients acquired at the Henry Ford Health System (HFHS) Hospital in Detroit. All non-Covid CXRs were from the pre-Covid era (2018-2019), and included images from both normal lungs and lungs affected by non-Covid pathologies. Training and test sets consisted of 2265 CXRs (1417 Covid negative, 848 Covid positive), and 1532 CXRs (945 Covid negative, 587 Covid positive), respectively. Six distinct cross-validation models, each trained on 1887 images and validated against 378 images, were combined into an ensemble model that was used to classify the CXR images of the test set with resulting Accuracy = 0.789, Precision = 0.739, Recall = 0.693, F1 score = 0.715, ROC(AUC) = 0.852.
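As a quick consistency check on the figures quoted in this abstract, the short script below recomputes the F1 score from the reported precision and recall and confirms that the set sizes add up. The variable names are chosen here for illustration, and only numbers stated in the abstract are used.

```python
# Reported test-set metrics from the abstract.
precision, recall = 0.739, 0.693

# F1 is the harmonic mean of precision and recall.
f1 = 2 * precision * recall / (precision + recall)

# Reported class counts and cross-validation split sizes.
train_total = 1417 + 848   # Covid negative + Covid positive (training set)
test_total = 945 + 587     # Covid negative + Covid positive (test set)
fold_total = 1887 + 378    # training + validation images per fold

print(round(f1, 3), train_total, test_total, fold_total)
# prints: 0.715 2265 1532 2265
```

The recomputed F1 matches the reported 0.715, and each fold's training and validation images together account for the full 2265-image training set.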

Epistocracy Algorithm: A Novel Hyper-heuristic Optimization Strategy for Solving Complex Optimization Problems

Jan 30, 2021

Abstract:This paper proposes a novel evolutionary algorithm called Epistocracy which incorporates human socio-political behavior and intelligence to solve complex optimization problems. The inspiration of the Epistocracy algorithm originates from a political regime where educated people have more voting power than the uneducated or less educated. The algorithm is a self-adaptive, multi-population optimizer in which the evolution process takes place in parallel for many populations led by a council of leaders. To avoid stagnation in poor local optima and to prevent premature convergence, the algorithm employs multiple mechanisms such as dynamic and adaptive leadership based on gravitational force, dynamic population allocation and diversification, variance-based step-size determination, and regression-based leadership adjustment. The algorithm uses a stratified sampling method called Latin Hypercube Sampling (LHS) to distribute the initial population more evenly for exploration of the search space and exploitation of the accumulated knowledge. To investigate the performance and evaluate the reliability of the algorithm, we have used a set of multimodal benchmark functions, and then applied the algorithm to the MNIST dataset to further verify the accuracy, scalability, and robustness of the algorithm. Experimental results show that the Epistocracy algorithm outperforms the tested state-of-the-art evolutionary and swarm intelligence algorithms in terms of performance, precision, and convergence.
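The Latin Hypercube Sampling step mentioned in this abstract can be sketched as follows. This is a generic LHS routine on the unit hypercube, not the Epistocracy implementation; the function name `latin_hypercube`, the population size, and the seed are assumptions for illustration. Each dimension is cut into as many equal-width strata as there are samples, one point is drawn per stratum, and the strata are shuffled independently per dimension.

```python
import random

def latin_hypercube(n_samples, n_dims, rng):
    """Draw n_samples points in [0, 1)^n_dims with one point per stratum per axis."""
    columns = []
    for _ in range(n_dims):
        # One uniform draw inside each of the n_samples equal-width strata.
        strata = [(i + rng.random()) / n_samples for i in range(n_samples)]
        # Shuffle so the pairing of strata across dimensions is random.
        rng.shuffle(strata)
        columns.append(strata)
    # Transpose the per-dimension columns into a list of points.
    return [tuple(column[i] for column in columns) for i in range(n_samples)]

# Hypothetical initial population: 5 candidates in a 2-D search space.
points = latin_hypercube(5, 2, random.Random(0))
for point in points:
    print(point)
```

Compared with plain uniform sampling, every axis is covered evenly by construction, which is the property the abstract relies on when it describes a more even initial population.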

Lung Segmentation in Chest X-rays with Res-CR-Net

Nov 14, 2020

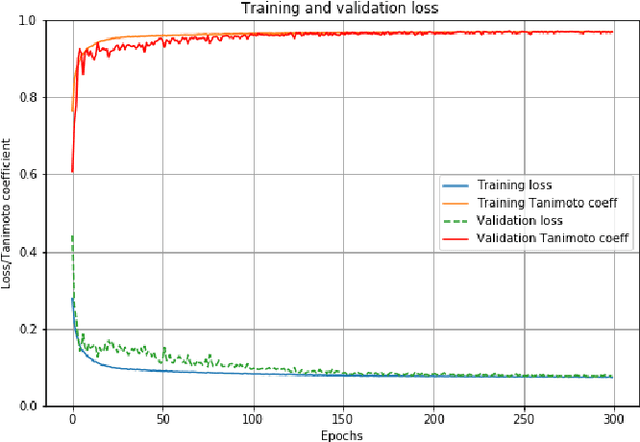

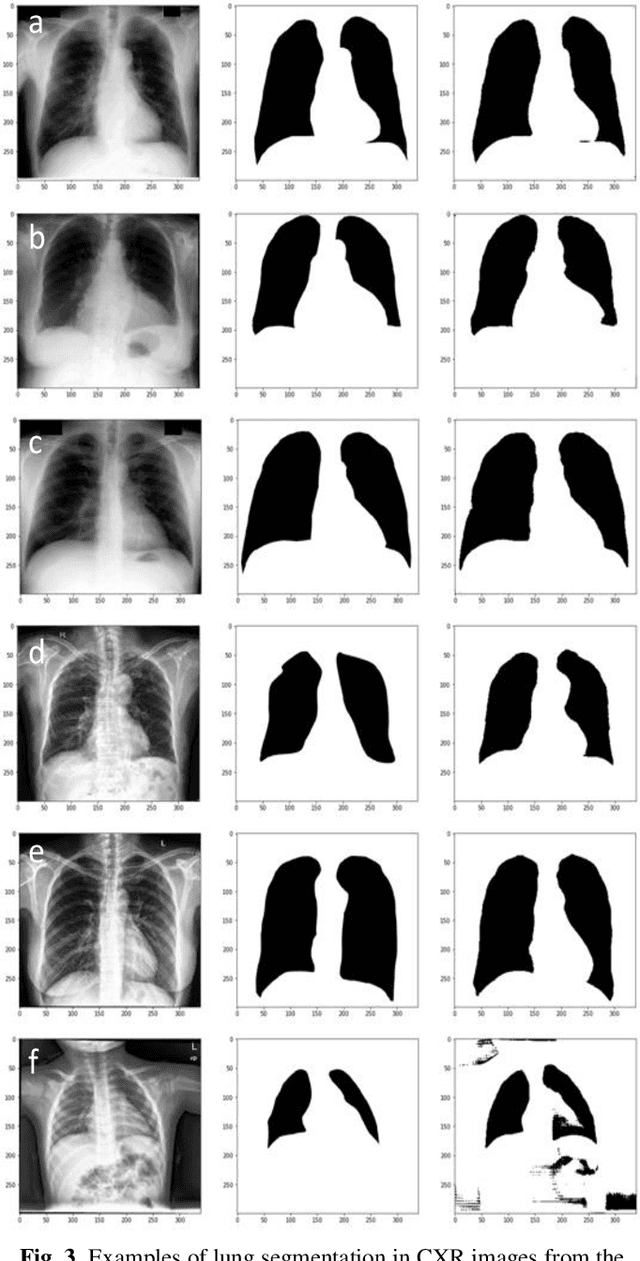

Abstract:Deep Neural Networks (DNN) are widely used to carry out segmentation tasks in biomedical images. Most DNNs developed for this purpose are based on some variation of the encoder-decoder U-Net architecture. Here we show that Res-CR-Net, a new type of fully convolutional neural network, which was originally developed for the semantic segmentation of microscopy images, and which does not adopt a U-Net architecture, is very effective at segmenting the lung fields in chest X-rays from either healthy patients or patients with a variety of lung pathologies.

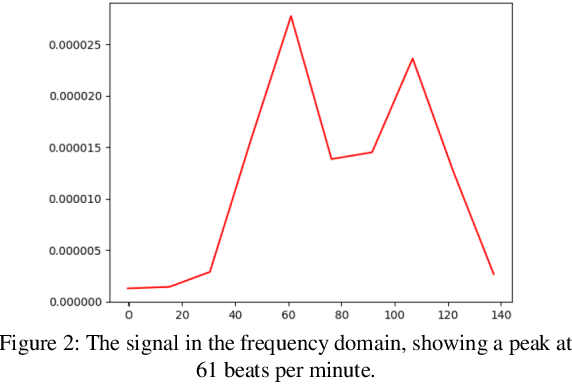

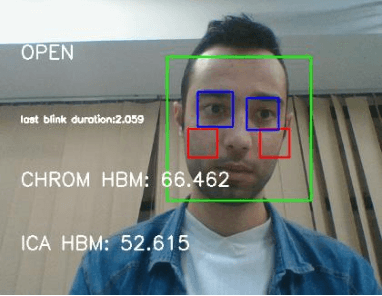

Using image-extracted features to determine heart rate and blink duration for driver sleepiness detection

Dec 08, 2019

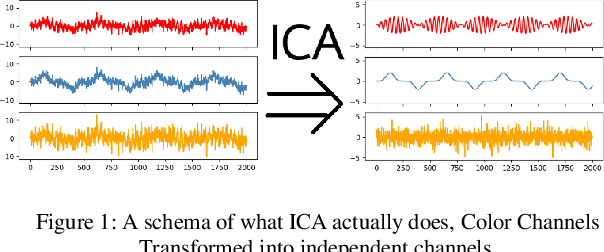

Abstract:Heart rate and blink duration are two vital physiological signals which give information about cardiac activity and consciousness. Monitoring these two signals is crucial for various applications such as driver drowsiness detection. As conventional systems pose several problems for continuous, long-term monitoring, a remote blink and ECG monitoring system can be used as an alternative. For estimating the blink duration, two strategies are used. In the first approach, pictures of open and closed eyes are fed into an Artificial Neural Network (ANN) to decide whether the eyes are open or closed. In the second approach, they are classified and labeled using Linear Discriminant Analysis (LDA). The labeled images are then used to determine the blink duration. For heart rate variability, two strategies are used to evaluate the passing blood volume: Independent Component Analysis (ICA) and a chrominance-based method. Eye recognition yielded 78-92% accuracy in classifying open/closed eyes with ANN and 71-91% accuracy with LDA. Heart rate evaluations had a mean loss of around 16 Beats Per Minute (BPM) for the ICA strategy and 13 BPM for the chrominance-based technique.

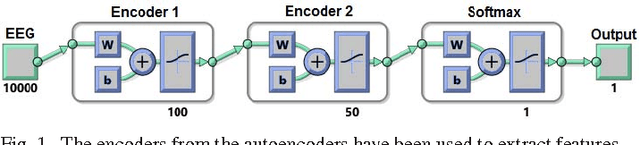

Cloud-based Deep Learning of Big EEG Data for Epileptic Seizure Prediction

Feb 17, 2017

Abstract:Developing a Brain-Computer Interface (BCI) for seizure prediction can help epileptic patients have a better quality of life. However, there are many difficulties and challenges in developing such a system as a real-life support for patients. Because of the nonstationary nature of EEG signals, normal and seizure patterns vary across different patients. Thus, finding a group of manually extracted features for the prediction task is not practical. Moreover, when using implanted electrodes for brain recording, massive amounts of data are produced. This big data calls for safe storage and high computational resources for real-time processing. To address these challenges, a cloud-based BCI system for the analysis of this big EEG data is presented. First, a dimensionality-reduction technique is developed to increase classification accuracy as well as to decrease the communication bandwidth and computation time. Second, following a deep-learning approach, a stacked autoencoder is trained in two steps for unsupervised feature extraction and classification. Third, a cloud-computing solution is proposed for real-time analysis of big EEG data. The results on a benchmark clinical dataset illustrate the superiority of the proposed patient-specific BCI as an alternative method and its expected usefulness in real-life support of epilepsy patients.

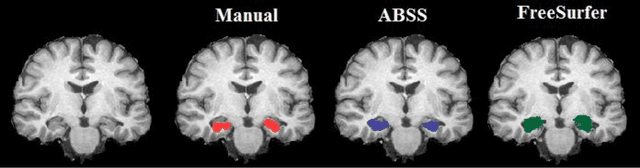

Automatic and Manual Segmentation of Hippocampus in Epileptic Patients MRI

Oct 26, 2016

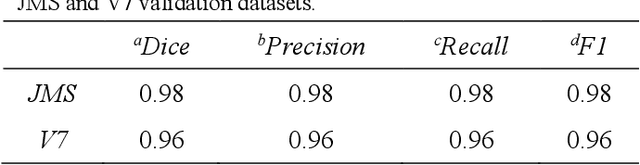

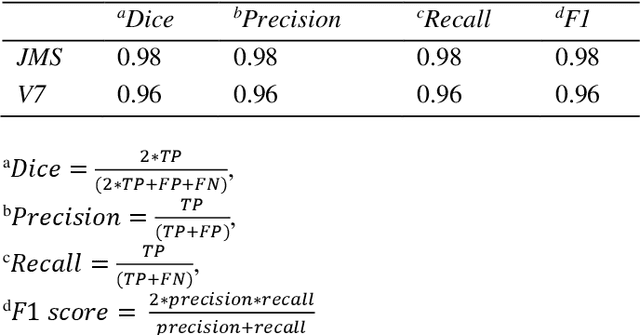

Abstract:The hippocampus is a seminal structure in the most common surgically-treated form of epilepsy. Accurate segmentation of the hippocampus aids in establishing asymmetry regarding size and signal characteristics in order to disclose the likely site of epileptogenicity. With sufficient refinement, it may ultimately aid in the avoidance of invasive monitoring with its expense and risk for the patient. To this end, a reliable and consistent method for segmentation of the hippocampus from magnetic resonance imaging (MRI) is needed. In this work, we present a systematic and statistical analysis approach for evaluation of automated segmentation methods in order to establish one that reliably approximates the results achieved by manual tracing of the hippocampus.
