Haiqing Hu

LREF: A Novel LLM-based Relevance Framework for E-commerce

Mar 12, 2025

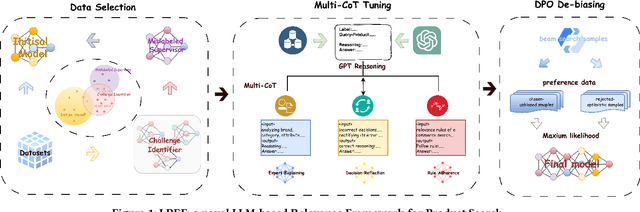

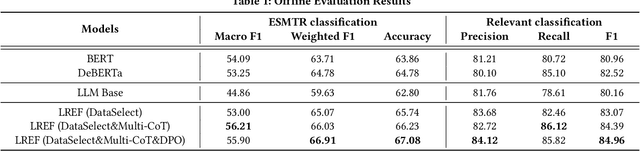

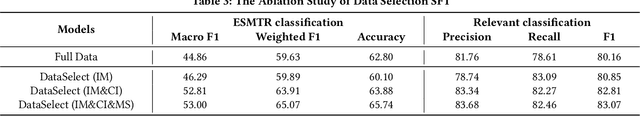

Abstract:Query and product relevance prediction is a critical component for ensuring a smooth user experience in e-commerce search. Traditional studies mainly focus on BERT-based models to assess the semantic relevance between queries and products. However, the discriminative paradigm and limited knowledge capacity of these approaches restrict their ability to comprehend the relevance between queries and products fully. With the rapid advancement of Large Language Models (LLMs), recent research has begun to explore their application to industrial search systems, as LLMs provide extensive world knowledge and flexible optimization for reasoning processes. Nonetheless, directly leveraging LLMs for relevance prediction tasks introduces new challenges, including a high demand for data quality, the necessity for meticulous optimization of reasoning processes, and an optimistic bias that can result in over-recall. To overcome the above problems, this paper proposes a novel framework called the LLM-based RElevance Framework (LREF) aimed at enhancing e-commerce search relevance. The framework comprises three main stages: supervised fine-tuning (SFT) with Data Selection, Multiple Chain of Thought (Multi-CoT) tuning, and Direct Preference Optimization (DPO) for de-biasing. We evaluate the performance of the framework through a series of offline experiments on large-scale real-world datasets, as well as online A/B testing. The results indicate significant improvements in both offline and online metrics. Ultimately, the model was deployed in a well-known e-commerce application, yielding substantial commercial benefits.

A Multi-Granularity Matching Attention Network for Query Intent Classification in E-commerce Retrieval

Mar 28, 2023

Abstract:Query intent classification, which aims at assisting customers to find desired products, has become an essential component of the e-commerce search. Existing query intent classification models either design more exquisite models to enhance the representation learning of queries or explore label-graph and multi-task to facilitate models to learn external information. However, these models cannot capture multi-granularity matching features from queries and categories, which makes them hard to mitigate the gap in the expression between informal queries and categories. This paper proposes a Multi-granularity Matching Attention Network (MMAN), which contains three modules: a self-matching module, a char-level matching module, and a semantic-level matching module to comprehensively extract features from the query and a query-category interaction matrix. In this way, the model can eliminate the difference in expression between queries and categories for query intent classification. We conduct extensive offline and online A/B experiments, and the results show that the MMAN significantly outperforms the strong baselines, which shows the superiority and effectiveness of MMAN. MMAN has been deployed in production and brings great commercial value for our company.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge