Hai-Bao Chen

OneIG-Bench: Omni-dimensional Nuanced Evaluation for Image Generation

Jun 09, 2025

Abstract:Text-to-image (T2I) models have garnered significant attention for generating high-quality images aligned with text prompts. However, rapid T2I model advancements reveal limitations in early benchmarks, lacking comprehensive evaluations, for example, the evaluation on reasoning, text rendering and style. Notably, recent state-of-the-art models, with their rich knowledge modeling capabilities, show promising results on the image generation problems requiring strong reasoning ability, yet existing evaluation systems have not adequately addressed this frontier. To systematically address these gaps, we introduce OneIG-Bench, a meticulously designed comprehensive benchmark framework for fine-grained evaluation of T2I models across multiple dimensions, including prompt-image alignment, text rendering precision, reasoning-generated content, stylization, and diversity. By structuring the evaluation, this benchmark enables in-depth analysis of model performance, helping researchers and practitioners pinpoint strengths and bottlenecks in the full pipeline of image generation. Specifically, OneIG-Bench enables flexible evaluation by allowing users to focus on a particular evaluation subset. Instead of generating images for the entire set of prompts, users can generate images only for the prompts associated with the selected dimension and complete the corresponding evaluation accordingly. Our codebase and dataset are now publicly available to facilitate reproducible evaluation studies and cross-model comparisons within the T2I research community.

Multilayer Perceptron Based Stress Evolution Analysis under DC Current Stressing for Multi-segment Wires

May 17, 2022

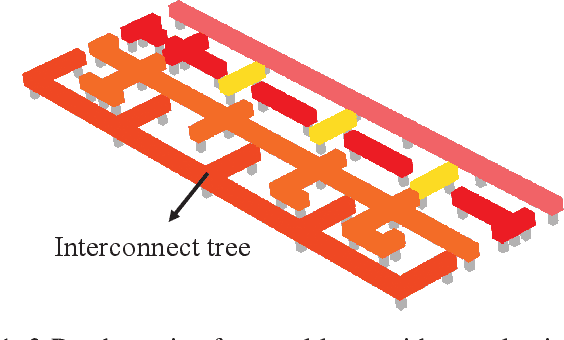

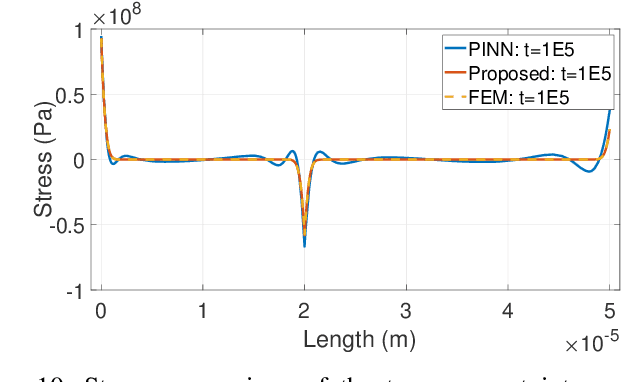

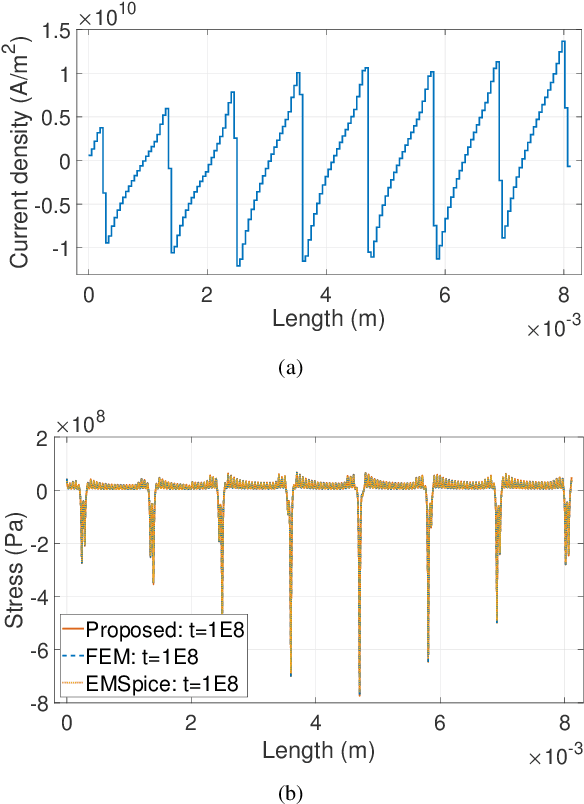

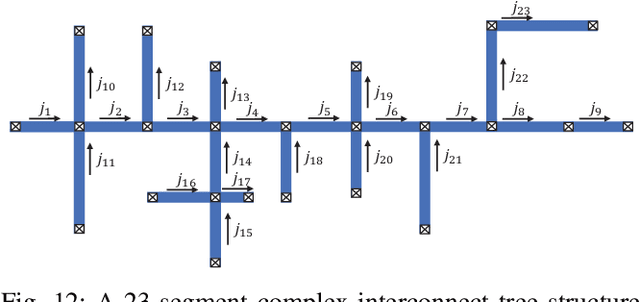

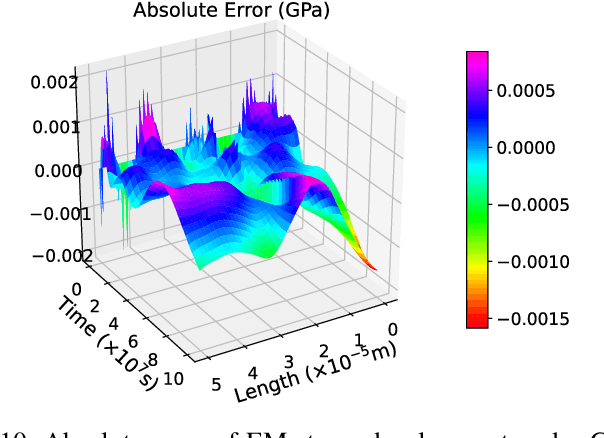

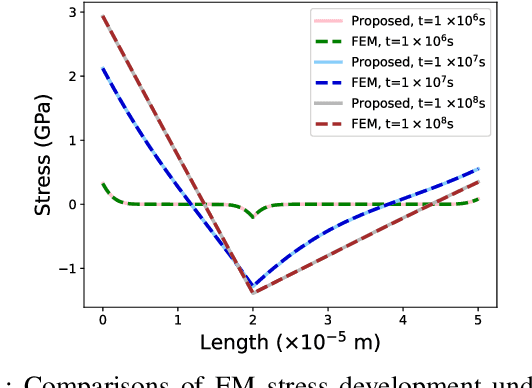

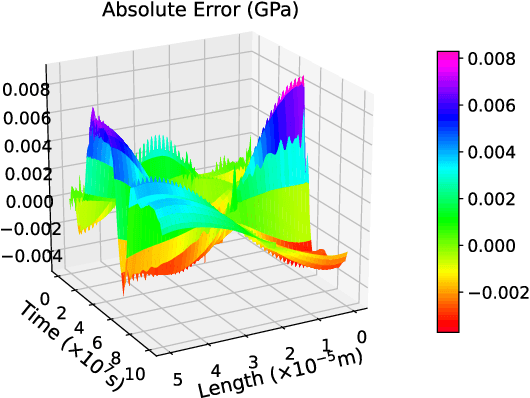

Abstract:Electromigration (EM) is one of the major concerns in the reliability analysis of very large scale integration (VLSI) systems due to the continuous technology scaling. Accurately predicting the time-to-failure of integrated circuits (IC) becomes increasingly important for modern IC design. However, traditional methods are often not sufficiently accurate, leading to undesirable over-design especially in advanced technology nodes. In this paper, we propose an approach using multilayer perceptrons (MLP) to compute stress evolution in the interconnect trees during the void nucleation phase. The availability of a customized trial function for neural network training holds the promise of finding dynamic mesh-free stress evolution on complex interconnect trees under time-varying temperatures. Specifically, we formulate a new objective function considering the EM-induced coupled partial differential equations (PDEs), boundary conditions (BCs), and initial conditions to enforce the physics-based constraints in the spatial-temporal domain. The proposed model avoids meshing and reduces temporal iterations compared with conventional numerical approaches like FEM. Numerical results confirm its advantages on accuracy and computational performance.

A Space-Time Neural Network for Analysis of Stress Evolution under DC Current Stressing

Mar 29, 2022

Abstract:The electromigration (EM)-induced reliability issues in very large scale integration (VLSI) circuits have attracted increased attention due to the continuous technology scaling. Traditional EM models often lead to overly pessimistic prediction incompatible with the shrinking design margin in future technology nodes. Motivated by the latest success of neural networks in solving differential equations in physical problems, we propose a novel mesh-free model to compute EM-induced stress evolution in VLSI circuits. The model utilizes a specifically crafted space-time physics-informed neural network (STPINN) as the solver for EM analysis. By coupling the physics-based EM analysis with dynamic temperature incorporating Joule heating and via effect, we can observe stress evolution along multi-segment interconnect trees under constant, time-dependent and space-time-dependent temperature during the void nucleation phase. The proposed STPINN method obviates the time discretization and meshing required in conventional numerical stress evolution analysis and offers significant computational savings. Numerical comparison with competing schemes demonstrates a 2x ~ 52x speedup with a satisfactory accuracy.

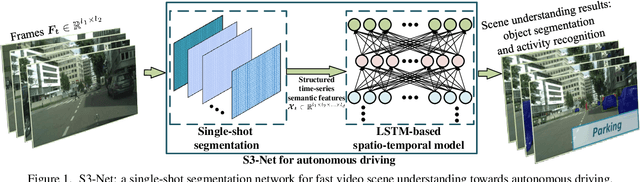

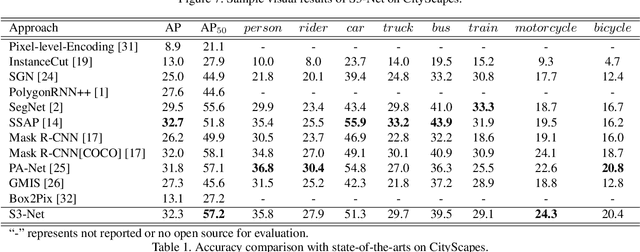

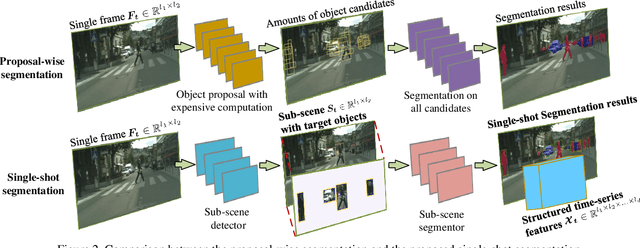

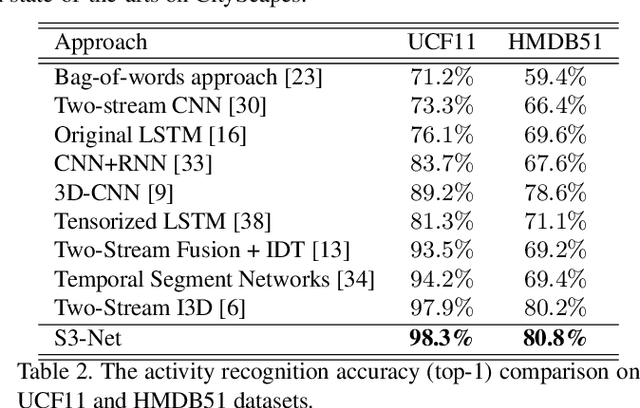

S3-Net: A Fast and Lightweight Video Scene Understanding Network by Single-shot Segmentation

Nov 04, 2020

Abstract:Real-time understanding in video is crucial in various AI applications such as autonomous driving. This work presents a fast single-shot segmentation strategy for video scene understanding. The proposed net, called S3-Net, quickly locates and segments target sub-scenes, meanwhile extracts structured time-series semantic features as inputs to an LSTM-based spatio-temporal model. Utilizing tensorization and quantization techniques, S3-Net is intended to be lightweight for edge computing. Experiments using CityScapes, UCF11, HMDB51 and MOMENTS datasets demonstrate that the proposed S3-Net achieves an accuracy improvement of 8.1% versus the 3D-CNN based approach on UCF11, a storage reduction of 6.9x and an inference speed of 22.8 FPS on CityScapes with a GTX1080Ti GPU.

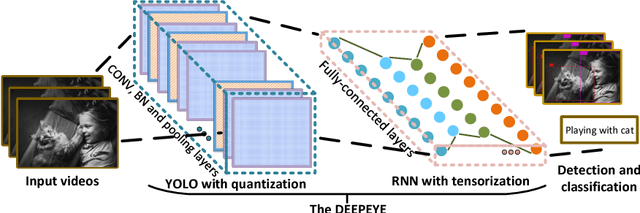

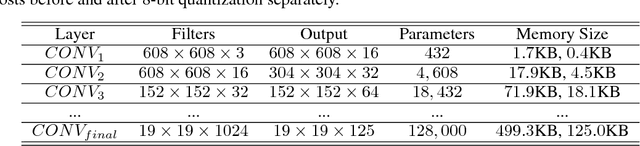

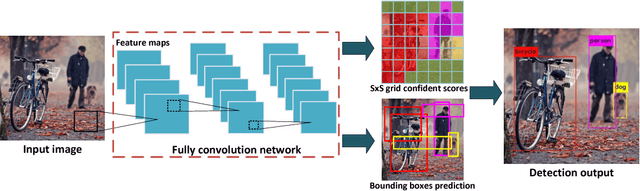

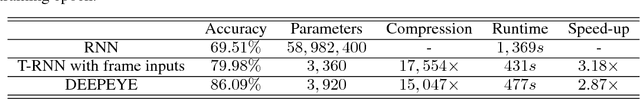

DEEPEYE: A Compact and Accurate Video Comprehension at Terminal Devices Compressed with Quantization and Tensorization

Jun 07, 2018

Abstract:As it requires a huge number of parameters when exposed to high dimensional inputs in video detection and classification, there is a grand challenge to develop a compact yet accurate video comprehension at terminal devices. Current works focus on optimizations of video detection and classification in a separated fashion. In this paper, we introduce a video comprehension (object detection and action recognition) system for terminal devices, namely DEEPEYE. Based on You Only Look Once (YOLO), we have developed an 8-bit quantization method when training YOLO; and also developed a tensorized-compression method of Recurrent Neural Network (RNN) composed of features extracted from YOLO. The developed quantization and tensorization can significantly compress the original network model yet with maintained accuracy. Using the challenging video datasets: MOMENTS and UCF11 as benchmarks, the results show that the proposed DEEPEYE achieves 3.994x model compression rate with only 0.47% mAP decreased; and 15,047x parameter reduction and 2.87x speed-up with 16.58% accuracy improvement.
