Sheldon X.-D. Tan

EM-GAN: Fast Stress Analysis for Multi-Segment Interconnect Using Generative Adversarial Networks

Apr 27, 2020

Abstract: In this paper, we propose a fast transient hydrostatic stress analysis method for electromigration (EM) failure assessment of multi-segment interconnects using generative adversarial networks (GANs). Our work leverages the image synthesis capability of GAN-based generative deep neural networks. The stress evaluation of multi-segment interconnects, modeled by partial differential equations, can be viewed as a time-varying 2D image-to-image problem, where the input is the multi-segment interconnect topology with current densities and the output is the EM stress distribution in those wire segments at a given aging time. Based on this observation, we train a conditional GAN model using images of many self-generated multi-segment wires, with wire current densities and aging time as conditions, against COMSOL simulation results. Different GAN hyperparameters were studied and compared. The proposed algorithm, called EM-GAN, can quickly give an accurate stress distribution of a general multi-segment wire tree for a given aging time, which is important for fast full-chip EM failure assessment. Our experimental results show that EM-GAN has a 6.6% average error compared to COMSOL simulation results, with orders-of-magnitude speedup. It also delivers an 8.3x speedup over a state-of-the-art analytic EM analysis solver.
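To make the image-to-image framing concrete, the following is a minimal sketch of how a wire topology with current densities and an aging time might be encoded as a multi-channel input image. The abstract does not specify the actual encoding, so everything here is an assumption for illustration: the `Segment` tuple layout, the function names `rasterize_wires` and `build_condition`, the restriction to axis-aligned segments, and the choice of a constant plane for normalized aging time.

```python
from typing import List, Tuple

# Hypothetical layout: (x0, y0, x1, y1, current_density) on a pixel grid.
Segment = Tuple[int, int, int, int, float]


def rasterize_wires(segments: List[Segment], size: int) -> List[List[float]]:
    """Paint each segment's current density onto a size x size grid.

    Pixels not covered by any wire stay at 0.0. Where segments share a
    junction pixel, the later segment overwrites the earlier one.
    """
    image = [[0.0] * size for _ in range(size)]
    for x0, y0, x1, y1, density in segments:
        if x0 != x1 and y0 != y1:
            raise ValueError("only horizontal or vertical segments are supported")
        for y in range(min(y0, y1), max(y0, y1) + 1):
            for x in range(min(x0, x1), max(x0, x1) + 1):
                image[y][x] = density
    return image


def build_condition(segments: List[Segment], size: int,
                    aging_time: float, max_time: float) -> List[List[List[float]]]:
    """Return a 2-channel input: current-density map plus a time plane.

    The second channel is an assumed way to feed aging time as a condition:
    a constant plane holding aging_time normalized by max_time.
    """
    wires = rasterize_wires(segments, size)
    t = aging_time / max_time
    time_plane = [[t] * size for _ in range(size)]
    return [wires, time_plane]


# A T-shaped, two-segment wire on a 5 x 5 grid.
example_segments = [(0, 2, 4, 2, 1.5), (2, 2, 2, 4, -0.5)]
condition = build_condition(example_segments, size=5, aging_time=5.0, max_time=10.0)
```

In a setup like this, a conditional generator would take `condition` as input and be trained to output a stress map of the same spatial size, with the reference simulation result as the target.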

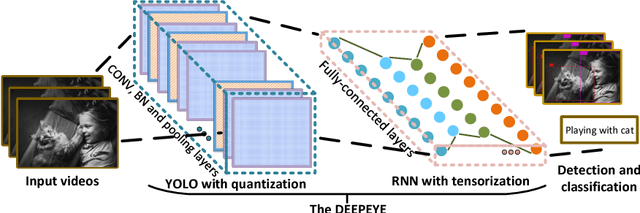

DEEPEYE: A Compact and Accurate Video Comprehension at Terminal Devices Compressed with Quantization and Tensorization

Jun 07, 2018

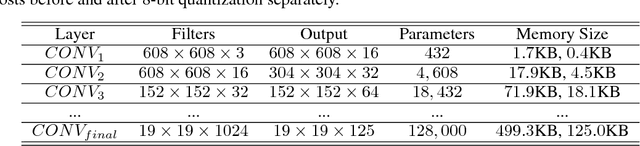

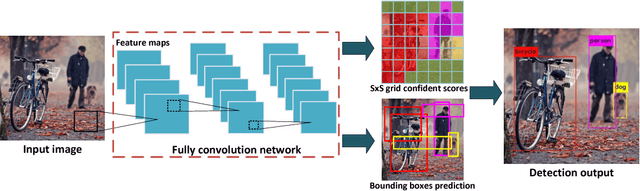

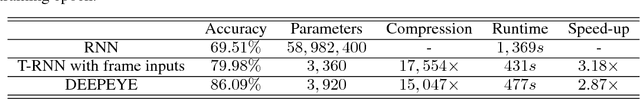

Abstract: Because video detection and classification on high-dimensional inputs require a huge number of parameters, developing a compact yet accurate video comprehension system for terminal devices is a grand challenge. Current works optimize video detection and classification separately. In this paper, we introduce DEEPEYE, a video comprehension (object detection and action recognition) system for terminal devices. Based on You Only Look Once (YOLO), we have developed an 8-bit quantization method applied when training YOLO, as well as a tensorized-compression method for a Recurrent Neural Network (RNN) fed with features extracted from YOLO. The developed quantization and tensorization significantly compress the original network model while maintaining accuracy. Using the challenging video datasets MOMENTS and UCF11 as benchmarks, the results show that the proposed DEEPEYE achieves a 3.994x model compression rate with only a 0.47% mAP decrease, and a 15,047x parameter reduction and 2.87x speed-up with a 16.58% accuracy improvement.
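As a rough illustration of what 8-bit quantization of network weights involves, the sketch below applies generic symmetric uniform quantization to a list of floats. This is not the DEEPEYE training-time method, which the abstract does not detail; the function names `quantize_int8` and `dequantize` and the per-tensor scale are assumptions chosen for simplicity.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Avoid division by zero for an all-zero (or empty) tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the 8-bit integers."""
    return [q * scale for q in quantized]


weights = [1.0, -1.0, 0.0, 0.2]
q_weights, q_scale = quantize_int8(weights)
restored = dequantize(q_weights, q_scale)
```

Each restored weight differs from the original by at most half a quantization step (`q_scale / 2`). Storing 8-bit integers in place of 32-bit floats shrinks weight storage by close to 4x, which is at least consistent with the 3.994x model compression rate reported in the abstract.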
