H. F. Machiel Van der Loos

University of British Columbia

Consistency Matters: Defining Demonstration Data Quality Metrics in Robot Learning from Demonstration

Dec 18, 2024

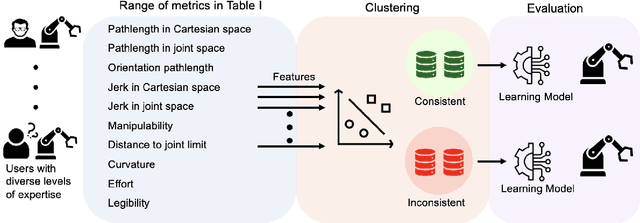

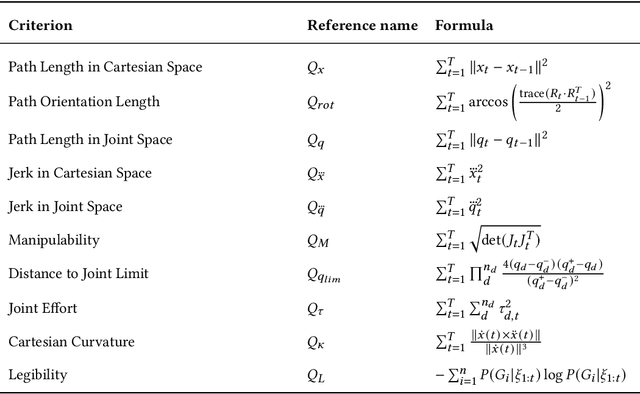

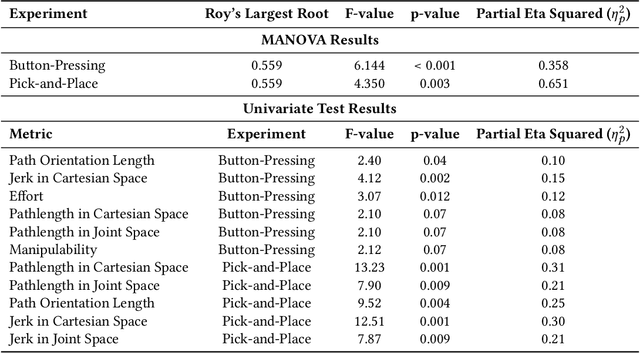

Abstract: Learning from Demonstration (LfD) empowers robots to acquire new skills through human demonstrations, making it feasible for everyday users to teach robots. However, the success of learning and generalization heavily depends on the quality of these demonstrations. Consistency is often used to indicate quality in LfD, yet the factors that define this consistency remain underexplored. In this paper, we evaluate a comprehensive set of motion data characteristics to determine which consistency measures best predict learning performance. By ensuring demonstration consistency prior to training, we enhance models' predictive accuracy and generalization to novel scenarios. We validate our approach with two user studies involving participants with diverse levels of robotics expertise. In the first study (N = 24), users taught a PR2 robot to perform a button-pressing task in a constrained environment, while in the second study (N = 30), participants trained a UR5 robot on a pick-and-place task. Results show that demonstration consistency significantly impacts success rates in both learning and generalization, with 70% and 89% of task success rates in the two studies predicted using our consistency metrics. Moreover, our metrics estimate generalized performance success rates with 76% and 91% accuracy. These findings suggest that our proposed measures provide an intuitive, practical way to assess demonstration data quality before training, without requiring expert data or algorithm-specific modifications. Our approach offers a systematic way to evaluate demonstration quality, addressing a critical gap in LfD by formalizing consistency metrics that enhance the reliability of robot learning from human demonstrations.

How Can Everyday Users Efficiently Teach Robots by Demonstrations?

Oct 19, 2023

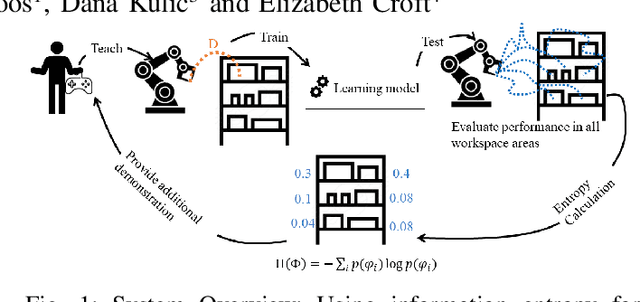

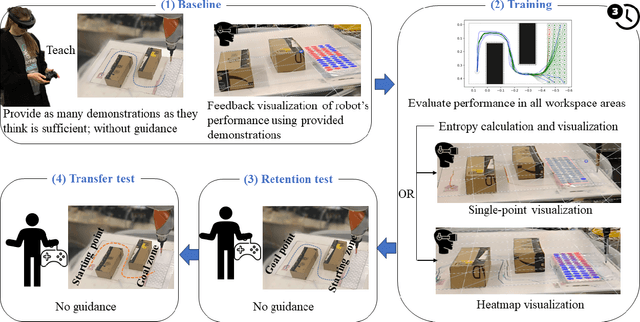

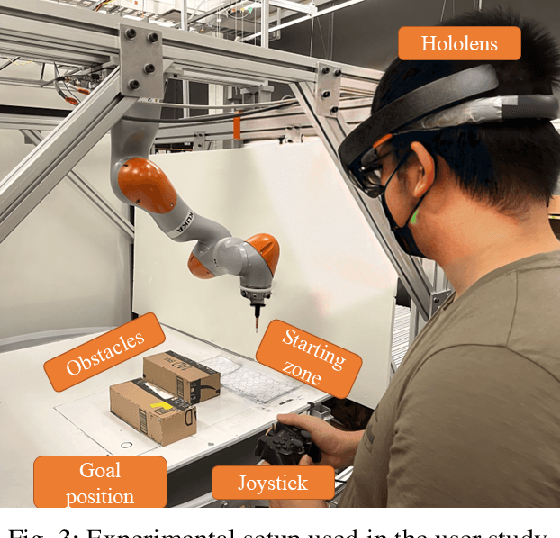

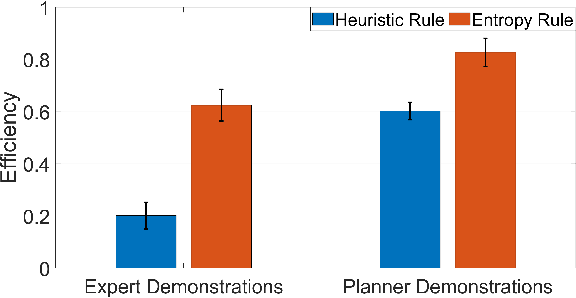

Abstract: Learning from Demonstration (LfD) is a framework that allows lay users to easily program robots. However, the efficiency of robot learning and the robot's ability to generalize to task variations hinge on the quality and quantity of the provided demonstrations. Our objective is to guide human teachers to furnish more effective demonstrations, thus facilitating efficient robot learning. To achieve this, we propose to use a measure of uncertainty, namely task-related information entropy, as a criterion for suggesting informative demonstration examples to human teachers to improve their teaching skills. In an experiment (N = 24), an augmented reality (AR)-based guidance system was employed to train novice users to produce additional demonstrations from areas with the highest entropy within the workspace. These novice users were trained for a few trials to teach the robot a generalizable task using a limited number of demonstrations. Subsequently, the users' performance after training was assessed first on the same task (retention) and then on a novel task (transfer) without guidance. The results indicated a substantial improvement in robot learning efficiency from the teacher's demonstrations, with an improvement of up to 198% observed on the novel task. Furthermore, the proposed approach was compared to a state-of-the-art heuristic rule and found to improve robot learning efficiency by 210%.
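As a rough illustration of entropy-guided demonstration suggestions, the sketch below picks the workspace region whose outcome is most uncertain. It is not the paper's definition of task-related information entropy: it assumes, purely for illustration, that each workspace cell has an estimated probability of task success and scores it with binary Shannon entropy. The names `binary_entropy`, `most_uncertain_cell`, and the cell labels are hypothetical.

```python
import math


def binary_entropy(p):
    """Shannon entropy (in bits) of a success/failure outcome with P(success) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a certain outcome carries no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def most_uncertain_cell(success_prob):
    """Return the key of the cell with the highest binary entropy.

    success_prob: dict mapping a (hypothetical) workspace cell label to the
    estimated probability that the learned skill succeeds there.
    """
    return max(success_prob, key=lambda cell: binary_entropy(success_prob[cell]))


# Hypothetical estimates for three workspace cells (illustrative values only).
estimates = {"left": 0.9, "centre": 0.55, "right": 0.1}
suggested = most_uncertain_cell(estimates)
```

Under this assumption, a cell with a success probability near 0.5 is the one where an extra demonstration is expected to be most informative, so `suggested` is the cell a guidance system would point the teacher toward.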

Quantifying Demonstration Quality for Robot Learning and Generalization

Mar 25, 2022

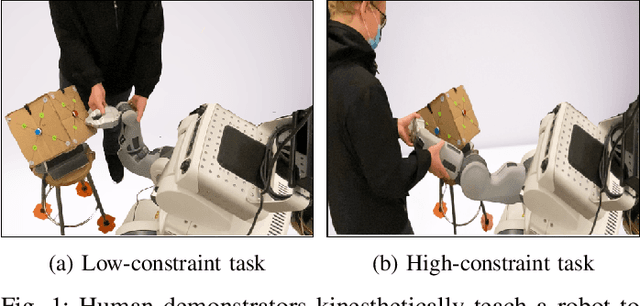

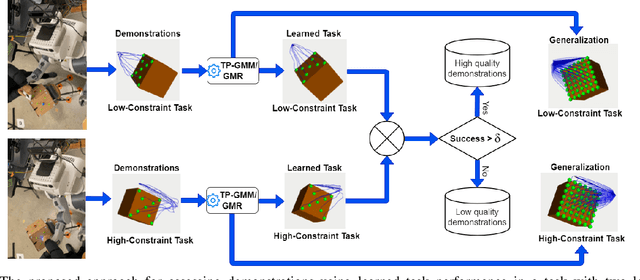

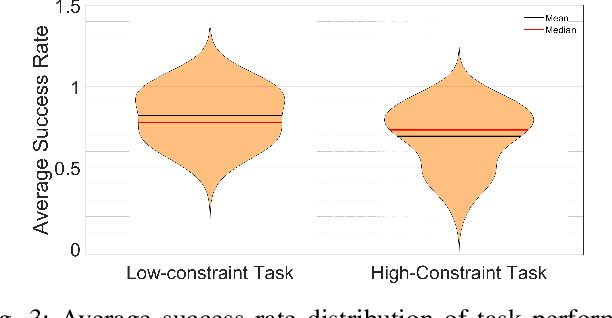

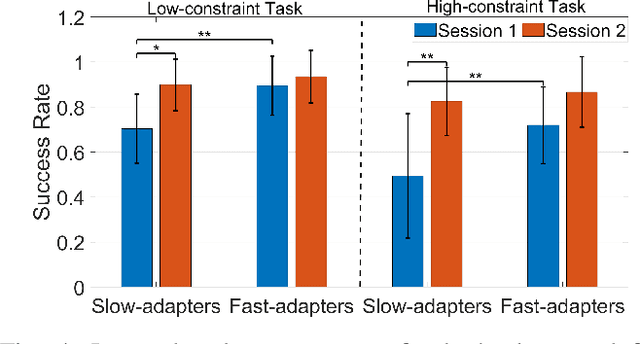

Abstract: Learning from Demonstration (LfD) seeks to democratize robotics by enabling diverse end-users to teach robots to perform a task by providing demonstrations. However, most LfD techniques assume users provide optimal demonstrations. This is not always the case in real applications, where users are likely to provide demonstrations of varying quality, which may change with expertise and other factors. Demonstration quality plays a crucial role in robot learning and generalization. Hence, it is important to quantify the quality of the provided demonstrations before using them for robot learning. In this paper, we propose quantifying the quality of the demonstrations based on how well they perform in the learned task. We hypothesize that task performance can give an indication of the generalization performance on similar tasks. The proposed approach is validated in a user study (N = 27). Users with different robotics expertise levels were recruited to teach a PR2 robot a generic task (pressing a button) under different task constraints. They taught the robot in two sessions on two different days to capture their teaching behaviour across sessions. The task performance was utilized to classify the provided demonstrations into high-quality and low-quality sets. The results show a significant Pearson correlation coefficient (R = 0.85, p < 0.0001) between the task performance and generalization performance across all participants. We also found that users clustered into two groups: users who provided high-quality demonstrations from the first session, assigned to the fast-adapters group, and users who provided low-quality demonstrations in the first session and then improved with practice, assigned to the slow-adapters group. These results highlight the importance of quantifying demonstration quality, which can be indicative of the adaptation level of the user to the task.
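For readers unfamiliar with the statistic reported above, the following minimal sketch computes a Pearson correlation coefficient between two paired score lists. The function name `pearson_r` and the sample values are hypothetical and are not data from the study; the sketch only shows how such a coefficient is obtained.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-participant scores (illustrative values only).
task_performance = [0.2, 0.4, 0.5, 0.7, 0.9]
generalization_performance = [0.1, 0.5, 0.4, 0.8, 0.9]
r = pearson_r(task_performance, generalization_performance)
```

A value of `r` close to 1 indicates that participants whose demonstrations performed well on the taught task also tended to yield good generalization, which is the kind of relationship the abstract reports.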

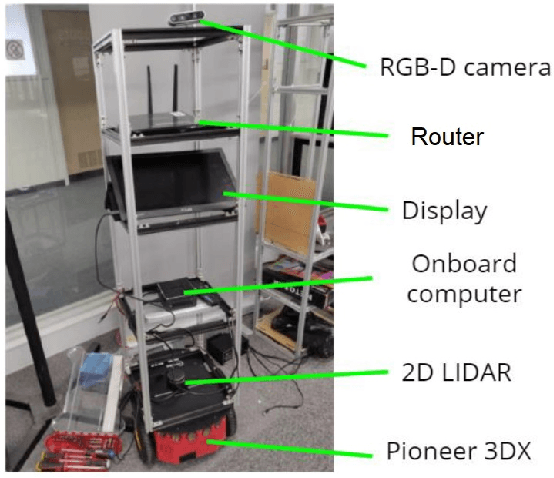

Group Surfing: A Pedestrian-Based Approach to Sidewalk Robot Navigation

Apr 13, 2021

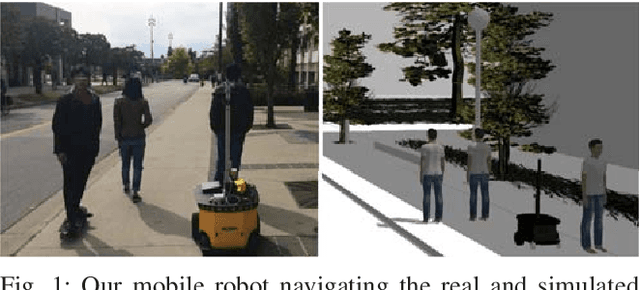

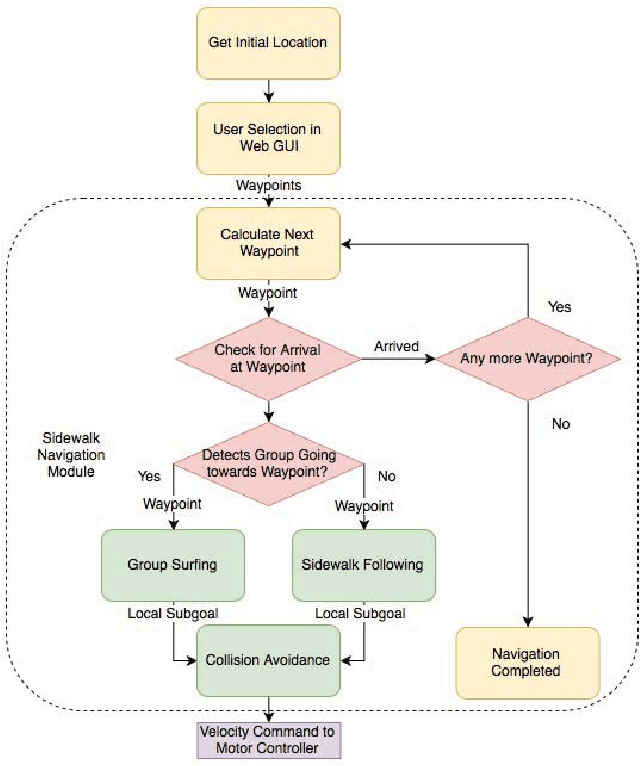

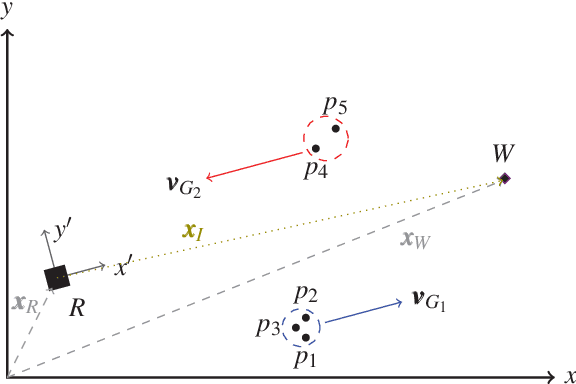

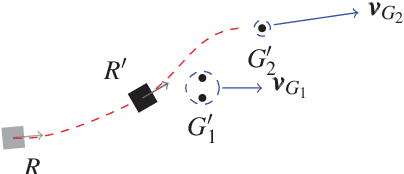

Abstract: In this paper, we propose a novel navigation system for mobile robots in pedestrian-rich sidewalk environments. Sidewalks are unique in that the pedestrian-shared space has characteristics of both roads and indoor spaces. Like vehicles on roads, pedestrian movement often manifests as linear flows in opposing directions. On the other hand, pedestrians also form crowds and can exhibit much more random movements than vehicles. Classical algorithms are insufficient for navigating safely around pedestrians while remaining on the sidewalk. Thus, our approach takes advantage of natural human motion to allow a robot to adapt to sidewalk navigation in a safe and socially-compliant manner. We developed a *group surfing* method which aims to imitate the optimal pedestrian group for bringing the robot closer to its goal. For pedestrian-sparse environments, we propose a sidewalk edge detection and following method. Underlying these two navigation methods, the collision avoidance scheme is human-aware. The integrated navigation stack is evaluated and demonstrated in simulation. A hardware demonstration is also presented.

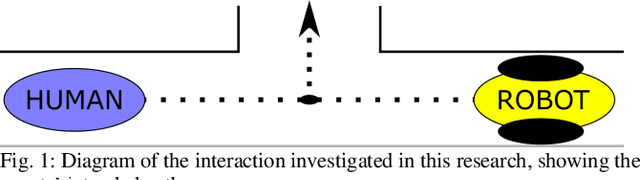

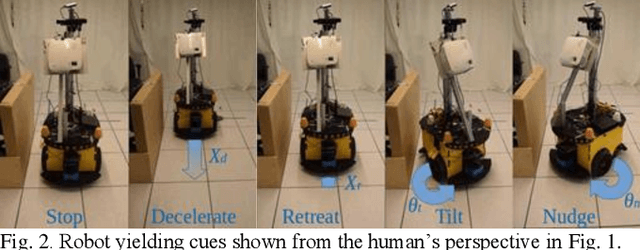

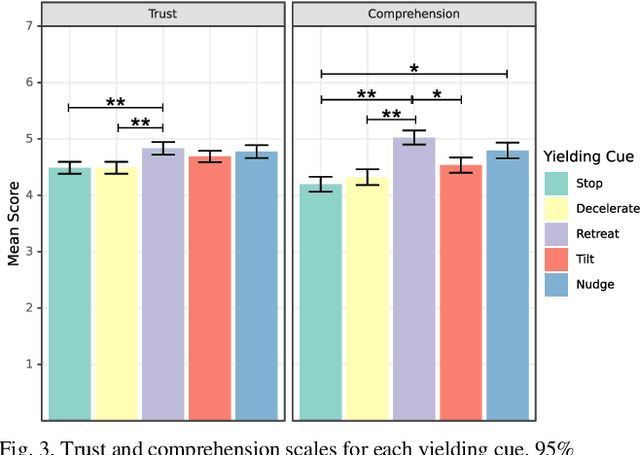

Mobile Robot Yielding Cues for Human-Robot Spatial Interaction

Apr 06, 2021

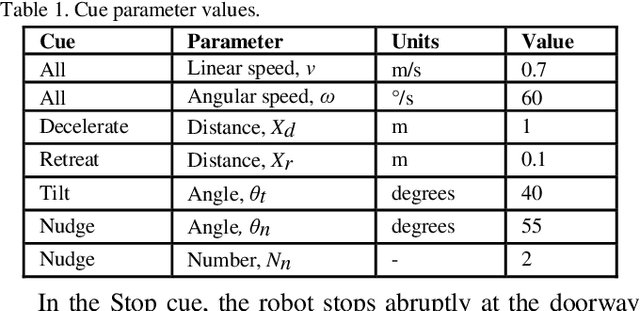

Abstract: Mobile robots are increasingly being deployed in public spaces such as shopping malls, airports, and urban sidewalks. Most of these robots are designed with human-aware motion planning capabilities but are not designed to communicate with pedestrians. Pedestrians encounter these robots without prior understanding of the robots' behaviour, which can cause discomfort, confusion, and delayed social acceptance. In this research, we explore the common human-robot interaction at a doorway or bottleneck in a structured environment. We designed and evaluated communication cues used by a robot when yielding to a pedestrian in this scenario. We conducted an online user study with 102 participants using videos of a set of robot-to-human yielding cues. Results show that a Robot Retreating cue was the most socially acceptable cue. The results of this work help guide the development of mobile robots for public spaces.

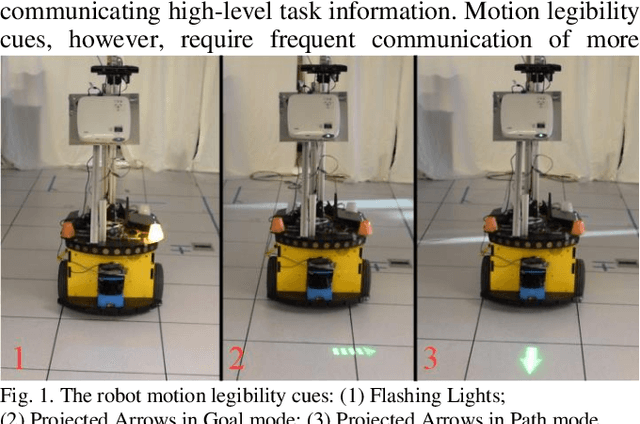

Hey Robot, Which Way Are You Going? Nonverbal Motion Legibility Cues for Human-Robot Spatial Interaction

Apr 06, 2021

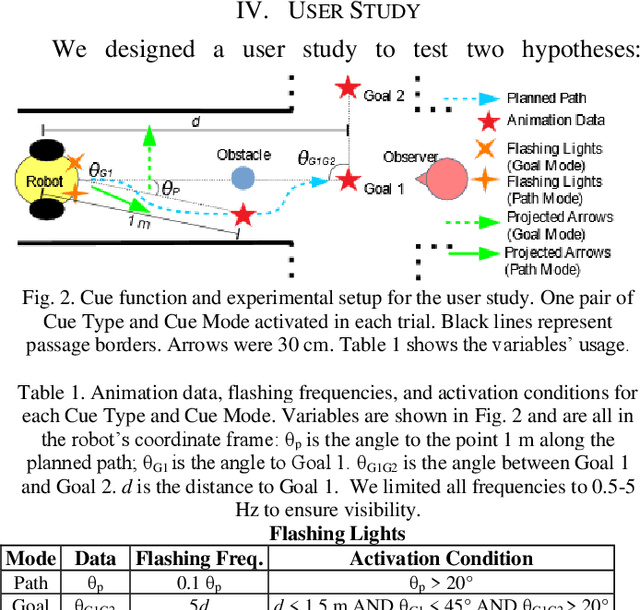

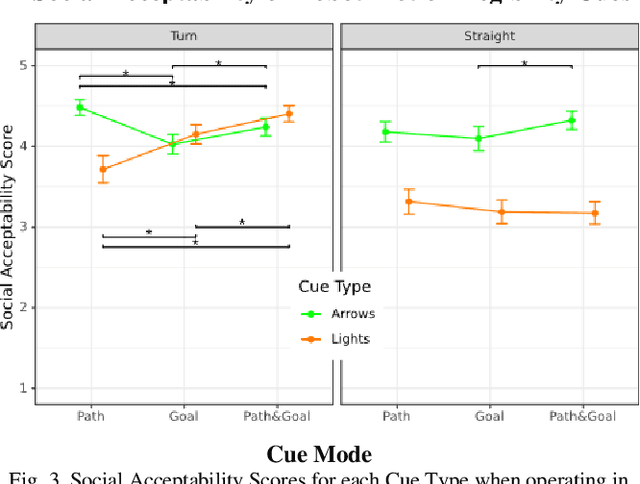

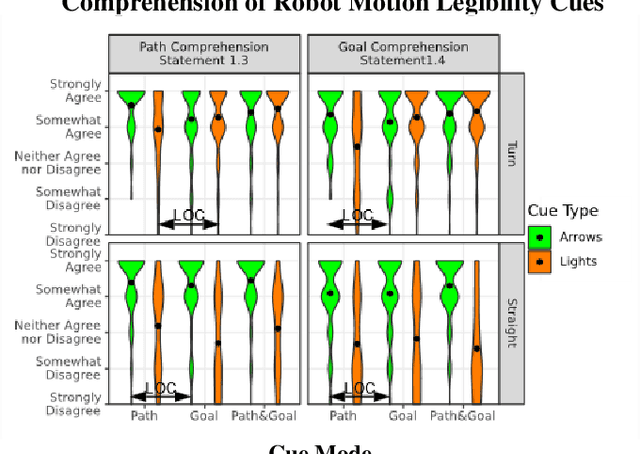

Abstract: Mobile robots have recently been deployed in public spaces such as shopping malls, airports, and urban sidewalks. Most of these robots are designed with human-aware motion planning capabilities but are not designed to communicate with pedestrians. Pedestrians who encounter these robots without prior understanding of the robots' behaviour can experience discomfort, confusion, and delayed social acceptance. In this work, we designed and evaluated nonverbal robot motion legibility cues, which communicate a mobile robot's motion intention to pedestrians. We compared a motion legibility cue using Projected Arrows to one using Flashing Lights. We designed the cues to communicate path information, goal information, or both, and explored different Robot Movement Scenarios. We conducted an online user study with 229 participants using videos of the motion legibility cues. Our results show that the absence of cues was not socially acceptable, and that Projected Arrows were the more socially acceptable cue in most experimental conditions. We conclude that the presence and choice of motion legibility cues can positively influence robots' acceptance and successful deployment in public spaces.

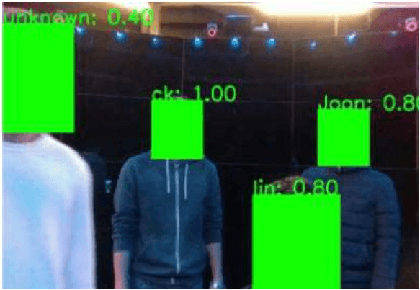

Autonomous Person-Specific Following Robot

Nov 11, 2020

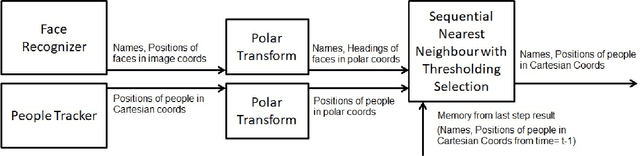

Abstract: Following a specific user is a desired or even required capability for service robots in many human-robot collaborative applications. However, most existing person-following robots follow people without knowing whom they are following. In this paper, we propose an identity-specific person tracker, capable of tracking and identifying nearby people, to enable person-specific following. Our proposed method uses a Sequential Nearest Neighbour with Thresholding Selection algorithm we devised to fuse together an anonymous person tracker and a face recogniser. Experimental results comparing our proposed method with alternative approaches showed that our method achieves better performance in tracking and identifying people, as well as improved robot performance in following a target individual.
