Gwenolé Quellec

Patch Progression Masked Autoencoder with Fusion CNN Network for Classifying Evolution Between Two Pairs of 2D OCT Slices

Aug 27, 2025

Abstract: Age-related Macular Degeneration (AMD) is a prevalent eye condition affecting visual acuity. Anti-vascular endothelial growth factor (anti-VEGF) treatments have been effective in slowing the progression of neovascular AMD, with better outcomes achieved through timely diagnosis and consistent monitoring. Tracking the progression of neovascular activity in OCT scans of patients with exudative AMD allows for the development of more personalized and effective treatment plans. This was the focus of the Monitoring Age-related Macular Degeneration Progression in Optical Coherence Tomography (MARIO) challenge, in which we participated. In Task 1, which involved classifying the evolution between two pairs of 2D slices from consecutive OCT acquisitions, we employed a fusion CNN network with model ensembling to further enhance the model's performance. For Task 2, which focused on predicting progression over the next three months based on current exam data, we proposed the Patch Progression Masked Autoencoder that generates an OCT for the next exam and then classifies the evolution between the current OCT and the one generated using our solution from Task 1. The results we achieved allowed us to place in the Top 10 for both tasks. Some team members are part of the same organization as the challenge organizers; therefore, we are not eligible to compete for the prize.

* 10 pages, 5 figures, 3 tables, challenge/conference paper

Suicide Risk Assessment Using Multimodal Speech Features: A Study on the SW1 Challenge Dataset

May 19, 2025

Abstract: The 1st SpeechWellness Challenge conveys the need for speech-based suicide risk assessment in adolescents. This study investigates a multimodal approach for this challenge, integrating automatic transcription with WhisperX, linguistic embeddings from Chinese RoBERTa, and audio embeddings from WavLM. Additionally, handcrafted acoustic features, including MFCCs, spectral contrast, and pitch-related statistics, were incorporated. We explored three fusion strategies: early concatenation, modality-specific processing, and weighted attention with mixup regularization. Results show that weighted attention provided the best generalization, achieving 69% accuracy on the development set, though a performance gap between development and test sets highlights generalization challenges. Our findings, strictly tied to the MINI-KID framework, emphasize the importance of refining embedding representations and fusion mechanisms to enhance classification reliability.
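As a rough illustration of the "weighted attention with mixup regularization" strategy named in the abstract above, the sketch below fuses per-modality embeddings with softmax-normalized weights and mixes two training examples. It is not the authors' implementation: the function names, the assumption that all modalities are already projected to a common dimension, and the use of fixed scores (which would be learned parameters in practice) are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_attention_fusion(embeddings, scores):
    """Fuse same-sized modality embeddings into one vector.

    embeddings: one vector per modality (e.g. text, audio, handcrafted),
                assumed to share a common dimension (an assumption here).
    scores:     one attention score per modality; learned in a real model,
                passed in as plain numbers in this sketch.
    """
    weights = softmax(scores)
    dim = len(embeddings[0])
    fused = [
        sum(w * emb[i] for w, emb in zip(weights, embeddings))
        for i in range(dim)
    ]
    return fused, weights


def mixup(x_a, x_b, y_a, y_b, lam):
    """Mixup: convex combination of two examples and of their labels."""
    x = [lam * a + (1 - lam) * b for a, b in zip(x_a, x_b)]
    y = lam * y_a + (1 - lam) * y_b
    return x, y
```

With equal scores the fusion reduces to a plain average of the modality embeddings; unequal scores let one modality dominate.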

Deep learning-enabled prediction of surgical errors during cataract surgery: from simulation to real-world application

Mar 28, 2025

Abstract: Real-time prediction of technical errors from cataract surgical videos can be highly beneficial, particularly for telementoring, which involves remote guidance and mentoring through digital platforms. However, the rarity of surgical errors makes their detection and analysis challenging using artificial intelligence. To tackle this issue, we leveraged videos from the EyeSi Surgical cataract surgery simulator to learn to predict errors and transfer the acquired knowledge to real-world surgical contexts. By employing deep learning models, we demonstrated the feasibility of making real-time predictions using simulator data with a very short temporal history, enabling on-the-fly computations. We then transferred these insights to real-world settings through unsupervised domain adaptation, without relying on labeled videos from real surgeries for training, which are limited. This was achieved by aligning video clips from the simulator with real-world footage and pre-training the models using pretext tasks on both simulated and real surgical data. For a 1-second prediction window on the simulator, we achieved an overall AUC of 0.820 for error prediction using 600$\times$600 pixel images, and 0.784 using smaller 299$\times$299 pixel images. In real-world settings, we obtained an AUC of up to 0.663 with domain adaptation, marking an improvement over direct model application without adaptation, which yielded an AUC of 0.578. To our knowledge, this is the first work to address the tasks of learning surgical error prediction on a simulator using video data only and transferring this knowledge to real-world cataract surgery.

Context-Aware Vision Language Foundation Models for Ocular Disease Screening in Retinal Images

Mar 19, 2025

Abstract: Foundation models are large-scale versatile systems trained on vast quantities of diverse data to learn generalizable representations. Their adaptability with minimal fine-tuning makes them particularly promising for medical imaging, where data variability and domain shifts are major challenges. Currently, two types of foundation models dominate the literature: self-supervised models and more recent vision-language models. In this study, we advance the application of vision-language foundation (VLF) models for ocular disease screening using the OPHDIAT dataset, which includes nearly 700,000 fundus photographs from a French diabetic retinopathy (DR) screening network. This dataset provides extensive clinical data (patient-specific information such as diabetic health conditions and treatments), labeled diagnostics, ophthalmologists' text-based findings, and multiple retinal images for each examination. Building on the FLAIR model, a VLF model for retinal pathology classification, we propose novel context-aware VLF models (e.g., jointly analyzing multiple images from the same visit or taking advantage of past diagnoses and contextual data) to fully leverage the richness of the OPHDIAT dataset and enhance robustness to domain shifts. Our approaches were evaluated on both in-domain (a testing subset of OPHDIAT) and out-of-domain data (public datasets) to assess their generalization performance. Our model demonstrated improved in-domain performance for DR grading, achieving an area under the curve (AUC) ranging from 0.851 to 0.9999, and generalized well to ocular disease detection on out-of-domain data (AUC: 0.631-0.913).

LaTiM: Longitudinal representation learning in continuous-time models to predict disease progression

Apr 10, 2024

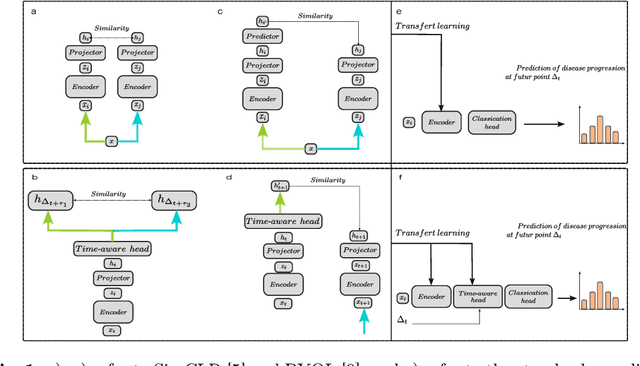

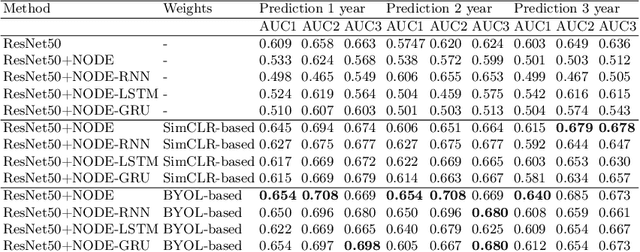

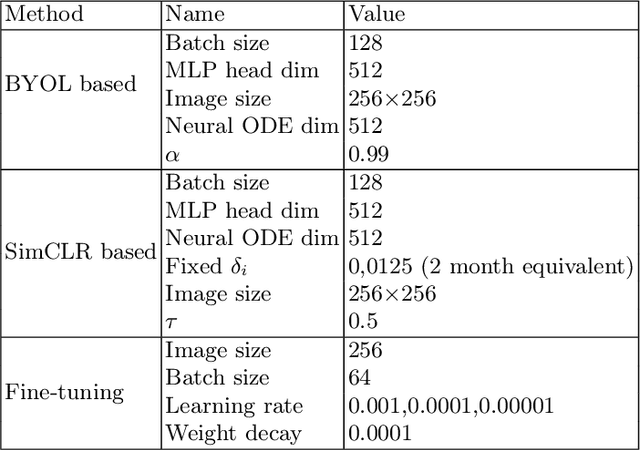

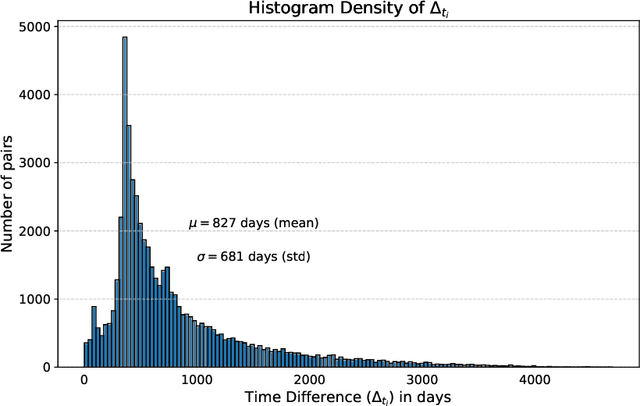

Abstract: This work proposes a novel framework for analyzing disease progression using time-aware neural ordinary differential equations (NODE). We introduce a "time-aware head" in a framework trained through self-supervised learning (SSL) to leverage temporal information in latent space for data augmentation. This approach effectively integrates NODEs with SSL, offering significant performance improvements compared to traditional methods that lack explicit temporal integration. We demonstrate the effectiveness of our strategy for diabetic retinopathy progression prediction using the OPHDIAT database. Compared to the baseline, all NODE architectures achieve statistically significant improvements in area under the ROC curve (AUC) and Kappa metrics, highlighting the efficacy of pre-training with SSL-inspired approaches. Additionally, our framework promotes stable training for NODEs, a commonly encountered challenge in time-aware modeling.

L-MAE: Longitudinal masked auto-encoder with time and severity-aware encoding for diabetic retinopathy progression prediction

Mar 24, 2024

Abstract: Pre-training strategies based on self-supervised learning (SSL) have proven to be effective pretext tasks for many downstream tasks in computer vision. Due to the significant disparity between medical and natural images, the application of typical SSL is not straightforward in medical imaging. Additionally, those pretext tasks often lack context, which is critical for computer-aided clinical decision support. In this paper, we developed a longitudinal masked auto-encoder (MAE) based on the well-known Transformer-based MAE. In particular, we explored the importance of time-aware position embedding as well as disease progression-aware masking. Taking into account the time between examinations instead of just scheduling them offers the benefit of capturing temporal changes and trends. The masking strategy, for its part, evolves during follow-up to better capture pathological changes, ensuring a more accurate assessment of disease progression. Using OPHDIAT, a large follow-up screening dataset targeting diabetic retinopathy (DR), we evaluated the pre-trained weights on a longitudinal task, which is to predict the severity label of the next visit within 3 years based on the past time series examinations. Our results demonstrated the relevancy of both time-aware position embedding and masking strategies based on disease progression knowledge. Compared to popular baseline models and standard longitudinal Transformers, these simple yet effective extensions significantly enhance the predictive ability of deep classification models.
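The idea of a time-aware position embedding mentioned in the abstract above can be illustrated with a minimal sketch: a standard sinusoidal encoding evaluated at the actual elapsed time of each examination rather than at its index in the sequence. The abstract does not specify the encoding actually used, so the function name, the sinusoidal form, the unit (months), and the default parameters are all assumptions made for illustration.

```python
import math


def time_aware_embedding(t_months, dim=8, max_period=10000.0):
    """Sinusoidal embedding of a continuous time stamp (hypothetical sketch).

    t_months:   elapsed time since the first examination, in months (assumed unit).
    dim:        embedding size; must be even.
    max_period: base of the geometric frequency progression, as in the
                usual Transformer positional encoding.
    """
    emb = []
    for k in range(dim // 2):
        freq = 1.0 / (max_period ** (2 * k / dim))
        emb.append(math.sin(t_months * freq))
        emb.append(math.cos(t_months * freq))
    return emb


# Three visits at irregular intervals: index-based encoding would see
# positions 0, 1, 2, whereas the time-aware variant sees 0, 6, and 30 months.
visit_times = [0.0, 6.0, 30.0]
embeddings = [time_aware_embedding(t) for t in visit_times]
```

The point of the sketch is only that two visits six months apart and two visits two years apart receive different relative encodings, which an index-based embedding cannot express.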

Generalizing deep learning models for medical image classification

Mar 21, 2024

Abstract: Numerous Deep Learning (DL) models have been developed for a large spectrum of medical image analysis applications, which promises to reshape various facets of medical practice. Despite early advances in DL model validation and implementation, which encourage healthcare institutions to adopt them, some fundamental questions remain: are the DL models capable of generalizing? What causes a drop in DL model performance? How can this performance drop be overcome? Medical data are dynamic and prone to domain shift: multiple factors, such as updates to medical equipment, new imaging workflows, and shifts in patient demographics or populations, can induce this drift over time. In this paper, we review recent developments in generalization methods for DL-based classification models. We also discuss future challenges, including the need for improved evaluation protocols and benchmarks, and envisioned future developments to achieve robust, generalized models for medical image classification.

DISCOVER: 2-D Multiview Summarization of Optical Coherence Tomography Angiography for Automatic Diabetic Retinopathy Diagnosis

Jan 10, 2024

Abstract: Diabetic Retinopathy (DR), an ocular complication of diabetes, is a leading cause of blindness worldwide. Traditionally, DR is monitored using Color Fundus Photography (CFP), a widespread 2-D imaging modality. However, DR classifications based on CFP have poor predictive power, resulting in suboptimal DR management. Optical Coherence Tomography Angiography (OCTA) is a recent 3-D imaging modality offering enhanced structural and functional information (blood flow) with a wider field of view. This paper investigates automatic DR severity assessment using 3-D OCTA. A straightforward solution to this task is a 3-D neural network classifier. However, 3-D architectures have numerous parameters and typically require many training samples. A lighter solution consists in using 2-D neural network classifiers processing 2-D en-face (or frontal) projections and/or 2-D cross-sectional slices. Such an approach mimics the way ophthalmologists analyze OCTA acquisitions: 1) en-face flow maps are often used to detect avascular zones and neovascularization, and 2) cross-sectional slices are commonly analyzed to detect macular edemas, for instance. However, arbitrary data reduction or selection might result in information loss. Two complementary strategies are thus proposed to optimally summarize OCTA volumes with 2-D images: 1) a parametric en-face projection optimized through deep learning and 2) a cross-sectional slice selection process controlled through gradient-based attribution. The full summarization and DR classification pipeline is trained from end to end. The automatic 2-D summary can be displayed in a viewer or printed in a report to support the decision. We show that the proposed 2-D summarization and classification pipeline outperforms direct 3-D classification with the advantage of improved interpretability.
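One simple way to picture a parametric en-face projection, as mentioned in the abstract above, is a weighted sum of the volume along the depth axis, where the per-depth weights are trainable. The sketch below uses a softmax over depth logits; this parameterization, the function name, and the `volume[z][y][x]` layout are assumptions chosen for illustration, since the abstract does not describe the form of the projection actually used.

```python
import math


def enface_projection(volume, depth_logits):
    """Collapse a 3-D volume into a 2-D en-face image (hypothetical sketch).

    volume:       nested lists indexed as volume[z][y][x], z being depth.
    depth_logits: one logit per depth slice; in a trained pipeline these
                  would be learned, here they are plain numbers.
    Returns an image[y][x] equal to the softmax-weighted sum over depth.
    """
    m = max(depth_logits)
    exps = [math.exp(l - m) for l in depth_logits]
    total = sum(exps)
    weights = [e / total for e in exps]

    height, width = len(volume[0]), len(volume[0][0])
    return [
        [sum(w * volume[z][y][x] for z, w in enumerate(weights))
         for x in range(width)]
        for y in range(height)
    ]
```

Equal logits give the usual mean-intensity projection, while a strongly peaked set of logits selects a single depth layer, so this family interpolates between the two.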

Automated Detection of Myopic Maculopathy in MMAC 2023: Achievements in Classification, Segmentation, and Spherical Equivalent Prediction

Jan 08, 2024

Abstract: Myopic macular degeneration is the most common complication of myopia and the primary cause of vision loss in individuals with pathological myopia. Early detection and prompt treatment are crucial in preventing vision impairment due to myopic maculopathy. This was the focus of the Myopic Maculopathy Analysis Challenge (MMAC), in which we participated. In Task 1 (classification of myopic maculopathy), we employed the contrastive learning framework, specifically SimCLR, to enhance classification accuracy by effectively capturing enriched features from unlabeled data. This approach not only improved the intrinsic understanding of the data but also elevated the performance of our classification model. For Task 2 (segmentation of myopic maculopathy plus lesions), we developed independent segmentation models tailored for different lesion segmentation tasks and implemented a test-time augmentation strategy to further enhance the model's performance. As for Task 3 (prediction of spherical equivalent), we designed a deep regression model based on the data distribution of the dataset and employed an integration strategy to enhance the model's prediction accuracy. The results we obtained are promising and have allowed us to position ourselves in the Top 6 of the classification task, the Top 2 of the segmentation task, and the Top 1 of the prediction task. The code is available at https://github.com/liyihao76/MMAC_LaTIM_Solution.

LMT: Longitudinal Mixing Training, a Framework to Predict Disease Progression from a Single Image

Oct 16, 2023

Abstract: Longitudinal imaging is able to capture both static anatomical structures and dynamic changes in disease progression toward earlier and better patient-specific pathology management. However, conventional approaches rarely take advantage of longitudinal information for detection and prediction purposes, especially for Diabetic Retinopathy (DR). In the past years, Mix-up training and pretext tasks with longitudinal context have effectively enhanced DR classification results and captured disease progression. In the meantime, a novel type of neural network named Neural Ordinary Differential Equation (NODE) has been proposed for solving ordinary differential equations, with a neural network treated as a black box. By definition, NODE is well suited for solving time-related problems. In this paper, we propose to combine these three aspects to detect and predict DR progression. Our framework, Longitudinal Mixing Training (LMT), can be considered both as a regularizer and as a pretext task that encodes the disease progression in the latent space. Additionally, we evaluate the trained model weights on a downstream task with a longitudinal context using standard and longitudinal pretext tasks. We introduce a new way to train time-aware models using $t_{mix}$, a weighted average time between two consecutive examinations. We compare our approach to standard mixing training on DR classification using OPHDIAT, a longitudinal retinal Color Fundus Photographs (CFP) dataset. We were able to predict whether an eye would develop a severe DR in the following visit using a single image, with an AUC of 0.798 compared to baseline results of 0.641. Our results indicate that our longitudinal pretext task can learn the progression of DR disease and that introducing $t_{mix}$ augmentation is beneficial for time-aware models.
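The abstract above describes $t_{mix}$ as a weighted average time between two consecutive examinations. A minimal sketch of that idea, assuming the same mixing coefficient is applied to the images and to their time stamps (as in standard mixup), could look as follows. The function names, the flat-list image representation, and the Beta-distribution parameter are hypothetical and not taken from the paper.

```python
import random


def longitudinal_mix(x1, t1, x2, t2, lam):
    """Mix two consecutive examinations and their acquisition times.

    x1, x2: flattened images from two consecutive visits (lists of floats).
    t1, t2: their acquisition times (same unit, e.g. months).
    lam:    mixing coefficient in [0, 1].
    Returns (x_mix, t_mix), where t_mix = lam * t1 + (1 - lam) * t2.
    """
    x_mix = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    t_mix = lam * t1 + (1 - lam) * t2
    return x_mix, t_mix


def sample_lambda(alpha=0.4, rng=random):
    """Draw a mixing coefficient from Beta(alpha, alpha), as in standard mixup.

    The value of alpha is an arbitrary illustrative choice.
    """
    return rng.betavariate(alpha, alpha)
```

For example, mixing a baseline visit at month 0 with a follow-up at month 12 using `lam = 0.25` yields a synthetic sample whose time stamp is month 9, which a time-aware model can then be trained on.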
