Pascale Massin

Context-Aware Vision Language Foundation Models for Ocular Disease Screening in Retinal Images

Mar 19, 2025

Abstract: Foundation models are large-scale, versatile systems trained on vast quantities of diverse data to learn generalizable representations. Their adaptability with minimal fine-tuning makes them particularly promising for medical imaging, where data variability and domain shifts are major challenges. Currently, two types of foundation models dominate the literature: self-supervised models and more recent vision-language models. In this study, we advance the application of vision-language foundation (VLF) models for ocular disease screening using the OPHDIAT dataset, which includes nearly 700,000 fundus photographs from a French diabetic retinopathy (DR) screening network. This dataset provides extensive clinical data (patient-specific information such as diabetic health conditions and treatments), labeled diagnostics, ophthalmologists' text-based findings, and multiple retinal images for each examination. Building on the FLAIR model, a VLF model for retinal pathology classification, we propose novel context-aware VLF models (e.g., jointly analyzing multiple images from the same visit or taking advantage of past diagnoses and contextual data) to fully leverage the richness of the OPHDIAT dataset and enhance robustness to domain shifts. Our approaches were evaluated on both in-domain data (a testing subset of OPHDIAT) and out-of-domain data (public datasets) to assess their generalization performance. Our model demonstrated improved in-domain performance for DR grading, achieving an area under the curve (AUC) ranging from 0.851 to 0.9999, and generalized well to ocular disease detection on out-of-domain data (AUC: 0.631-0.913).

ExplAIn: Explanatory Artificial Intelligence for Diabetic Retinopathy Diagnosis

Sep 01, 2020

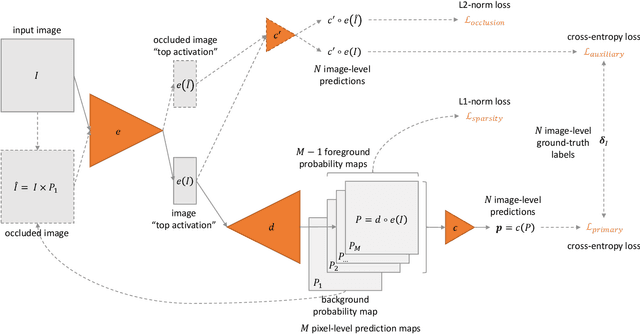

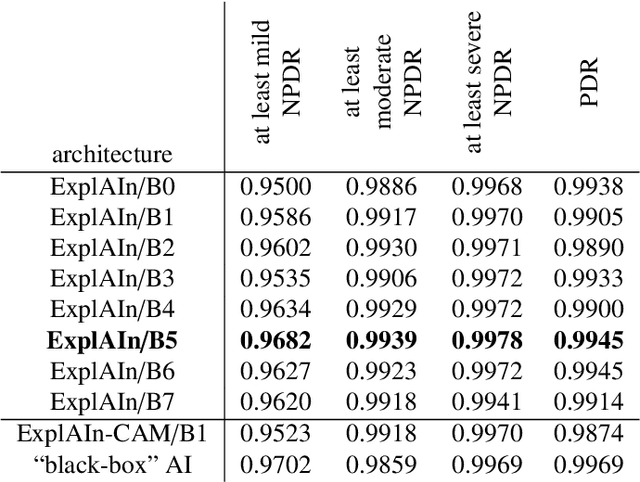

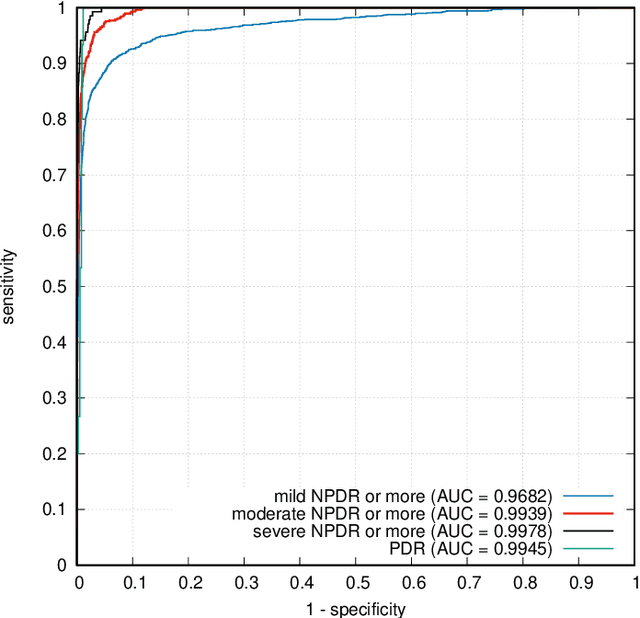

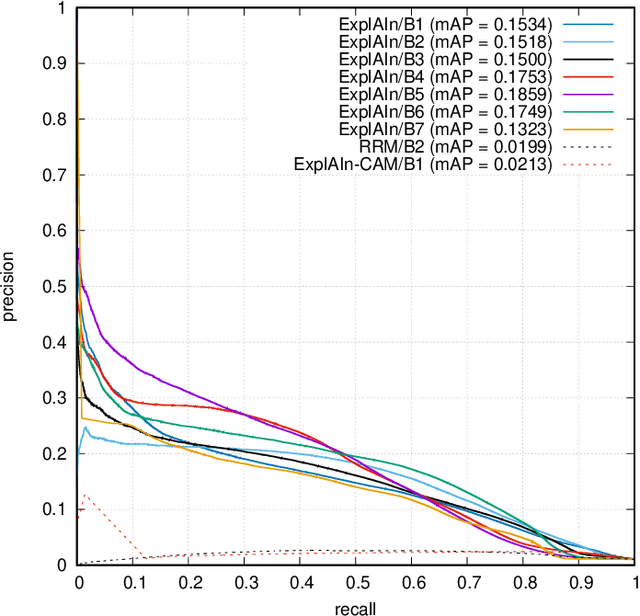

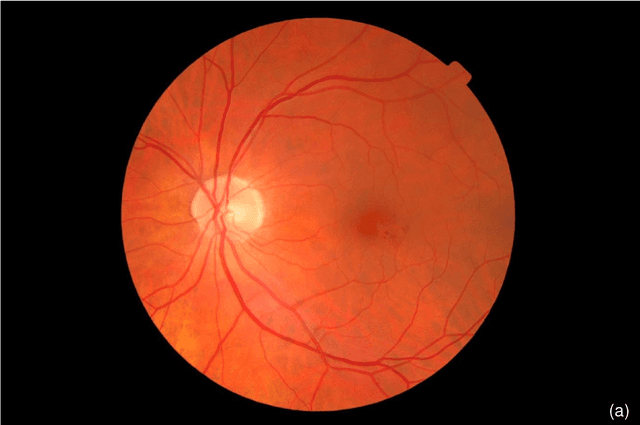

Abstract: In recent years, Artificial Intelligence (AI) has proven its relevance for medical decision support. However, the "black-box" nature of successful AI algorithms still holds back their widespread deployment. In this paper, we describe an eXplanatory Artificial Intelligence (XAI) that reaches the same level of performance as black-box AI for the task of classifying Diabetic Retinopathy (DR) severity using Color Fundus Photography (CFP). This algorithm, called ExplAIn, learns to segment and categorize lesions in images; the final image-level classification derives directly from these multivariate lesion segmentations. The novelty of this explanatory framework is that it is trained from end to end, with image supervision only, just like black-box AI algorithms: the concepts of lesions and lesion categories emerge by themselves. For improved lesion localization, foreground/background separation is trained through self-supervision, in such a way that occluding foreground pixels transforms the input image into a healthy-looking image. The advantage of such an architecture is that automatic diagnoses can be explained simply by an image and/or a few sentences. ExplAIn is evaluated at the image level and at the pixel level on various CFP image datasets. We expect this new framework, which jointly offers high classification performance and explainability, to facilitate AI deployment.

Automatic detection of multiple pathologies in fundus photographs using spin-off learning

Jul 23, 2019

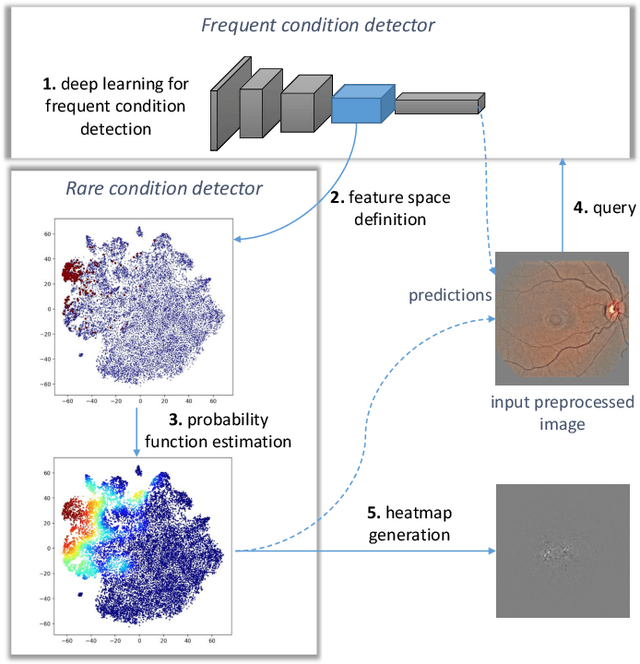

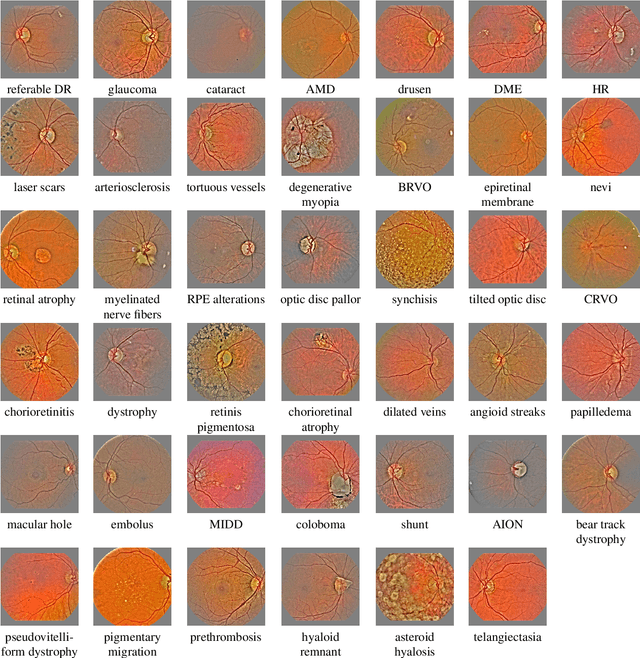

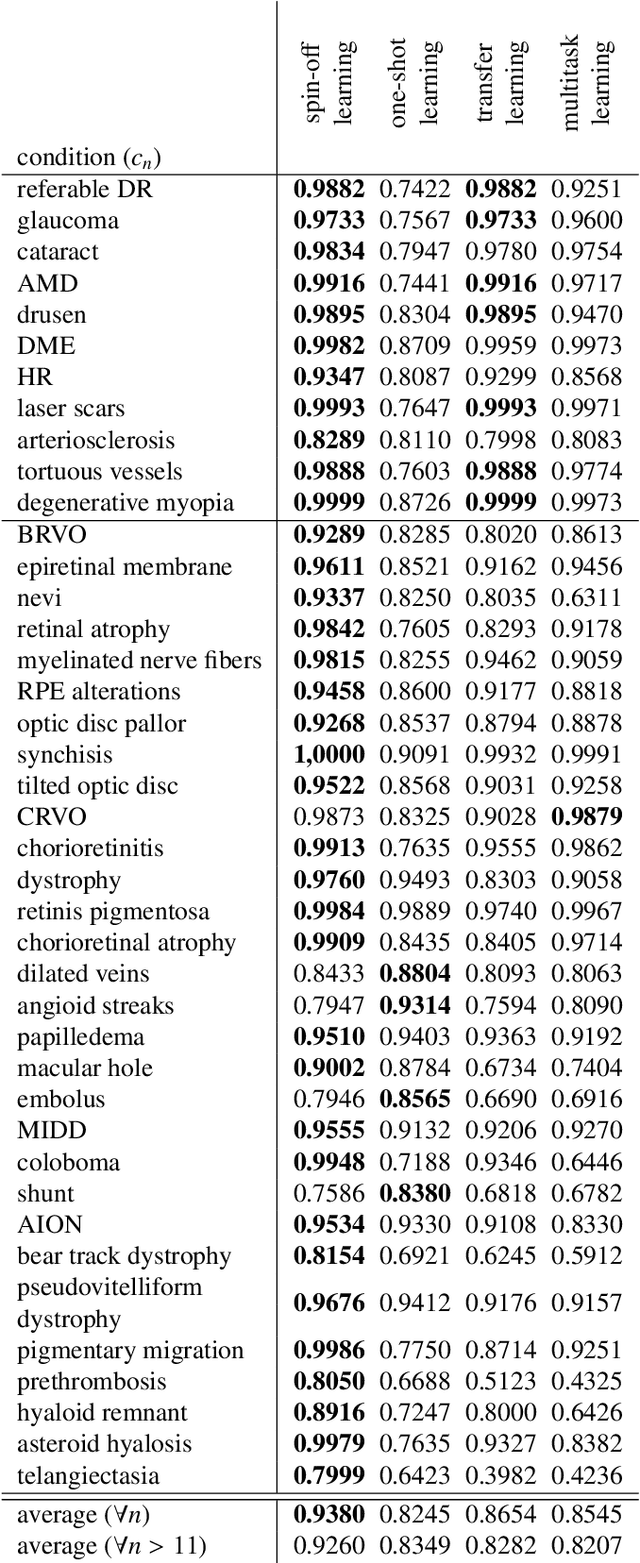

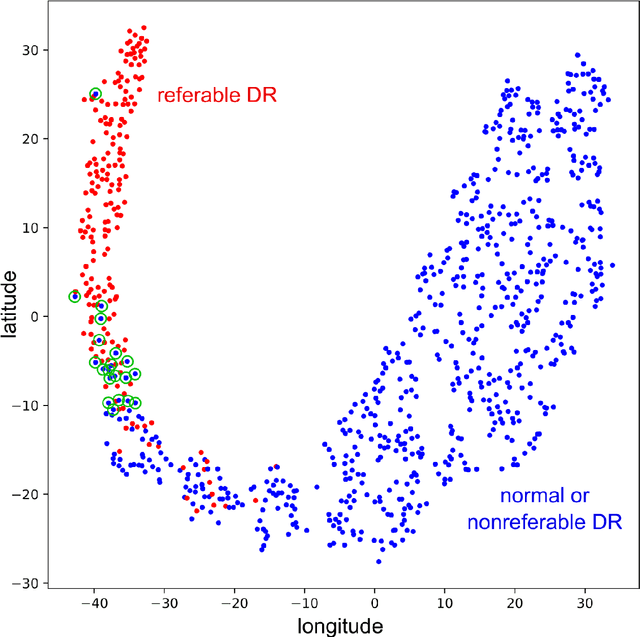

Abstract: In recent decades, large datasets of fundus photographs have been collected in diabetic retinopathy (DR) screening networks. Through deep learning, these datasets were used to train automatic detectors for DR and a few other frequent pathologies, with the goal of automating screening. One challenge has so far limited the adoption of such systems: automatic detectors ignore rare conditions that ophthalmologists currently detect. The reason is that standard deep learning requires too many examples of these conditions. To address this limitation, we propose a new machine learning (ML) framework, called spin-off learning, for the automatic detection of rare conditions, like papilledema or anterior ischemic optic neuropathy. This framework extends convolutional neural networks (CNNs), trained for frequent conditions, with an unsupervised probabilistic model for rare condition detection. Spin-off learning is based on the observation that CNNs often perceive photographs containing the same anomalies as similar, even though these CNNs were trained to detect unrelated conditions. This observation was based on the t-SNE visualization tool, which we decided to incorporate in our probabilistic model. Spin-off learning supports heatmap generation, so the detected anomalies can be highlighted in images for decision support. Experiments on a dataset of 164,660 screening examinations from the OPHDIAT screening network show that spin-off learning can detect 37 conditions, out of 41, with an area under the ROC curve (AUC) greater than 0.8 (average AUC: 0.938). In particular, spin-off learning significantly outperforms other candidate ML frameworks for detecting rare conditions, including multitask learning, transfer learning and one-shot learning. We expect these richer predictions to trigger the adoption of automated eye pathology screening, which will revolutionize clinical practice in ophthalmology.
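
The intuition that images sharing an anomaly end up close together in a learned feature space can be illustrated with a toy sketch. This is not the spin-off learning model itself: it assumes the images have already been embedded as 2-D points (for instance by t-SNE applied to CNN features), and it swaps in a simple Gaussian-kernel neighbour vote as the probabilistic model. The function name `rare_condition_score`, the `bandwidth` parameter, and the coordinates are all hypothetical.

```python
import math

def rare_condition_score(query, points, labels, bandwidth=1.0):
    """Gaussian-kernel-weighted fraction of reference images that carry
    the rare condition, evaluated at the query's embedding coordinates."""
    weighted_positives = 0.0
    total_weight = 0.0
    for point, label in zip(points, labels):
        # Squared Euclidean distance in the (assumed) 2-D embedding.
        dist_sq = sum((a - b) ** 2 for a, b in zip(query, point))
        weight = math.exp(-dist_sq / (2.0 * bandwidth ** 2))
        weighted_positives += weight * label
        total_weight += weight
    return weighted_positives / total_weight if total_weight > 0 else 0.0

# Hypothetical embedding: four common images near the origin,
# two images with the rare condition near (5, 5).
points = [(0.0, 0.1), (0.2, -0.1), (-0.1, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9)]
labels = [0, 0, 0, 0, 1, 1]

near_rare = rare_condition_score((5.1, 5.0), points, labels)
near_common = rare_condition_score((0.0, 0.0), points, labels)
print(near_rare > near_common)
```

A query that lands next to the two rare-condition examples scores close to 1, while a query near the common cluster scores close to 0, even though only two positive examples are available.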

Instant automatic diagnosis of diabetic retinopathy

Jun 12, 2019

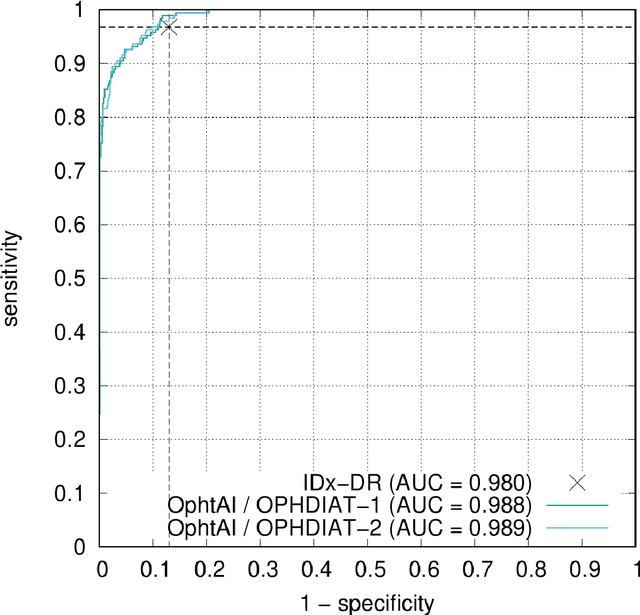

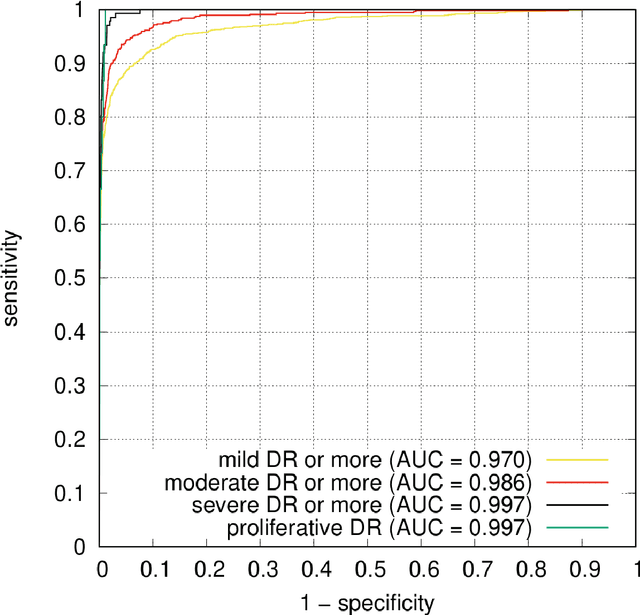

Abstract: The purpose of this study is to evaluate the performance of the OphtAI system for the automatic detection of referable diabetic retinopathy (DR) and the automatic assessment of DR severity using color fundus photography. OphtAI relies on ensembles of convolutional neural networks trained to recognize eye laterality, detect referable DR and assess DR severity. The system can either process single images or full examination records. To document the automatic diagnoses, accurate heatmaps are generated. The system was developed and validated using a dataset of 763,848 images from 164,660 screening procedures from the OPHDIAT screening program. For comparison purposes, it was also evaluated on the public Messidor-2 dataset. Referable DR can be detected with an area under the ROC curve (AUC) of 0.989 (95% CI: 0.984-0.994) on the Messidor-2 dataset, using the University of Iowa's reference standard. This is significantly better than the only AI system authorized by the FDA, evaluated in the exact same conditions (AUC = 0.980). OphtAI can also detect vision-threatening DR with an AUC of 0.997 (95% CI: 0.996-0.998) and proliferative DR with an AUC of 0.997 (95% CI: 0.995-0.999). The system runs in 0.3 seconds using a graphics processing unit and in less than 2 seconds without one. OphtAI is safer, faster and more comprehensive than the only AI system authorized by the FDA so far. Instant DR diagnosis is now possible, which is expected to streamline DR screening and to give more diabetic patients easy access to it.
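
For readers unfamiliar with the AUC figures quoted above, the following is a minimal sketch of how an area under the ROC curve can be computed from binary reference labels and model scores. It uses the rank-based definition (the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, with ties counted as one half). The function name `roc_auc` and the toy data are illustrative assumptions, not the evaluation code used in the study, and confidence intervals are not computed here.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))

# Toy example: 1 = referable DR, 0 = non-referable (hypothetical scores).
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(labels, scores))  # 0.75
```

The pairwise loop is quadratic in the number of cases, which is fine for illustration; on datasets the size of OPHDIAT a sort-based implementation would be the practical choice.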
