ExplAIn: Explanatory Artificial Intelligence for Diabetic Retinopathy Diagnosis

Sep 01, 2020

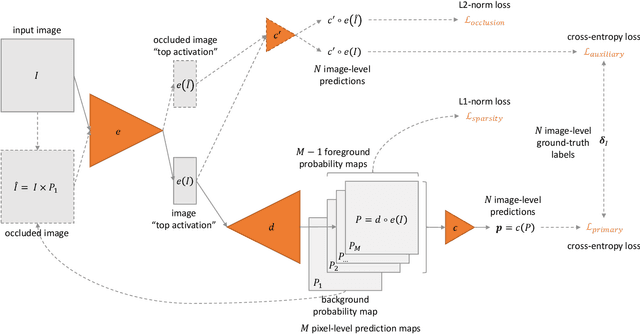

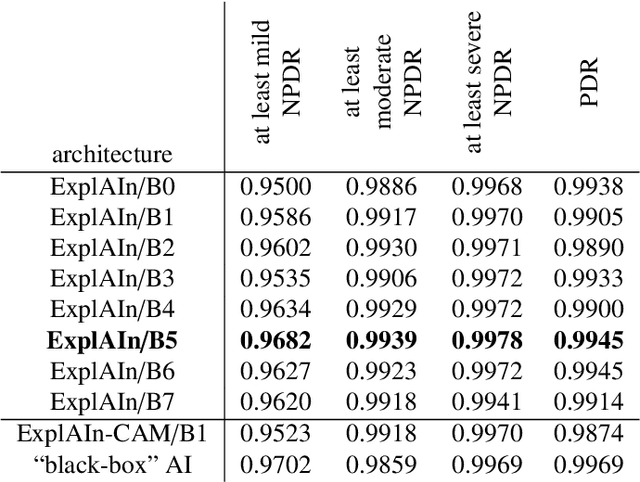

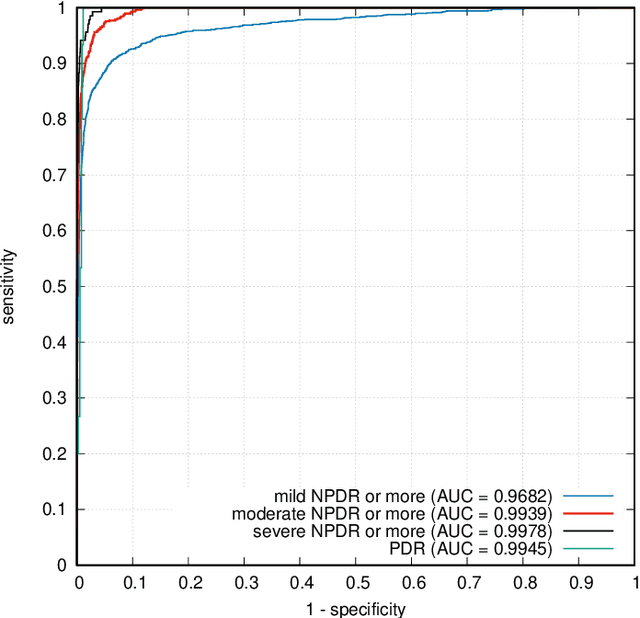

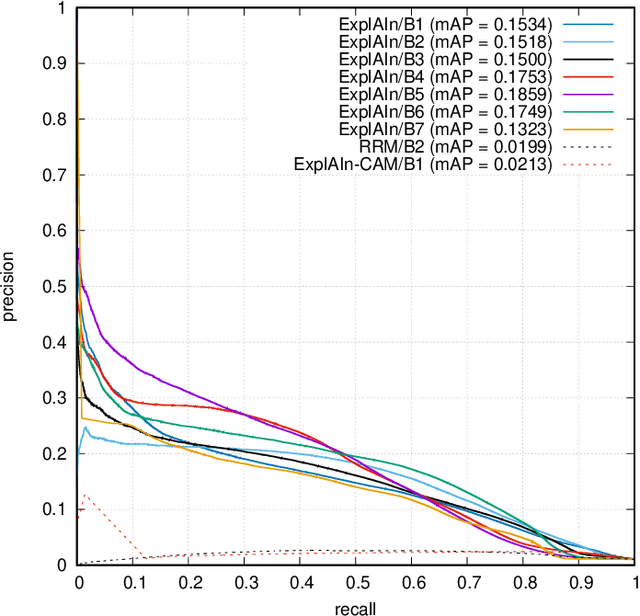

In recent years, Artificial Intelligence (AI) has proven its relevance for medical decision support. However, the "black-box" nature of successful AI algorithms still holds back their widespread deployment. In this paper, we describe an eXplanatory Artificial Intelligence (XAI) algorithm that reaches the same level of performance as black-box AI for the task of classifying Diabetic Retinopathy (DR) severity using Color Fundus Photography (CFP). This algorithm, called ExplAIn, learns to segment and categorize lesions in images; the final image-level classification is derived directly from these multivariate lesion segmentations. The novelty of this explanatory framework is that it is trained end to end, with image-level supervision only, just like black-box AI algorithms: the concepts of lesions and lesion categories emerge by themselves. For improved lesion localization, foreground/background separation is trained through self-supervision, in such a way that occluding foreground pixels transforms the input image into a healthy-looking image. The advantage of such an architecture is that automatic diagnoses can be explained simply by an image and/or a few sentences. ExplAIn is evaluated at the image level and at the pixel level on various CFP image datasets. We expect this new framework, which jointly offers high classification performance and explainability, to facilitate AI deployment.
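To make the data flow described above more concrete, here is a minimal NumPy sketch of the two ideas: an image-level prediction computed only from per-pixel lesion maps, and occlusion of foreground pixels. It is not the paper's implementation. The function names, the max-pooling step, the linear layer with softmax, the 0.5 threshold, and the constant fill value are all assumptions made for illustration; the abstract does not specify any of them, and in the paper the occlusion is learned so that the result looks healthy rather than being a constant fill.

```python
import numpy as np

def image_level_scores(lesion_maps, weights, bias):
    """Derive class probabilities from lesion maps alone (hypothetical scheme).

    lesion_maps: (K, H, W) per-pixel scores in [0, 1] for K lesion categories.
    weights:     (C, K) assumed linear map from pooled evidence to C classes.
    bias:        (C,) assumed bias term.
    """
    # Assumption: summarize each lesion category by its strongest pixel.
    pooled = lesion_maps.reshape(lesion_maps.shape[0], -1).max(axis=1)
    logits = weights @ pooled + bias
    # Numerically stable softmax over the severity classes.
    exp = np.exp(logits - logits.max())
    return pooled, exp / exp.sum()

def occlude_foreground(image, lesion_maps, fill_value, threshold=0.5):
    """Replace pixels flagged as lesions by a constant (hypothetical stand-in).

    image: (H, W, 3) array. A pixel counts as foreground when any lesion
    category exceeds `threshold` there (an assumed rule).
    """
    foreground = lesion_maps.max(axis=0) > threshold
    occluded = image.copy()
    occluded[foreground] = fill_value
    return occluded, foreground
```

Because the class probabilities depend only on `pooled`, each prediction in this sketch can be traced back to the lesion categories and pixels that produced it, which is the property the abstract attributes to the framework.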
