Hugang Ren

DISCOVER: 2-D Multiview Summarization of Optical Coherence Tomography Angiography for Automatic Diabetic Retinopathy Diagnosis

Jan 10, 2024

Abstract: Diabetic Retinopathy (DR), an ocular complication of diabetes, is a leading cause of blindness worldwide. Traditionally, DR is monitored using Color Fundus Photography (CFP), a widespread 2-D imaging modality. However, DR classifications based on CFP have poor predictive power, resulting in suboptimal DR management. Optical Coherence Tomography Angiography (OCTA) is a recent 3-D imaging modality offering enhanced structural and functional information (blood flow) with a wider field of view. This paper investigates automatic DR severity assessment using 3-D OCTA. A straightforward solution to this task is a 3-D neural network classifier. However, 3-D architectures have numerous parameters and typically require many training samples. A lighter solution consists in using 2-D neural network classifiers processing 2-D en-face (or frontal) projections and/or 2-D cross-sectional slices. Such an approach mimics the way ophthalmologists analyze OCTA acquisitions: 1) en-face flow maps are often used to detect avascular zones and neovascularization, and 2) cross-sectional slices are commonly analyzed to detect macular edemas, for instance. However, arbitrary data reduction or selection might result in information loss. Two complementary strategies are thus proposed to optimally summarize OCTA volumes with 2-D images: 1) a parametric en-face projection optimized through deep learning and 2) a cross-sectional slice selection process controlled through gradient-based attribution. The full summarization and DR classification pipeline is trained from end to end. The automatic 2-D summary can be displayed in a viewer or printed in a report to support the decision. We show that the proposed 2-D summarization and classification pipeline outperforms direct 3-D classification with the advantage of improved interpretability.
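The abstract does not say how the en-face projection is parameterized. As a rough illustration only, the sketch below assumes one learnable logit per depth position, turned into weights with a softmax, so that the 2-D image is a weighted average of the volume along depth. The names `parametric_en_face` and `depth_logits` are hypothetical and do not come from the paper. With all logits equal, the sketch reduces to the usual mean projection.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D array.
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def parametric_en_face(volume, depth_logits):
    """Collapse a (depth, height, width) volume into a (height, width) image.

    Assumption for illustration: one weight per depth position; in a trained
    pipeline these logits would be optimized jointly with the classifier.
    """
    weights = softmax(depth_logits)                    # shape (depth,)
    return np.tensordot(weights, volume, axes=(0, 0))  # shape (height, width)

rng = np.random.default_rng(0)
volume = rng.random((8, 4, 5))                         # toy volume, not real OCTA data
uniform = parametric_en_face(volume, np.zeros(8))      # equal weights
```

Equal logits give a plain average over depth, which is one of the fixed data reductions the abstract describes as potentially losing information; learned logits would instead emphasize the depths most useful to the classifier.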

Multimodal Information Fusion For The Diagnosis Of Diabetic Retinopathy

Mar 20, 2023

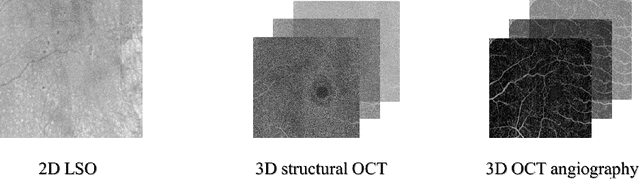

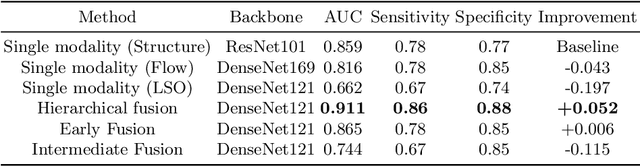

Abstract: Diabetes is a chronic disease characterized by excess sugar in the blood and affects 422 million people worldwide, including 3.3 million in France. One of the frequent complications of diabetes is diabetic retinopathy (DR): it is the leading cause of blindness in the working population of developed countries. As a result, ophthalmology is on the verge of a revolution in the screening, diagnosis, and management of pathologies. This upheaval is led by the arrival of technologies based on artificial intelligence. The "Evaluation intelligente de la rétinopathie diabétique" (EviRed) project uses artificial intelligence to answer a medical need: replacing the current classification of diabetic retinopathy, which is mainly based on outdated fundus photography and provides insufficient prediction precision. EviRed exploits modern fundus imaging devices and artificial intelligence to properly integrate the vast amount of data they provide with other available medical data of the patient. The goal is to improve diagnosis and prediction and help ophthalmologists make better decisions during diabetic retinopathy follow-up. In this study, we investigate the fusion of different modalities acquired simultaneously with a PLEXElite 9000 (Carl Zeiss Meditec Inc., Dublin, California, USA), namely 3-D structural optical coherence tomography (OCT), 3-D OCT angiography (OCTA) and 2-D Line Scanning Ophthalmoscope (LSO), for the automatic detection of proliferative DR.

Multimodal Information Fusion for Glaucoma and DR Classification

Sep 05, 2022

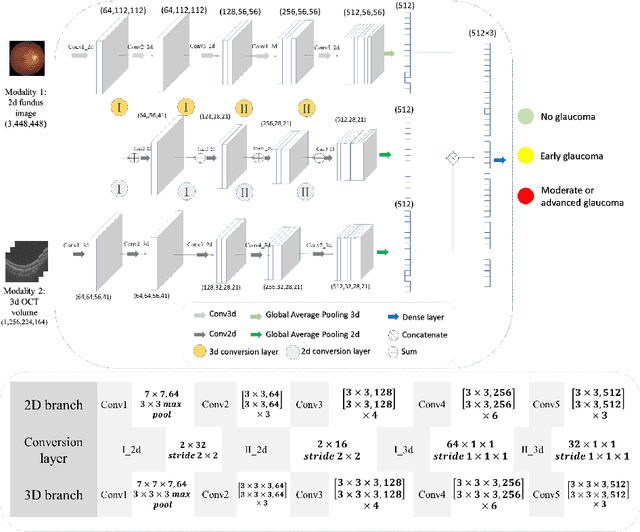

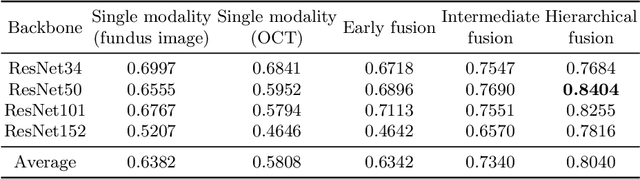

Abstract: Multimodal information is frequently available in medical tasks. By combining information from multiple sources, clinicians are able to make more accurate judgments. In recent years, multiple imaging techniques have been used in clinical practice for retinal analysis: 2D fundus photographs, 3D optical coherence tomography (OCT), 3D OCT angiography, etc. Our paper investigates three multimodal information fusion strategies based on deep learning to solve retinal analysis tasks: early fusion, intermediate fusion, and hierarchical fusion. The commonly used early and intermediate fusions are simple but do not fully exploit the complementary information between modalities. We developed a hierarchical fusion approach that focuses on combining features across multiple dimensions of the network, as well as exploring the correlation between modalities. These approaches were applied to glaucoma and diabetic retinopathy classification, using the public GAMMA dataset (fundus photographs and OCT) and a private dataset of PlexElite 9000 (Carl Zeiss Meditec Inc.) OCT angiography acquisitions, respectively. Our hierarchical fusion method performed the best in both cases and paved the way for better clinical diagnosis.
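The difference between the two baseline strategies can be sketched with toy feature vectors. This is a minimal illustration under assumptions, not the paper's architecture: `encode` is a hypothetical single linear layer with ReLU standing in for a deep encoder, and the array sizes are arbitrary. Early fusion concatenates the modalities before one shared encoder; intermediate fusion encodes each modality separately and concatenates the resulting features. The hierarchical approach is not sketched because the abstract does not give enough detail.

```python
import numpy as np

def encode(x, weights):
    # Hypothetical stand-in for a deep encoder: one linear layer plus ReLU.
    return np.maximum(x @ weights, 0.0)

def early_fusion(x_a, x_b, w_joint):
    # Concatenate raw inputs first, then apply a single shared encoder.
    return encode(np.concatenate([x_a, x_b], axis=-1), w_joint)

def intermediate_fusion(x_a, x_b, w_a, w_b):
    # Encode each modality on its own, then concatenate the features.
    return np.concatenate([encode(x_a, w_a), encode(x_b, w_b)], axis=-1)

rng = np.random.default_rng(0)
x_fundus = rng.random((2, 6))     # toy batch: 2 samples, 6 "fundus" features
x_oct = rng.random((2, 10))       # toy batch: 2 samples, 10 "OCT" features

early = early_fusion(x_fundus, x_oct, rng.standard_normal((16, 4)))
inter = intermediate_fusion(
    x_fundus, x_oct,
    rng.standard_normal((6, 4)), rng.standard_normal((10, 4)),
)
```

In both cases a classification head would follow the fused features; the only difference shown here is where in the network the modalities meet.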
