Gunmin Lee

Safe CoR: A Dual-Expert Approach to Integrating Imitation Learning and Safe Reinforcement Learning Using Constraint Rewards

Jul 02, 2024

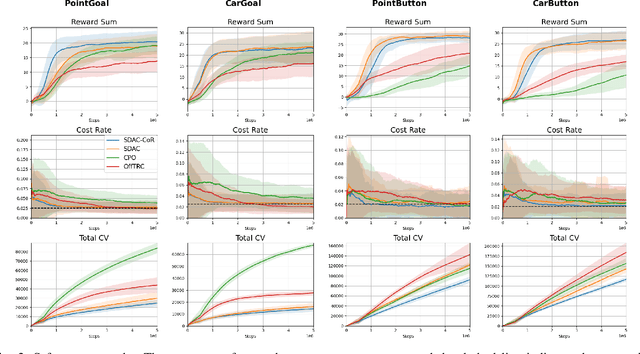

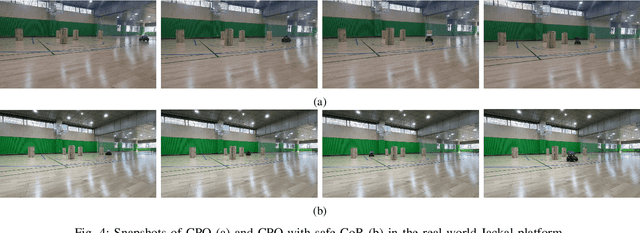

Abstract: In the realm of autonomous agents, ensuring safety and reliability in complex and dynamic environments remains a paramount challenge. Safe reinforcement learning addresses these concerns by introducing safety constraints, but still faces challenges in navigating intricate environments such as complex driving situations. To overcome these challenges, we present the safe constraint reward (Safe CoR) framework, a novel method that utilizes two types of expert demonstrations: reward expert demonstrations focusing on performance optimization and safe expert demonstrations prioritizing safety. By exploiting a constraint reward (CoR), our framework guides the agent to balance the performance goal of maximizing the reward sum with safety constraints. We test the proposed framework in diverse environments, including Safety Gym, MetaDrive, and the real-world Jackal platform. Our proposed framework enhances the performance of algorithms by 39% and reduces constraint violations by 88% on the real-world Jackal platform, demonstrating the framework's efficacy. Through this innovative approach, we expect significant advancements in real-world performance, leading to transformative effects in the realm of safe and reliable autonomous agents.

Semi-Supervised Imitation Learning with Mixed Qualities of Demonstrations for Autonomous Driving

Sep 23, 2021

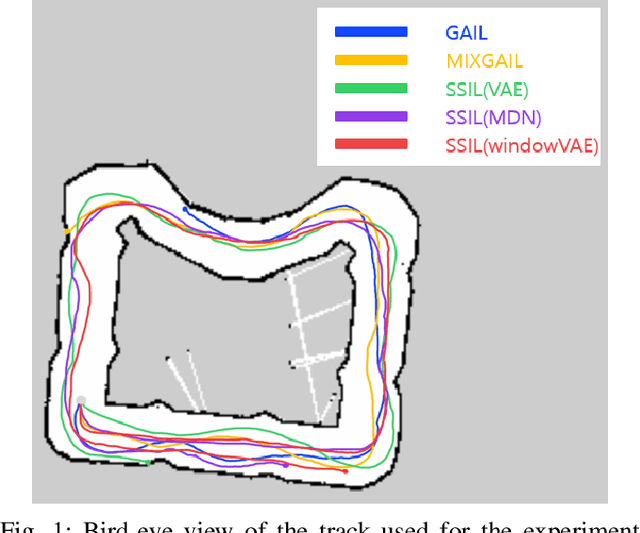

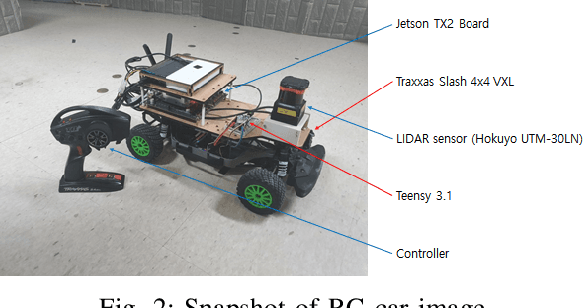

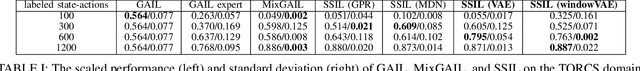

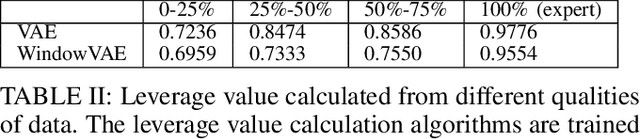

Abstract: In this paper, we consider the problem of autonomous driving using imitation learning in a semi-supervised manner. In particular, both labeled and unlabeled demonstrations are leveraged during training by estimating the quality of each unlabeled demonstration. If the provided demonstrations are corrupted and have a low signal-to-noise ratio, the performance of the imitation learning agent can be degraded significantly. To mitigate this problem, we propose a method called semi-supervised imitation learning (SSIL). SSIL first learns to discriminate and evaluate the reliability of each state-action pair in the unlabeled demonstrations by assigning higher reliability values to demonstrations similar to the labeled expert demonstrations. This reliability value is called leverage. After this discrimination process, both labeled demonstrations and unlabeled demonstrations with estimated leverage values are utilized to train the policy in a semi-supervised manner. The experimental results demonstrate the validity of the proposed algorithm using unlabeled trajectories of mixed quality. Moreover, hardware experiments using an RC car are conducted to show that the proposed method can be applied to real-world applications.
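The leverage idea in the abstract above can be illustrated with a minimal sketch. It is not the SSIL algorithm itself: the abstract does not say how leverage is learned, so the Gaussian-kernel score, the `bandwidth` parameter, the scalar states and actions, and the function names `leverage` and `weighted_bc_loss` are all assumptions made for illustration. The sketch only shows how per-pair reliability values could down-weight unlabeled state-action pairs in a behavior-cloning loss.

```python
import math


def leverage(pair, expert_pairs, bandwidth=1.0):
    """Hypothetical reliability score in (0, 1].

    Assumption: a Gaussian kernel on the squared distance to the nearest
    labeled expert (state, action) pair. SSIL learns this value instead.
    """
    nearest_sq = min(
        sum((a - b) ** 2 for a, b in zip(pair, expert))
        for expert in expert_pairs
    )
    return math.exp(-nearest_sq / (2.0 * bandwidth ** 2))


def weighted_bc_loss(policy, labeled, unlabeled, weights):
    """Weighted mean squared error between policy(state) and action.

    Labeled expert pairs get weight 1; each unlabeled pair gets its
    estimated leverage as its weight.
    """
    weighted_pairs = [(p, 1.0) for p in labeled] + list(zip(unlabeled, weights))
    total = 0.0
    norm = 0.0
    for (state, action), w in weighted_pairs:
        total += w * (policy(state) - action) ** 2
        norm += w
    return total / norm


# Toy data: scalar states and actions (an assumption, for brevity).
labeled = [(0.0, 0.0), (1.0, 1.0)]      # expert pairs
unlabeled = [(0.5, 0.5), (0.5, 3.0)]    # second pair is far from the expert data
weights = [leverage(p, labeled) for p in unlabeled]
loss = weighted_bc_loss(lambda s: s, labeled, unlabeled, weights)
```

In this toy setup the second unlabeled pair receives a much smaller weight than the first, so it contributes less to the loss than it would under uniform weighting.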

Towards Defensive Autonomous Driving: Collecting and Probing Driving Demonstrations of Mixed Qualities

Sep 18, 2021

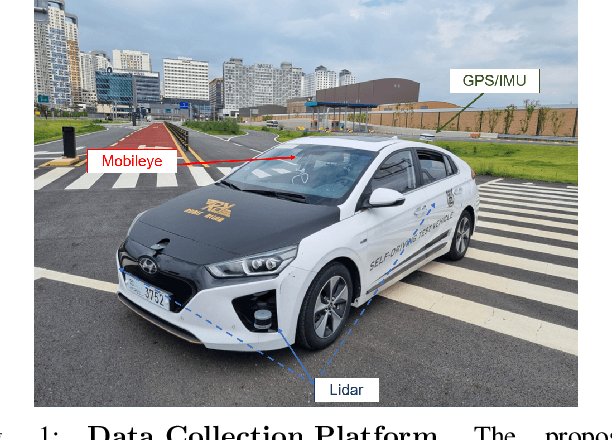

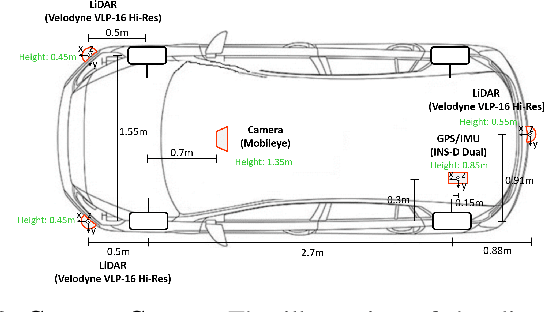

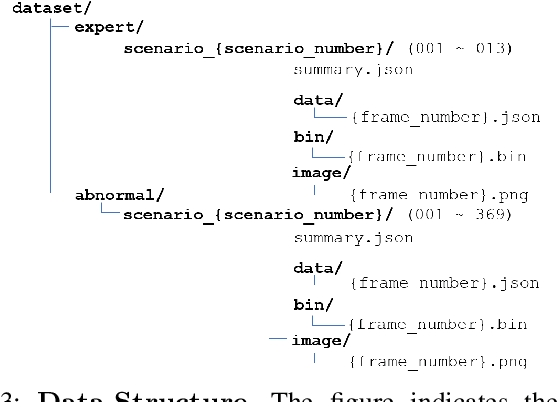

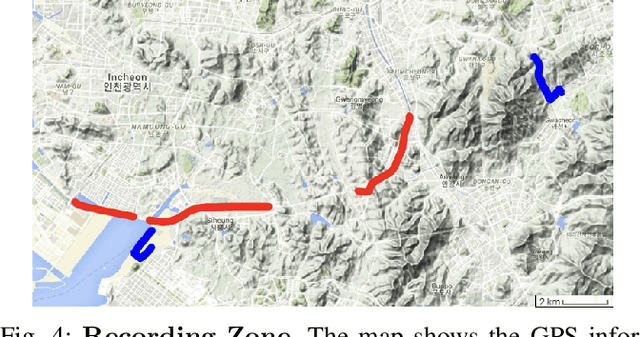

Abstract: Designing or learning an autonomous driving policy is undoubtedly a challenging task, as the policy has to maintain its safety in all corner cases. To secure safety in autonomous driving, the ability to detect hazardous situations, which can be seen as an out-of-distribution (OOD) detection problem, becomes crucial. However, most conventional datasets only provide expert driving demonstrations, although some non-expert or uncommon driving behavior data are needed to implement a safety-guaranteed autonomous driving platform. To this end, we present a novel dataset called the R3 Driving Dataset, composed of driving data of different qualities. The dataset categorizes abnormal driving behaviors into eight categories and 369 different detailed situations. The situations include dangerous lane changes and near-collision situations. To shed further light on how these abnormal driving behaviors can be detected, we apply different uncertainty estimation and anomaly detection methods to the proposed dataset. The experimental results suggest that, by using both uncertainty estimation and anomaly detection, most of the abnormal cases in the proposed dataset can be discriminated. The dataset of this paper can be downloaded from https://rllab-snu.github.io/projects/R3-Driving-Dataset/doc.html.
