Guanlan Zhang

Master Rules from Chaos: Learning to Reason, Plan, and Interact from Chaos for Tangram Assembly

May 17, 2025

Abstract:Tangram assembly, the art of human intelligence and manipulation dexterity, is a new challenge for robotics and reveals the limitations of state-of-the-art methods. Here, we describe our initial exploration and highlight key problems in reasoning, planning, and manipulation for robotic tangram assembly. We present MRChaos (Master Rules from Chaos), a robust and general solution for learning assembly policies that can generalize to novel objects. In contrast to conventional methods based on prior geometric and kinematic models, MRChaos learns to assemble randomly generated objects through self-exploration in simulation without prior experience in assembling target objects. The reward signal is obtained from the visual observation change without manually designed models or annotations. MRChaos retains its robustness in assembling various novel tangram objects that have never been encountered during training, with only silhouette prompts. We show the potential of MRChaos in wider applications such as cutlery combinations. The presented work indicates that radical generalization in robotic assembly can be achieved by learning in much simpler domains.
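To make the idea of a reward derived from the change in visual observation concrete, here is a minimal sketch that scores an action by how much it increases the overlap between the observed silhouette and the target silhouette. The IoU measure, the binary-grid representation, and the names `iou` and `observation_change_reward` are assumptions chosen for illustration; the abstract does not specify how MRChaos actually computes its reward.

```python
from typing import List

# Assumption: a silhouette is a binary grid, 1 = occupied pixel, 0 = empty.
Mask = List[List[int]]


def iou(a: Mask, b: Mask) -> float:
    """Intersection over union of two equally sized binary masks."""
    inter = 0
    union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            if pa and pb:
                inter += 1
            if pa or pb:
                union += 1
    # Two empty masks are treated as a perfect match.
    return inter / union if union else 1.0


def observation_change_reward(before: Mask, after: Mask, target: Mask) -> float:
    """Hypothetical reward: gain in silhouette overlap caused by one action."""
    return iou(after, target) - iou(before, target)


target = [[1, 1], [0, 0]]
before = [[1, 0], [0, 1]]  # one piece is misplaced
after = [[1, 1], [0, 0]]   # the action moved it into place
reward = observation_change_reward(before, after, target)
```

A positive value means the action brought the scene closer to the silhouette prompt, and a negative value means it moved further away. No geometric model or manual annotation is needed, only the two observations and the prompt.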

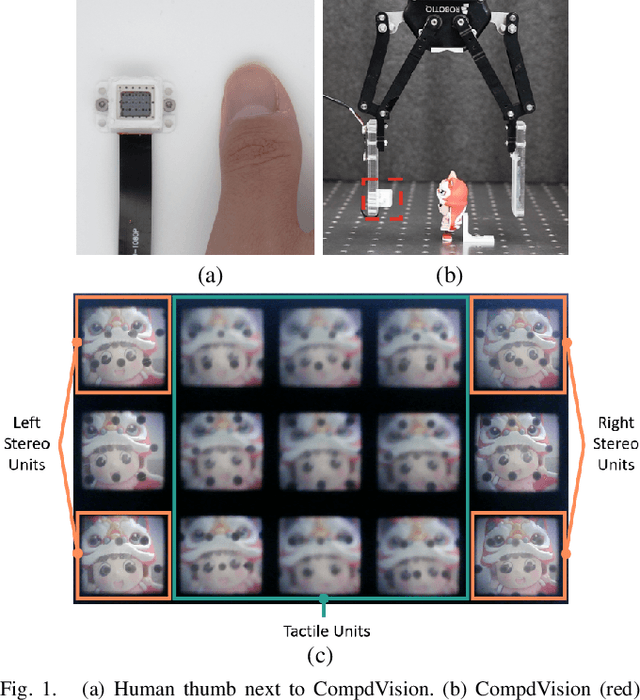

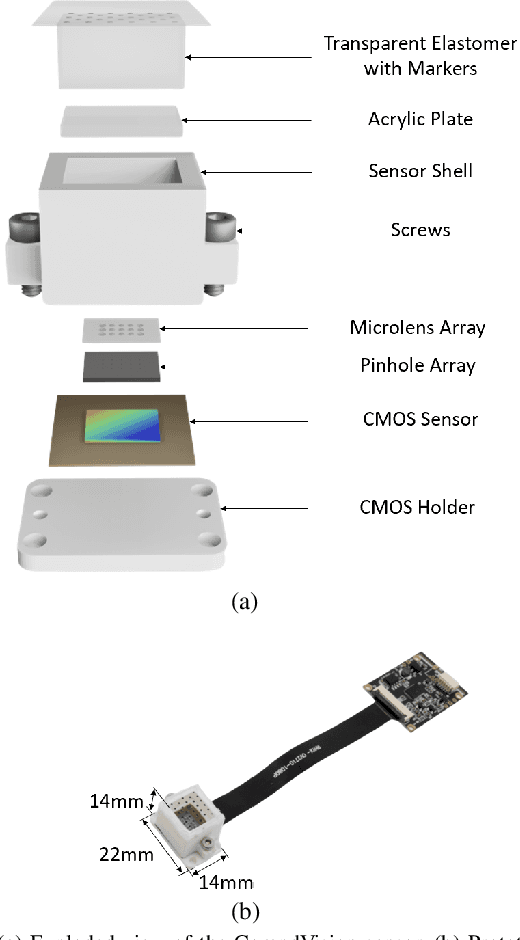

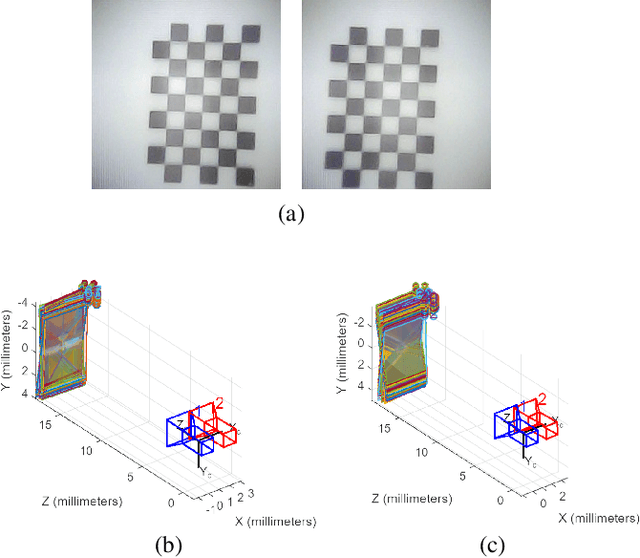

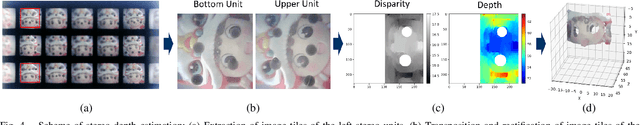

CompdVision: Combining Near-Field 3D Visual and Tactile Sensing Using a Compact Compound-Eye Imaging System

Dec 12, 2023

Abstract:As automation technologies advance, the need for compact and multi-modal sensors in robotic applications is growing. To address this demand, we introduce CompdVision, a novel sensor that combines near-field 3D visual and tactile sensing. This sensor, with dimensions of 22$\times$14$\times$14 mm, leverages the compound eye imaging system to achieve a compact form factor without compromising its dual modalities. CompdVision utilizes two types of vision units to meet diverse sensing requirements. Stereo units with far-focus lenses can see through the transparent elastomer, facilitating depth estimation beyond the contact surface, while tactile units with near-focus lenses track the movement of markers embedded in the elastomer to obtain contact deformation. Experimental results validate the sensor's superior performance in 3D visual and tactile sensing. The sensor demonstrates effective depth estimation within a 70 mm range from its surface. Additionally, it registers high accuracy in tangential and normal force measurements. The dual modalities and compact design make the sensor a versatile tool for complex robotic tasks.
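For readers unfamiliar with stereo depth estimation, the textbook relation for a rectified pinhole stereo pair is depth = focal length × baseline / disparity. The sketch below applies that relation. It is not taken from the CompdVision paper: the function name `depth_from_disparity` and the numeric values for focal length, baseline, and disparity are hypothetical, and the sensor's actual calibration and matching pipeline may differ.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_mm: float) -> float:
    """Depth (mm) of a point seen by a rectified stereo pair.

    disparity_px: horizontal pixel shift of the point between the two views
    focal_px:     focal length expressed in pixels
    baseline_mm:  distance between the two optical centres in millimetres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_mm / disparity_px


# Hypothetical numbers, chosen only to illustrate the arithmetic.
depth_mm = depth_from_disparity(disparity_px=10.0, focal_px=350.0, baseline_mm=2.0)
```

Because depth is inversely proportional to disparity, a short baseline, as in any compact sensor, makes disparity shrink quickly with distance, which is one reason stereo depth is typically reliable only over a limited working range.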

A Thin Format Vision-Based Tactile Sensor with A Micro Lens Array

Apr 19, 2022

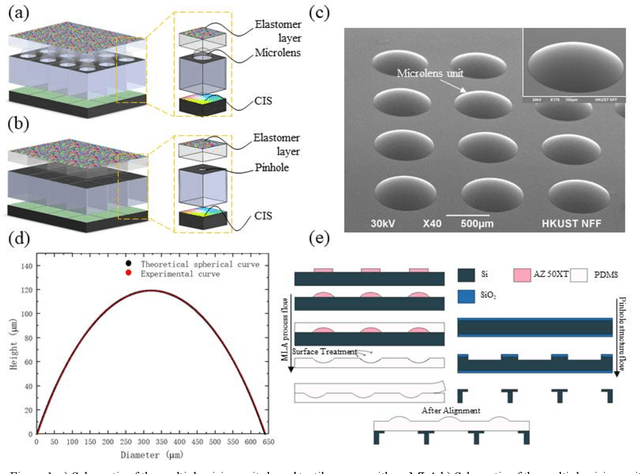

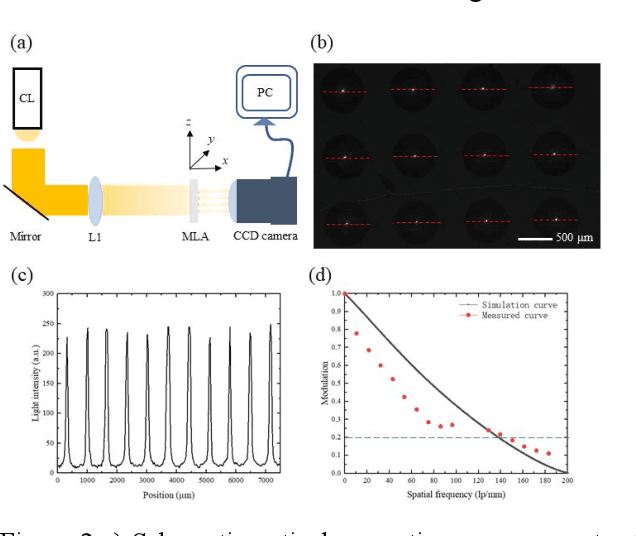

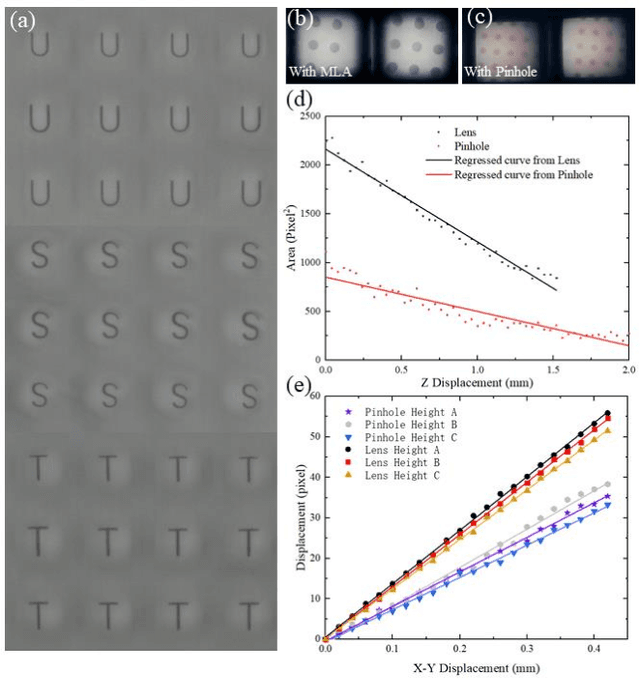

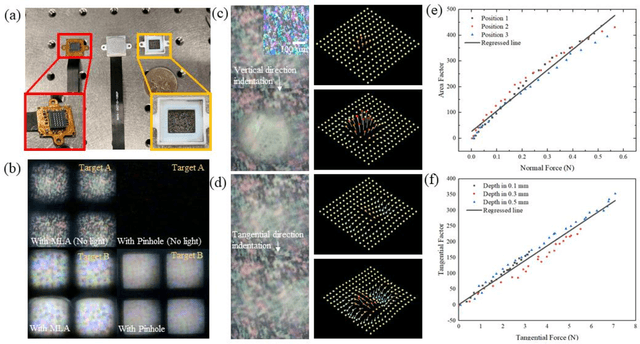

Abstract:Vision-based tactile sensors have been widely studied in the robotics field for high spatial resolution and compatibility with machine learning algorithms. However, the currently employed sensor's imaging system is bulky, limiting its further application. Here we present a micro lens array (MLA) based vision system to achieve a low thickness format of the sensor package with high tactile sensing performance. Multiple micromachined micro lens units cover the whole elastic touching layer and provide a stitched clear tactile image, enabling high spatial resolution with a thin thickness of 5 mm. The thermal reflow and soft lithography method ensure the uniform spherical profile and smooth surface of the micro lenses. Both optical and mechanical characterization demonstrated the sensor's stable imaging and excellent tactile sensing, enabling precise 3D tactile information, such as displacement mapping and force distribution with an ultra compact-thin structure.

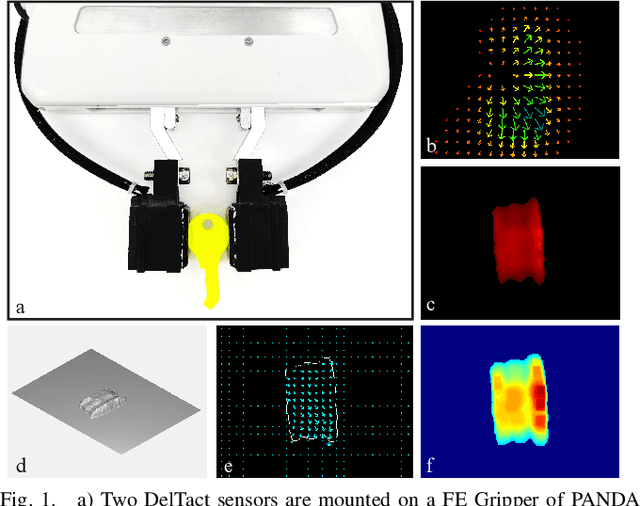

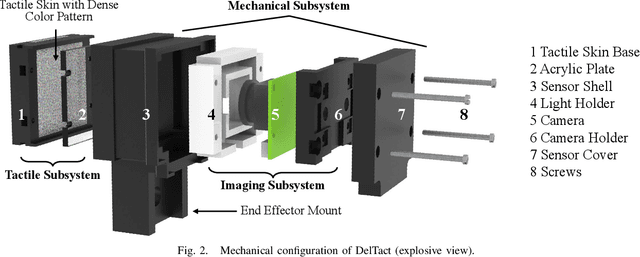

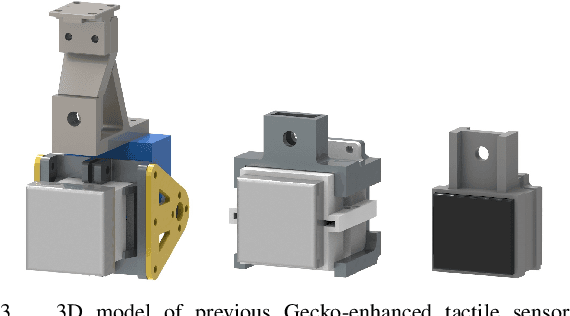

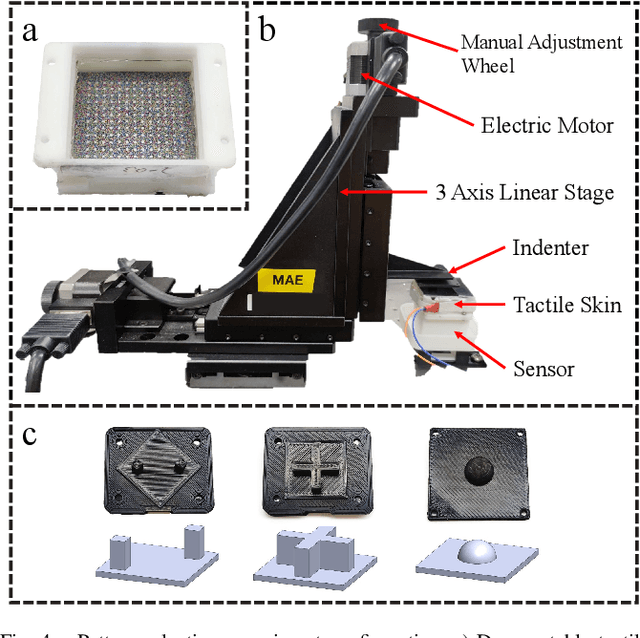

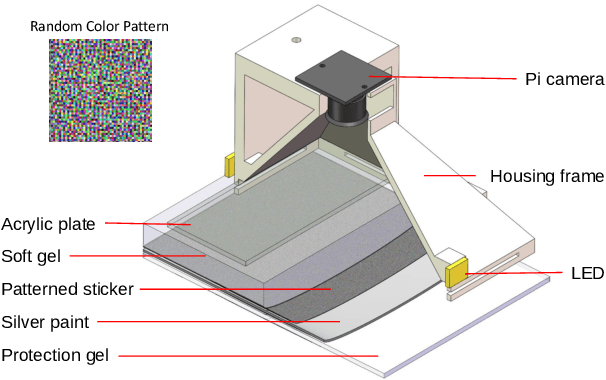

DelTact: A Vision-based Tactile Sensor Using Dense Color Pattern

Feb 15, 2022

Abstract:Tactile sensing is an essential perception for robots to complete dexterous tasks. As a promising tactile sensing technique, vision-based tactile sensors have been developed to improve robot performance in manipulation and grasping. Here we propose a new design of vision-based tactile sensor, DelTact, with its high-resolution sensing abilities of surface contact measurement. The sensor uses a modular hardware architecture for compactness whilst maintaining a robust overall design. Moreover, it adopts an improved dense random color pattern based on the previous version to achieve high accuracy of contact deformation tracking. In particular, we optimize the color pattern generation process and select the appropriate pattern for coordinating with a dense optical flow algorithm in a real-world experimental sensory setting using various objects for contact. The optical flow obtained from the raw image is processed to determine shape and force distribution on the contact surface. This sensor can be easily integrated with a parallel gripper where experimental results using qualitative and quantitative analysis demonstrate that the sensor is capable of providing tactile measurements with high temporal and spatial resolution.

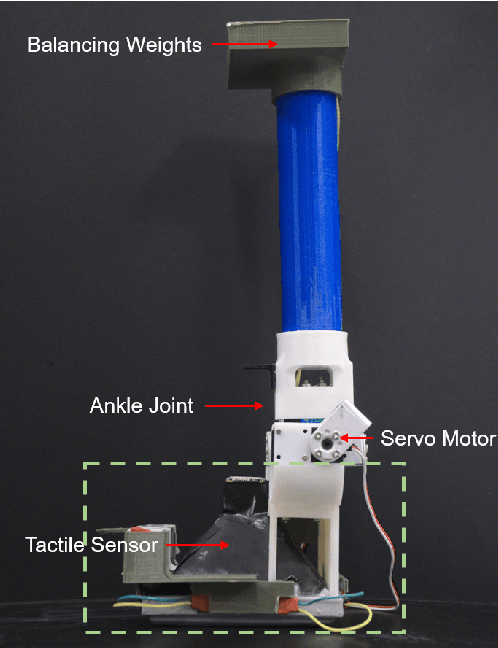

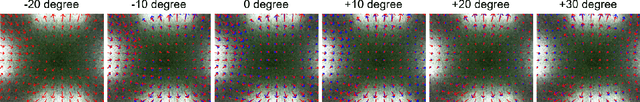

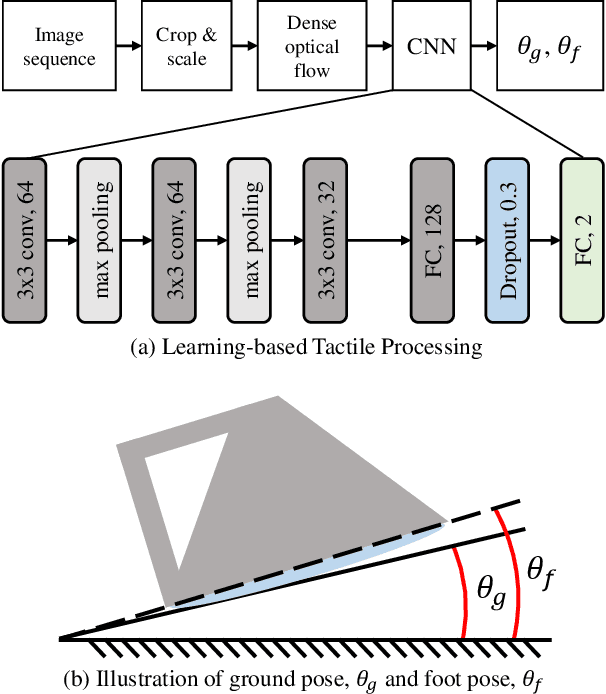

A Tactile Sensing Foot for Single Robot Leg Stabilization

Mar 26, 2021

Abstract:Tactile sensing on human feet is crucial for motion control, but it has not been explored in robotic counterparts. This work is dedicated to endowing legged robots' feet with tactile sensing and showing that a single-legged robot can be stabilized with only tactile sensing signals from its foot. We propose a robot leg with a novel vision-based tactile sensing foot system and implement a processing algorithm to extract contact information for feedback control in stabilizing tasks. A pipeline to convert images of the foot skin into high-level contact information using a deep learning framework is presented. The leg was quantitatively evaluated in a stabilization task on a tilting surface to show that the tactile foot was able to estimate both the surface tilting angle and the foot poses. Feasibility and effectiveness of the tactile system were investigated qualitatively in comparison with conventional single-legged robotic systems using inertial measurement units (IMUs). Experiments demonstrate the capability of vision-based tactile sensors in assisting legged robots to maintain stability on unknown terrains and the potential for regulating more complex motions for humanoid robots.

* 7 pages, 9 figures, ICRA2021