Chunli Jiang

Master Rules from Chaos: Learning to Reason, Plan, and Interact from Chaos for Tangram Assembly

May 17, 2025

Abstract:Tangram assembly, the art of human intelligence and manipulation dexterity, is a new challenge for robotics and reveals the limitations of state-of-the-art methods. Here, we describe our initial exploration and highlight key problems in reasoning, planning, and manipulation for robotic tangram assembly. We present MRChaos (Master Rules from Chaos), a robust and general solution for learning assembly policies that can generalize to novel objects. In contrast to conventional methods based on prior geometric and kinematic models, MRChaos learns to assemble randomly generated objects through self-exploration in simulation without prior experience in assembling target objects. The reward signal is obtained from the visual observation change without manually designed models or annotations. MRChaos retains its robustness in assembling various novel tangram objects that have never been encountered during training, with only silhouette prompts. We show the potential of MRChaos in wider applications such as cutlery combinations. The presented work indicates that radical generalization in robotic assembly can be achieved by learning in much simpler domains.
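The abstract says the reward comes from the change in visual observation and that only a silhouette prompt is given at test time, but it does not spell out the formula. As a rough illustration only, one way such a signal could be built is to score how much an action improves the overlap between the observed silhouette and the target silhouette. The IoU measure, the function names `iou` and `visual_change_reward`, and the tiny binary masks below are all assumptions made for this sketch, not the MRChaos implementation.

```python
from typing import List

Mask = List[List[int]]  # binary image: 1 = object pixel, 0 = background


def iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two equally sized binary masks."""
    inter = 0
    union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += 1 if (pa and pb) else 0
            union += 1 if (pa or pb) else 0
    # Two empty masks are treated as a perfect match.
    return inter / union if union else 1.0


def visual_change_reward(before: Mask, after: Mask, target: Mask) -> float:
    """Hypothetical reward: improvement in silhouette overlap caused by one action."""
    return iou(after, target) - iou(before, target)


# Toy 2x2 example (assumed data): the action covers one more target pixel.
target = [[1, 1], [1, 1]]
before = [[1, 0], [0, 0]]
after = [[1, 1], [0, 0]]
reward = visual_change_reward(before, after, target)
```

A difference-based reward of this kind is positive when an action moves the scene closer to the silhouette and negative when it moves it away, which is one reason it needs no hand-written geometric model.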

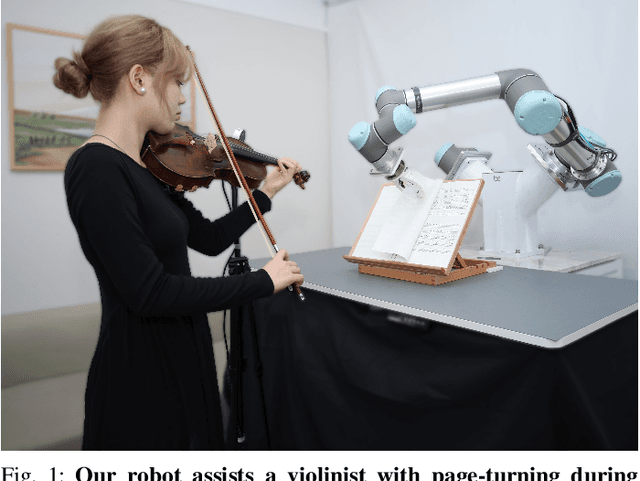

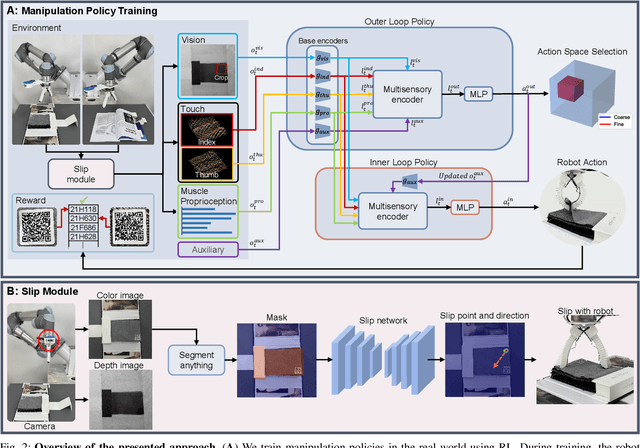

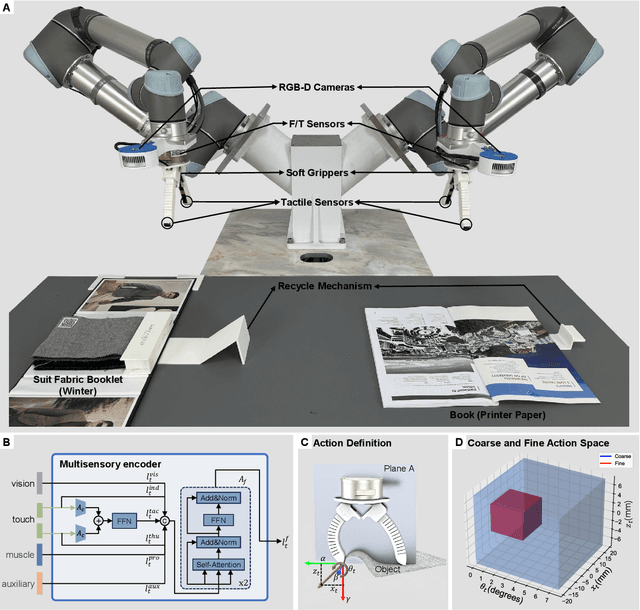

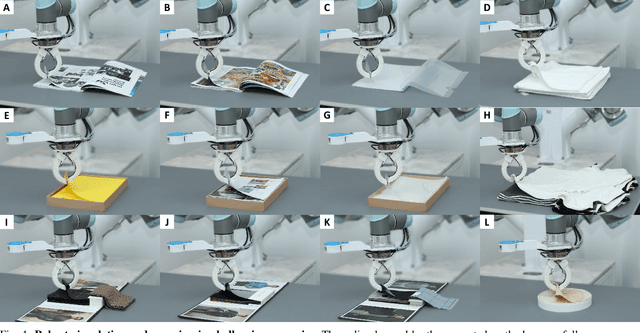

Learning thin deformable object manipulation with a multi-sensory integrated soft hand

Nov 21, 2024

Abstract:Robotic manipulation has made significant advancements, with systems demonstrating high precision and repeatability. However, this remarkable precision often fails to translate into efficient manipulation of thin deformable objects. Current robotic systems lack imprecise dexterity, the ability to perform dexterous manipulation through robust and adaptive behaviors that do not rely on precise control. This paper explores the singulation and grasping of thin, deformable objects. Here, we propose a novel solution that incorporates passive compliance, touch, and proprioception into thin, deformable object manipulation. Our system employs a soft, underactuated hand that provides passive compliance, facilitating adaptive and gentle interactions to dexterously manipulate deformable objects without requiring precise control. The tactile and force/torque sensors equipped on the hand, along with a depth camera, gather sensory data required for manipulation via the proposed slip module. The manipulation policies are learned directly from raw sensory data via model-free reinforcement learning, bypassing explicit environmental and object modeling. We implement a hierarchical double-loop learning process to enhance learning efficiency by decoupling the action space. Our method was deployed on real-world robots and trained in a self-supervised manner. The resulting policy was tested on a variety of challenging tasks that were beyond the capabilities of prior studies, ranging from displaying suit fabric like a salesperson to turning pages of sheet music for violinists.

Flipbot: Learning Continuous Paper Flipping via Coarse-to-Fine Exteroceptive-Proprioceptive Exploration

Apr 05, 2023

Abstract:This paper tackles the task of singulating and grasping paper-like deformable objects. We refer to such tasks as paper-flipping. In contrast to manipulating deformable objects that lack compression strength (such as shirts and ropes), minor variations in the physical properties of the paper-like deformable objects significantly impact the results, making manipulation highly challenging. Here, we present Flipbot, a novel solution for flipping paper-like deformable objects. Flipbot allows the robot to capture object physical properties by integrating exteroceptive and proprioceptive perceptions that are indispensable for manipulating deformable objects. Furthermore, by incorporating a proposed coarse-to-fine exploration process, the system is capable of learning the optimal control parameters for effective paper-flipping through proprioceptive and exteroceptive inputs. We deploy our method on a real-world robot with a soft gripper and learn in a self-supervised manner. The resulting policy demonstrates the effectiveness of Flipbot on paper-flipping tasks with various settings beyond the reach of prior studies, including but not limited to flipping pages throughout a book and emptying paper sheets in a box.

ERRA: An Embodied Representation and Reasoning Architecture for Long-horizon Language-conditioned Manipulation Tasks

Apr 05, 2023

Abstract:This letter introduces ERRA, an embodied learning architecture that enables robots to jointly obtain three fundamental capabilities (reasoning, planning, and interaction) for solving long-horizon language-conditioned manipulation tasks. ERRA is based on tightly-coupled probabilistic inferences at two granularity levels. Coarse-resolution inference is formulated as sequence generation through a large language model, which infers action language from natural language instruction and environment state. The robot then zooms to the fine-resolution inference part to perform the concrete action corresponding to the action language. Fine-resolution inference is constructed as a Markov decision process, which takes action language and environmental sensing as observations and outputs the action. The results of action execution in environments provide feedback for subsequent coarse-resolution reasoning. Such coarse-to-fine inference allows the robot to decompose and achieve long-horizon tasks interactively. In extensive experiments, we show that ERRA can complete various long-horizon manipulation tasks specified by abstract language instructions. We also demonstrate successful generalization to novel but similar natural language instructions.
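The coarse-to-fine loop described in the abstract can be sketched as a simple control flow: a coarse step proposes the next piece of action language from the instruction and the current state, a fine step executes it, and the resulting state feeds the next coarse step. The sketch below only illustrates that loop. The names `run_coarse_to_fine`, `toy_planner`, and `toy_policy` are hypothetical, and the stub planner is a fixed list standing in for the large language model and the learned policy that the letter actually uses.

```python
from typing import Callable, FrozenSet, List, Optional

State = FrozenSet[str]  # assumed toy state: the set of sub-tasks already completed


def run_coarse_to_fine(
    instruction: str,
    state: State,
    coarse_planner: Callable[[str, State], Optional[str]],
    fine_policy: Callable[[str, State], State],
    max_steps: int = 10,
) -> List[str]:
    """Alternate coarse reasoning and fine execution until the planner stops."""
    executed: List[str] = []
    for _ in range(max_steps):
        # Coarse resolution: infer the next action language from instruction + state.
        action_language = coarse_planner(instruction, state)
        if action_language is None:  # planner signals the task is complete
            break
        # Fine resolution: execute the action; the new state is the feedback.
        state = fine_policy(action_language, state)
        executed.append(action_language)
    return executed


# Stub components (assumptions for illustration only).
PLAN = ["open drawer", "pick up block", "place block in drawer"]


def toy_planner(instruction: str, state: State) -> Optional[str]:
    """Return the first planned step not yet reflected in the state."""
    for step in PLAN:
        if step not in state:
            return step
    return None


def toy_policy(action_language: str, state: State) -> State:
    """Pretend the action always succeeds and record it in the state."""
    return state | {action_language}


executed = run_coarse_to_fine(
    "put the block in the drawer", frozenset(), toy_planner, toy_policy
)
```

Because the planner is re-queried after every action, a failed or partial execution would simply show up in the state and lead to a different next step, which is the interactive decomposition the abstract refers to.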

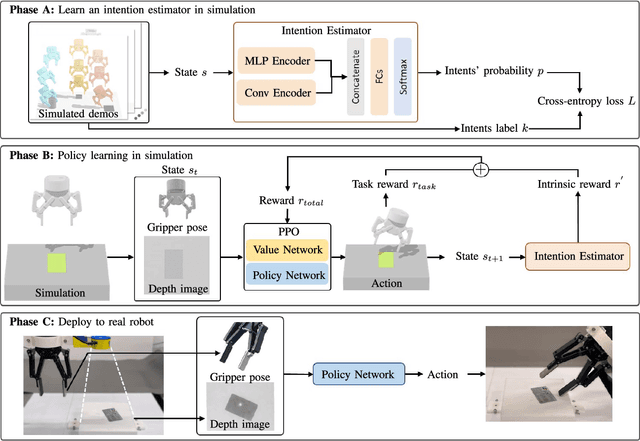

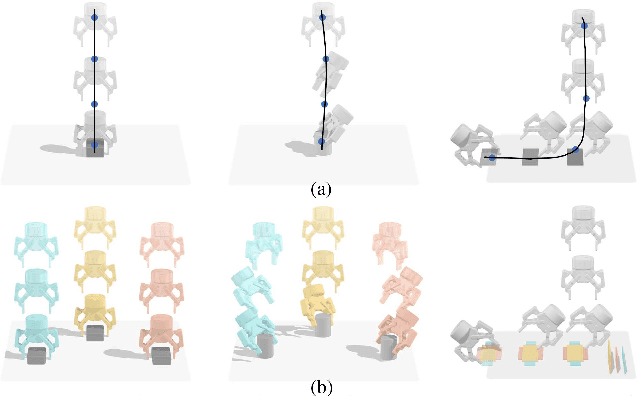

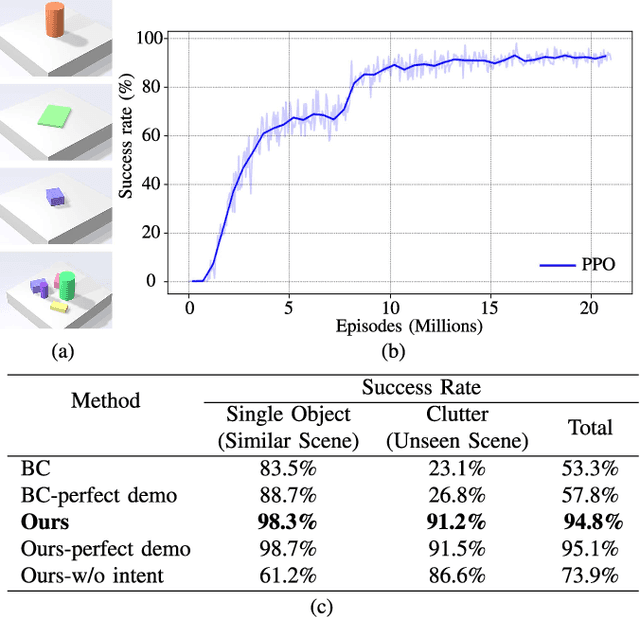

Learn to Grasp via Intention Discovery and its Application to Challenging Clutter

Apr 05, 2023

Abstract:Humans excel in grasping objects through diverse and robust policies, many of which are so probabilistically rare that exploration-based learning methods rarely observe or learn them. Inspired by the human learning process, we propose a method to extract and exploit latent intents from demonstrations, and then learn diverse and robust grasping policies through self-exploration. The resulting policy can grasp challenging objects in various environments with an off-the-shelf parallel gripper. The key component is a learned intention estimator, which maps gripper pose and visual sensory input to a set of sub-intents covering important phases of the grasping movement. Sub-intents can be used to build an intrinsic reward to guide policy learning. The learned policy demonstrates remarkable zero-shot generalization from simulation to the real world while retaining its robustness against states that have never been encountered during training, novel objects such as protractors and user manuals, and environments such as the cluttered conveyor.

* Accepted to IEEE Robotics and Automation Letters (RA-L)

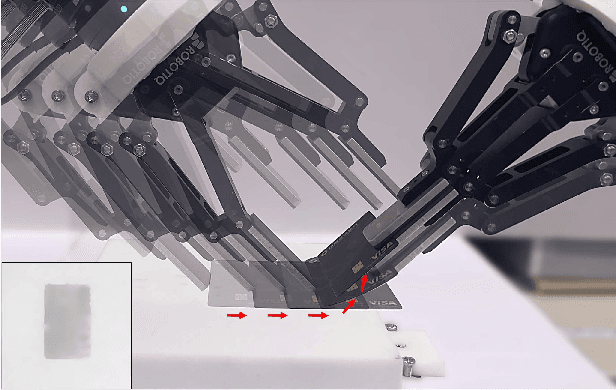

Dynamic Flex-and-Flip Manipulation of Deformable Linear Objects

Apr 02, 2023

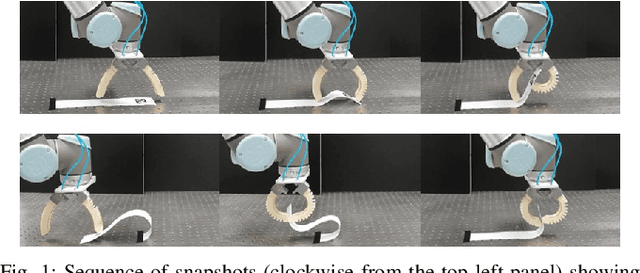

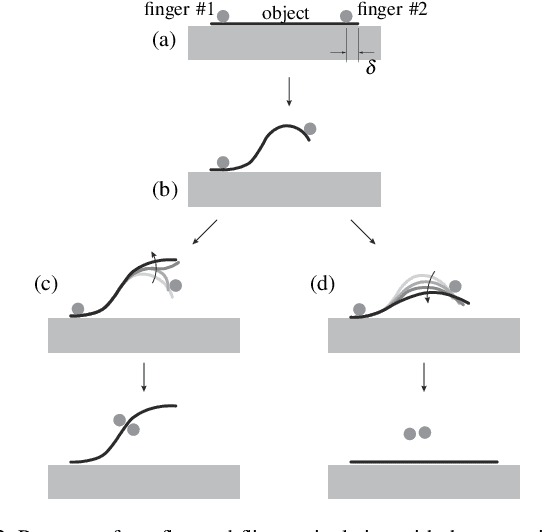

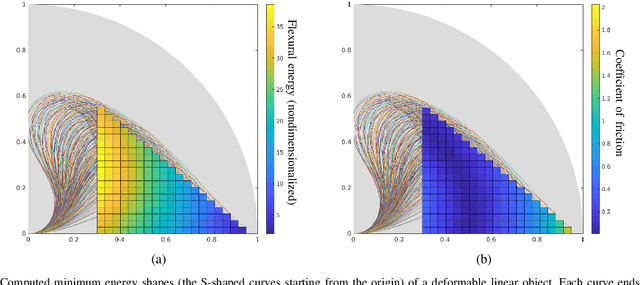

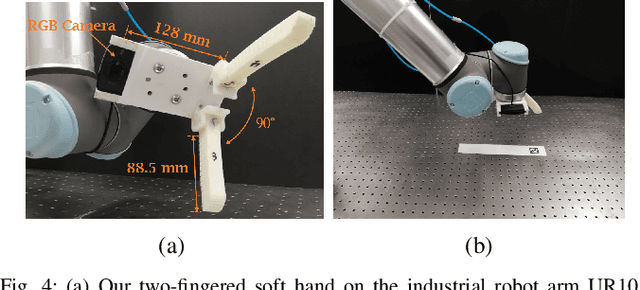

Abstract:This paper presents the technique of flex-and-flip manipulation. It is suitable for grasping thin, flexible linear objects lying on a flat surface. During the manipulation process, the object is first flexed by a robotic gripper whose fingers are placed on top of it, and later the increased internal energy of the object helps the gripper obtain a stable pinch grasp while the object flips into the space between the fingers. The dynamic interaction between the flexible object and the gripper is elaborated by analyzing how energy is exchanged. We also discuss the condition on friction to prevent loss of contact. Our flex-and-flip manipulation technique can be implemented with open-loop control and lends itself to underactuated, compliant finger mechanisms. A set of experiments in robotic page turning performed with our customized hardware and software system demonstrates the effectiveness and robustness of the manipulation technique.

* 6 pages, 8 figures, 2 tables
