Grady Williams

Locally Weighted Regression Pseudo-Rehearsal for Online Learning of Vehicle Dynamics

May 13, 2019

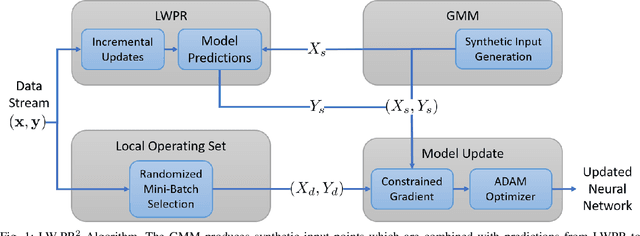

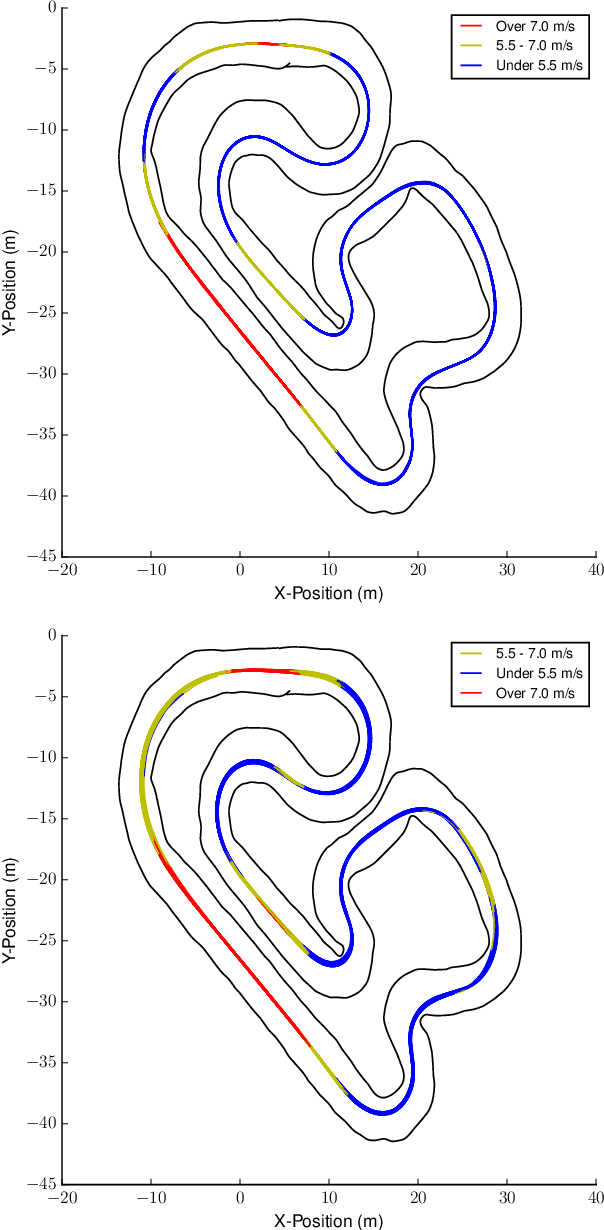

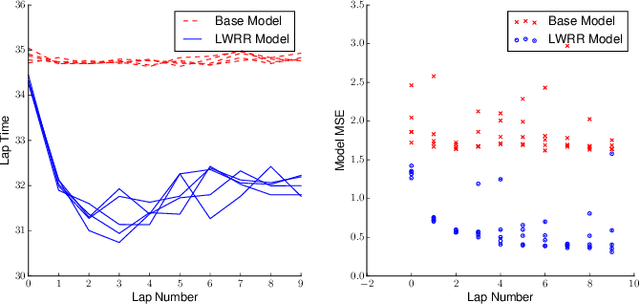

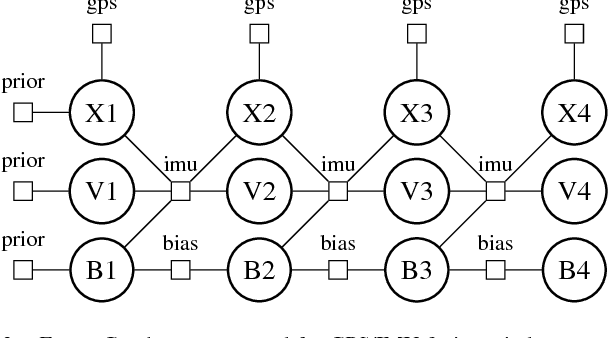

Abstract: We consider the problem of online adaptation of a neural network designed to represent vehicle dynamics. The neural network model is intended to be used by a model predictive control (MPC) law to control the vehicle autonomously. This problem is challenging because both the input and target distributions are non-stationary, and naive approaches to online adaptation result in catastrophic forgetting, which can in turn lead to controller failures. We present a novel online learning method that combines the pseudo-rehearsal method with locally weighted projection regression. We demonstrate the effectiveness of the resulting Locally Weighted Projection Regression Pseudo-Rehearsal (LW-PR²) method in simulation and on a large real-world dataset collected with a 1/5 scale autonomous vehicle.
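The pseudo-rehearsal half of this idea can be illustrated on a toy problem. The sketch below is a minimal illustration under stated assumptions, not the LW-PR² method: the dynamics model is a scalar line `y = w * x + b`, it is refit by least squares rather than by gradient steps, the locally weighted projection regression component is omitted, and the helper names (`fit_line`, `pseudo_rehearsal_update`) and data are hypothetical. The point it shows is that no old data is stored; the previous model generates pseudo-samples over the region it was trained on, and these are mixed into the update.

```python
import random


def fit_line(batch):
    """Least-squares fit of y = w * x + b (a stand-in for a training update)."""
    n = len(batch)
    mx = sum(x for x, _ in batch) / n
    my = sum(y for _, y in batch) / n
    sxx = sum((x - mx) ** 2 for x, _ in batch)
    sxy = sum((x - mx) * (y - my) for x, y in batch)
    w = sxy / sxx
    return w, my - w * mx


def predict(model, x):
    w, b = model
    return w * x + b


def pseudo_rehearsal_update(old_model, new_batch, n_pseudo, old_range, rng):
    # No old data is kept: the previous model labels freshly drawn inputs from
    # the region it was trained on, and these pseudo-samples are mixed with the
    # new data so the update cannot silently overwrite that region.
    lo, hi = old_range
    pseudo = []
    for _ in range(n_pseudo):
        x = rng.uniform(lo, hi)
        pseudo.append((x, predict(old_model, x)))
    return fit_line(new_batch + pseudo)


# Hypothetical toy setup: the old model learned y = x on x in [0, 1];
# new data arrives only from x in [2, 3], where the dynamics look different.
rng = random.Random(0)
old_model = (1.0, 0.0)
new_batch = [(x, 2 * x - 2) for x in (2.0, 2.25, 2.5, 2.75, 3.0)]

naive = fit_line(new_batch)
rehearsed = pseudo_rehearsal_update(old_model, new_batch, 5, (0.0, 1.0), rng)

# Error in the old region at x = 0.5, where the old model predicted 0.5.
naive_err = abs(predict(naive, 0.5) - 0.5)
rehearsed_err = abs(predict(rehearsed, 0.5) - 0.5)
print(f"naive: {naive_err:.2f}, pseudo-rehearsal: {rehearsed_err:.2f}")
```

Fitting the new data alone drags the prediction at x = 0.5 far from the old behavior; mixing in the pseudo-samples keeps that region close to what the previous model predicted.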

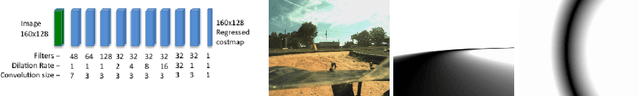

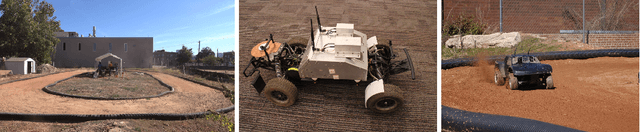

Vision-Based High Speed Driving with a Deep Dynamic Observer

Dec 10, 2018

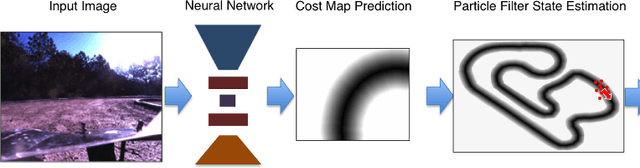

Abstract: In this paper we present a framework that combines deep learning-based road detection, particle filters, and Model Predictive Control (MPC) to drive aggressively using only a monocular camera, an IMU, and wheel speed sensors. The framework uses deep convolutional neural networks combined with LSTMs to learn a local cost map representation of the track in front of the vehicle. A particle filter uses this dynamic observation model to localize in a schematic map, and MPC drives aggressively using the resulting particle-filter state estimate. We show extensive real-world testing results and demonstrate reliable operation of the vehicle at the friction limits on a complex dirt track. We reach speeds above 27 mph (12 m/s) on a dirt track with a 105-foot (32 m) straight using our 1:5 scale test vehicle. A video of these results can be found at https://www.youtube.com/watch?v=5ALIK-z-vUg
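The filtering loop described above follows the usual predict, weight, resample cycle. Below is a minimal bootstrap particle filter sketch for a 1D position; the Gaussian measurement likelihood is a hypothetical stand-in for the learned cost-map observation model and schematic-map matching, and the noise levels and function names are assumptions.

```python
import math
import random


def systematic_resample(particles, weights, rng):
    """Draw len(particles) survivors with one random offset and evenly spaced pointers."""
    n = len(particles)
    step = sum(weights) / n
    u = rng.uniform(0.0, step)
    survivors, i, cum = [], 0, weights[0]
    for k in range(n):
        target = u + k * step
        # Advance to the particle whose cumulative weight covers this pointer.
        while target > cum and i < n - 1:
            i += 1
            cum += weights[i]
        survivors.append(particles[i])
    return survivors


def pf_step(particles, control, measurement, rng, motion_std=0.1, meas_std=0.5):
    # Predict: push each particle through a noisy motion model.
    moved = [p + control + rng.gauss(0.0, motion_std) for p in particles]
    # Weight: Gaussian likelihood of the measurement (hypothetical observation model).
    weights = [math.exp(-0.5 * ((measurement - p) / meas_std) ** 2) for p in moved]
    # Resample in proportion to the weights.
    return systematic_resample(moved, weights, rng)


rng = random.Random(1)
true_x = 0.0
particles = [rng.uniform(-5.0, 5.0) for _ in range(500)]
for _ in range(20):
    true_x += 0.2
    z = true_x + rng.gauss(0.0, 0.5)  # noisy position measurement
    particles = pf_step(particles, 0.2, z, rng)
estimate = sum(particles) / len(particles)
print(f"true position {true_x:.2f}, estimate {estimate:.2f}")
```

Systematic resampling is used here because it needs only one random number per step and keeps the number of copies of each particle close to its expected value.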

Variational Policy for Guiding Point Processes

Nov 10, 2017

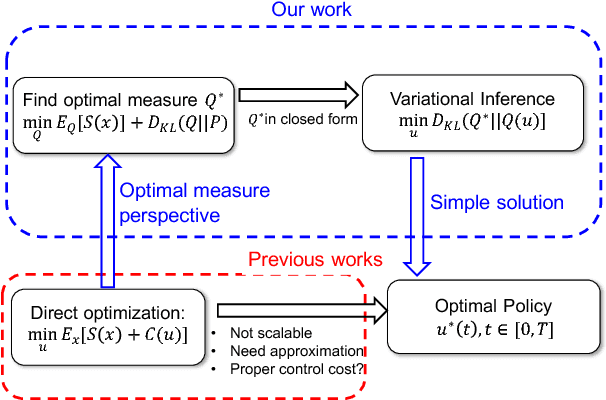

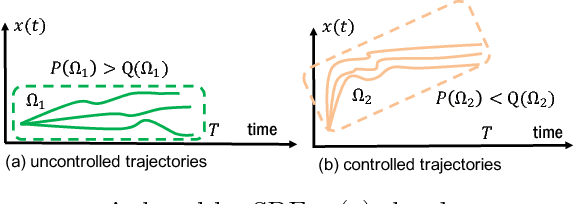

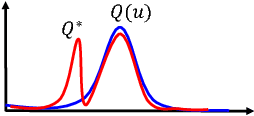

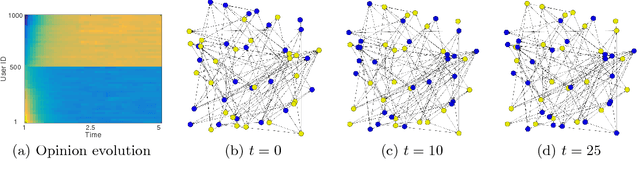

Abstract: Temporal point processes have been widely applied to model event sequence data generated by online users. In this paper, we consider the problem of designing the optimal control policy for point processes, such that the stochastic system driven by the point process is steered to a target state. Our key insight is to view the stochastic optimal control problem from the perspective of optimal measure and variational inference. We further propose a convex optimization framework and an efficient algorithm to update the policy adaptively to the current system state. Experiments on synthetic and real-world data show that our algorithm can steer user activities much more accurately and efficiently than other stochastic control methods.
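The abstract does not say which point process model the paper uses. As a common example of a temporal point process for user activity, the sketch below implements a self-exciting Hawkes process with an exponential kernel and simulates it with Ogata's thinning algorithm; the parameters `mu`, `alpha`, and `beta` are hypothetical, and no control policy is included.

```python
import math
import random


def hawkes_intensity(t, history, mu=0.2, alpha=0.5, beta=1.0):
    """Conditional intensity mu + sum of alpha * exp(-beta * (t - t_i)) over events t_i < t."""
    return mu + sum(alpha * math.exp(-beta * (t - ti)) for ti in history if ti < t)


def simulate_hawkes(t_end, rng, mu=0.2, alpha=0.5, beta=1.0):
    """Ogata's thinning algorithm for the exponential-kernel Hawkes process."""
    events, t = [], 0.0
    while True:
        # The intensity just after time t (all past events included) bounds the
        # intensity until the next event, because the exponential kernel only decays.
        lam_bar = mu + sum(alpha * math.exp(-beta * (t - ti)) for ti in events)
        t += rng.expovariate(lam_bar)
        if t >= t_end:
            return events
        # Accept the candidate time with probability lambda(t) / lam_bar.
        if rng.random() * lam_bar <= hawkes_intensity(t, events, mu, alpha, beta):
            events.append(t)


rng = random.Random(2)
events = simulate_hawkes(100.0, rng)
print(len(events), "events in [0, 100)")
print(hawkes_intensity(1.0, [0.0]))  # 0.2 + 0.5 * exp(-1), about 0.384
```

Each event raises the intensity by `alpha`, which then decays at rate `beta`; with `alpha / beta < 1` the process is stable, with a long-run event rate of `mu / (1 - alpha / beta)`.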

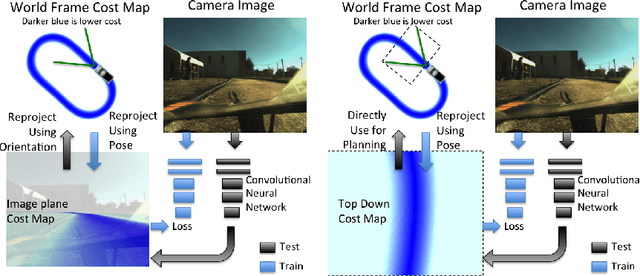

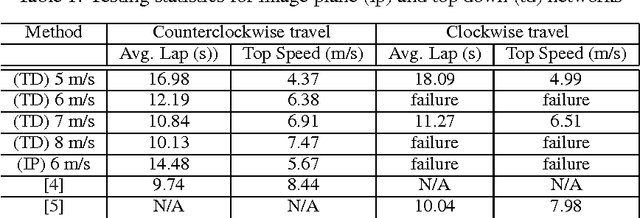

Aggressive Deep Driving: Model Predictive Control with a CNN Cost Model

Jul 17, 2017

Abstract: We present a framework for vision-based model predictive control (MPC) for the task of aggressive, high-speed autonomous driving. Our approach uses deep convolutional neural networks to predict cost functions from input video that are directly suitable for online trajectory optimization with MPC. We demonstrate the method in a high-speed autonomous driving scenario, where we use a single monocular camera and a deep convolutional neural network to predict a cost map of the track in front of the vehicle. Results are demonstrated on a 1:5 scale autonomous vehicle given the task of high-speed, aggressive driving.
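Once a network has predicted a cost map, the MPC optimizer only needs to look up map values along each candidate trajectory. The sketch below assumes a row-major grid in vehicle-centered coordinates with a hypothetical 0.5 m resolution and a fixed penalty for leaving the map; the map layout, resolution, and penalty used in the paper are not given here.

```python
from typing import List, Sequence, Tuple


def trajectory_cost(
    cost_map: List[List[float]],
    trajectory: Sequence[Tuple[float, float]],
    resolution: float = 0.5,
    out_of_map_cost: float = 100.0,
) -> float:
    """Sum cost-map values along a trajectory of (x, y) points in meters.

    Assumed layout: cost_map[row][col] with row = floor(y / resolution) and
    col = floor(x / resolution); points outside the map get a fixed penalty.
    """
    rows, cols = len(cost_map), len(cost_map[0])
    total = 0.0
    for x, y in trajectory:
        r, c = int(y // resolution), int(x // resolution)
        if 0 <= r < rows and 0 <= c < cols:
            total += cost_map[r][c]
        else:
            total += out_of_map_cost
    return total


# Hypothetical 4x4 map: column 1 is the track (cost 0), everything else costs 1.
cost_map = [[1.0, 0.0, 1.0, 1.0] for _ in range(4)]
on_track = [(0.75, 0.25 + 0.5 * k) for k in range(4)]
off_track = [(1.75, 0.25 + 0.5 * k) for k in range(4)]
leaving_map = [(0.75, 0.25 + 0.5 * k) for k in range(6)]
for name, traj in [("on track", on_track), ("off track", off_track), ("leaving map", leaving_map)]:
    print(name, trajectory_cost(cost_map, traj))
```

Because each lookup is independent, the same evaluation parallelizes naturally across the many sampled trajectories an MPC optimizer scores per control cycle.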

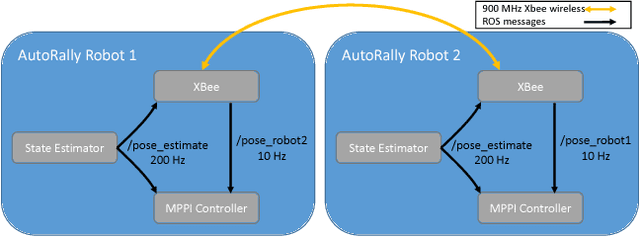

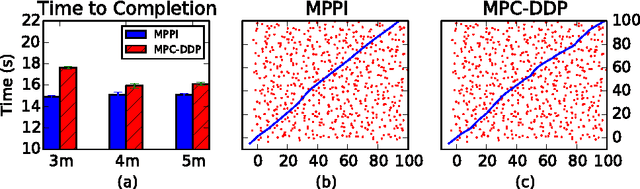

Autonomous Racing with AutoRally Vehicles and Differential Games

Jul 14, 2017

Abstract: Safe autonomous vehicles must be able to predict and react to the drivers around them. Previous control methods rely heavily on pre-computation and are unable to react to dynamic events as they unfold in real time. In this paper, we extend Model Predictive Path Integral Control (MPPI) using differential game theory and introduce Best-Response MPPI (BR-MPPI) for real-time multi-vehicle interactions. Experimental results are presented using two AutoRally platforms in a racing format, with BR-MPPI competing against a skilled human driver at the Georgia Tech Autonomous Racing Facility.
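The best-response idea can be illustrated with a static two-player game: each player repeatedly optimizes its own cost while holding the other's latest plan fixed. This is a hypothetical toy sketch; BR-MPPI works over sampled control trajectories with vehicle dynamics, whereas here each action is a single scalar chosen from a candidate grid, and the quadratic costs are made up for illustration.

```python
def best_response(cost, other, candidates):
    # Evaluate every candidate against the opponent's current plan; keep the cheapest.
    return min(candidates, key=lambda a: cost(a, other))


def iterated_best_response(cost_1, cost_2, candidates, a1=0.0, a2=0.0, iters=20):
    for _ in range(iters):
        a1 = best_response(cost_1, a2, candidates)
        a2 = best_response(cost_2, a1, candidates)
    return a1, a2


# Hypothetical symmetric game: each player's cost is minimized at 0.5 * other + 1,
# so the unique Nash equilibrium is a1 = a2 = 2.
def cost_1(a1, a2):
    return (a1 - 0.5 * a2 - 1.0) ** 2


def cost_2(a2, a1):
    return (a2 - 0.5 * a1 - 1.0) ** 2


candidates = [i * 0.01 for i in range(501)]  # actions in [0, 5]
result = iterated_best_response(cost_1, cost_2, candidates)
print(result)
```

Each best response here is a contraction (slope 0.5), so the iteration settles near the equilibrium (2, 2), up to the 0.01 grid spacing.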

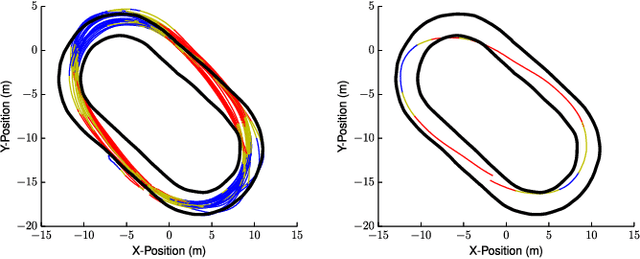

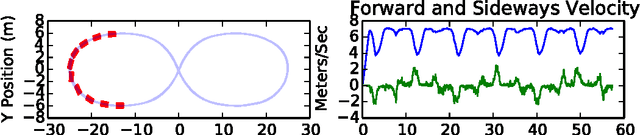

Information Theoretic Model Predictive Control: Theory and Applications to Autonomous Driving

Jul 07, 2017

Abstract: We present an information-theoretic approach to stochastic optimal control problems that can be used to derive general sampling-based optimization schemes. This new mathematical method is used to develop a sampling-based model predictive control algorithm. We apply this information-theoretic model predictive control (IT-MPC) scheme to the task of aggressive autonomous driving around a dirt test track and compare its performance to a model predictive control version of the cross-entropy method.
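A sampling-based update of the kind this line of work derives can be sketched as follows: perturb the current control sequence, roll out each perturbation, and average the perturbations with exponential weights `exp(-cost / lam)`. This is a minimal sketch on hypothetical 1D single-integrator dynamics with an assumed quadratic cost; `lam`, `sigma`, and the sample count are illustrative values, not parameters from the paper.

```python
import math
import random


def rollout_cost(x0, controls, dt=0.1):
    """Cost of a control sequence on hypothetical single-integrator dynamics x' = u."""
    x, cost = x0, 0.0
    for u in controls:
        x += u * dt
        cost += x * x + 0.01 * u * u  # distance from the goal x = 0, plus effort
    return cost


def sampling_update(x0, controls, rng, num_samples=256, sigma=1.0, lam=1.0):
    """One exponentially weighted sampling update of a control sequence."""
    noise = [[rng.gauss(0.0, sigma) for _ in controls] for _ in range(num_samples)]
    costs = [rollout_cost(x0, [u + e for u, e in zip(controls, eps)]) for eps in noise]
    # Softmin weights; subtracting the minimum cost avoids underflow.
    best = min(costs)
    weights = [math.exp(-(c - best) / lam) for c in costs]
    total = sum(weights)
    # New plan = old plan + weighted average of the sampled perturbations.
    return [
        u + sum(w * eps[t] for w, eps in zip(weights, noise)) / total
        for t, u in enumerate(controls)
    ]


rng = random.Random(0)
plan = [0.0] * 20
for _ in range(10):
    plan = sampling_update(2.0, plan, rng)
print(f"cost with zero controls: {rollout_cost(2.0, [0.0] * 20):.1f}")
print(f"cost after 10 updates:   {rollout_cost(2.0, plan):.1f}")
```

The cross-entropy method used for comparison differs mainly in the weighting: it keeps a fixed fraction of the lowest-cost samples, weights them equally, and refits the sampling distribution to them.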

Model Predictive Path Integral Control using Covariance Variable Importance Sampling

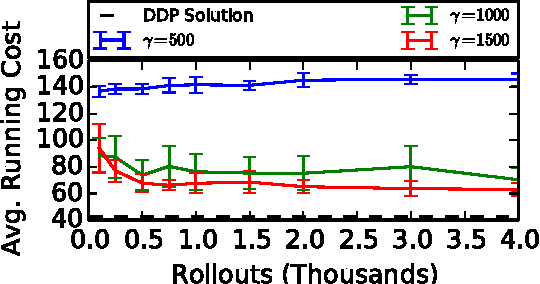

Oct 28, 2015

Abstract: In this paper we develop a Model Predictive Path Integral (MPPI) control algorithm based on a generalized importance sampling scheme and perform parallel optimization via sampling on a Graphics Processing Unit (GPU). The proposed generalized importance sampling scheme allows for changes in the drift and diffusion terms of stochastic diffusion processes and plays a significant role in the performance of the model predictive control algorithm. We compare the proposed algorithm in simulation with a model predictive control version of differential dynamic programming.
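Changing the sampling distribution requires reweighting the samples by the likelihood ratio between the target and the proposal. The sketch below shows only that generic correction for a change of variance in a scalar Gaussian (self-normalized importance sampling); the paper's scheme, which also handles drift changes in diffusion processes, is not reproduced, and the function names are hypothetical.

```python
import math
import random


def gaussian_pdf(x, std):
    return math.exp(-0.5 * (x / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def importance_estimate(f, target_std, proposal_std, n, rng):
    """Self-normalized estimate of E[f(X)] for X ~ N(0, target_std**2),
    using samples drawn from N(0, proposal_std**2)."""
    num = den = 0.0
    for _ in range(n):
        x = rng.gauss(0.0, proposal_std)
        # The likelihood ratio corrects for sampling from the proposal.
        w = gaussian_pdf(x, target_std) / gaussian_pdf(x, proposal_std)
        num += w * f(x)
        den += w
    return num / den


rng = random.Random(0)
est = importance_estimate(lambda x: x * x, target_std=1.0, proposal_std=2.0, n=20000, rng=rng)
print(f"estimate of E[X^2] under N(0, 1): {est:.3f}")
```

Sampling from the wider proposal still recovers E[X²] = 1 under the N(0, 1) target. A proposal wider than the target keeps the likelihood ratio bounded (here by 2), which keeps the variance of the estimate in check.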

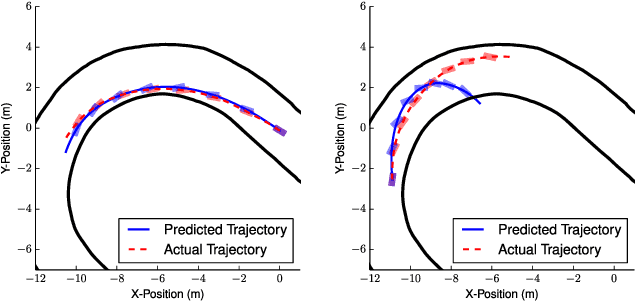

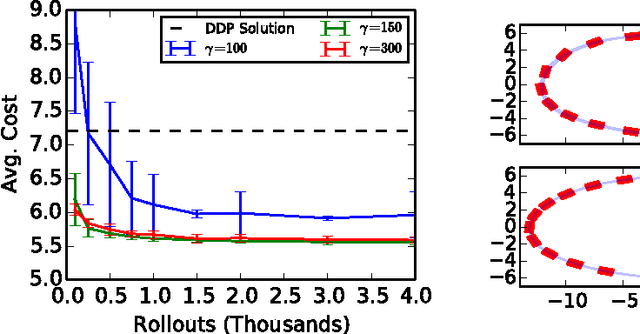

GPU Based Path Integral Control with Learned Dynamics

Mar 01, 2015

Abstract: We present an algorithm that combines recent advances in model-based path integral control with machine learning approaches to learning forward dynamics models. We take advantage of the parallel computing power of a GPU to quickly draw a massive number of samples from a learned probabilistic dynamics model, which we use to approximate the path integral form of the optimal control. The resulting algorithm runs in a receding-horizon fashion in real time and is subject to no restrictive assumptions about costs, constraints, or dynamics. A simple change to the path integral control formulation allows the algorithm to take model uncertainty into account during planning, and we demonstrate its performance on a quadrotor navigation task. In addition to this novel adaptation of path integral control, this is the first time that a receding-horizon implementation of iterative path integral control has been run on a real system.
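The receding-horizon structure can be sketched as: optimize the plan from the current state, apply only its first control, shift the plan by one step, and repeat. In the sketch below each rollout samples fresh noise from a hypothetical probabilistic model (`sample_model_step`), so the plan is scored under model uncertainty. The 1D dynamics, noise levels, and weighting parameters are assumptions, the rollouts run sequentially rather than on a GPU, and the "real" system is simply the model's mean prediction.

```python
import math
import random


def sample_model_step(x, u, rng, dt=0.1, model_std=0.05):
    # Hypothetical learned probabilistic model: mean prediction x + u * dt plus
    # Gaussian uncertainty, sampled independently in every rollout.
    return x + u * dt + rng.gauss(0.0, model_std)


def plan(x0, controls, rng, num_samples=200, sigma=1.0, lam=1.0):
    """One path-integral-style update using rollouts through the sampled model."""
    noise, costs = [], []
    for _ in range(num_samples):
        eps = [rng.gauss(0.0, sigma) for _ in controls]
        x, cost = x0, 0.0
        for u, e in zip(controls, eps):
            x = sample_model_step(x, u + e, rng)
            cost += x * x  # distance from the goal x = 0
        noise.append(eps)
        costs.append(cost)
    best = min(costs)
    weights = [math.exp(-(c - best) / lam) for c in costs]
    total = sum(weights)
    return [u + sum(w * eps[t] for w, eps in zip(weights, noise)) / total
            for t, u in enumerate(controls)]


def run_receding_horizon(x0, steps, horizon, rng):
    x, controls = x0, [0.0] * horizon
    for _ in range(steps):
        controls = plan(x, controls, rng)
        x = x + controls[0] * 0.1        # apply only the first control to the "real" system
        controls = controls[1:] + [0.0]  # shift the plan to warm-start the next step
    return x


rng = random.Random(3)
final = run_receding_horizon(2.0, steps=30, horizon=15, rng=rng)
print(f"state after 30 steps: {final:.3f}")
```

Warm-starting from the shifted plan lets a single update per control cycle keep improving the solution over time, which is what makes a small per-step sampling budget workable.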
