Gongxi Zhu

ASK: Adaptive Self-improving Knowledge Framework for Audio Text Retrieval

Dec 11, 2025

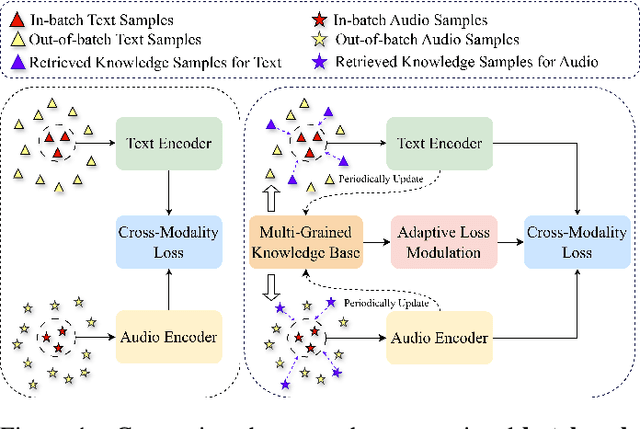

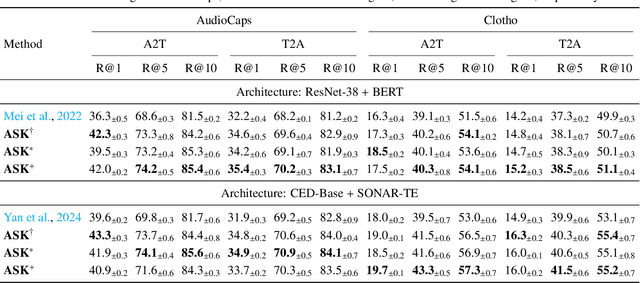

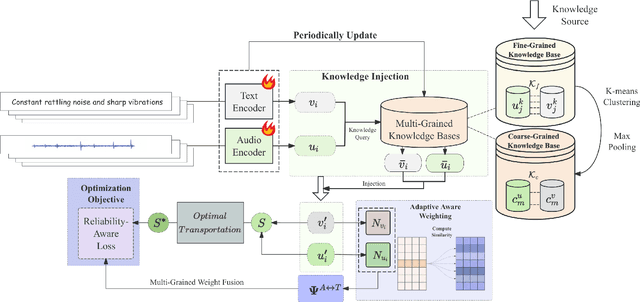

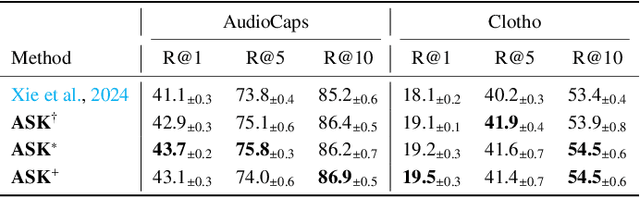

Abstract: The dominant paradigm for Audio-Text Retrieval (ATR) relies on mini-batch-based contrastive learning. This process, however, is inherently limited by what we formalize as the Gradient Locality Bottleneck (GLB), which structurally prevents models from leveraging out-of-batch knowledge and thus impairs fine-grained and long-tail learning. While external knowledge-enhanced methods can alleviate the GLB, we identify a critical, unaddressed side effect: the Representation-Drift Mismatch (RDM), where a static knowledge base becomes progressively misaligned with the evolving model, turning guidance into noise. To address this dual challenge, we propose the Adaptive Self-improving Knowledge (ASK) framework, a model-agnostic, plug-and-play solution. ASK breaks the GLB via multi-grained knowledge injection, systematically mitigates RDM through dynamic knowledge refinement, and introduces a novel adaptive reliability weighting scheme to ensure that consistent knowledge contributes to optimization. Experimental results on two benchmark datasets show state-of-the-art performance, supporting the efficacy of the proposed ASK framework.
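
The abstract does not spell out the contrastive objective, so the following is only a minimal sketch, assuming the commonly used symmetric InfoNCE loss over paired audio and text embeddings. The function name `info_nce` and the temperature value are illustrative assumptions, not taken from the paper. The point it illustrates is that every negative in the loss comes from the current mini-batch, so out-of-batch samples contribute nothing to the gradient.

```python
import math

def info_nce(audio, text, temperature=0.07):
    """Symmetric in-batch contrastive loss (assumed form, not the paper's code).

    audio[i] and text[i] are a matched pair; every other item in the
    batch serves as a negative. Nothing outside the batch is used.
    """
    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / norm for x in v]

    a = [normalize(v) for v in audio]
    t = [normalize(v) for v in text]
    n = len(a)

    # Cosine similarity matrix, scaled by the temperature.
    sim = [[sum(x * y for x, y in zip(a[i], t[j])) / temperature
            for j in range(n)] for i in range(n)]

    def cross_entropy(logits, target):
        # Numerically stable log-sum-exp minus the target logit.
        m = max(logits)
        lse = m + math.log(sum(math.exp(l - m) for l in logits))
        return lse - logits[target]

    audio_to_text = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    text_to_audio = sum(
        cross_entropy([sim[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return 0.5 * (audio_to_text + text_to_audio)

# Toy batch of two pairs: aligned pairs give a much lower loss than swapped pairs.
aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)
```

In this sketch the similarity matrix is built only from the `n` items passed in, which is the locality the abstract refers to: a hard negative that happens to sit in another batch never appears in any row or column.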

FedAdOb: Privacy-Preserving Federated Deep Learning with Adaptive Obfuscation

Jun 03, 2024

Abstract: Federated learning (FL) has emerged as a collaborative approach that allows multiple clients to jointly learn a machine learning model without sharing their private data. The concern about privacy leakage, albeit demonstrated under specific conditions, has triggered numerous follow-up studies on designing powerful attack methods and effective defense mechanisms to thwart them. Nevertheless, the privacy-preserving mechanisms employed in these defenses invariably compromise model performance because they apply a fixed obfuscation to private data or gradients. In this article, we therefore propose a novel adaptive obfuscation mechanism, coined FedAdOb, to protect private data without sacrificing model performance. Technically, FedAdOb utilizes passport-based adaptive obfuscation to ensure data privacy in both horizontal and vertical federated learning settings. The privacy-preserving capabilities of FedAdOb, specifically with regard to private features and labels, are theoretically proven through Theorems 1 and 2. Furthermore, extensive experimental evaluations conducted on various datasets and network architectures demonstrate the effectiveness of FedAdOb, which achieves a better trade-off between privacy preservation and model performance than existing methods.

Unlearning during Learning: An Efficient Federated Machine Unlearning Method

May 24, 2024

Abstract: In recent years, Federated Learning (FL) has garnered significant attention as a distributed machine learning paradigm. To facilitate the implementation of the right to be forgotten, the concept of federated machine unlearning (FMU) has also emerged. However, current FMU approaches often involve additional time-consuming steps and may not offer comprehensive unlearning capabilities, which renders them less practical in real FL scenarios. In this paper, we introduce FedAU, an innovative and efficient FMU framework aimed at overcoming these limitations. Specifically, FedAU incorporates a lightweight auxiliary unlearning module into the learning process and employs a straightforward linear operation to facilitate unlearning. This approach eliminates the requirement for extra time-consuming steps, rendering it well-suited for FL. Furthermore, FedAU exhibits remarkable versatility. It not only enables multiple clients to carry out unlearning tasks concurrently but also supports unlearning at various levels of granularity, including individual data samples, specific classes, and even at the client level. We conducted extensive experiments on MNIST, CIFAR10, and CIFAR100 datasets to evaluate the performance of FedAU. The results demonstrate that FedAU effectively achieves the desired unlearning effect while maintaining model accuracy.

Evaluating Membership Inference Attacks and Defenses in Federated Learning

Feb 09, 2024

Abstract: Membership Inference Attacks (MIAs) pose a growing threat to privacy preservation in federated learning. The semi-honest attacker, e.g., the server, may determine whether a particular sample belongs to a target client according to the observed model information. This paper conducts an evaluation of existing MIAs and corresponding defense strategies. Our evaluation of MIAs reveals two important findings about the trend of MIAs. Firstly, combining model information from multiple communication rounds (Multi-temporal) enhances the overall effectiveness of MIAs compared to utilizing model information from a single epoch. Secondly, incorporating models from non-target clients (Multi-spatial) significantly improves the effectiveness of MIAs, particularly when the clients' data is homogeneous. This highlights the importance of considering the temporal and spatial model information in MIAs. Next, we assess, via the privacy-utility tradeoff, the effectiveness of two types of defense mechanisms against MIAs: Gradient Perturbation and Data Replacement. Our results demonstrate that Data Replacement mechanisms achieve a better balance between preserving privacy and maintaining model utility. Therefore, we recommend the adoption of Data Replacement methods as a defense strategy against MIAs. Our code is available at https://github.com/Liar-Mask/FedMIA.
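
To make the Multi-temporal idea concrete, here is a small sketch that assumes a simple loss-threshold attack: a sample whose loss, averaged over several communication rounds, falls below a threshold is guessed to be a training member. This is an illustrative stand-in and not the attack evaluated in the paper. The function names, the loss values, and the threshold are all hypothetical.

```python
from statistics import mean

def membership_scores(per_round_losses):
    """Average each sample's loss over the observed rounds.

    per_round_losses[i] holds the losses of sample i under the models
    seen in successive communication rounds (hypothetical inputs).
    A lower average loss is treated as more member-like.
    """
    return [mean(losses) for losses in per_round_losses]

def infer_members(per_round_losses, threshold):
    """Guess membership by thresholding the round-averaged loss."""
    return [score < threshold for score in membership_scores(per_round_losses)]

# Hypothetical losses for three samples across three rounds.
losses = [
    [0.9, 0.4, 0.1],  # loss falls quickly over rounds
    [1.1, 1.0, 1.2],  # loss stays high
    [0.5, 0.2, 0.1],  # loss is low throughout
]
print(infer_members(losses, threshold=0.6))
```

Averaging over rounds is only one way to combine temporal information; the design choice here is that a single round's loss can be noisy, while a score pooled across rounds is steadier.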

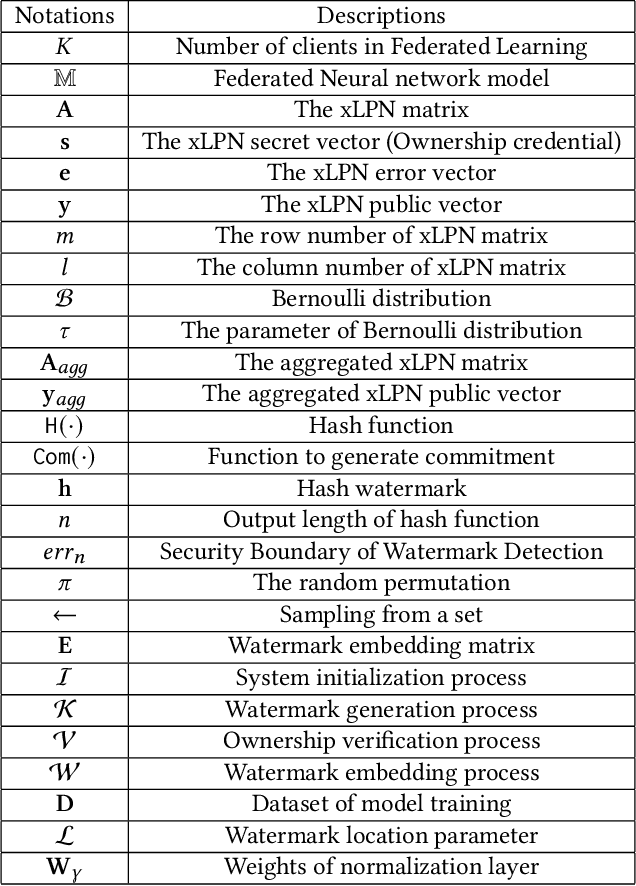

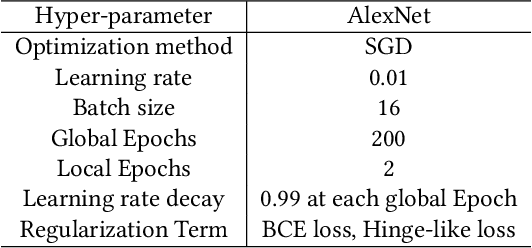

FedSOV: Federated Model Secure Ownership Verification with Unforgeable Signature

May 10, 2023

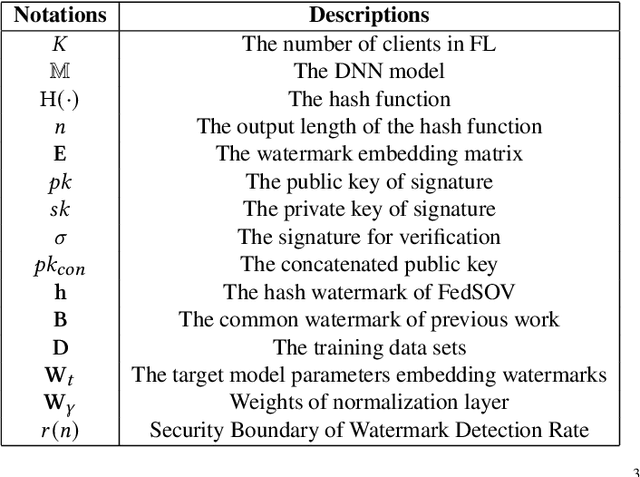

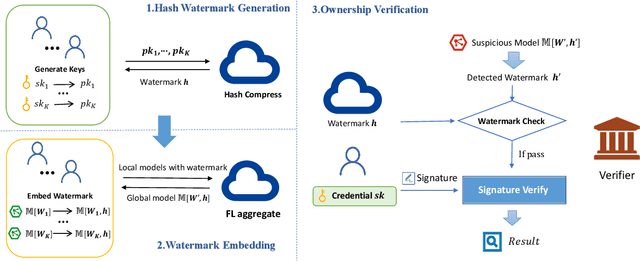

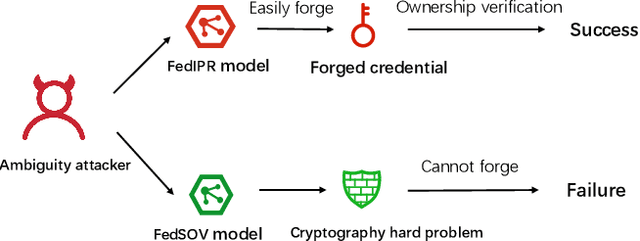

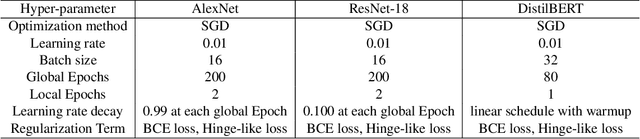

Abstract: Federated learning allows multiple parties to collaborate in learning a global model without revealing private data. The high cost of training and the significant value of the global model necessitate ownership verification in federated learning. However, existing ownership verification schemes in federated learning suffer from several limitations, such as inadequate support for a large number of clients and vulnerability to ambiguity attacks. To address these limitations, we propose a cryptographic signature-based federated learning model ownership verification scheme named FedSOV. FedSOV allows numerous clients to embed their ownership credentials and verify ownership using unforgeable digital signatures. The scheme provides theoretical resistance to ambiguity attacks through the unforgeability of the signature. Experimental results on computer vision and natural language processing tasks demonstrate that FedSOV is an effective federated model ownership verification scheme enhanced with provable cryptographic security.

FedZKP: Federated Model Ownership Verification with Zero-knowledge Proof

May 10, 2023

Abstract: Federated learning (FL) allows multiple parties to cooperatively learn a federated model without sharing private data with each other. The need to protect such federated models from being plagiarized or misused therefore motivates us to propose a provably secure model ownership verification scheme using zero-knowledge proof, named FedZKP. We show that FedZKP, which does not disclose credentials, is guaranteed to defeat a variety of existing and potential attacks. Both theoretical analysis and empirical studies demonstrate the security of FedZKP in the sense that the probability for attackers to breach the proposed FedZKP is negligible. Moreover, extensive experimental results confirm the fidelity and robustness of our scheme.
