Giulio Corsi

Considerations Influencing Offense-Defense Dynamics From Artificial Intelligence

Dec 05, 2024

Abstract: The rapid advancement of artificial intelligence (AI) technologies presents profound challenges to societal safety. As AI systems become more capable, accessible, and integrated into critical services, the dual nature of their potential is increasingly clear. While AI can enhance defensive capabilities in areas like threat detection, risk assessment, and automated security operations, it also presents avenues for malicious exploitation and large-scale societal harm, for example through automated influence operations and cyber attacks. Understanding the dynamics that shape AI's capacity to both cause harm and enhance protective measures is essential for informed decision-making regarding the deployment, use, and integration of advanced AI systems. This paper builds on recent work on offense-defense dynamics within the realm of AI, proposing a taxonomy to map and examine the key factors that influence whether AI systems predominantly pose threats or offer protective benefits to society. By establishing a shared terminology and conceptual foundation for analyzing these interactions, this work seeks to facilitate further research and discourse in this critical area.

Auditing Google's Search Algorithm: Measuring News Diversity Across Brazil, the UK, and the US

Oct 31, 2024

Abstract: This study examines the influence of Google's search algorithm on news diversity by analyzing search results in Brazil, the UK, and the US. It explores how Google's system favors a limited number of news outlets. Utilizing algorithm auditing techniques, the research measures source concentration with the Herfindahl-Hirschman Index (HHI) and Gini coefficient, revealing significant concentration trends. The study underscores the importance of conducting horizontal analyses across multiple search queries, as focusing solely on individual results pages may obscure these patterns. Factors such as popularity, political bias, and recency were evaluated for their impact on news rankings. Findings indicate a slight leftward bias in search outcomes and a preference for popular, often national outlets. This bias, combined with a tendency to prioritize recent content, suggests that Google's algorithm may reinforce existing media inequalities. By analyzing the largest dataset to date (221,863 search results), this research provides comprehensive, longitudinal insights into how algorithms shape public access to diverse news sources.
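The two concentration measures named in the abstract can be illustrated on a tally of how often each outlet appears across pooled search results. This is a minimal sketch under stated assumptions, not the study's own pipeline: the helper names `hhi` and `gini` and the example outlet labels are hypothetical, shares are expressed as fractions (so HHI lies between 1/n and 1 rather than on the 0 to 10,000 scale used in antitrust practice), and the Gini coefficient is computed only over outlets that appear at least once.

```python
from collections import Counter


def hhi(counts):
    """Herfindahl-Hirschman Index: sum of squared shares, with shares in [0, 1].

    Hypothetical helper; 1.0 means a single outlet holds every result.
    """
    total = sum(counts)
    if total <= 0:
        raise ValueError("counts must contain at least one positive value")
    return sum((c / total) ** 2 for c in counts)


def gini(counts):
    """Gini coefficient of the count distribution (0 = perfectly even).

    Hypothetical helper using the sorted-values formula:
    G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n, with i = 1..n.
    """
    xs = sorted(counts)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total <= 0:
        raise ValueError("counts must contain at least one positive value")
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return (2 * weighted) / (n * total) - (n + 1) / n


if __name__ == "__main__":
    # Hypothetical outlet labels pooled across several results pages
    # (a "horizontal" view rather than a single page).
    results = ["outlet_a", "outlet_a", "outlet_b", "outlet_c"]
    counts = list(Counter(results).values())
    print(f"HHI:  {hhi(counts):.3f}")
    print(f"Gini: {gini(counts):.3f}")
```

Pooling counts across many queries before computing either index mirrors the abstract's point that per-page analysis can hide concentration: a single page may look diverse while the same few outlets recur across queries.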

Artificial Intelligence in Brazilian News: A Mixed-Methods Analysis

Oct 22, 2024

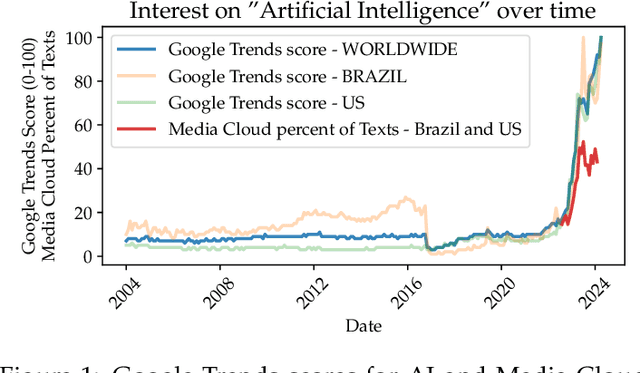

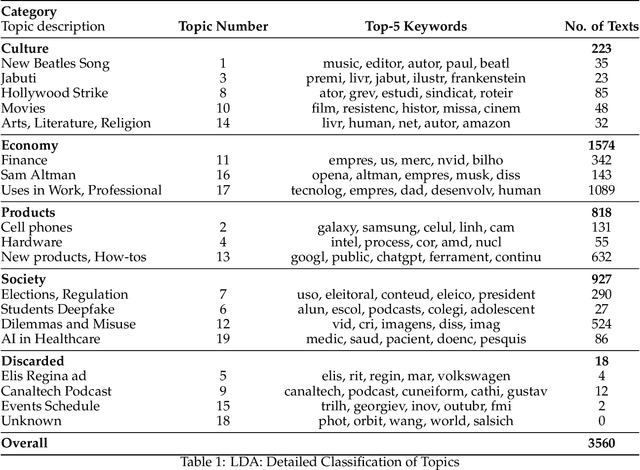

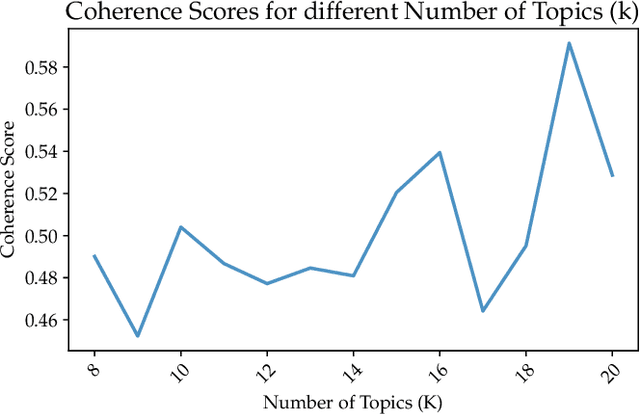

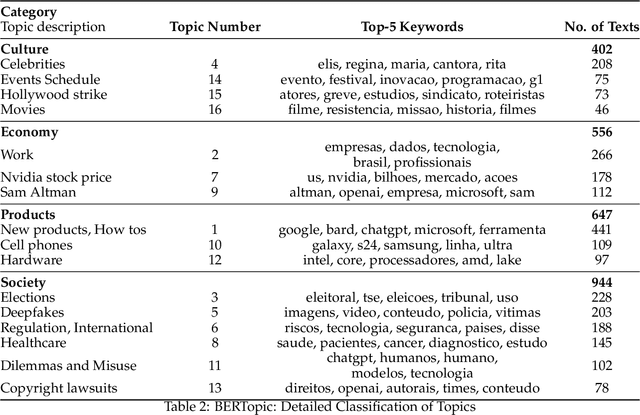

Abstract: The current surge in Artificial Intelligence (AI) interest, reflected in heightened media coverage since 2009, has sparked significant debate on AI's implications for privacy, social justice, workers' rights, and democracy. The media plays a crucial role in shaping public perception and acceptance of AI technologies. However, research into how AI appears in media has primarily focused on anglophone contexts, leaving a gap in understanding how AI is represented globally. This study addresses this gap by analyzing 3,560 news articles from Brazilian media published between July 1, 2023, and February 29, 2024, from 13 popular online news outlets. Using Computational Grounded Theory (CGT), the study applies Latent Dirichlet Allocation (LDA), BERTopic, and Named-Entity Recognition to investigate the main topics in AI coverage and the entities represented. The findings reveal that Brazilian news coverage of AI is dominated by topics related to applications in the workplace and product launches, with limited space for societal concerns, which mostly focus on deepfakes and electoral integrity. The analysis also highlights a significant presence of industry-related entities, indicating a strong influence of corporate agendas in the country's news. This study underscores the need for a more critical and nuanced discussion of AI's societal impacts in Brazilian media.

Foundational Challenges in Assuring Alignment and Safety of Large Language Models

Apr 15, 2024

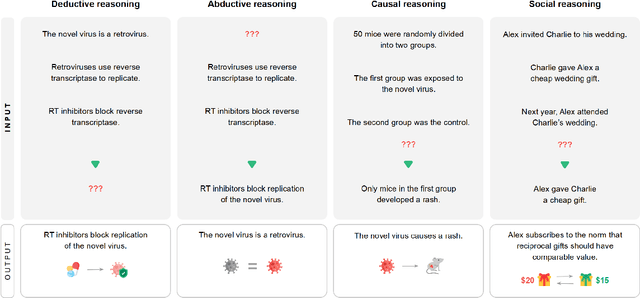

Abstract: This work identifies 18 foundational challenges in assuring the alignment and safety of large language models (LLMs). These challenges are organized into three categories: scientific understanding of LLMs, development and deployment methods, and sociotechnical challenges. Based on the identified challenges, we pose 200+ concrete research questions.