Richard Mallah

The Singapore Consensus on Global AI Safety Research Priorities

Jun 25, 2025

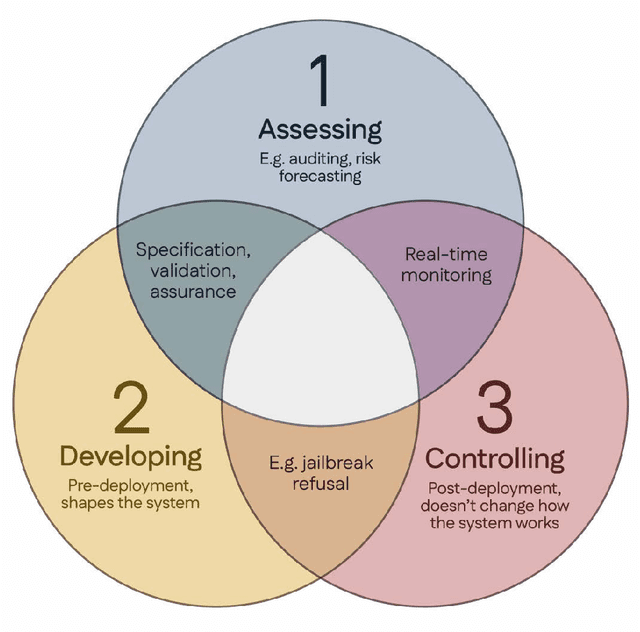

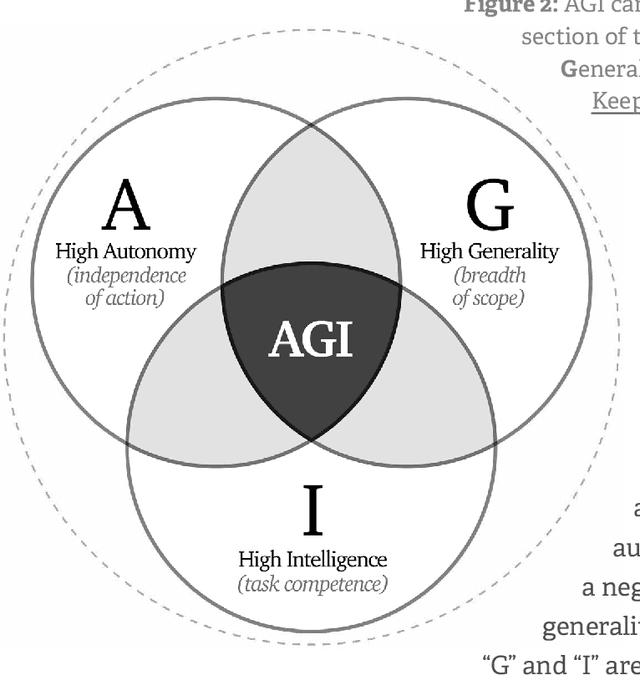

Abstract: Rapidly improving AI capabilities and autonomy hold significant promise of transformation, but are also driving vigorous debate on how to ensure that AI is safe, i.e., trustworthy, reliable, and secure. Building a trusted ecosystem is therefore essential: it helps people embrace AI with confidence and gives maximal space for innovation while avoiding backlash. The "2025 Singapore Conference on AI (SCAI): International Scientific Exchange on AI Safety" aimed to support research in this space by bringing together AI scientists across geographies to identify and synthesise research priorities in AI safety. The resulting report builds on the International AI Safety Report chaired by Yoshua Bengio and backed by 33 governments. Adopting a defence-in-depth model, this report organises AI safety research domains into three types: challenges with creating trustworthy AI systems (Development), challenges with evaluating their risks (Assessment), and challenges with monitoring and intervening after deployment (Control).

Adapting Probabilistic Risk Assessment for AI

Apr 25, 2025

Abstract: Modern general-purpose artificial intelligence (AI) systems present an urgent risk management challenge, as their rapidly evolving capabilities and potential for catastrophic harm outpace our ability to reliably assess their risks. Current methods often rely on selective testing and undocumented assumptions about risk priorities, frequently failing to make a serious attempt at assessing the set of pathways through which AI systems pose direct or indirect risks to society and the biosphere. This paper introduces the probabilistic risk assessment (PRA) for AI framework, adapting established PRA techniques from high-reliability industries (e.g., nuclear power, aerospace) to the new challenges of advanced AI. The framework guides assessors in identifying potential risks, estimating likelihood and severity, and explicitly documenting evidence, underlying assumptions, and analyses at appropriate granularities. The framework's implementation tool synthesizes the results into a risk report card with aggregated risk estimates from all assessed risks. This systematic approach integrates three advances: (1) Aspect-oriented hazard analysis provides systematic hazard coverage guided by a first-principles taxonomy of AI system aspects (e.g., capabilities, domain knowledge, affordances); (2) Risk pathway modeling analyzes causal chains from system aspects to societal impacts using bidirectional analysis and incorporating prospective techniques; and (3) Uncertainty management employs scenario decomposition, reference scales, and explicit tracing protocols to structure credible projections under novelty or limited data. Additionally, the framework harmonizes diverse assessment methods by integrating evidence into comparable, quantified absolute risk estimates for critical decisions. We have implemented this as a workbook tool for AI developers, evaluators, and regulators, available on the project website.
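The abstract does not spell out how the workbook combines individual estimates into its report card. As a generic illustration only, the following sketch assumes each assessed risk has been reduced to a likelihood (a probability over the assessment period) and a severity on a shared reference scale, and aggregates them in the textbook PRA way: expected harm as likelihood times severity, plus the probability that at least one risk materializes under an independence assumption. The names `RiskEstimate` and `report_card` are hypothetical and are not taken from the framework's tool.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class RiskEstimate:
    """One assessed risk pathway (hypothetical structure, not the workbook's schema)."""
    name: str
    likelihood: float  # assumed: probability the harm occurs within the assessment period
    severity: float    # assumed: harm magnitude on a shared reference scale (arbitrary units)

    def expected_harm(self) -> float:
        # Classic PRA point estimate: likelihood times consequence.
        return self.likelihood * self.severity


def report_card(risks: list[RiskEstimate]) -> dict:
    """Aggregate individual estimates into a simple summary (illustrative only)."""
    for r in risks:
        if not 0.0 <= r.likelihood <= 1.0:
            raise ValueError(f"likelihood out of range for {r.name}")
    # Probability that at least one risk materializes, ASSUMING independence.
    p_any = 1.0 - prod(1.0 - r.likelihood for r in risks)
    # Total expected harm is additive regardless of independence.
    total = sum(r.expected_harm() for r in risks)
    # Rank pathways by expected harm, largest first.
    ranked = sorted(risks, key=lambda r: r.expected_harm(), reverse=True)
    return {
        "p_any": p_any,
        "total_expected_harm": total,
        "ranking": [(r.name, r.expected_harm()) for r in ranked],
    }


if __name__ == "__main__":
    card = report_card([
        RiskEstimate("misuse", 0.1, 100.0),
        RiskEstimate("accident", 0.05, 400.0),
    ])
    print(card)
```

With these made-up inputs, the lower-likelihood but more severe "accident" pathway ranks first by expected harm. The independence assumption behind `p_any` is a simplification; correlated pathways would require the kind of explicit scenario decomposition the abstract describes.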

Considerations Influencing Offense-Defense Dynamics From Artificial Intelligence

Dec 05, 2024

Abstract: The rapid advancement of artificial intelligence (AI) technologies presents profound challenges to societal safety. As AI systems become more capable, accessible, and integrated into critical services, the dual nature of their potential is increasingly clear. While AI can enhance defensive capabilities in areas like threat detection, risk assessment, and automated security operations, it also presents avenues for malicious exploitation and large-scale societal harm, for example through automated influence operations and cyber attacks. Understanding the dynamics that shape AI's capacity both to cause harm and to enhance protective measures is essential for informed decision-making regarding the deployment, use, and integration of advanced AI systems. This paper builds on recent work on offense-defense dynamics within the realm of AI, proposing a taxonomy to map and examine the key factors that influence whether AI systems predominantly pose threats or offer protective benefits to society. By establishing a shared terminology and conceptual foundation for analyzing these interactions, this work seeks to facilitate further research and discourse in this critical area.
