Gaofeng Nie

Channel Estimation and Beamforming Design for MF-RIS-Aided Communication Systems

Jan 18, 2025

Abstract: In this letter, we study the beamforming design for channel estimation in multi-user communications aided by a multi-functional reconfigurable intelligent surface (MF-RIS) that supports simultaneous signal reflection, refraction, and amplification. A least squares (LS) based channel estimator is proposed for the MF-RIS by considering both the coupled MF-RIS beams and the introduced thermal noise. Using the discrete Fourier transform (DFT) matrix, the MF-RIS beamforming design problem is simplified under the proposed LS channel estimator. The optimal MF-RIS beamforming design that achieves the Cramér-Rao lower bound (CRLB) of the channel estimator is obtained with the proposed alternating optimization algorithm. Simulation results demonstrate the effectiveness of the proposed beamforming design in reducing the impact of thermal noise.
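
As a rough illustration of why a DFT training matrix simplifies LS estimation, the sketch below uses a toy single-link model `y = Phi h + n`, where `Phi` is an N x N DFT matrix of training beam patterns. This model, the function names, and the dimensions are assumptions made for illustration only; the letter's estimator additionally accounts for the coupled reflection/refraction beams and the amplifier thermal noise, which are not modeled here.

```python
import numpy as np

def dft_matrix(n):
    """Return the n x n DFT matrix with entries exp(-2j*pi*k*l/n)."""
    k = np.arange(n)
    return np.exp(-2j * np.pi * np.outer(k, k) / n)

def ls_estimate(phi, y):
    """Generic least squares estimate of h from y = phi @ h + noise."""
    h_hat, *_ = np.linalg.lstsq(phi, y, rcond=None)
    return h_hat

def ls_estimate_dft(phi, y):
    """LS estimate when phi is a DFT matrix: phi^H phi = n I, so no inverse is needed."""
    n = phi.shape[1]
    return phi.conj().T @ y / n

# Toy example (hypothetical sizes): 8 RIS elements, 8 training slots.
rng = np.random.default_rng(0)
n = 8
phi = dft_matrix(n)
h = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)
y_clean = phi @ h  # noise-free observation, for checking only

h_hat = ls_estimate(phi, y_clean)
h_hat_fast = ls_estimate_dft(phi, y_clean)
```

Because the columns of the DFT matrix are orthogonal with equal norm, the LS solution reduces to a scaled matched filter, which is the kind of simplification the abstract alludes to.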

Digital Twin Channel for 6G: Concepts, Architectures and Potential Applications

Mar 31, 2024

Abstract: A digital twin channel (DTC) is the real-time mapping of a wireless channel from the physical world to the digital world, and it is expected to provide significant performance enhancements for the sixth-generation (6G) air-interface design. In this work, we first define five evolution levels of channel twins that follow the progression of wireless communication. The fifth level, autonomous DTC, is elaborated with multi-dimensional factors such as methodology, characterization precision, and data category. Then, we provide detailed insights into the requirements and architecture of a complete DTC for 6G. Subsequently, a sensing-enhanced real-time channel prediction platform and experimental validations are presented. Finally, drawing from the vision of the 6G network, we explore the potential applications and the open issues in future DTC research.

Convergence Analysis and Latency Minimization for Semi-Federated Learning in Massive IoT Networks

Oct 04, 2023

Abstract: As the number of sensors in Internet of Things (IoT) networks becomes massive, the amount of data they generate grows enormous. To process data in real time while protecting user privacy, federated learning (FL) has been regarded as an enabling technique to push edge intelligence into IoT networks with massive numbers of devices. However, FL latency increases dramatically due to the growing number of parameters in deep neural networks and the limited computation and communication capabilities of IoT devices. To address this issue, we propose a semi-federated learning (SemiFL) paradigm in which network pruning and over-the-air computation are efficiently applied. Specifically, each small base station collects the raw data from its served sensors and trains its local pruned model. After that, the global aggregation of local gradients is achieved through over-the-air computation. We first analyze the performance of the proposed SemiFL by deriving its convergence upper bound. To reduce latency, a convergence-constrained SemiFL latency minimization problem is formulated. By decoupling the original problem into several sub-problems, iterative algorithms are designed to solve them efficiently. Finally, numerical simulations are conducted to verify the effectiveness of our proposed scheme in reducing latency while guaranteeing identification accuracy.

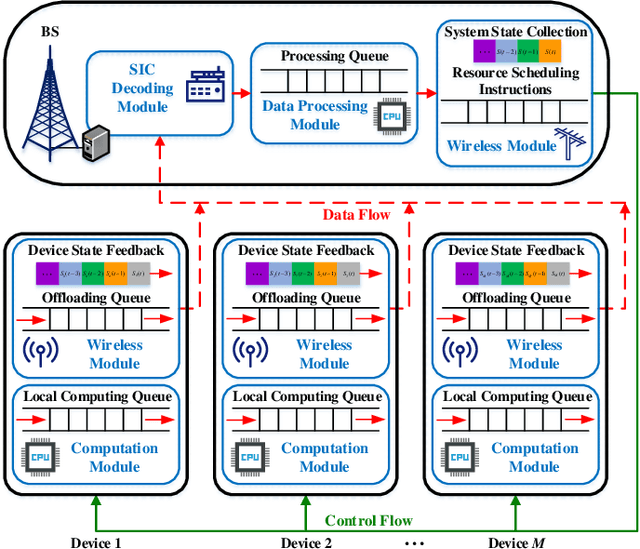

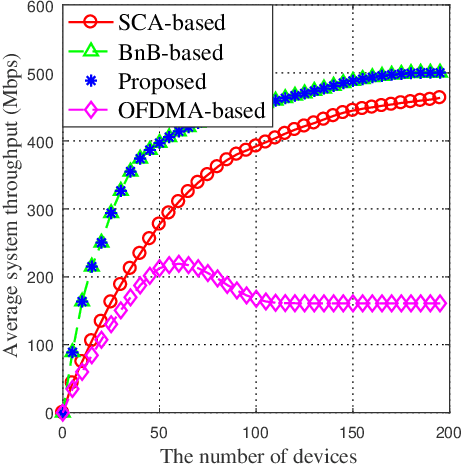

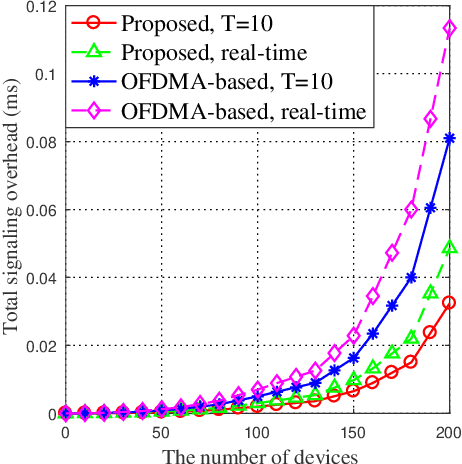

Online Offloading Scheduling for NOMA-Aided MEC Under Partial Device Knowledge

Jun 30, 2021

Abstract: By exploiting the superiority of non-orthogonal multiple access (NOMA), NOMA-aided mobile edge computing (MEC) can provide scalable and low-latency computing services for the Internet of Things. However, given the prevalent stochasticity of wireless networks and the sophisticated signal processing of NOMA, it is critical but challenging to design an efficient task offloading algorithm for NOMA-aided MEC, especially with a large number of devices. This paper presents an online algorithm that jointly optimizes offloading decisions and resource allocation to maximize the long-term system utility (i.e., a measure of throughput and fairness). Since the optimization variables are temporally coupled, we first apply the Lyapunov technique to decouple the long-term stochastic optimization into a series of per-slot deterministic subproblems, which does not require any prior knowledge of network dynamics. Second, we propose to transform the non-convex per-slot subproblem of optimizing NOMA power allocation equivalently into a convex form by introducing a set of auxiliary variables, whereby the time complexity is reduced from exponential to $\mathcal{O}(M^{3/2})$. The proposed algorithm is proved to be asymptotically optimal, even under partial knowledge of the device states at the base station. Simulation results validate the superiority of the proposed algorithm in terms of system utility, stability improvement, and overhead reduction.
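
The idea of turning a long-term stochastic problem into per-slot deterministic decisions can be sketched with a generic drift-plus-penalty rule. Everything below is a hypothetical toy, not the paper's algorithm: a single device with a task backlog `q`, a discrete set of transmit powers, a Shannon-type service rate `log2(1 + p * gain)`, and a trade-off weight `v` are all assumptions introduced for illustration.

```python
import math

def queue_update(q, served, arrived):
    """Backlog recursion Q(t+1) = max(Q(t) - served, 0) + arrived."""
    return max(q - served, 0.0) + arrived

def per_slot_decision(q, gain, power_levels, v):
    """Pick the power that minimizes the per-slot drift-plus-penalty term.

    The penalty is the power cost weighted by v; the drift term rewards
    serving the backlog, weighted by the current queue length q.
    Only the current state (q, gain) is used, no channel statistics.
    """
    def score(p):
        return v * p - q * math.log2(1.0 + p * gain)
    return min(power_levels, key=score)

# Hypothetical power levels and weight.
levels = [0.0, 0.5, 1.0, 2.0]
p_idle = per_slot_decision(q=0.0, gain=1.0, power_levels=levels, v=1.0)
p_busy = per_slot_decision(q=100.0, gain=1.0, power_levels=levels, v=1.0)
q_next = queue_update(q=3.0, served=5.0, arrived=2.0)
```

With an empty backlog the rule spends no power, while a long backlog pushes it toward the highest power level; a larger `v` shifts the balance toward lower cost at the price of longer queues.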

Research on Resource Allocation for Efficient Federated Learning

Apr 19, 2021

Abstract: As a promising solution for achieving efficient learning among isolated data owners while addressing data privacy issues, federated learning is receiving wide attention. An edge server acting as an intermediary can effectively collect sensor data, perform local model training, and upload model parameters for global aggregation. This paper therefore proposes a new framework for resource allocation in a hierarchical network supported by edge computing. In this framework, we minimize the weighted sum of system cost and learning cost by optimizing bandwidth, computing frequency, power allocation, and subcarrier assignment. To solve this challenging mixed-integer non-linear problem, we first decouple the bandwidth optimization problem (P1) from the whole problem and obtain a closed-form solution. The remaining joint optimization problem over computing frequency, power, and subcarriers (P2) can be further decomposed into two sub-problems: the latency and computing frequency optimization problem (P3) and the transmission power and subcarrier optimization problem (P4). P3 is a convex optimization problem that is easy to solve. In P4, the optimal power under each subcarrier selection can be obtained first through the successive convex approximation (SCA) algorithm. After substituting the optimal power values back into P4, the subproblem can be regarded as an assignment problem, so the Hungarian algorithm can be used to solve it effectively. Problem P2 is solved by iterating between P3 and P4. To verify the performance of the algorithm, we compare the proposed algorithm with five baselines, namely equal bandwidth allocation, learning cost guaranteed, greedy subcarrier allocation, system cost guaranteed, and a time-biased algorithm. Numerical results show the significant performance gain and the robustness of the proposed algorithm under parameter changes.
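
The final assignment step can be illustrated with SciPy's `linear_sum_assignment`, which solves the same linear assignment problem that the Hungarian algorithm addresses. The cost matrix below is made up for illustration: entry `cost[i][j]` stands for a hypothetical cost of giving subcarrier `j` to device `i` once the power for that pairing has been fixed. It is not taken from the paper.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

# Hypothetical per-pair costs (rows: devices, columns: subcarriers).
cost = np.array([
    [4.0, 1.0, 3.0],
    [2.0, 0.0, 5.0],
    [3.0, 2.0, 2.0],
])

# Solve the one-to-one assignment that minimizes the total cost.
rows, cols = linear_sum_assignment(cost)
assignment = {int(r): int(c) for r, c in zip(rows, cols)}
total_cost = float(cost[rows, cols].sum())
```

For this matrix the minimum total cost is 5, reached by assigning device 0 to subcarrier 1, device 1 to subcarrier 0, and device 2 to subcarrier 2.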
