Jianyang Ren

Convergence Analysis and Latency Minimization for Semi-Federated Learning in Massive IoT Networks

Oct 04, 2023

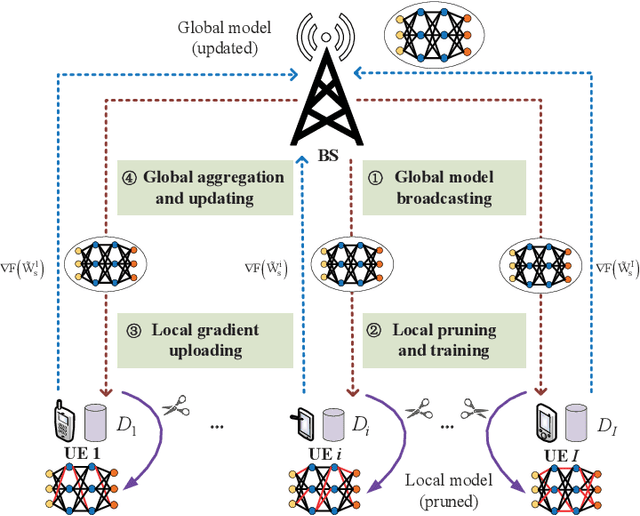

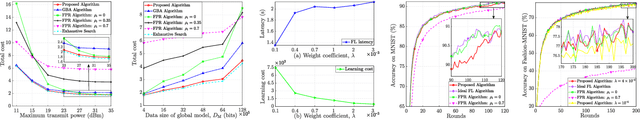

Abstract: As the number of sensors in Internet of Things (IoT) networks becomes massive, the amount of data they generate grows enormous. To process data in real time while protecting user privacy, federated learning (FL) has been regarded as an enabling technique for pushing edge intelligence into IoT networks with massive numbers of devices. However, FL latency increases dramatically because of the growing number of parameters in deep neural networks and the limited computation and communication capabilities of IoT devices. To address this issue, we propose a semi-federated learning (SemiFL) paradigm in which network pruning and over-the-air computation are efficiently applied. Specifically, each small base station collects the raw data from the sensors it serves and trains its local pruned model. The local gradients are then aggregated globally through over-the-air computation. We first analyze the performance of the proposed SemiFL by deriving an upper bound on its convergence. To reduce latency, we then formulate a convergence-constrained SemiFL latency minimization problem. By decoupling the original problem into several sub-problems, we design iterative algorithms that solve them efficiently. Finally, numerical simulations verify the effectiveness of the proposed scheme in reducing latency while guaranteeing identification accuracy.
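The two building blocks named in this abstract, network pruning and over-the-air aggregation, can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the magnitude-based pruning rule, the idealized channel (perfectly aligned signals, a single additive Gaussian noise term) and the `noise_std` parameter are all assumptions made for illustration.

```python
import random


def prune_by_magnitude(weights, pruning_rate):
    """Zero out the smallest-magnitude fraction of weights (assumed pruning rule)."""
    num_pruned = int(len(weights) * pruning_rate)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_pruned]:
        pruned[i] = 0.0
    return pruned


def over_the_air_aggregate(local_gradients, noise_std=0.0, rng=None):
    """Average gradients as an idealized over-the-air channel would.

    All stations transmit at once, so the receiver observes the sum of the
    signals plus noise; dividing by the number of stations gives the average.
    """
    rng = rng or random.Random(0)
    num_stations = len(local_gradients)
    dim = len(local_gradients[0])
    aggregated = []
    for k in range(dim):
        superposed = sum(g[k] for g in local_gradients)  # signals add in the air
        received = superposed + rng.gauss(0.0, noise_std)  # receiver noise
        aggregated.append(received / num_stations)
    return aggregated


if __name__ == "__main__":
    print(prune_by_magnitude([0.5, -0.1, 0.05, -2.0], pruning_rate=0.5))
    print(over_the_air_aggregate([[1.0, 2.0], [3.0, 4.0]], noise_std=0.0))
```

With `noise_std=0.0` the aggregate reduces to the exact average of the local gradients; a positive value shows how channel noise perturbs the global update.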

Towards Communication-Learning Trade-off for Federated Learning at the Network Edge

May 27, 2022

Abstract: In this letter, we study a wireless federated learning (FL) system in which network pruning is applied to local users with limited resources. Although pruning helps reduce FL latency, it also degrades learning performance because of the information loss, so a trade-off between communication and learning arises. To address this challenge, we quantify the effects of network pruning and packet error on learning performance by deriving the convergence rate of FL with a non-convex loss function. Closed-form solutions for pruning control and bandwidth allocation are then proposed to minimize the weighted sum of FL latency and FL performance. Finally, numerical results demonstrate that 1) the proposed solution outperforms benchmarks in terms of cost reduction and accuracy guarantee, and 2) a higher pruning rate brings less communication overhead but also worsens FL accuracy, which is consistent with our theoretical analysis.

* This paper has been accepted by IEEE Communications Letters

Research on Resource Allocation for Efficient Federated Learning

Apr 19, 2021

Abstract: As a promising solution for achieving efficient learning among isolated data owners and for addressing data privacy issues, federated learning is receiving wide attention. Using an edge server as an intermediary makes it possible to collect sensor data, perform local model training, and upload model parameters for global aggregation. This paper therefore proposes a new framework for resource allocation in a hierarchical network supported by edge computing. In this framework, we minimize the weighted sum of system cost and learning cost by optimizing bandwidth, computing frequency, power allocation, and subcarrier assignment. To solve this challenging mixed-integer non-linear problem, we first decouple the bandwidth optimization problem (P1) from the whole problem and obtain a closed-form solution. The remaining joint optimization problem over computing frequency, power, and subcarriers (P2) is further decomposed into two sub-problems: the latency and computing frequency optimization problem (P3) and the transmission power and subcarrier optimization problem (P4). P3 is a convex optimization problem that is easy to solve. For P4, the optimal power under each subcarrier selection is first obtained through the successive convex approximation (SCA) algorithm. Substituting the resulting optimal power values back into P4 turns the sub-problem into an assignment problem, which the Hungarian algorithm solves efficiently. P2 is then solved by iterating between P3 and P4. To verify its performance, we compare the proposed algorithm with five baselines: equal bandwidth allocation, learning cost guaranteed, greedy subcarrier allocation, system cost guaranteed, and a time-biased algorithm. Numerical results show a significant performance gain and demonstrate the robustness of the proposed algorithm to parameter changes.
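The assignment step described in this abstract can be illustrated with a small sketch. Once the power for every device and subcarrier pair is fixed, each pair has a known cost, and the task is to give each device a distinct subcarrier at minimum total cost. The sketch below uses exhaustive search as a stand-in for the Hungarian algorithm: it returns the same optimum on small instances but runs in factorial time, whereas the Hungarian algorithm is polynomial. The function name and the cost matrix are hypothetical and are not taken from the paper.

```python
from itertools import permutations


def best_assignment(cost):
    """Return (assignment, total_cost) minimizing the summed cost.

    cost[i][j] is the (hypothetical) cost of giving subcarrier j to device i.
    assignment[i] is the subcarrier index chosen for device i.
    Exhaustive search: only suitable for small square matrices.
    """
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total


if __name__ == "__main__":
    # Illustrative 3-device, 3-subcarrier cost matrix (made-up values).
    example_cost = [
        [4, 1, 3],
        [2, 0, 5],
        [3, 2, 2],
    ]
    print(best_assignment(example_cost))
```

For realistic problem sizes, a polynomial-time solver such as SciPy's `scipy.optimize.linear_sum_assignment` would replace the exhaustive loop.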
