Francisco S. Melo

Entropic Risk-Aware Monte Carlo Tree Search

Jan 25, 2026

Abstract:We propose a provably correct Monte Carlo tree search (MCTS) algorithm for solving \textit{risk-aware} Markov decision processes (MDPs) with \textit{entropic risk measure} (ERM) objectives. We provide a \textit{non-asymptotic} analysis of our proposed algorithm, showing that the algorithm: (i) is \textit{correct} in the sense that the empirical ERM obtained at the root node converges to the optimal ERM; and (ii) enjoys \textit{polynomial regret concentration}. Our algorithm successfully exploits the dynamic programming formulations for solving risk-aware MDPs with ERM objectives introduced by previous works in the context of an upper confidence bound-based tree search algorithm. Finally, we provide a set of illustrative experiments comparing our risk-aware MCTS method against relevant baselines.
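To make the objective in this abstract concrete, the following is a minimal sketch of how an empirical ERM could be computed from sampled returns. It assumes the common risk-averse convention ERM_β(X) = -(1/β) log E[exp(-βX)] with β > 0; the abstract does not state which sign convention the paper uses, and the function name `entropic_risk` is hypothetical. It is not the paper's tree search algorithm.

```python
import math


def entropic_risk(returns, beta):
    """Empirical entropic risk measure of a list of sampled returns.

    Assumed convention: ERM_beta(X) = -(1/beta) * log(mean(exp(-beta * x))).
    beta == 0 is treated as the risk-neutral limit (the plain mean).
    """
    if beta == 0:
        return sum(returns) / len(returns)
    # Log-mean-exp with the maximum subtracted, for numerical stability.
    scaled = [-beta * r for r in returns]
    m = max(scaled)
    log_mean_exp = m + math.log(sum(math.exp(s - m) for s in scaled) / len(scaled))
    return -log_mean_exp / beta
```

Under this convention, a larger β weights low returns more heavily, so the ERM of a spread-out sample falls below its mean, while a constant sample is left unchanged.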

Solving General-Utility Markov Decision Processes in the Single-Trial Regime with Online Planning

May 21, 2025

Abstract:In this work, we contribute the first approach to solve infinite-horizon discounted general-utility Markov decision processes (GUMDPs) in the single-trial regime, i.e., when the agent's performance is evaluated based on a single trajectory. First, we provide some fundamental results regarding policy optimization in the single-trial regime, investigating which class of policies suffices for optimality, casting our problem as a particular MDP that is equivalent to our original problem, as well as studying the computational hardness of policy optimization in the single-trial regime. Second, we show how we can leverage online planning techniques, in particular a Monte-Carlo tree search algorithm, to solve GUMDPs in the single-trial regime. Third, we provide experimental results showcasing the superior performance of our approach in comparison to relevant baselines.

Implicit Repair with Reinforcement Learning in Emergent Communication

Feb 18, 2025

Abstract:Conversational repair is a mechanism used to detect and resolve miscommunication and misinformation problems when two or more agents interact. One particular and underexplored form of repair in emergent communication is the implicit repair mechanism, where the interlocutor purposely conveys the desired information in such a way as to prevent misinformation from any other interlocutor. This work explores how redundancy can modify the emergent communication protocol to continue conveying the necessary information to complete the underlying task, even with additional external environmental pressures such as noise. We focus on extending the signaling game, called the Lewis Game, by adding noise in the communication channel and inputs received by the agents. Our analysis shows that agents add redundancy to the transmitted messages to prevent the negative impact of noise on task success. We also observe that the emerging communication protocol's generalization capabilities remain equivalent to those of architectures employed in simpler, entirely deterministic games. Moreover, our method is the only one suitable for producing robust communication protocols that can handle cases with and without noise while maintaining increased generalization performance levels.

Distributed Value Decomposition Networks with Networked Agents

Feb 11, 2025

Abstract:We investigate the problem of distributed training under partial observability, whereby cooperative multi-agent reinforcement learning (MARL) agents maximize the expected cumulative joint reward. We propose distributed value decomposition networks (DVDN) that generate a joint Q-function that factorizes into agent-wise Q-functions. Whereas the original value decomposition networks rely on centralized training, our approach is suitable for domains where centralized training is not possible and agents must learn by interacting with the physical environment in a decentralized manner while communicating with their peers. DVDN overcomes the need for centralized training by locally estimating the shared objective. We contribute two innovative algorithms, DVDN and DVDN (GT), for the heterogeneous and homogeneous agents settings respectively. Empirically, both algorithms approximate the performance of value decomposition networks, in spite of the information loss during communication, as demonstrated in ten MARL tasks in three standard environments.
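The factorization mentioned in this abstract can be illustrated with a small sketch. It assumes the additive form used by the original value decomposition networks, where the joint Q-value is the sum of agent-wise Q-values; tabular per-agent values stand in for the networks, and the function names are hypothetical. It does not reproduce DVDN's distributed training.

```python
def joint_q(agent_qs, joint_action):
    """Joint Q-value as the sum of agent-wise Q-values (additive factorization).

    agent_qs[i][a] is agent i's Q-value for its own action a,
    given that agent's local observation.
    """
    return sum(q[a] for q, a in zip(agent_qs, joint_action))


def greedy_joint_action(agent_qs):
    """Each agent maximizes its own Q-values independently.

    With an additive factorization, the per-agent argmax also
    maximizes the joint Q-value.
    """
    return tuple(max(range(len(q)), key=q.__getitem__) for q in agent_qs)
```

For example, with `agent_qs = [[1.0, 3.0], [2.0, 0.5, 1.0]]`, the greedy joint action selects action 1 for the first agent and action 0 for the second, and no search over the full joint action space is needed.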

Networked Agents in the Dark: Team Value Learning under Partial Observability

Jan 15, 2025

Abstract:We propose a novel cooperative multi-agent reinforcement learning (MARL) approach for networked agents. In contrast to previous methods that rely on complete state information or joint observations, our agents must learn how to reach shared objectives under partial observability. During training, they collect individual rewards and approximate a team value function through local communication, resulting in cooperative behavior. To describe our problem, we introduce the networked dynamic partially observable Markov game framework, where agents communicate over a switching topology communication network. Our distributed method, DNA-MARL, uses a consensus mechanism for local communication and gradient descent for local computation. DNA-MARL increases the range of possible applications of networked agents, being well-suited for real-world domains that impose privacy constraints and where messages may not reach their recipients. We evaluate DNA-MARL across benchmark MARL scenarios. Our results highlight the superior performance of DNA-MARL over previous methods.
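As a rough illustration of what a consensus mechanism over local communication can look like, here is a minimal sketch of average consensus with Metropolis weights. The fixed undirected graph, the scalar values, and the function name `consensus_step` are assumptions made for the example; the abstract describes a switching topology and does not specify the update rule used by DNA-MARL.

```python
def consensus_step(values, neighbors):
    """One round of average consensus using Metropolis weights.

    values[i] is agent i's current local estimate (a scalar here).
    neighbors[i] lists the agents that agent i exchanges messages with;
    the graph is assumed undirected and fixed.
    """
    degree = [len(n) for n in neighbors]
    new_values = []
    for i, x_i in enumerate(values):
        update = x_i
        for j in neighbors[i]:
            # Symmetric weights preserve the network-wide average.
            weight = 1.0 / (1 + max(degree[i], degree[j]))
            update += weight * (values[j] - x_i)
        new_values.append(update)
    return new_values
```

On a connected graph, repeating this step drives every agent's estimate toward the average of the initial values, using only messages between neighbors.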

NeuralThink: Algorithm Synthesis that Extrapolates in General Tasks

Feb 23, 2024

Abstract:While machine learning methods excel at pattern recognition, they struggle with complex reasoning tasks in a scalable, algorithmic manner. Recent Deep Thinking methods show promise in learning algorithms that extrapolate: learning in smaller environments and executing the learned algorithm in larger environments. However, these works are limited to symmetrical tasks, where the input and output dimensionalities are the same. To address this gap, we propose NeuralThink, a new recurrent architecture that can consistently extrapolate to both symmetrical and asymmetrical tasks, where the dimensionalities of the input and output are different. We contribute a novel benchmark of asymmetrical tasks for extrapolation. We show that NeuralThink consistently outperforms the prior state-of-the-art Deep Thinking architectures with regard to stable extrapolation to large observations from smaller training sizes.

Making Friends in the Dark: Ad Hoc Teamwork Under Partial Observability

Sep 30, 2023

Abstract:This paper introduces a formal definition of the setting of ad hoc teamwork under partial observability and proposes a first-principled model-based approach which relies only on prior knowledge and partial observations of the environment in order to perform ad hoc teamwork. We make three distinct assumptions that set it apart from previous works, namely: i) the state of the environment is always partially observable, ii) the actions of the teammates are always unavailable to the ad hoc agent and iii) the ad hoc agent has no access to a reward signal which could be used to learn the task from scratch. Our results in 70 POMDPs from 11 domains show that our approach is not only effective in assisting unknown teammates in solving unknown tasks but is also robust in scaling to more challenging problems.

Multi-Bellman operator for convergence of $Q$-learning with linear function approximation

Sep 28, 2023

Abstract:We study the convergence of $Q$-learning with linear function approximation. Our key contribution is the introduction of a novel multi-Bellman operator that extends the traditional Bellman operator. By exploring the properties of this operator, we identify conditions under which the projected multi-Bellman operator becomes contractive, providing improved fixed-point guarantees compared to the Bellman operator. To leverage these insights, we propose the multi $Q$-learning algorithm with linear function approximation. We demonstrate that this algorithm converges to the fixed-point of the projected multi-Bellman operator, yielding solutions of arbitrary accuracy. Finally, we validate our approach by applying it to well-known environments, showcasing the effectiveness and applicability of our findings.
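For context, the sketch below shows the standard $Q$-learning update with linear function approximation, i.e. the baseline setting whose convergence this abstract studies. It is not the multi $Q$-learning algorithm, whose operator is not defined in the abstract; the feature vectors, step size, and function names are assumptions for illustration.

```python
def q_value(theta, phi):
    """Linear Q-value estimate: inner product of weights and features."""
    return sum(t * f for t, f in zip(theta, phi))


def q_learning_update(theta, phi_sa, reward, next_phis, alpha, gamma):
    """One standard Q-learning step with linear function approximation.

    phi_sa is the feature vector of the visited state-action pair.
    next_phis holds one feature vector per action in the next state;
    an empty list is treated as a terminal next state.
    """
    next_best = max((q_value(theta, p) for p in next_phis), default=0.0)
    td_error = reward + gamma * next_best - q_value(theta, phi_sa)
    # Semi-gradient step along the features of the visited pair.
    return [t + alpha * td_error * f for t, f in zip(theta, phi_sa)]
```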

Interactively Teaching an Inverse Reinforcement Learner with Limited Feedback

Sep 16, 2023

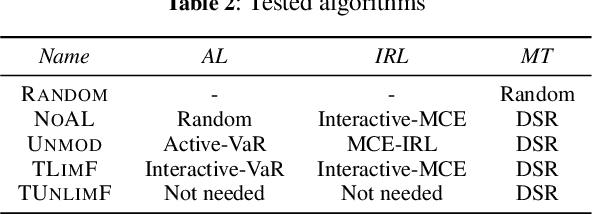

Abstract:We study the problem of teaching via demonstrations in sequential decision-making tasks. In particular, we focus on the situation when the teacher has no access to the learner's model and policy, and the feedback from the learner is limited to trajectories that start from states selected by the teacher. The necessity to select the starting states and infer the learner's policy creates an opportunity for using the methods of inverse reinforcement learning and active learning by the teacher. In this work, we formalize the teaching process with limited feedback and propose an algorithm that solves this teaching problem. The algorithm uses a modified version of the active value-at-risk method to select the starting states, a modified maximum causal entropy algorithm to infer the policy, and the difficulty score ratio method to choose the teaching demonstrations. We test the algorithm in a synthetic car driving environment and conclude that the proposed algorithm is an effective solution when the learner's feedback is limited.

Learning to Perceive in Deep Model-Free Reinforcement Learning

Jan 13, 2023

Abstract:This work proposes a novel model-free Reinforcement Learning (RL) agent that is able to learn how to complete an unknown task having access to only a part of the input observation. We take inspiration from the concepts of visual attention and active perception that are characteristic of humans and apply them to our agent, creating a hard attention mechanism. In this mechanism, the model first decides which region of the input image it should look at, and only then does it gain access to the pixels of that region. Current RL agents do not follow this principle and we have not seen these mechanisms applied to the same purpose as this work. In our architecture, we adapt an existing model called recurrent attention model (RAM) and combine it with the proximal policy optimization (PPO) algorithm. We investigate whether a model with these characteristics is capable of achieving similar performance to state-of-the-art model-free RL agents that access the full input observation. This analysis is made in two Atari games, Pong and SpaceInvaders, which have a discrete action space, and in CarRacing, which has a continuous action space. Besides assessing its performance, we also analyze the movement of the attention of our model and compare it with an example of human behavior. Even with such visual limitation, we show that our model matches the performance of PPO+LSTM in two of the three games tested.
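The "look first, then see" idea of hard attention can be sketched as a glimpse extraction step: the agent outputs a location, and only the pixels of that region are returned to it. The square window, the clamping at image borders, and the name `extract_glimpse` are assumptions for this example and are not taken from the paper's architecture.

```python
def extract_glimpse(image, center, size):
    """Return the size x size patch of `image` around `center`.

    image is a list of rows (lists of pixel values); center is (row, col).
    The window is shifted inward when it would cross the image border,
    and size is assumed not to exceed either image dimension.
    """
    rows, cols = len(image), len(image[0])
    half = size // 2
    top = min(max(center[0] - half, 0), rows - size)
    left = min(max(center[1] - half, 0), cols - size)
    return [row[left:left + size] for row in image[top:top + size]]
```

The agent's policy would then be conditioned on the returned patch rather than on the full observation.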
